Compressing a DeepSeek Model

Compress the model before you deploy it so that inference requires less video memory and runs faster. Compressing a third-party large model reduces its storage size, lowers power consumption, and increases computational speed.
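As a rough illustration of the memory saving, the sketch below estimates the memory needed to hold the weights alone at two precisions. It assumes the uncompressed model stores weights as 16-bit values, which this page does not state, and it ignores activations, cache, and the small overhead of quantization scales. The 7-billion parameter count and the function name `weight_memory_gib` are hypothetical and chosen only for the example.

```python
def weight_memory_gib(num_params: float, bytes_per_param: int) -> float:
    """Approximate memory needed to hold the weights alone, in GiB."""
    return num_params * bytes_per_param / 2**30


# Hypothetical 7-billion-parameter model, used only for illustration.
params = 7e9
fp16 = weight_memory_gib(params, 2)  # assumed 16-bit weights: 2 bytes each
int8 = weight_memory_gib(params, 1)  # INT8 weights: 1 byte each

print(f"FP16: {fp16:.1f} GiB, INT8: {int8:.1f} GiB")
# prints "FP16: 13.0 GiB, INT8: 6.5 GiB"
```

Under these assumptions, INT8 halves the weight footprint. The actual saving depends on the original precision of the model and on how the platform stores the compressed weights.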

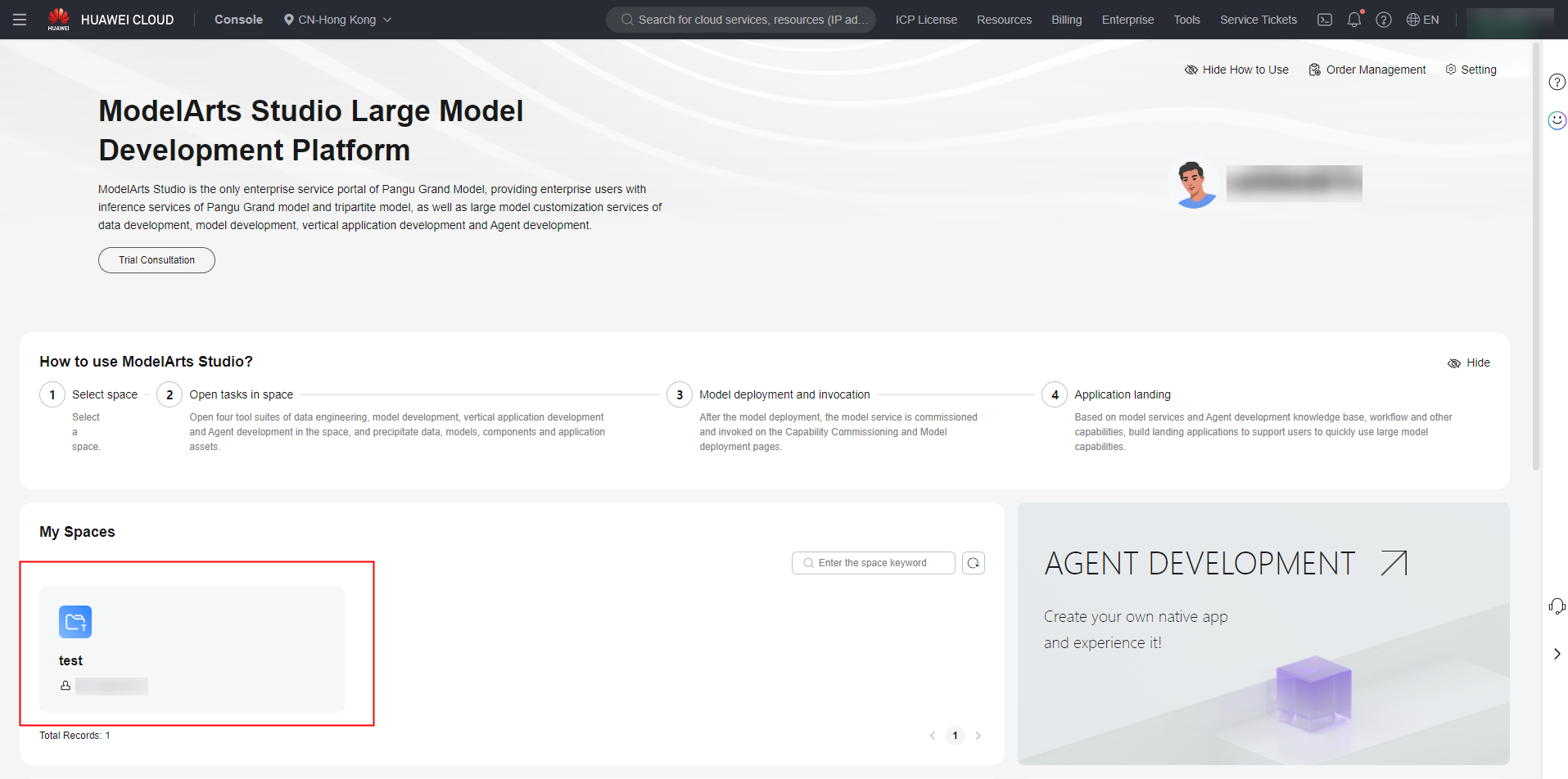

- Log in to ModelArts Studio. In the My Spaces area, click the required workspace.

Figure 1 My Spaces

- In the navigation pane, choose Model Development > Model Compression. Click Create in the upper right corner. Create a model compression task by referring to Table 1.

Table 1 Parameters for creating a model compression task

| Category | Parameter Name | Description |
| --- | --- | --- |
| Compression Configuration | Model Source | Select Third-party large model. |
| | Base Model | Select the model to be compressed. You can use a model from assets or jobs. |
| | Compression Policy | Select INT8. |
| Resource Disposition | Resources Pool | Select the resource pool used by the model compression task. |
| Subscription reminder | Subscription reminder | After this function is enabled, the system sends SMS or email notifications to users when the task status is updated. |
| publish model | Enable automatic publishing | If this function is disabled, you must publish the model to the model asset library manually after the task is complete. If this function is enabled, configure the visibility, model name, and description. |
| Basic Information | Task name | Name of the model compression task. |
| | Compressed model name | Name of the compressed model. (This configuration item is available only when Enable automatic publishing is disabled.) |
| | Description | Description of the model compression task. |

- After setting the parameters, click Create Now.
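The INT8 compression policy in Table 1 stores model weights as 8-bit integers instead of floating-point values. This page does not describe the algorithm the platform uses, so the following is only a minimal sketch of one common scheme, symmetric per-tensor quantization, to show the idea. The function names and the sample weights are hypothetical.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor; fall back to 1.0 if all weights are zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the INT8 codes."""
    return [v * scale for v in q]


weights = [0.82, -1.27, 0.05, 0.0, 0.64]  # made-up values for illustration
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)

# The rounding error per weight is at most half of one quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max_error={max_error:.6f}")
```

Each weight then occupies one byte plus a shared scale factor, at the cost of a small rounding error. Production schemes often use per-channel scales or calibration data to reduce that error.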
