Labeling Text Datasets

Creating a Text Dataset Labeling Task

Before labeling a text dataset, import data. For details, see Importing Data to the Pangu Platform.

The data labeling function allows you to create annotation tasks, label datasets (annotation tasks), review labeled datasets (review tasks), and manage annotation tasks (task management). The supported functions and the pages displayed differ slightly by role. For details, see Table 1.

Table 1 Functions supported by each role

| Role Name | Labeling Task Creation | Labeling Task | Labeling Review | Labeling Task Management |
|---|---|---|---|---|
| Super Admin | √ | √ | - | √ |
| Administrator | √ | √ | - | √ |
| Annotation administrator | √ | √ | - | √ |
| Annotation operator | - | √ | - | - |
| Annotation auditor | - | - | √ | - |
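
If you script checks around task assignment, the role matrix in Table 1 can be restated as a small lookup. The following is a minimal sketch, not a platform API: the action names (`create`, `label`, `review`, `manage`) and the helper functions are hypothetical and only mirror the table columns.

```python
# Hypothetical restatement of Table 1. This is not a platform API.
# Actions: "create" (task creation), "label" (labeling task),
# "review" (labeling review), "manage" (task management).
PERMISSIONS = {
    "Super Admin": {"create", "label", "manage"},
    "Administrator": {"create", "label", "manage"},
    "Annotation administrator": {"create", "label", "manage"},
    "Annotation operator": {"label"},
    "Annotation auditor": {"review"},
}


def can(role: str, action: str) -> bool:
    """Return True if Table 1 marks `action` as supported for `role`."""
    return action in PERMISSIONS.get(role, set())


def roles_for(action: str) -> list[str]:
    """Return the roles that support `action`, sorted by name."""
    return sorted(role for role, actions in PERMISSIONS.items() if action in actions)
```

For example, `can("Annotation operator", "create")` is false in this sketch, which matches the table: operators can label data but cannot create tasks.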

To create a text dataset annotation task, perform the following steps:

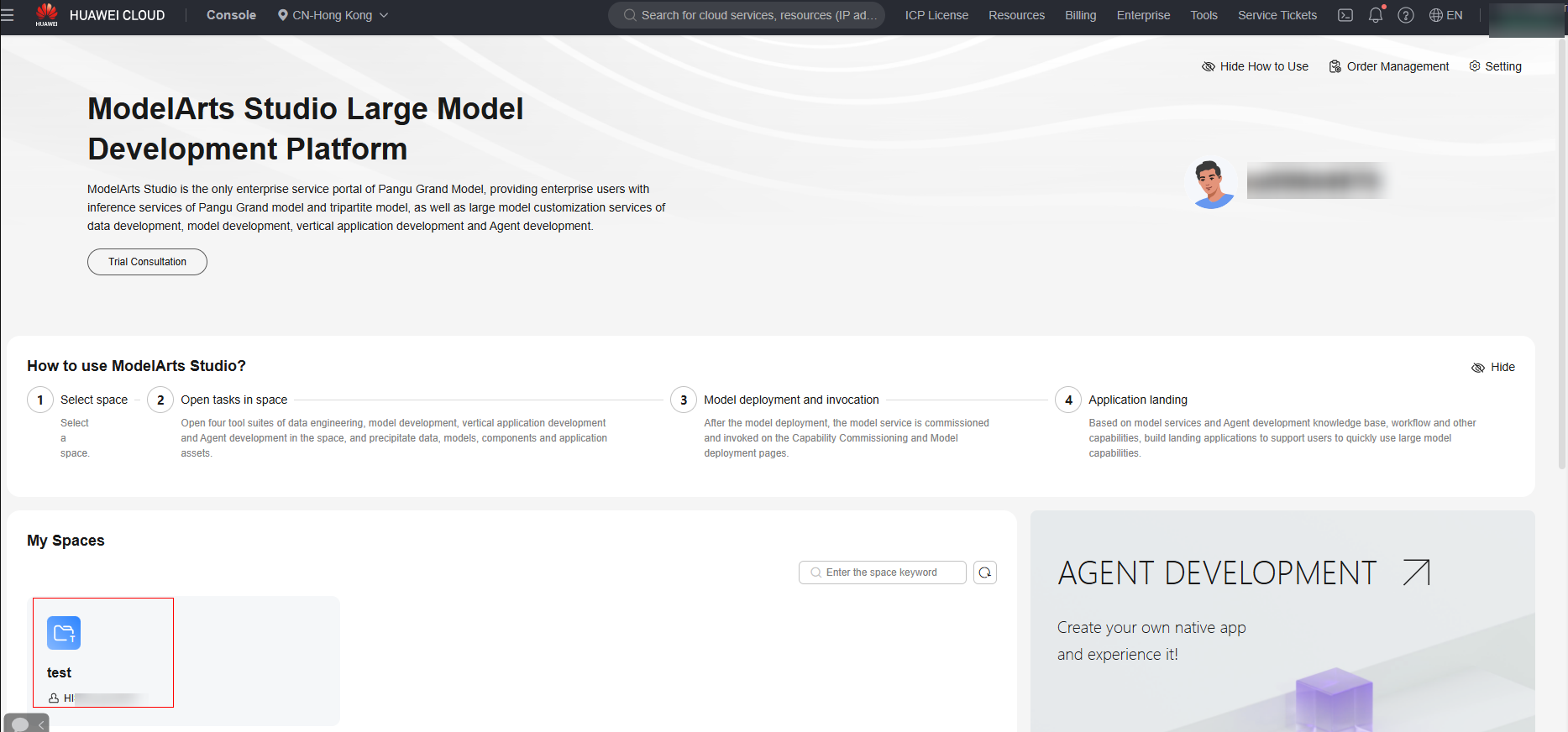

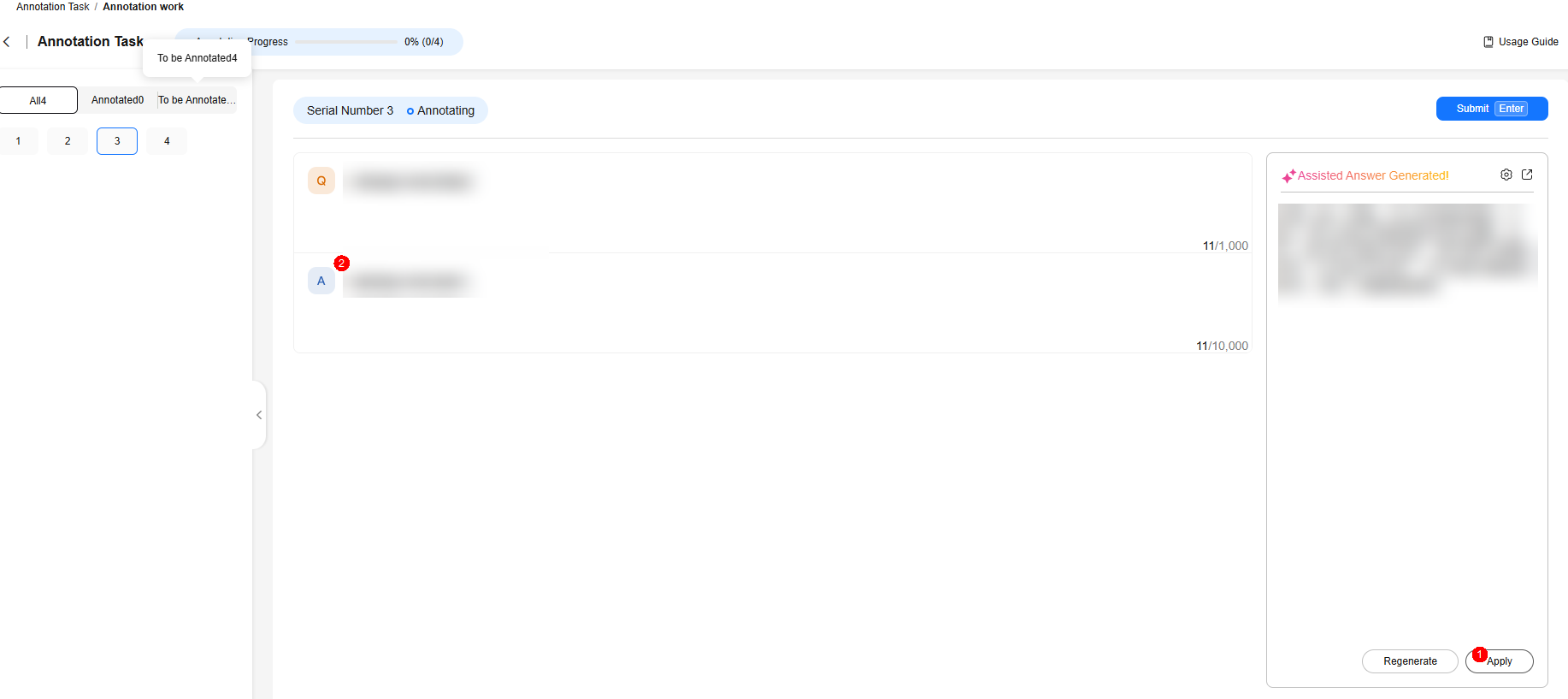

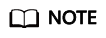

- Log in to ModelArts Studio Large Model Development Platform. In the My Spaces area, click the required workspace.

Figure 1 My Spaces

- In the navigation pane, choose Data Engineering > Data Processing > Data Labeling. On the displayed page, click Create annotation task in the upper right corner.

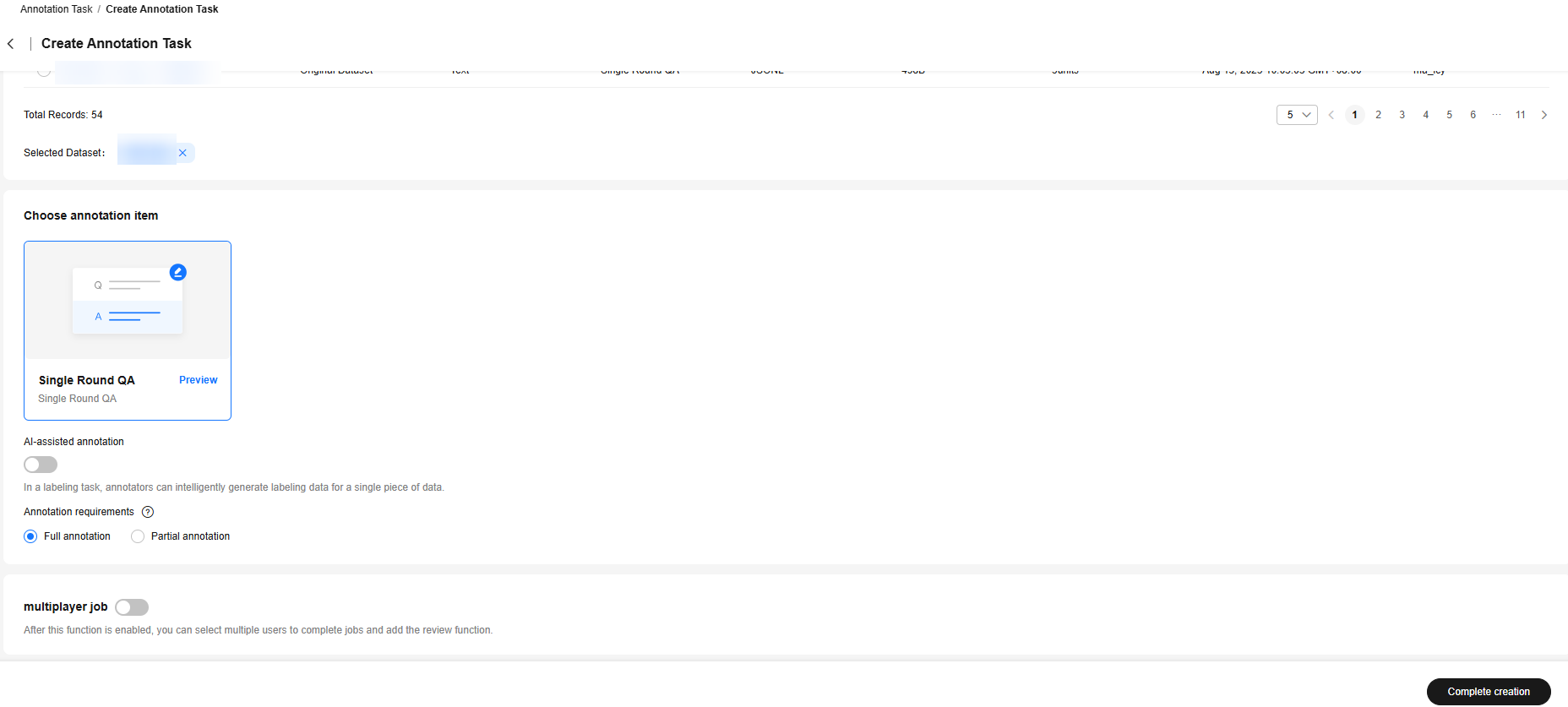

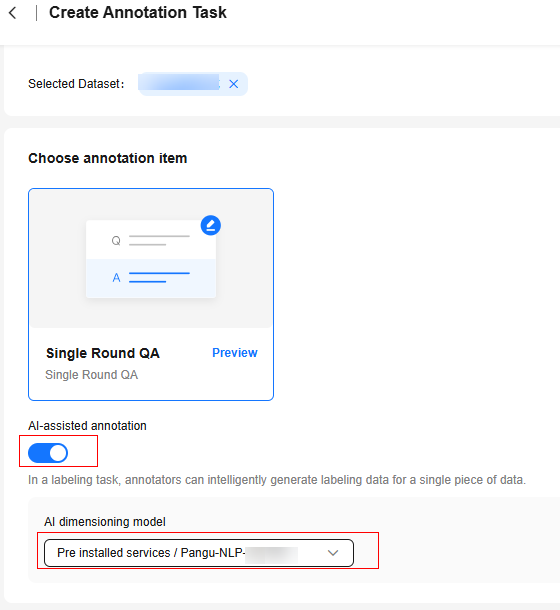

- On the Create annotation task page, select the text dataset to be annotated and select annotation items. The annotation items vary depending on the data file type. You can select annotation items as prompted.

The Single Round Q&A annotation item supports the AI-assisted annotation function.

Figure 2 Create Annotation Task

- You can enable the multi-person job function. After this function is enabled, you can assign the task to multiple people. You can also enable the review function if required. Configure labeling assignment and review by referring to Table 2.

Table 2 Labeling assignment and review configuration

| Category | Parameter Name | Description |
|---|---|---|
| Labeling assignment | Annotator | Add annotators and the number of annotations. |
| Labeling review | Is reviewed | No: The review operation is not performed after labeling.<br>Yes: The reviewer checks the annotation content of the annotator. If any problem is found, the reviewer can specify the reason and reject the annotation data. The annotator needs to label the data again. |
| Labeling review | Reviewer | Add reviewers and the number of reviewers. |
| Labeling review | Review requirements | Full review: The reviewer needs to manually review all data records one by one.<br>Partial review: If the labeling quality of some data is high, the reviewer can submit the remaining data for review in one-click mode. By default, the data is approved and the review task is complete. |

- After the configuration is complete, click Complete creation.

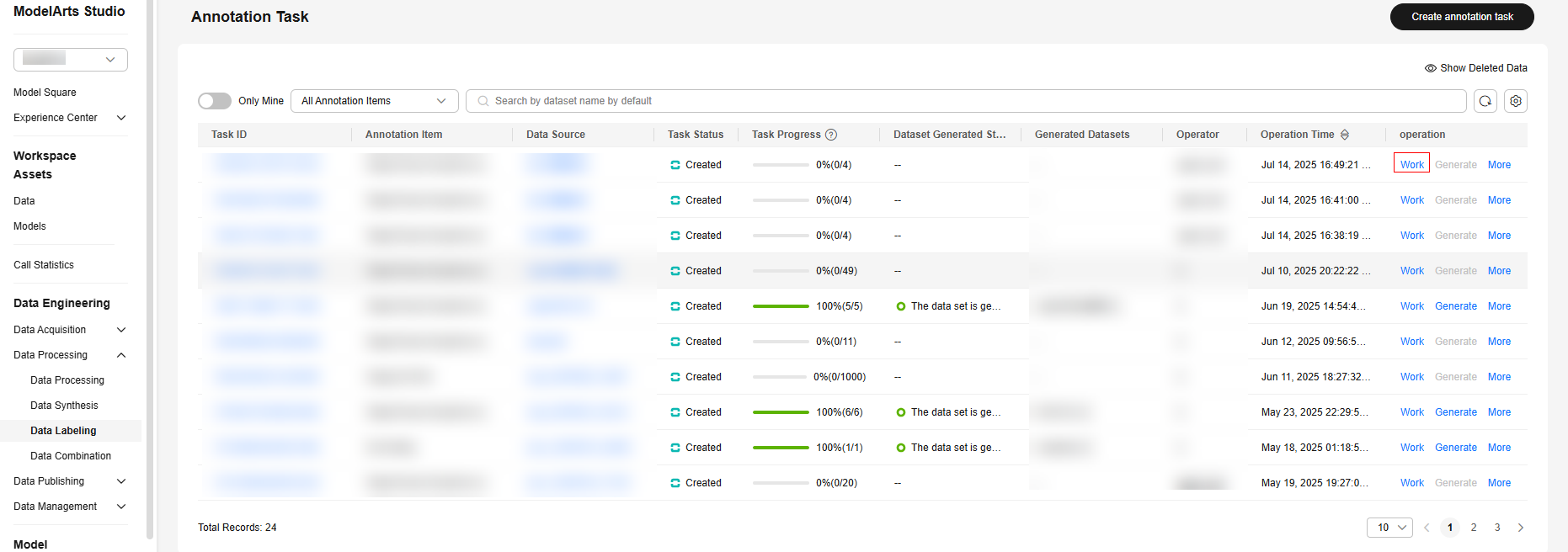

- On the Annotation Task page, click Work in the Operation column of the current labeling task to execute the task.

If you have the Annotation operator role, click Annotation to execute the task.

To transfer the labeling task to another person, click Transfer, set the transferee and the transfer quantity, and click OK.

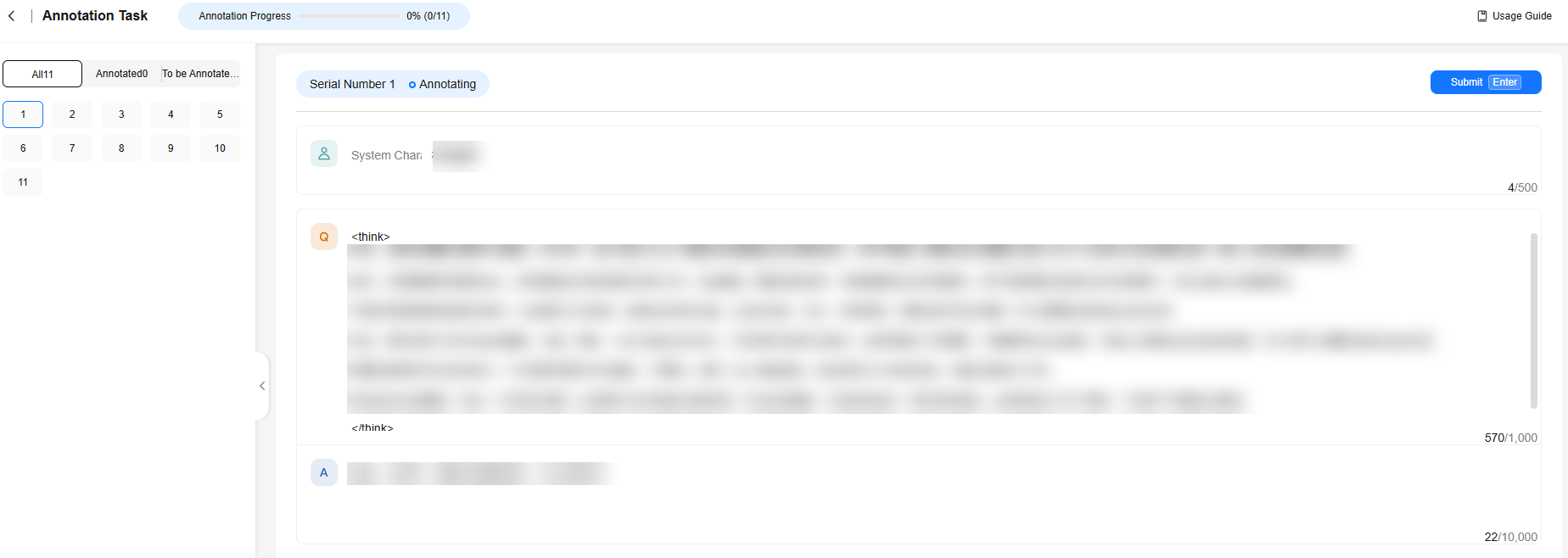

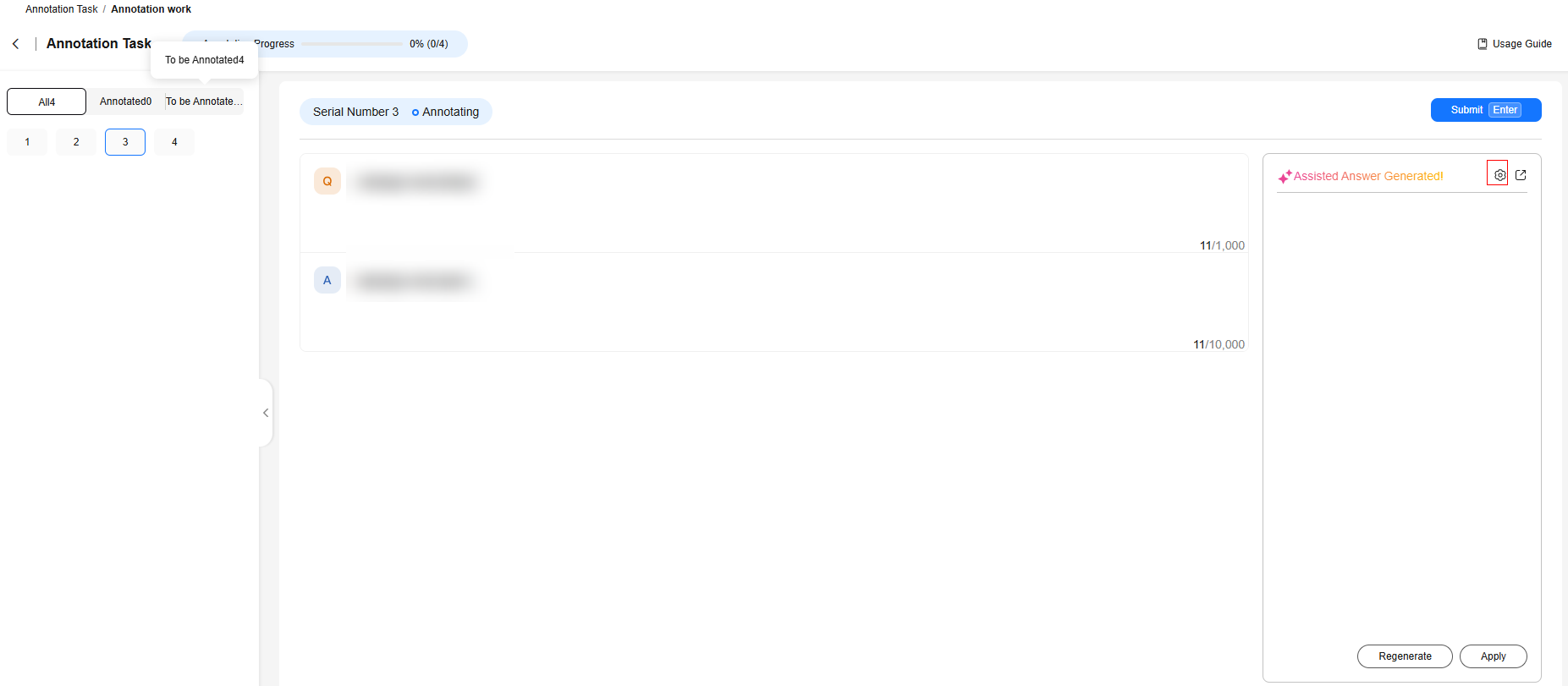

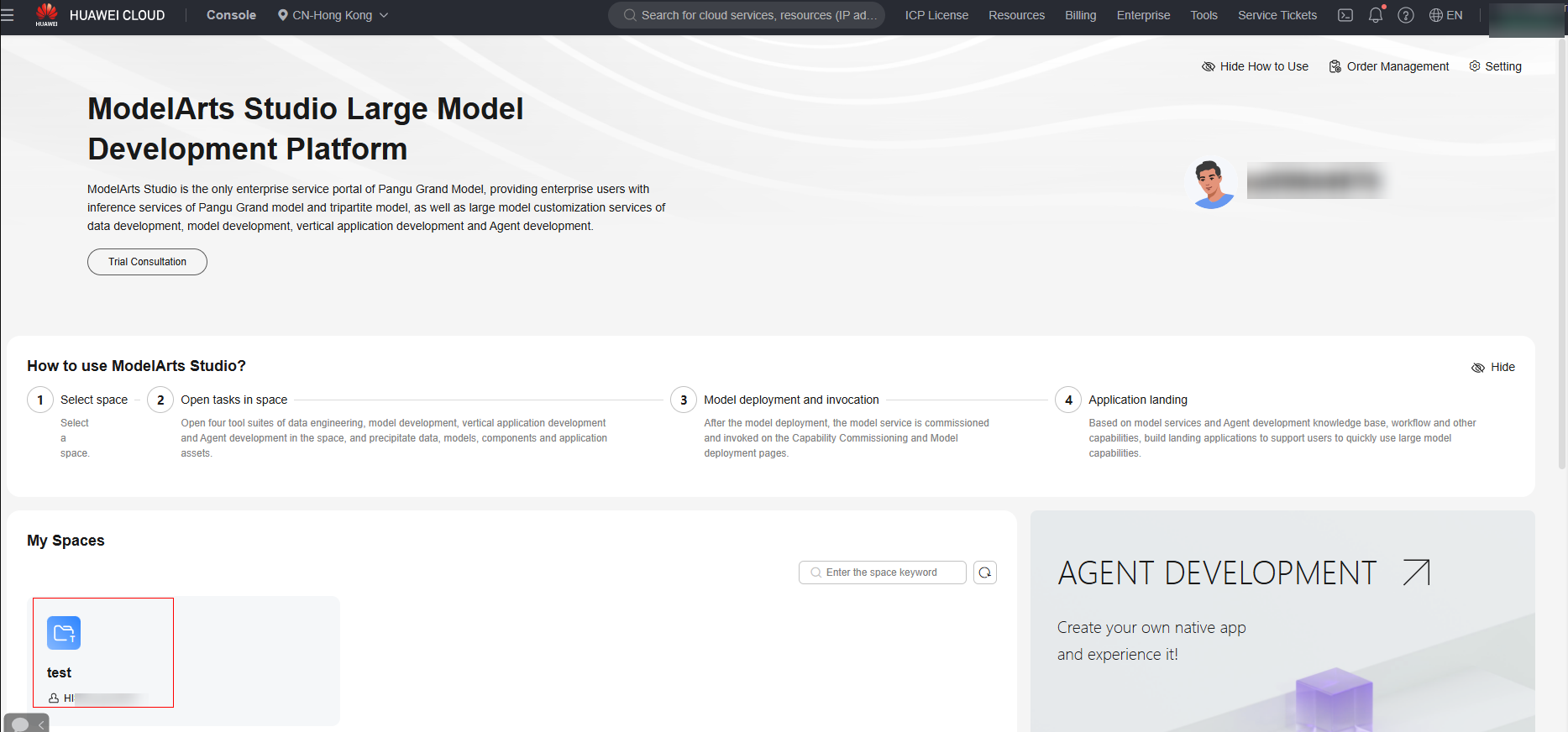

- On the labeling page, label the data records one by one.

As shown in Figure 3, when labeling single-turn Q&A data, check whether the question (Q) and the answer (A) of each record are correct. If either is incorrect, edit it. Content in Markdown format can also be edited and previewed.

- After labeling a data record, click Submit and continue with the remaining records. After all data is labeled, a message is displayed, indicating that the labeling task is successful.

After data labeling is complete, if you do not need to review the labeling result, click Generate on the Annotation Task > Task Management page to generate a processed dataset.

To view the processed dataset, choose Data Engineering > Data Management > Datasets, and click the Processed Dataset tab.
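
The assignment and review settings from Table 2 can be written down as a small configuration object and checked before you fill in the page. This is a minimal sketch under stated assumptions: the class, field names, and validation rules are hypothetical and only mirror the table, and the interpretation of "number of annotations" as a per-annotator record count is an assumption. It does not call any platform interface.

```python
from dataclasses import dataclass, field


@dataclass
class LabelingTaskConfig:
    """Hypothetical mirror of the Table 2 parameters (not a platform API)."""
    # Annotator name -> number of records assigned (assumed meaning of
    # "number of annotations").
    annotators: dict[str, int]
    is_reviewed: bool = False                      # "Is reviewed": Yes/No
    reviewers: list[str] = field(default_factory=list)
    review_requirement: str = "full"               # "full" or "partial"

    def validate(self) -> list[str]:
        """Return a list of human-readable problems; empty means consistent."""
        problems = []
        if not self.annotators:
            problems.append("at least one annotator is required")
        if any(count <= 0 for count in self.annotators.values()):
            problems.append("annotation counts must be positive")
        if self.is_reviewed and not self.reviewers:
            problems.append("review is enabled but no reviewer is set")
        if not self.is_reviewed and self.reviewers:
            problems.append("reviewers are set but review is disabled")
        if self.review_requirement not in ("full", "partial"):
            problems.append("review requirement must be 'full' or 'partial'")
        return problems
```

A configuration with review enabled but no reviewer, for instance, is flagged by `validate()` before any task is created.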

AI-assisted Labeling

In a labeling task, the annotator can use an automatically generated label for a single data record. The procedure is as follows:

- On the Create Annotation Task page, select a dataset, enable AI-assisted annotation, and click Complete creation.

Figure 4 AI-assisted labeling

- On the Annotation Task page, click Work in the Operation column of the task to access the labeling page.

Figure 5 Annotation Task

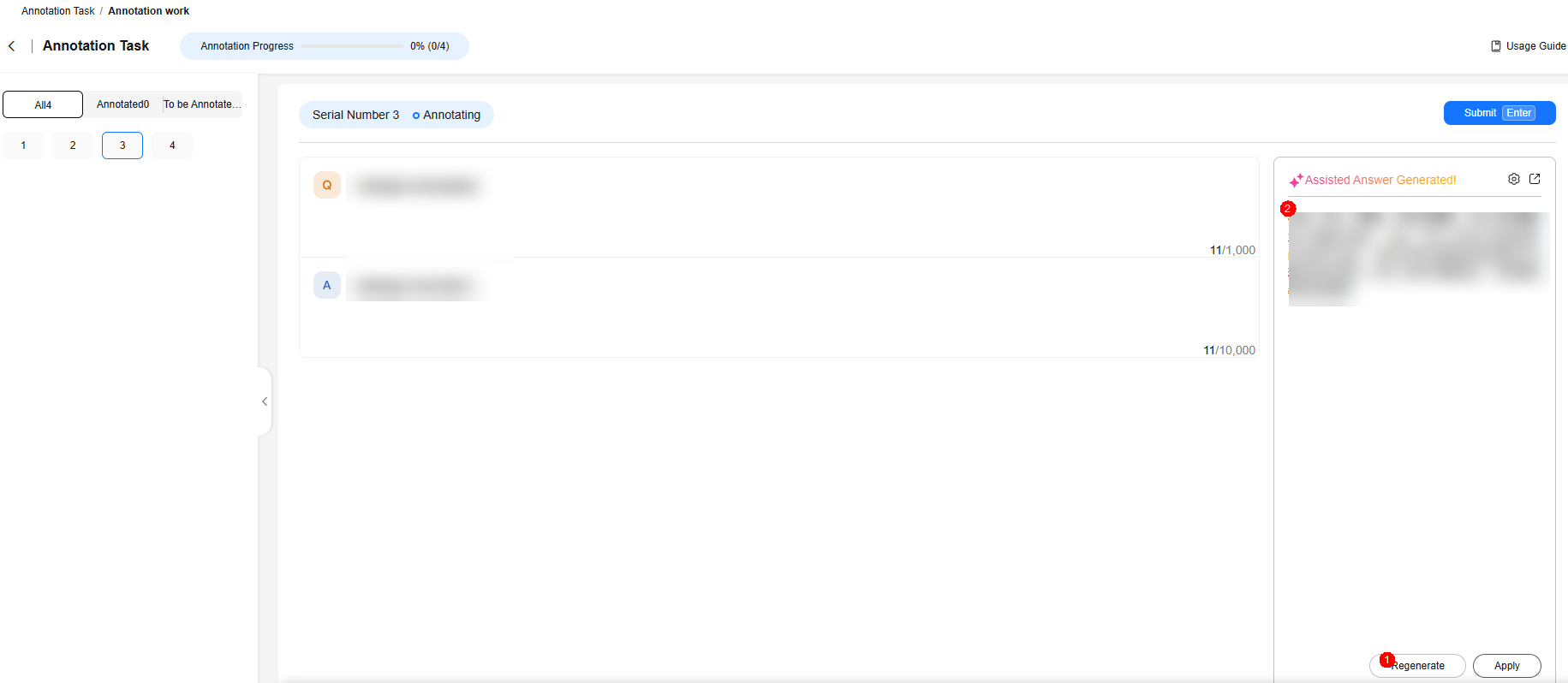

- Click the Settings button, modify the AI labeling model, and click OK.

Figure 6 Settings

- Click Regenerate to generate a new answer.

Figure 7 Regenerate

- Click Apply. The regenerated content is displayed in the answer box.

Figure 8 Apply

Reviewing a Labeled Text Dataset

If the labeling review function was enabled in Creating a Text Dataset Labeling Task, the labeling result can be reviewed after labeling is complete. If a data record fails the review, you can enter the reason for the failure and return the record to the annotator for re-labeling.

You must have the permissions of the Annotation auditor role.

To review the labeling result of a text dataset, perform the following steps:

- Log in to ModelArts Studio. In the My Spaces area, click the required workspace.

Figure 9 My Spaces

- In the navigation pane, choose Data Engineering > Data Processing > Data Labeling.

- Click Review to go to the review page and review the data.

If you want to transfer the review task to another person, click Transfer, set the transferee and transfer quantity, and click OK.

- On the review page, click Approve or Reject to review the data records one by one until all data is reviewed.

After the data labeling review is complete, click Generate on the Annotation Task > Task Management page to generate a processed dataset.

To view the processed dataset, choose Data Engineering > Data Management > Datasets, and click the Processed Dataset tab.
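
The review flow described in this section (approve, reject with a reason, re-label, then generate) can be modeled as a small state machine. The sketch below is illustrative only: the `Record` class, the state names, and the helper functions are hypothetical and are not part of the platform. `approve_remaining` models the one-click approval described for partial review in Table 2.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """One labeled data record moving through review (hypothetical model)."""
    content: str
    status: str = "labeled"      # "labeled", "approved", or "rejected"
    reject_reason: str = ""


def approve(rec: Record) -> None:
    """Reviewer approves a record that is waiting for review."""
    if rec.status != "labeled":
        raise ValueError(f"cannot approve a record in state {rec.status!r}")
    rec.status = "approved"


def reject(rec: Record, reason: str = "") -> None:
    """Reviewer rejects a record and optionally records the reason."""
    if rec.status != "labeled":
        raise ValueError(f"cannot reject a record in state {rec.status!r}")
    rec.status = "rejected"
    rec.reject_reason = reason


def relabel(rec: Record, new_content: str) -> None:
    """Annotator re-labels a rejected record, sending it back to review."""
    if rec.status != "rejected":
        raise ValueError("only rejected records need re-labeling")
    rec.content = new_content
    rec.status = "labeled"
    rec.reject_reason = ""


def approve_remaining(records: list[Record]) -> int:
    """Partial review: approve every record still waiting; return the count."""
    pending = [r for r in records if r.status == "labeled"]
    for r in pending:
        approve(r)
    return len(pending)


def ready_to_generate(records: list[Record]) -> bool:
    """A processed dataset can be generated once every record is approved."""
    return all(r.status == "approved" for r in records)
```

In this model a rejected record blocks generation until it has been re-labeled and approved, which corresponds to the reject and re-label loop described above.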

Managing Labeled Text Datasets

The platform allows super administrators, administrators, and annotation administrators to perform the following operations on labeled datasets:

- Generate: After the labeled data is reviewed, the super administrator, administrator, or annotation administrator clicks Generate in the Operation column on the Annotation Task page to generate a processed dataset.

To view the processed dataset, choose Data Engineering > Data Management > Datasets, and click the Processed Dataset tab.

- Stop: The administrator can click More > Stop in the Operation column on the Annotation Task page to stop the current labeling task.

- Edit: If the labeling task supports the AI-assisted annotation and review functions, the administrator can click More > Edit in the Operation column on the Annotation Task page, choose whether to enable AI-assisted annotation, and set the review requirements.

- Delete: The administrator can click More > Delete in the Operation column on the Annotation Task page to delete the current annotation task.
