Text Dataset Processing Operators

The platform supports processing of text datasets, including data extraction, data conversion, data filtering, and data labeling. Table 1 lists the capabilities of text processing operators.

|

Category |

Operator Name |

Operator Description |

|---|---|---|

|

Data extraction |

Extracts text from a Word document and retain the contents, titles, and body of the original document, but does not retain images, tables, formulas, headers, and footers. |

|

|

Extracts all text content from a TXT file. |

||

|

Reads all text content from a CSV file and generates data in JSON format based on the key value of the file content type template. |

||

|

Extracts text from PDF files and converts the text into structured data. Texts, tables, and formulas can be extracted. |

||

|

Extracts HTML data content based on the tag path, and deletes other content irrelevant to the tag path to be extracted. |

||

|

Extracts all text content from the eBook. |

||

|

Data conversion |

Anonymizes or directly deletes sensitive personal information, such as mobile numbers, identity documents, email addresses, URLs, license plate numbers in China, IP addresses, MAC addresses, IMEIs, passports, and vehicle identification numbers. |

|

|

Searches for non-standardized symbols carried in the text for standardization and unified conversion.

|

||

|

Uses the customized regular expression to replace the text content if the data items remain unchanged. The following is an example:

|

||

|

Automatically identifies the date, time, and week, and converts the date, time, and week based on the selected format. |

||

|

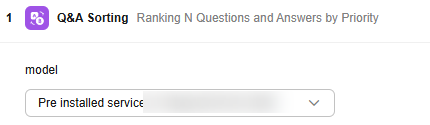

For Q&A sorting data, the large model is called to select the optimal answer and place it at the top of the answer list. The order of other answers remains unchanged. |

||

|

For Q&A sorting data, the large model is called to evaluate the scores of each pair of data, and the pre-sorting result is obtained based on the scores. |

||

|

For a single-turn Q&A, answers are generated based on the question (context field) and a new single-turn Q&A is returned. |

||

|

Checks whether the content at the end of a paragraph is complete based on the sentence-level filtering granularity, and filters out the content if the content is incomplete. |

||

|

Deletes a sentence that includes advertisement data from the text, based on a filtering granularity of a sentence. |

||

|

Data filtering |

Searches for abnormal characters carried in each data record in the dataset and replaces the abnormal characters with null values. The data items remain unchanged.

|

|

|

Deletes the data that complies with the customized regular expression. |

||

|

Deletes data that contains keywords. |

||

|

Automatically detects and filters sensitive data such as pornography, violence, and politics in text. |

||

|

Retains the data within the specified length range based on the configured text length. |

||

|

Deletes redundant information from the text based on the paragraph granularity without changing the data items. Examples include figure captions, table captions, and references. |

||

|

Determines the document repetition degree. The repetition of words in a document is calculated based on the N values of features. In this case, the following two algorithms can be used to check whether the result is greater than the feature threshold. If the result is greater than the threshold, the document is deleted.

|

||

|

The filtering is based on the following:

|

||

|

Uses punctuations in a document as sentence separators and collects statistics on the length of each sentence. If the average length of a document is greater than the configured length, the document is retained. Otherwise, the entire document is deleted. The filtering is based on the following:

|

||

|

Calculates the number of words after a document is segmented based on the system word library. After word segmentation, the total number of words is counted. The average word length is the total length of all words divided by the total number of words. If both the total length and the total number of words are met, the current document is retained. The filtering is based on the following:

|

||

|

Filters Q&A pairs that meet the following conditions:

|

||

|

Obtains the language type of the document based on the language detection model and filters the document in the required language. |

||

|

Checks and filters SFT data based on the selected rule. |

||

|

Data deduplication |

Detects and removes duplicate or highly similar text from data to prevent model overfitting or reduced generalization. |

|

|

Detects and removes duplicate or highly similar text from data to prevent model overfitting or reduced generalization. |

||

|

Data labeling |

Uses the LLM to check and score the general semantics of SFT data and filter data based on the scoring threshold. |

|

|

Uses the LLM to check and score the CoT of SFT data and filter data based on the scoring threshold. |

||

|

Analyzes the input Chinese text content and returns the JSON structured result indicating whether the text contains forbidden content. |

||

|

Analyzes the input Chinese text content and returns the JSON structured result indicating whether the text contains privacy content. |

||

|

Analyzes the input Chinese text content and returns the JSON structured result indicating whether the text contains junk content. |

||

|

Analyzes the input Chinese text content and returns the JSON structured result indicating whether the text contains junk advertisement content. |

||

|

Analyzes the input Chinese text content and returns the JSON structured result indicating whether the text contains abusive content. |

||

|

Analyzes the input Chinese text content and returns the JSON structured result indicating whether the text contains politically sensitive content. |

||

|

Classifies the pre-trained text, such as news, education, and health. The supported languages include Chinese and English. |

||

|

Assesses the general quality of text, such as fluency, clarity, and diversity. |

||

|

Checks whether the problem is time-sensitive and provides the reason. |

||

|

Scores the quality of answers to fine-tuning datasets, such as logical consistency and fact correctness. |

||

|

Assesses the syntax quality of texts, such as relevance and standardization. |

||

|

Classifies the text, such as cars, sports, and healthcare. The supported languages include Chinese and English. |

||

|

Detects the industry of the text and whether the text is scientific data, such as report analysis in the finance industry and technical research in the healthcare industry. |

Word Document Content Extraction

- Applicable file format: document > docx

- Parameter description:

Type of content to be extracted: Extracts text from a Word document and retains the titles and body of the original document, but does not retain images, formulas, headers, or footers. Nested tables cannot be extracted.

- Parameter configuration example:

No parameters need to be set. By default, the contents, titles, and body of the original document are retained, and images, tables, formulas, headers, and footers are not retained.

- Extraction example

{"fileName":"JAVA from Beginner to Master.docx","text":"JAVA is a cross-platform..."}

TXT Content Extraction

- Applicable dataset type: Document > TXT.

- Parameter description:

Type of content to be extracted: By default, the full text is extracted into one line. Text can also be extracted by paragraph, in which case the text is divided into multiple lines based on the separators you enter. Multiple separators are separated by vertical bars (|). The separator can contain a maximum of 100 characters.

- Extraction example

{"fileName":"TXT document name.txt","text":"This is the first line. "}

CSV Content Extraction

- Applicable dataset types: Text > single-turn Q&A, single-turn Q&A (with a persona), and Q&A sorting.

- Parameter description

Type of content to be extracted: Reads all text content from a CSV file and generates data in JSON format based on the key value of the file content type template.

- Parameter configuration example

- Extraction example

For example, if a CSV row contains the question "Hello, please introduce yourself" and the answer "I am Pangu model.", the extracted content is {"context":"Hello, please introduce yourself","target":"I am Pangu model."}
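The mapping from CSV columns to JSON keys can be sketched as follows. This is a minimal illustration, not the platform's implementation: the function name `csv_to_records` and the assumption that the first column maps to `context` and the second to `target` are hypothetical.

```python
import csv
import io

def csv_to_records(csv_text, keys=("context", "target")):
    """Map each CSV row onto the given keys (assumed column order)."""
    reader = csv.reader(io.StringIO(csv_text))
    # Skip empty rows; zip pairs each cell with its key by position.
    return [dict(zip(keys, row)) for row in reader if row]

sample = '"Hello, please introduce yourself","I am Pangu model."\n'
records = csv_to_records(sample)
```

Quoting the first cell keeps the comma inside the question from being read as a column separator.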

PDF Content Extraction

- Applicable dataset type: Document > PDF

- Parameter description

Type of content to be extracted: By default, the text, tables, formulas, and titles are retained. You can select the type to be saved. The types that are not selected will be removed.

Refined content extraction: indicates whether to support image content extraction after layout analysis.

- Parameter configuration example

- Extraction example

{"fileName":"JAVA from Beginner to Master.pdf","text":"JAVA is a cross-platform..."}

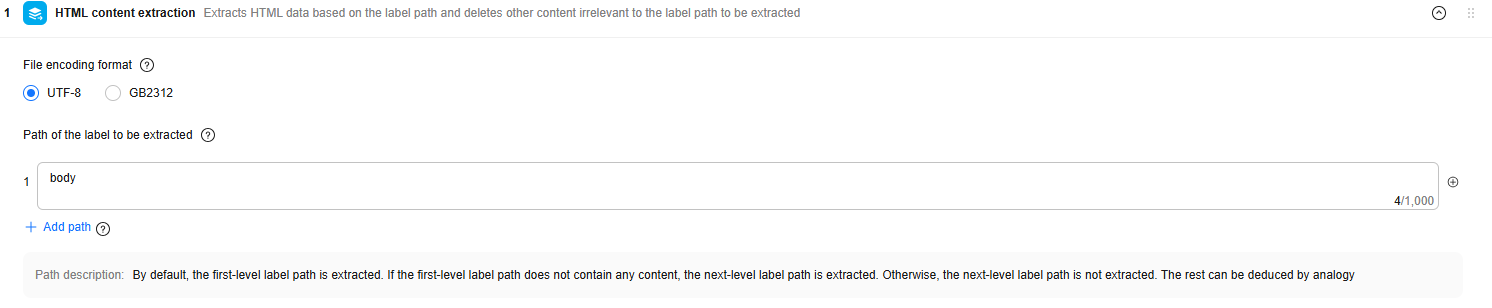

HTML Content Extraction

- Applicable dataset type: Text > Web page

- Parameter description

Type of content to be extracted: The default file encoding format is UTF-8. GB2312 is also supported. By default, the body is extracted. You can customize the content to be extracted. Multiple tags can be specified, separated by commas (,). For example, A, B, C means that the content of tags A, B, and C is extracted.

- Parameter configuration example

- Extraction example

{"text":"#\nI am Pangu model.\nPangu model is an advanced AI model that is dedicated to providing intelligent solutions for various industries.\n","fileName":"Web page.html"}

E-book Content Extraction

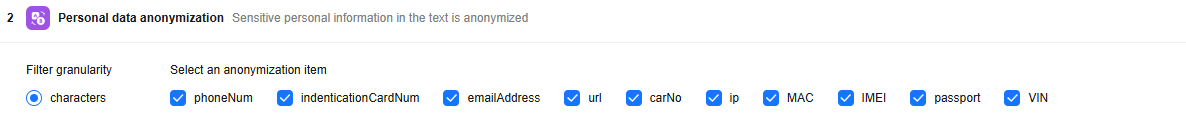

Personal Data Anonymization

- Applicable dataset type: Text.

- Parameter description

Type of content to be converted: Anonymizes sensitive personal information in the text, such as mobile numbers, ID cards, email addresses, URLs, license plate numbers in China, IP addresses, MAC addresses, IMEIs, passports, and vehicle identification numbers. By default, all options are selected. You can also select some of them.

- Parameter configuration example

- Conversion example

Before processing: "Data is from www.test.com"

After processing: "Data is from *******"

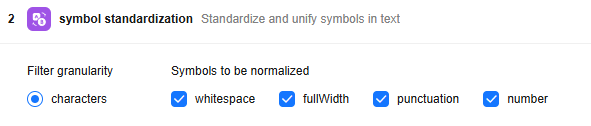
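As a rough illustration of this kind of masking, the sketch below replaces a few categories with a fixed mask. The regular expressions, the category names, and the `anonymize` helper are simplified assumptions for illustration and cover only email addresses, URLs, and IPv4 addresses, not the full list the operator supports.

```python
import re

# Simplified, illustrative patterns (assumptions, not the operator's rules).
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "url": re.compile(r"(?:https?://|www\.)\S+"),
    "ipv4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
}

def anonymize(text, kinds=("email", "url", "ipv4"), mask="*******"):
    """Replace every match of the selected categories with the mask."""
    for kind in kinds:
        text = PATTERNS[kind].sub(mask, text)
    return text

masked = anonymize("Data is from www.test.com")
```

Passing a subset of `kinds` mirrors selecting only some of the anonymization options.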

Symbol Standardization

- Applicable dataset type: Text.

- Parameter description

Type of content to be converted: Converts non-standard symbols in the text to standard symbols. Non-standard symbols include spaces, double-byte character (DBC) symbols, punctuation marks, and number symbols. By default, all types of non-standard symbols are selected. The filtering granularity is character.

- Parameter configuration example

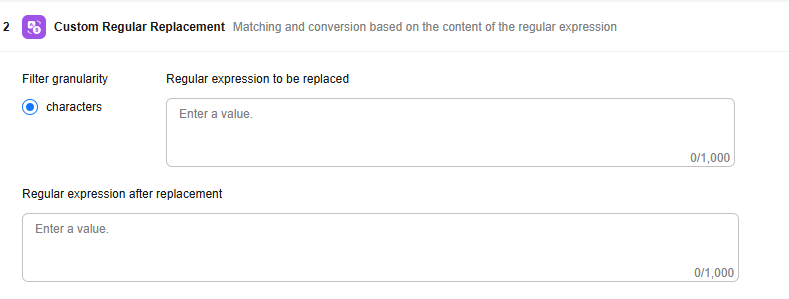

Custom Regular Expression Replacement

- Applicable dataset type: Text.

- Parameter description

Type of content to be converted: Replaces text content that matches a custom regular expression. The data items remain unchanged.

- Parameter configuration example

- Conversion example

Before processing: {"text":"This is the main content aeiou in the test aeiou. "}

After processing: {"text":"This is the main content 11111 in the test 11111. "}
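The example above is consistent with replacing every match of the pattern `aeiou` with `11111`. A minimal sketch, assuming that pattern and replacement and a hypothetical `regex_replace` helper:

```python
import re

def regex_replace(record, pattern, replacement, field="text"):
    """Return a copy of the record with matches in `field` replaced."""
    out = dict(record)
    out[field] = re.sub(pattern, replacement, record[field])
    return out

before = {"text": "This is the main content aeiou in the test aeiou. "}
after = regex_replace(before, r"aeiou", "11111")
```

The record keeps all its fields; only the matched text changes.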

Date and Time Format Conversion

- Applicable dataset type: Text.

- Parameter description

Type of content to be converted: Automatically identifies the date, time, and week, and converts the date, time, and week based on the selected format. The conversion types include date format, time format, and week format. By default, all of them are selected. You can also select some of them.

- Parameter configuration example

- Conversion example

Before processing: {"text":"Today is Monday, March 3, 2025. The rain is heavy in the morning. "}

After processing: {"text":"Today is Monday, 2025-03-03 00:00:00. The rain is heavy in the morning. "}
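A date conversion of this kind can be sketched as below. The sketch only recognizes English dates written as "Month D, YYYY" and always emits `YYYY-MM-DD HH:MM:SS`; the `normalize_dates` helper and its pattern are assumptions for illustration, and the operator's own recognition rules are not described here.

```python
import re
from datetime import datetime

MONTHS = ["January", "February", "March", "April", "May", "June", "July",
          "August", "September", "October", "November", "December"]
DATE_RE = re.compile(r"\b(" + "|".join(MONTHS) + r") (\d{1,2}), (\d{4})\b")

def normalize_dates(text, fmt="%Y-%m-%d %H:%M:%S"):
    """Rewrite 'Month D, YYYY' dates in the given output format."""
    def repl(match):
        month = MONTHS.index(match.group(1)) + 1
        try:
            parsed = datetime(int(match.group(3)), month, int(match.group(2)))
        except ValueError:
            return match.group(0)  # leave impossible dates untouched
        return parsed.strftime(fmt)
    return DATE_RE.sub(repl, text)

converted = normalize_dates(
    "Today is Monday, March 3, 2025. The rain is heavy in the morning. ")
```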

Data Distillation

- Applicable dataset types: Text > single-turn Q&A and single-turn Q&A (with a persona) > JSONL

- Parameter description:

Model: Select the model to be used. The pre-installed services and personal services are supported.

- Parameter configuration example:

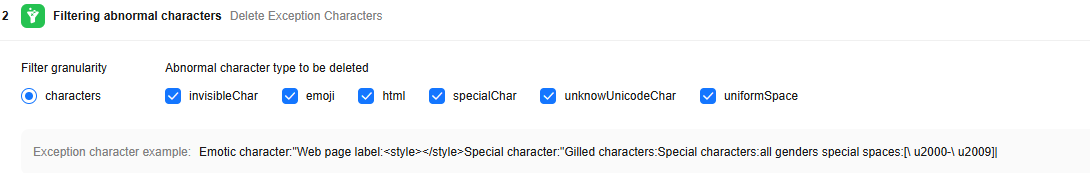

Filtering Abnormal Characters

- Applicable dataset type: Text.

- Parameter description

Type of content to be filtered: Searches for abnormal characters in each data record in the dataset and replaces them with null values. The data items remain unchanged. Types of abnormal characters include invisible characters, emojis, web page tags, special characters, garbled characters, and special spaces. By default, all types are selected. You can also select some of them.

- Parameter configuration example

- Filtering example

Before processing: {"text":"Test exception. <style></style>Haha. Limited-time offer! ☺"}

After processing: {"text":"Test exception. Haha. Limited-time offer!"}

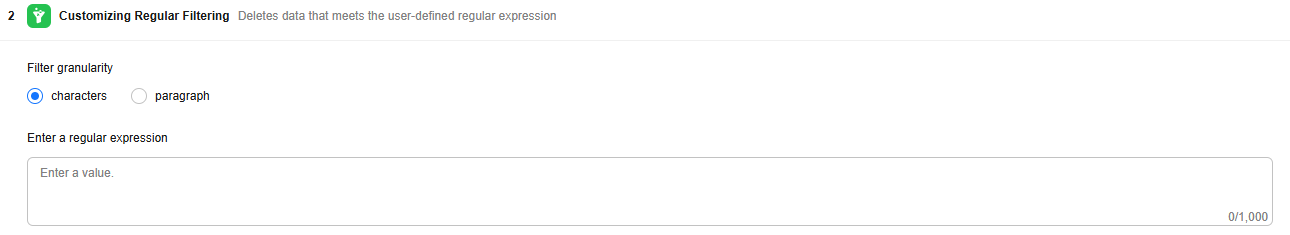
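One way to approximate this behavior is to strip tags with a regular expression and drop characters by Unicode category. The category list and the `strip_abnormal` helper below are assumptions chosen for illustration; the sketch also trims leading and trailing whitespace.

```python
import re
import unicodedata

TAG_RE = re.compile(r"<[^>]+>")
# Control, format, symbol-other (emoji), private-use, and unassigned code points.
DROP_CATEGORIES = {"Cc", "Cf", "So", "Co", "Cn"}

def strip_abnormal(text):
    """Remove web page tags and abnormal characters from the text."""
    text = TAG_RE.sub("", text)
    kept = [ch for ch in text
            if ch in "\n\t" or unicodedata.category(ch) not in DROP_CATEGORIES]
    return "".join(kept).strip()

cleaned = strip_abnormal(
    "Test exception. <style></style>Haha. Limited-time offer! \u263a")
```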

Custom Regular Expression Filtering

- Applicable dataset type: Text.

- Parameter description

Type of content to be filtered: Filters content based on a custom regular expression. The filtering granularity can be character (default) or paragraph.

- Parameter configuration example

- Filtering example

Filtering out the content following "References"

Before processing: {"text":"This is the body content. References [1] Author 1, Article 1, Journal 1, 2021.[2] Author 2, Article 2, Journal 2, 2022."}

After processing: {"text":"This is the body content. "}

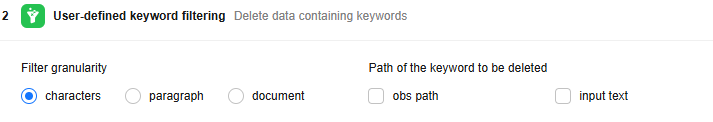
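The "References" example can be reproduced with a pattern that matches from the word "References" to the end of the text. The pattern and the `regex_filter` helper are illustrative assumptions:

```python
import re

def regex_filter(record, pattern, field="text"):
    """Return a copy of the record with all matches in `field` deleted."""
    out = dict(record)
    # DOTALL lets '.' cross line breaks so multi-line tails are removed too.
    out[field] = re.sub(pattern, "", record[field], flags=re.DOTALL)
    return out

before = {"text": "This is the body content. References [1] Author 1, "
                  "Article 1, Journal 1, 2021.[2] Author 2, Article 2, "
                  "Journal 2, 2022."}
after = regex_filter(before, r"References.*")
```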

Custom Keyword Filtering

- Applicable dataset type: Text.

- Parameter description

Type of content to be filtered: The filtering granularity can be character (default), paragraph, or document. The keywords to be deleted can be imported from an OBS path or entered as text.

- Parameter configuration example

- Filtering example

Before processing: {"text":"Keyword test. This is a test data record. "}

After processing: {"text":"Keyword. This is a test data record. "}

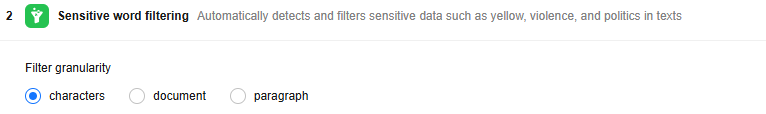

Sensitive Word Filtering

- Applicable dataset type: Text.

- Parameter description

Type of content to be filtered: Automatically detects and filters sensitive data such as pornographic, violent, and political content in the text. Sensitive words need to be preset. The filtering granularity can be character (default), paragraph, or document.

- Parameter configuration example

- Filtering example

Before processing: {"text":"prostitute test"}

After processing: {"text":"test"}

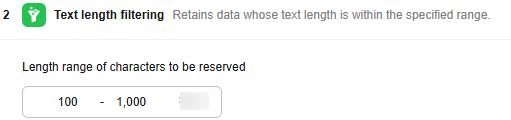

Filtering Based on the Text Length

- Applicable dataset type: Text.

- Parameter description

Type of content to be filtered: Retains data whose text length is within the specified range. By default, text of 100 to 1000 characters is retained. The range can be modified.

- Parameter configuration example

- Filtering example

Before processing: {"text":"Test length"}

After processing: {"text":""}

Redundant Information Filtering

- Applicable dataset type: Text.

- Parameter description

Type of content to be filtered: Deletes redundant information from the text based on the paragraph granularity without changing the data items. The content types that can be filtered include figures, notes, and references. By default, all content types are selected. You can also select some of them.

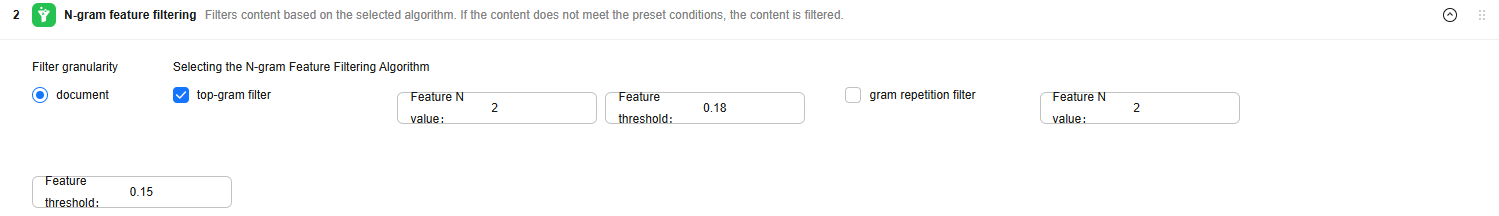

N-gram Feature Filtering

- Applicable dataset type: Text.

- Parameter description

Type of content to be filtered: The filtering granularity is document. You can select top-gram filtering or gram repetition rate filtering. By default, top-gram filtering is selected. In top-gram filtering mode, the default value of feature N is 2, and the default value of feature threshold is 0.18. In gram repetition rate filtering mode, the default value of feature N is 2, and the default value of feature threshold is 0.15. The values can be changed.

- Parameter configuration example

- Filtering example

Before processing: {"text":"Wake up. Today is Sunday. Today is a holiday. Tomorrow is Monday. Tomorrow is a working day. "}

After processing: {"text":""}

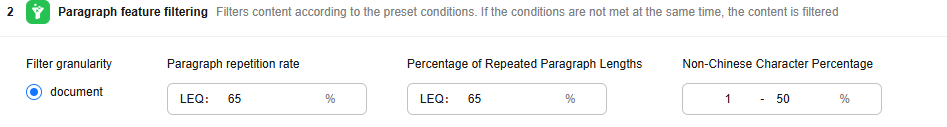
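The two modes are not defined in detail here, so the sketch below rests on assumed definitions: the top-gram score is the share of all n-grams taken by the most frequent n-gram, and the gram repetition rate is the share of n-grams that occur more than once. Tokenization by `\w+` and all function names are also assumptions, so the scores will not necessarily match the operator's.

```python
import re
from collections import Counter

def _ngrams(text, n):
    tokens = re.findall(r"\w+", text.lower())
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def top_gram_ratio(text, n=2):
    """Share of all n-grams taken by the single most frequent n-gram."""
    grams = _ngrams(text, n)
    if not grams:
        return 0.0
    return Counter(grams).most_common(1)[0][1] / len(grams)

def gram_repetition_rate(text, n=2):
    """Share of n-grams that belong to an n-gram occurring more than once."""
    grams = _ngrams(text, n)
    if not grams:
        return 0.0
    counts = Counter(grams)
    return sum(c for c in counts.values() if c > 1) / len(grams)

def keep_document(text, mode="top", n=2, threshold=None):
    """Keep the document unless its score exceeds the feature threshold."""
    if mode == "top":
        score, default = top_gram_ratio(text, n), 0.18
    else:
        score, default = gram_repetition_rate(text, n), 0.15
    return score <= (default if threshold is None else threshold)
```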

Paragraph Feature Filtering

- Applicable dataset type: Text.

- Parameter description

Types of content to be filtered: Filters the content based on the document filtering granularity, paragraph repetition rate, proportion of the length of repeated paragraphs, and proportion of non-Chinese characters. If any of the specified conditions is not met, the content is filtered out. The default values are as follows: The paragraph repetition rate is less than or equal to 65%, the proportion of the length of repeated paragraphs is less than or equal to 65%, and the proportion of non-Chinese characters ranges from 1% to 50%. The values can be changed.

- Parameter configuration example

- Filtering example

Before processing: {"text":"It is said that the fox only appears to those with pure hearts and sincere wishes. Under the light of the moon, it will gracefully emerge, gazing at the visitor with eyes that shimmer with wisdom. Only when the fox senses the visitor's sincerity and purity will it speak, asking about their desires. Yet, the granting of a wish is never without cost. Each fulfilled wish demands a corresponding price—perhaps a cherished memory, a beloved possession, or even a piece of one's life. Therefore, villagers must carefully ponder before making a wish, considering whether they are truly willing to pay such a price."}

After processing:

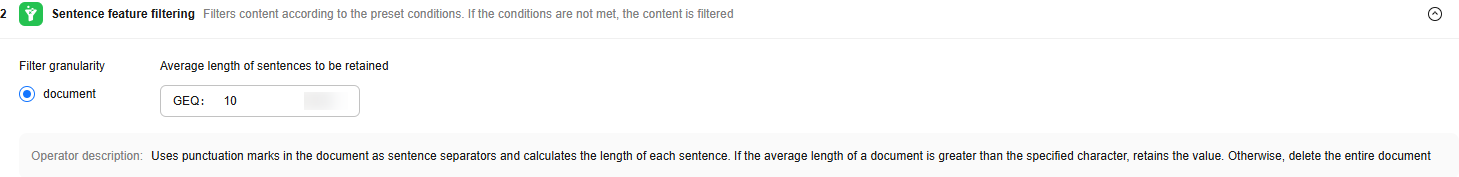

Sentence Feature Filtering

- Applicable dataset type: Text.

- Parameter description

Type of content to be filtered: Filters the content based on the document filtering granularity and the average sentence length to be retained. If the content does not meet the requirements, the content is filtered out. The default average sentence length to be retained is greater than or equal to 10 characters, which can be modified.

- Parameter configuration example

- Filtering example

Before processing: {"text":"In a small village, there is a legend. According to the legend, a mysterious fox appears in the village forest every full moon night. "}

After processing: {"text":""}

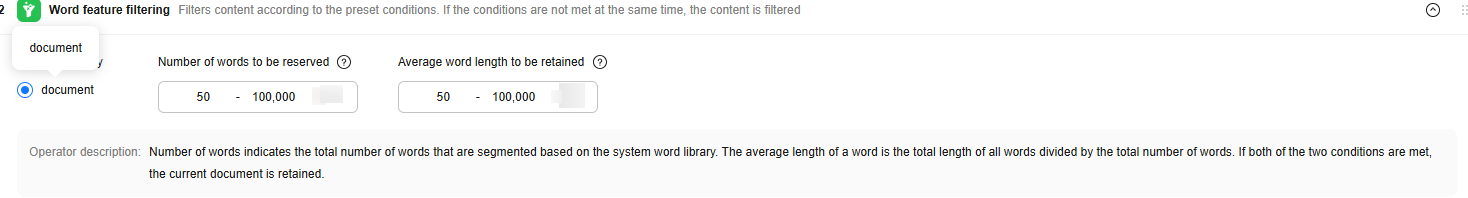
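The average sentence length check can be sketched as follows, assuming sentences are split on a small set of Western and Chinese punctuation marks and length is counted in characters. The separator set and function names are illustrative assumptions.

```python
import re

SENTENCE_SEPARATORS = r"[.!?;。！？；]+"

def average_sentence_length(text):
    """Mean character length of the sentences found in the text."""
    sentences = [s.strip() for s in re.split(SENTENCE_SEPARATORS, text)]
    sentences = [s for s in sentences if s]
    if not sentences:
        return 0.0
    return sum(len(s) for s in sentences) / len(sentences)

def keep_by_sentence_length(text, min_average=10):
    """Retain the document only if its average sentence length is large enough."""
    return average_sentence_length(text) >= min_average
```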

Word Feature Filtering

- Applicable dataset type: Text.

- Parameter description

Type of content to be filtered: Filters the content by document based on the number of words to be retained (50-100,000 characters by default) and the average length of words to be retained (50-100,000 characters by default). If either condition is not met, the content is filtered out. The default values can be changed.

- Parameter configuration example

- Filtering example

Before processing: {"text":"It is said that the fox only appears to those with pure hearts and sincere wishes. "}

After processing: {"text":""}

Removing the Incomplete Sentence at the End of a Paragraph

- Applicable dataset type: Text.

- Parameter description

Type of content to be filtered: Checks whether the content at the end of a paragraph is complete based on the sentence-level filtering granularity, and deletes the content if the content is incomplete.

- Parameter configuration example

- Filtering example

Before processing: "JAVA is an object-oriented programming language. Use JAVA to,"

After processing: "JAVA is an object-oriented programming language."
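A simple heuristic that reproduces the example above is to cut the paragraph back to its last sentence-ending punctuation mark. The set of terminators and the `drop_incomplete_tail` helper are assumptions for illustration; the operator's actual completeness check is not described here.

```python
TERMINATORS = ".!?。！？"

def drop_incomplete_tail(paragraph):
    """Remove trailing text that does not end with a sentence terminator."""
    stripped = paragraph.rstrip()
    if not stripped or stripped[-1] in TERMINATORS:
        return stripped
    last = max(stripped.rfind(mark) for mark in TERMINATORS)
    # If no terminator exists at all, the whole paragraph is incomplete.
    return stripped[:last + 1] if last != -1 else ""

trimmed = drop_incomplete_tail(
    "JAVA is an object-oriented programming language. Use JAVA to,")
```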

Advertisement Data Removal

- Applicable dataset type: Text.

- Parameter description

Type of content to be filtered: Deletes a sentence that includes advertisement data from the text, based on a filtering granularity of a sentence.

- Parameter configuration example

- Filtering example

Before processing: {"text":"Specific discount! Buy our products and enjoy a discount of up to 50%! Click the link below to avail the discount at https://example.com. Seize this opportunity now and take action! "}

After processing: {"text":""}

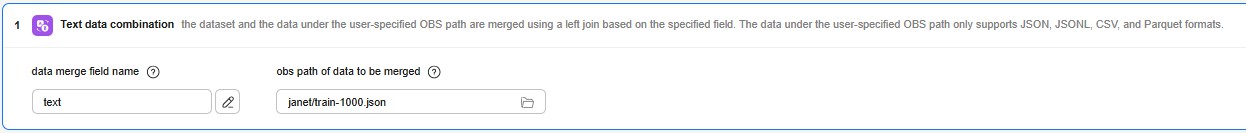

Data Merging

- Applicable dataset type: Text.

- Parameter description:

data merge field name: name of the field that is the same in the two data records. It is used as the key for data merging.

obs path of data to be merged: OBS path of the dataset to be merged. Only JSON, JSONL, CSV, and Parquet files are supported.

- Parameter configuration example:

- Conversion example

Before processing: {"context": "The reference information does not provide enough information to generate a meaningful question. ","target": "Cannot generate questions based on the reference information", "start_domain_specific_l1": "Other"}

After processing: {"context": "The reference information does not provide enough information to generate a meaningful question. ","target": "Cannot generate questions based on the reference information", "start_domain_specific_l1": "Other","description":"Description knowledge of other domains"}

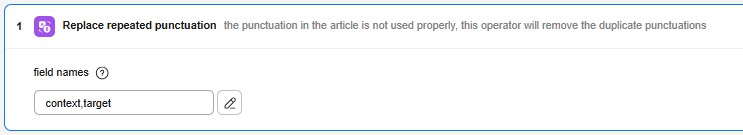
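The merge in the example behaves like a lookup join on the shared field. The sketch below assumes that fields from the dataset to be merged are added only when the original record does not already contain them; the `merge_records` helper and this conflict rule are assumptions.

```python
def merge_records(records, extra, key):
    """Add fields from `extra` rows to records that share the same key value."""
    lookup = {row[key]: row for row in extra if key in row}
    merged = []
    for rec in records:
        match = lookup.get(rec.get(key), {})
        additions = {k: v for k, v in match.items() if k not in rec}
        merged.append({**rec, **additions})
    return merged

records = [{"context": "c", "target": "t", "start_domain_specific_l1": "Other"}]
extra = [{"start_domain_specific_l1": "Other",
          "description": "Description knowledge of other domains"}]
merged = merge_records(records, extra, "start_domain_specific_l1")
```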

Duplicate Punctuation Replacement

- Applicable dataset type: Text.

- Parameter description:

field name: Name of the field from which duplicate punctuation marks are removed. Multiple fields can be configured, separated by commas (,). If this parameter is left blank, all fields are processed by default.

- Parameter configuration example:

- Conversion example

Before processing: {"context": "How's the weather today? ", "target": "It's sunny today! It is a shining day!! "}

After processing: {"context": "How's the weather today? ", "target": "It's sunny today! It is a shining day! "}

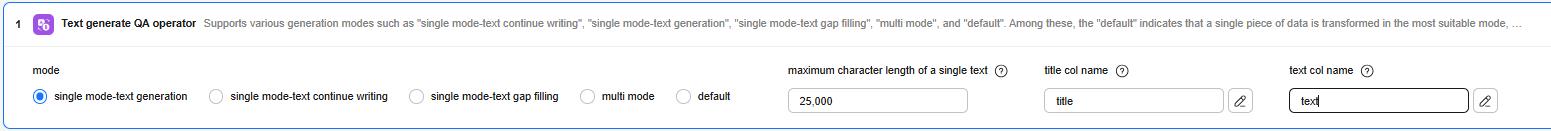
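Collapsing repeated punctuation can be expressed with a backreference. The punctuation set and the `collapse_punctuation` helper below are illustrative assumptions; note that this simple pattern would also reduce an ellipsis ("...") to a single period.

```python
import re

# A punctuation mark followed by one or more copies of itself.
DUPLICATE_RE = re.compile(r"([!?.,;:！？。，；：])\1+")

def collapse_punctuation(record, fields=None):
    """Collapse repeated punctuation in the given fields (all fields if None)."""
    names = fields or list(record)
    return {
        key: DUPLICATE_RE.sub(r"\1", value)
        if key in names and isinstance(value, str) else value
        for key, value in record.items()
    }

before = {"context": "How's the weather today? ",
          "target": "It's sunny today! It is a shining day!! "}
after = collapse_punctuation(before, fields=["target"])
```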

Text Generation QA

- Applicable dataset type: Text.

- Parameter description:

mode: Supports multiple generation modes, such as single-mode-continuation, single-mode-essay, single-mode-completion, multi-mode, and default mode. In default mode, a single data record is converted in the most suitable mode and only one QA data record is generated. In multi-mode, a single data record is converted in multiple modes and multiple QA data records are generated, which increases the diversity of data task types.

maximum character length of a single text: Indicates the maximum length of the converted answer data in a single data record. If the length exceeds this value, the data record is filtered out in essay mode and split in other modes. The recommended character lengths for the basic function models are listed below. You can adjust the length based on the actual data, but ensure that the number of tokens converted from the data does not exceed the context token length of the trained model. The recommended maximum character length for each model context token length is as follows:

32768 tokens: The maximum character length is less than or equal to 25,000 characters.

8192 tokens: The maximum character length is less than or equal to 7,500 characters.

4096 tokens: The maximum character length is less than or equal to 3,500 characters.

title col name: used to obtain the title in the data. This field is mandatory in single-mode-essay mode, and the column must exist in the data.

text col name: used to obtain the text in the data. The default value is text.

- Parameter configuration example:

- Conversion example

Before processing: {"title": "Electronic Cigarette Industry Group Responds to Scholars' Call for National Standards", "text": "Industry Feedback"}

After processing: {"context":"Please generate a professional document on the theme of \"Electronic Cigarette Industry Group Responds to Scholars' Call for National Standards\" from a professional perspective.","target":"Industry Feedback","length":4,"type":"text_generation"}

Q&A Pair Filtering

- Applicable dataset type: Text.

- Parameter description

Type of content to be filtered: Filters out Q&A pairs whose questions are not in string format, whose answers are empty, or whose answers are meaningless.

- Parameter configuration example

- Filtering example

Before processing: {"text":[{"context":"Hello","target":"Yes"},{"context":"list","target":""}]}

After processing: {"text":[{"context":"Hello","target":"Yes"}]}

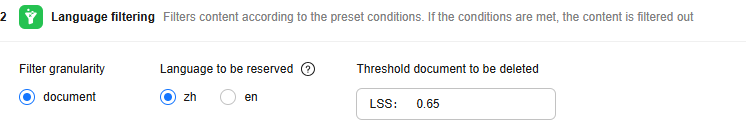
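The first two conditions are easy to express directly. The sketch below checks only that the question is a string and that the answer is a non-empty string; how the operator decides that an answer is "meaningless" is not specified, so that check is deliberately left out. The helper names are hypothetical.

```python
def is_valid_pair(pair):
    """True if the question is a string and the answer is a non-empty string."""
    question, answer = pair.get("context"), pair.get("target")
    return (isinstance(question, str)
            and isinstance(answer, str)
            and answer.strip() != "")

def filter_qa_pairs(record, field="text"):
    """Return a copy of the record that keeps only valid Q&A pairs."""
    return {**record, field: [p for p in record[field] if is_valid_pair(p)]}

before = {"text": [{"context": "Hello", "target": "Yes"},
                   {"context": "list", "target": ""}]}
after = filter_qa_pairs(before)
```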

Language Filtering

- Applicable dataset type: Text.

- Parameter description

Type of content to be filtered: Filters the content by document based on the language to be retained and the threshold for deleting documents. Documents that meet the filtering criteria are filtered out. The language to be retained is Chinese by default. English can also be selected. The default threshold for deleting documents is less than 0.65. The values can be modified.

- Parameter configuration example

- Filtering example

Before processing: {"text":"Hello, my name is Li Ming. I am excited to introduce myself and share a bit about who I am."}

After processing: {"text":""}

Global Text Deduplication

- Applicable dataset type: Text > document, web page, and pre-trained text.

- Parameter description

Type of content to be filtered: Detects and removes duplicate or highly similar text from data to prevent model overfitting or reduced generalization.

- Parameter configuration example

- Filtering example

Before processing: [{"fileName":"text1.txt","text":"It is said that the fox only appears to those with pure hearts and sincere wishes."},{"fileName":"text2.txt","text":"It is said that the fox only appears to those with pure hearts and sincere wishes."}]

After processing: {"fileName":"text1.txt","text":"It is said that the fox only appears to those with pure hearts and sincere wishes."}

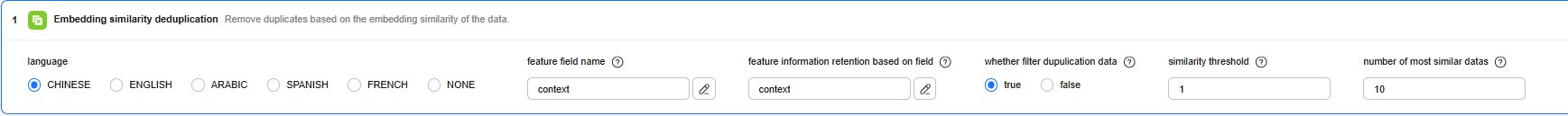
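For exact duplicates such as the pair in the example, hashing the normalized text is sufficient. The sketch below covers only exact matches after whitespace and case normalization; detecting "highly similar" text would need a similarity method that is not described here. The `dedupe_exact` helper is an assumption.

```python
import hashlib

def dedupe_exact(records, field="text"):
    """Keep the first record for each distinct normalized text value."""
    seen, kept = set(), []
    for rec in records:
        normalized = " ".join(rec[field].split()).lower()
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        kept.append(rec)
    return kept

sentence = ("It is said that the fox only appears to those with pure hearts "
            "and sincere wishes.")
docs = [{"fileName": "text1.txt", "text": sentence},
        {"fileName": "text2.txt", "text": sentence}]
unique_docs = dedupe_exact(docs)
```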

Embedding Similar Deduplication

- Applicable dataset type: Text.

- Parameter description:

language: indicates the language of the dataset.

feature field name: indicates the field from which features are obtained. It is used to calculate the embedding and select similar data. If this field is left empty, the value of the text or context field is used by default.

feature information retention based on field: After the top N similar data records are obtained based on the embedding, the record with the longest value in this field is retained. If this field is left empty, the value of the text or context field is used by default.

Whether filter duplication data: indicates whether to filter out duplicate data. If duplicate data is not filtered out, the embedding_filter field is added to the result to indicate whether the record is filtered out.

similarity threshold: When the similarity between data records is less than the threshold, the data is selected. The default value is 0.2. The value range is [0, 2.0].

number of most similar data records: number of most similar data records (top N) queried for each record. The default value is 10.

- Conversion example

Before processing:

{"context":"National Health Commission adds e-cigarettes to the list of banned products in public places","target":"Regulatory extension"}

{"context":"Dr. Li Lei (biologist): The national standard for e-cigarettes should include nicotine content limits","target":"Ingredient standards"}

{"context":"E-cigarette national standards are expected to prohibit fruit tastes from attracting teenagers.","target":"Special clauses"}

{"context":"Experts suggest adding health warnings to e-cigarette packaging.","target":"Consumer tips"}

{"context":"Consumer representatives are introduced for the first time in the formulation of e-cigarette national standards.","target":"Democratic participation"}

{"context":"An e-cigarette consumer survey shows that 80% support mandatory quality certification.","target":"Public opinion basis"}

{"context":"Research shows that unregulated e-cigarettes contain three times the heavy metals.","target":"Safety risks"}

{"context":"Research shows that second-hand e-cigarette smoke still contains carcinogens.","target":"Public health"}

{"context":"The lack of safety standards for e-cigarette batteries causes frequent accidents.","target":"Accessory risks"}

{"context":"Comparative study: Differences in e-cigarette regulatory systems between China and the United States.","target":"International comparison"}

After processing:

{"context":"National Health Commission adds e-cigarettes to the list of banned products in public places","target":"Regulatory extension"}

{"context":"Dr. Li Lei (biologist): The national standard for e-cigarettes should include nicotine content limits","target":"Ingredient standards"}

{"context":"E-cigarette national standards are expected to prohibit fruit tastes from attracting teenagers.","target":"Special clauses"}

{"context":"Consumer representatives are introduced for the first time in the formulation of e-cigarette national standards.","target":"Democratic participation"}

{"context":"An e-cigarette consumer survey shows that 80% support mandatory quality certification.","target":"Public opinion basis"}

{"context":"Research shows that unregulated e-cigarettes contain three times the heavy metals.","target":"Safety risks"}

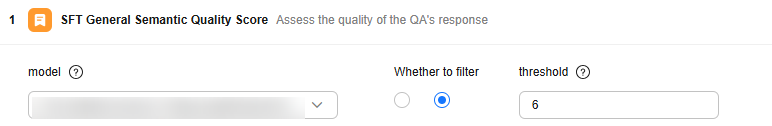

General Semantics Scoring of SFT

- Applicable dataset types: Text > single-turn Q&A, single-turn Q&A (with a persona), and Q&A sorting.

- Parameter description

- model: Select a model.

- Whether to filter: The value of this field can be true or false. The default value is false.

- threshold: The value ranges from 0.0 to 10.0. Samples whose scores are less than the threshold are filtered out. The default threshold is 6 and can be changed.

- Parameter configuration example:

- Filtering example

Before processing: [{"system":"You are an expert in calculations.","context":"Calculate the travel time based on the distance between two cities. Starting city: Beijing, destination city: Shanghai, distance: 1,318 km. \n","target":"To calculate the travel time between two cities based on the distance, we need to consider the mode of travel, as each has a different average speed. Here's a general calculation for common modes of travel between Beijing and Shanghai. High-speed train: Approximately 5-6 hours; Plane: Approximately 2 hours; Car: Approximately 13-14 hours. "},{"system":"You are a Q&A expert","context":"Find the number of occurrences of a specific keyword in a given article. \nArticle: Many people will stay up late to watch the matches during this year's World Cup. \nKeyword: World Cup\n","target":"Keyword \"World Cup\" appears once in the article. "}]

After processing: {"context":"Find the number of occurrences of a specific keyword in the given article. \nArticle: Many people will stay up late to watch the matches during this year's World Cup. \nKeyword: World Cup\n","filter":0.0,"qa_quality score":{"reason":"The large model correctly identified that the keyword \"World Cup\" appeared once in the article and provided the correct answer. ","score":10.0},"system":"You are a Q&A expert","target":"Keyword \"World Cup\" appears once in the article. "}

Note: Unfiltered data is labeled. The labeling fields include reason and score.

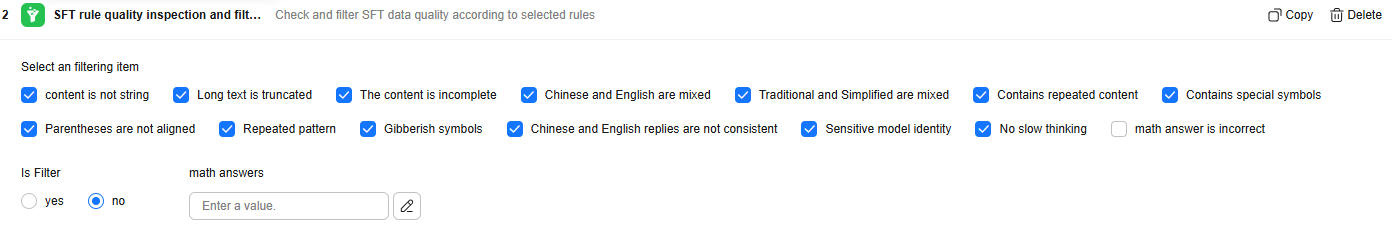

Rule-based Inspection and Filtering of SFT Data

- Applicable dataset types: Text > single-turn Q&A, single-turn Q&A (with a persona), and Q&A sorting.

- Parameter description

- Filtering item: Checks and filters SFT data based on the selected rule. The filtering items include the following: content is not string, Long text is truncated, The content is incomplete, Chinese and English are mixed, Traditional and Simplified are mixed, Contains repeated content, Contains special symbols, Parentheses are not aligned, Repeated pattern, Gibberish symbols, Chinese and English replies are not consistent, Sensitive model identity, No slow thinking, and math answer is incorrect. By default, all options are selected except the math answer is incorrect option. You can select some of the rules.

- Is Filter: The value of this field is yes or no. The default value is no.

- math answers: Set this field when math answer is incorrect is selected in the filtering items. Its value is the column name (key) that stores the correct answer in the dataset, which is used to determine whether the model answer is correct. If no matching field is found, the answer is considered incorrect by default. If math answer is incorrect is not selected, this parameter can be ignored.

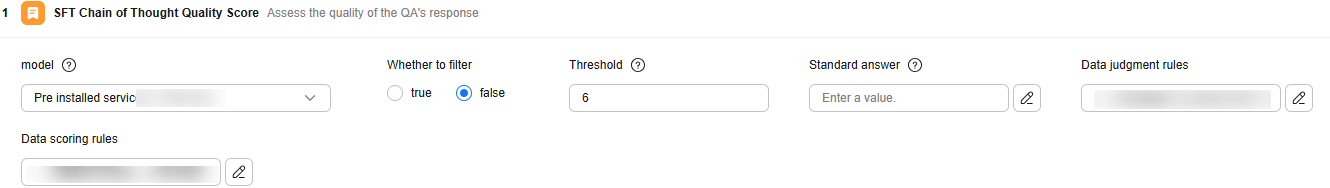
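To make a few of the filtering items more concrete, the following is a minimal sketch of how three of the rules listed above could be checked. The function names, the bracket set, and the matching logic are assumptions made for illustration only; the platform's actual rule implementations are not described on this page.

```python
import re

# Hypothetical rule checks: names and logic are illustrative assumptions,
# not the platform's actual implementation.
PAIRS = {")": "(", "]": "[", "}": "{", "）": "（"}
OPENERS = set(PAIRS.values())


def brackets_aligned(text: str) -> bool:
    """Return True if every bracket is closed in the right order."""
    stack = []
    for ch in text:
        if ch in OPENERS:
            stack.append(ch)
        elif ch in PAIRS:
            if not stack or stack.pop() != PAIRS[ch]:
                return False
    return not stack


def mixes_chinese_and_english(text: str) -> bool:
    """Return True if the text contains both CJK ideographs and Latin letters."""
    has_cjk = re.search(r"[\u4e00-\u9fff]", text) is not None
    has_latin = re.search(r"[A-Za-z]", text) is not None
    return has_cjk and has_latin


def inspect(record: dict, key: str = "target") -> list[str]:
    """Return the names of the rules that the given field violates."""
    value = record.get(key)
    if not isinstance(value, str):
        return ["content is not string"]
    hits = []
    if not brackets_aligned(value):
        hits.append("Parentheses are not aligned")
    if mixes_chinese_and_english(value):
        hits.append("Chinese and English are mixed")
    return hits


print(inspect({"context": "Simplify the expression.", "target": "f(x) = (a + b"}))
```

In this sketch, a record whose returned list is non-empty would be dropped when Is Filter is set to yes and kept otherwise.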

CoT Scoring of SFT Data

- Applicable dataset types: Text > single-turn Q&A, single-turn Q&A (with a persona), and Q&A sorting > JSONL.

- Parameter description

Type of content to be filtered: Uses the LLM to check and score the CoT of SFT data and filter data based on the scoring threshold.

- model: Select a model.

- Whether to Filter: The value of this field is true or false. The default value is false.

- Threshold: The value ranges from 0.0 to 6.0. Samples whose scores are lower than the threshold are filtered out. The default value is 6.0 and can be modified.

- Standard answer: On the GUI, enter the name of the field that holds the standard answer. The operator compares the model answer with the value of this field. If the operator cannot match the field name, the model treats the question as having no correct answer.

- Data judgment rules: The value can be edited and contains a maximum of 1000 characters.

- Data scoring rules: The value can be edited and contains a maximum of 1000 characters.

- Parameter configuration example:

- Filtering example

Before processing: {"context":"Context","targets":["hello","hi","hello"]}

After processing: {"context":"Context","targets":["hello","hi","hello"],"qa_cot_score":{"score":[{"result":"Incorrect","score":0.0,"reason":"[The model's answer is irrelevant.]"},{"result":"Incorrect","score":0.0,"reason":"The answer is irrelevant."},{"result":"Incorrect","score":0.0,"reason":"[The model's answer is irrelevant.]"}]}}

Note: Unfiltered data is labeled. The labeling fields include result, score, and reason.
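The threshold behavior can be reproduced offline on labeled output. The sketch below reads the qa_cot_score field shown in the example and applies a threshold. The helper name keep_sample is hypothetical, and two choices are assumptions rather than documented behavior: a sample with several answers is kept when its best score reaches the threshold, and a sample without a label is dropped.

```python
def keep_sample(record: dict, threshold: float = 6.0) -> bool:
    """Decide whether a labeled sample survives threshold filtering.

    Assumption: for samples with several answers, the highest score counts.
    Assumption: samples that carry no qa_cot_score label are dropped.
    """
    entries = record.get("qa_cot_score", {}).get("score", [])
    if not entries:
        return False
    best = max(entry["score"] for entry in entries)
    # Scores lower than the threshold are filtered out; equal scores are kept.
    return best >= threshold


labeled = {
    "context": "Context",
    "targets": ["hello", "hi", "hello"],
    "qa_cot_score": {
        "score": [
            {"result": "Incorrect", "score": 0.0, "reason": "The answer is irrelevant."},
            {"result": "Incorrect", "score": 0.0, "reason": "The answer is irrelevant."},
            {"result": "Incorrect", "score": 0.0, "reason": "The answer is irrelevant."},
        ]
    },
}

print(keep_sample(labeled))                 # every score is below 6.0
print(keep_sample(labeled, threshold=0.0))  # 0.0 is not lower than 0.0
```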

QA Pair Deduplication

- Applicable dataset types: Text > single-turn Q&A and single-turn Q&A (with a persona) > JSONL.

- Parameter description:

The filtering metrics include the n-gram value, similarity threshold, and minimum number of tokens in the text.

- filter column: The column used for deduplication. You can select the question (context), the answer (target), or both. The default value is context.

- ngram size: The tokenization granularity. The default value is 1 and can be modified.

- threshold: The similarity threshold. The value ranges from 0 to 1, and the default value is 0.7. A smaller value filters out more data and increases the risk of removing records that are not true duplicates. A larger value filters out less data and may leave duplicates in the dataset.

- min length: The minimum number of tokens in the text. Text with fewer tokens than this value is filtered out directly. The default value is 3.
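The interaction between ngram size, threshold, and min length can be illustrated with a small sketch. It assumes whitespace tokenization and Jaccard similarity over token n-grams, and it keeps the first record of each near-duplicate group. The operator's actual tokenizer and similarity measure are not specified on this page, so treat every function here as hypothetical.

```python
def ngrams(tokens: list[str], n: int) -> set[tuple[str, ...]]:
    """Return the set of n-grams built from consecutive tokens."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of the intersection over size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def deduplicate(records, column="context", ngram_size=1, threshold=0.7, min_length=3):
    """Keep the first record of each near-duplicate group (assumed strategy)."""
    kept, kept_grams = [], []
    for record in records:
        tokens = record[column].lower().split()  # assumption: whitespace tokens
        if len(tokens) < min_length:
            continue  # too short: filtered out directly
        grams = ngrams(tokens, ngram_size)
        if any(jaccard(grams, seen) >= threshold for seen in kept_grams):
            continue  # similar enough to an earlier record: duplicate
        kept.append(record)
        kept_grams.append(grams)
    return kept


records = [
    {"context": "where is the capital of china", "target": "Beijing"},
    {"context": "where is the capital city of china", "target": "Beijing"},
    {"context": "hi there", "target": "Hello"},
    {"context": "how far is shanghai from beijing", "target": "About 1,318 km"},
]
for row in deduplicate(records):
    print(row["context"])
```

With the default values, the second record shares 6 of 7 distinct tokens with the first (similarity of about 0.86) and is removed, and the third record is removed because it has fewer than 3 tokens.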

Prohibited Text Detection

- Applicable dataset types: Q&A sorting, single-turn Q&A, and single-turn Q&A (with a persona) > JSONL.

- Parameter description: If Yes is selected, the filtering operator is used. Otherwise, the filtering operator is not used.

- Parameter configuration example:

- Filtering example

{"text": "Do you have QQ sales shareholder data?"}

After marking:

{"text":"Do you have QQ sales shareholder data?","text_ban_moderation":{"suggestion":"block","details":{"confidence":1.0,"label":"violation_info","risk_level":2,"segments":[{"segment":"QQ sales shareholder data"},{"segment":"Shareholder data"},{"segment":"Shareholder data & sales"},{"segment":"Sales shareholder data"}],"suggestion":"block"}}}

suggestion indicates whether the file passes the check. pass indicates that the file passes the check and no problem occurs. review indicates that manual review is required. You can choose to bypass or block the file based on your review policy. block indicates that the file to be reviewed is problematic.
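Downstream code can route labeled records by their suggestion value. The following sketch groups records into pass, review, and block buckets. The helper name and the decision to send records without a label to review are assumptions; the field names text_ban_moderation and text_ad_moderation are taken from the examples on this page.

```python
def split_by_suggestion(records: list[dict], field: str) -> dict[str, list[dict]]:
    """Group labeled records by the top-level suggestion of a moderation field."""
    buckets = {"pass": [], "review": [], "block": []}
    for record in records:
        # Assumption: records without a label are sent to manual review.
        suggestion = record.get(field, {}).get("suggestion", "review")
        buckets.setdefault(suggestion, []).append(record)
    return buckets


samples = [
    {"text": "Do you have QQ sales shareholder data?",
     "text_ban_moderation": {"suggestion": "block"}},
    {"text": "Where is the capital of China?",
     "text_ban_moderation": {"suggestion": "pass"}},
    {"text": "A record that was never labeled"},
]
buckets = split_by_suggestion(samples, "text_ban_moderation")
print({name: len(rows) for name, rows in buckets.items()})
```

The same helper applies to the other detection operators by passing their field name, for example text_ad_moderation.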

Personal Privacy Identification

- Applicable dataset types: Q&A sorting, single-turn Q&A, and single-turn Q&A (with a persona) > JSONL.

- Parameter description: If Yes is selected, the filtering operator is used. Otherwise, the filtering operator is not used.

- Parameter configuration example:

- Filtering example

{"text": "You save my MAC address: 20-6E-D4-88-F3-98"}

After marking:

{"text": "You save my MAC address: 20-6E-D4-88-F3-98","text_pii_moderation":{"suggestion":"block","details":[{"start":33,"end":50,"length":17,"data":"20-6E-D4-88-F3-98","category":"MAC_ADDRESS"}]}}

suggestion indicates whether the file passes the check. pass indicates that the file passes the check and no problem occurs. review indicates that manual review is required. You can choose to bypass or block the file based on your review policy. block indicates that the file to be reviewed is problematic.
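This operator only labels the detected items. If you want to mask them afterwards, a minimal sketch could look like the following. It matches on the data value of each details entry rather than on the start and end offsets, because this page does not state how the offsets are counted. The helper name and the placeholder format are assumptions.

```python
def mask_pii(record: dict, text_key: str = "text",
             field: str = "text_pii_moderation") -> str:
    """Replace every detected value with a <CATEGORY> placeholder."""
    masked = record[text_key]
    for hit in record.get(field, {}).get("details", []):
        # Assumption: the placeholder format <CATEGORY> is illustrative only.
        masked = masked.replace(hit["data"], f"<{hit['category']}>")
    return masked


labeled = {
    "text": "You save my MAC address: 20-6E-D4-88-F3-98",
    "text_pii_moderation": {
        "suggestion": "block",
        "details": [
            {"start": 33, "end": 50, "length": 17,
             "data": "20-6E-D4-88-F3-98", "category": "MAC_ADDRESS"}
        ],
    },
}
print(mask_pii(labeled))  # You save my MAC address: <MAC_ADDRESS>
```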

Junk Text Detection

- Applicable dataset types: Q&A sorting, single-turn Q&A, and single-turn Q&A (with a persona) > JSONL.

- Parameter description: If Yes is selected, the filtering operator is used. Otherwise, the filtering operator is not used.

- Parameter configuration example:

- Filtering example

{"text": "[Kaiyuan fake certificate 848777596_qq Hefei fake certificate uhc0tm] What does it mean_Kaiyuan false certificate 848777596_qq Hefei false certificate uhc0tm Translation_Phonetic mark_Pronunciation_Usage_Example sentence_Online translation_Youdao dictionary"}

After marking:

{"text":"[Kaiyuan fake certificate 848777596_qq Hefei fake certificate uhc0tm] What does it mean_Kaiyuan false certificate 848777596_qq Hefei false certificate uhc0tm Translation_Phonetic mark_Pronunciation_Usage_Example sentence_Online translation_Youdao dictionary","text_spam_moderation":{"details":[{"confidence":1.0,"label":"abuse","risk_level":2,"segments":[{"segment":"tm's"}],"suggestion":"block"}],"suggestion":"block"}}

suggestion indicates whether the file passes the check. pass indicates that the file passes the check and no problem occurs. review indicates that manual review is required. You can choose to bypass or block the file based on your review policy. block indicates that the file to be reviewed is problematic.

Ad Text Detection

- Applicable dataset types: Q&A sorting, single-turn Q&A, and single-turn Q&A (with a persona) > JSONL.

- Parameter description: If Yes is selected, the filtering operator is used. Otherwise, the filtering operator is not used.

- Parameter configuration example:

- Filtering example

{"context":"On sale for inventory clearance. All items are only CNY2.","target":"The price is cheap."}

After marking:

{"context":"On sale for inventory clearance. All items are only CNY2.","target":"The price is cheap.","text_ad_moderation":{"details":[],"suggestion":"pass"}}

suggestion indicates whether the file passes the check. pass indicates that the file passes the check and no problem occurs. review indicates that manual review is required. You can choose to bypass or block the file based on your review policy. block indicates that the file to be reviewed is problematic.

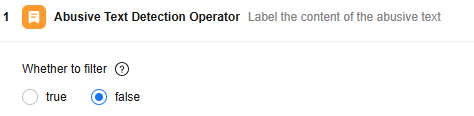

Abuse Text Detection

- Applicable dataset types: Q&A sorting, single-turn Q&A, and single-turn Q&A (with a persona) > JSONL.

- Parameter description: If Yes is selected, the filtering operator is used. Otherwise, the filtering operator is not used.

- Parameter configuration example:

- Filtering example

{"text":"Who wants to die with you? Die by yourself."}

After marking:

{"text":"Who wants to die with you? Die by yourself.","text_abuse_moderation":{"details":[{"confidence":0.9998,"label":"abuse","risk_level":2,"segments":[],"suggestion":"block"}],"suggestion":"block"}}

suggestion indicates whether the file passes the check. pass indicates that the file passes the check and no problem occurs. review indicates that manual review is required. You can choose to bypass or block the file based on your review policy. block indicates that the file to be reviewed is problematic.

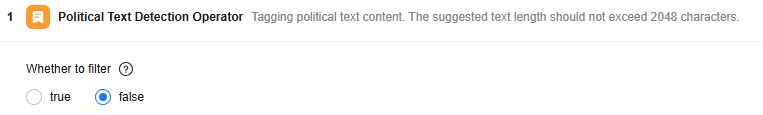

Politically Sensitive Text Detection

- Applicable dataset types: Q&A sorting, single-turn Q&A, and single-turn Q&A (with a persona) > JSONL.

- Parameter description: If Yes is selected, the filtering operator is used. Otherwise, the filtering operator is not used.

- Parameter configuration example:

- Filtering example

{"text":"However, the Chinese Communist authorities never disdained the explanation of these doubts from netizen, but directly blocked them."}

After marking:

{"text":"However, the Chinese Communist authorities never disdained the explanation of these doubts from netizen, but directly blocked them.","text_polInfo_moderation":{"suggestion":"block","details":"[{'confidence': 1.0, 'label': 'politics', 'risk_level': 3, 'segments': [{'segment': 'Chinese Communist authorities'}],'suggestion': 'block'}]"}}

suggestion indicates whether the file passes the check. pass indicates that the file passes the check and no problem occurs. review indicates that manual review is required. You can choose to bypass or block the file based on your review policy. block indicates that the file to be reviewed is problematic.

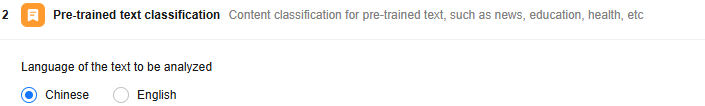
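In the examples on this page, the details field appears in three shapes: a list of objects, a single object, and (in the example above) a string that holds a Python-style literal with single quotes. A consumer that handles all detection operators may want to normalize them first. The sketch below is one way to do that; the helper name is hypothetical, and whether real output always matches these shapes is an assumption.

```python
import ast
import json


def load_details(value) -> list:
    """Normalize a details value to a list of dictionaries."""
    if isinstance(value, list):
        return value
    if isinstance(value, dict):
        return [value]
    if isinstance(value, str):
        try:
            parsed = json.loads(value)  # valid JSON text
        except json.JSONDecodeError:
            # Single-quoted Python-style literal; literal_eval does not
            # execute code, it only parses literals.
            parsed = ast.literal_eval(value)
        return parsed if isinstance(parsed, list) else [parsed]
    return []


raw = "[{'confidence': 1.0, 'label': 'politics', 'risk_level': 3, 'suggestion': 'block'}]"
for item in load_details(raw):
    print(item["label"], item["risk_level"])
```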

Pre-trained Text Classification

- Applicable dataset type: Document > pre-trained text.

- Parameter description

Type of content to be labeled: Classifies the content of the pre-trained text, for example, news, education, and health. The supported languages include Chinese and English. The default language is Chinese.

- Parameter configuration example

- Example

{"fileName":"News Labeling Test .docx","text":"Beijing, March 3 (Xinhua) According to the People's Bank of China, the financial market operation in January showed that China issued a total of CNY5,102.75 billion in bonds in January. Of which, CNY1,018.5 billion in government bonds, CNY557.57 billion in local government bonds, CNY704.21 billion in financial bonds, CNY1,279.17 billion in corporate credit bonds, CNY2.73 billion in asset-backed securities, and CNY1,514.78 billion in interbank certificates of deposit. \n As of the end of January, the bond market custody balance in China was CNY178.2 trillion. Of which, the custody balance of the interbank market was CNY156.9 trillion, and that of the exchange market was CNY21.3 trillion. \nAs of the end of January, the custody balance of foreign institutions in the Chinese bond market was CNY4.2 trillion, accounting for 2.3% of the custody balance of the Chinese bond market. Of which, the bond custody balance of foreign institutions in the interbank bond market was CNY4.1 trillion. By bond type, foreign institutions held CNY2.0 trillion of government bonds (48.8%), CNY1.1 trillion of certificates of deposit (25.8%), and CNY0.9 trillion of policy bank bonds (20.8%). \n","pre_classification":"Economy"}

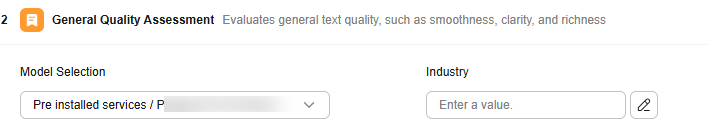

General Quality Assessment

- Applicable dataset type: Text > pre-trained text.

- Parameter description

Type of content to be labeled: Assesses the general quality of text, such as fluency, clarity, and diversity. You need to select a model and an industry. The industry can be manually entered. The maximum score is 5.

- Parameter configuration example

- Example

{"fileName":"News Labeling Test .docx","text":"Beijing, March 3 (Xinhua) According to the People's Bank of China, the financial market operation in January showed that China issued a total of CNY5,102.75 billion in bonds in January. Of which, CNY1,018.5 billion in government bonds, CNY557.57 billion in local government bonds, CNY704.21 billion in financial bonds, CNY1,279.17 billion in corporate credit bonds, CNY2.73 billion in asset-backed securities, and CNY1,514.78 billion in interbank certificates of deposit. \n As of the end of January, the bond market custody balance in China was CNY178.2 trillion. Of which, the custody balance of the interbank market was CNY156.9 trillion, and that of the exchange market was CNY21.3 trillion. \nAs of the end of January, the custody balance of foreign institutions in the Chinese bond market was CNY4.2 trillion, accounting for 2.3% of the custody balance of the Chinese bond market. Of which, the bond custody balance of foreign institutions in the interbank bond market was CNY4.1 trillion. By bond type, foreign institutions held CNY2.0 trillion of government bonds (48.8%), CNY1.1 trillion of certificates of deposit (25.8%), and CNY0.9 trillion of policy bank bonds (20.8%). \n","generalscore":{"Instructiveness":"5","Cleanliness":"5","isIncorrect":"false","Toxicity":"false","Richness":"5","Fluency":"5","knowledge":"5"}}

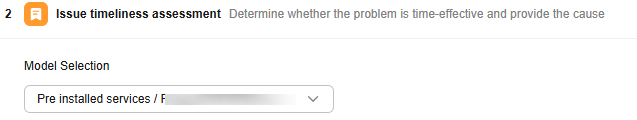
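The values in generalscore are strings in the example above, so they usually need conversion before they can be compared or averaged. The following sketch converts them and computes a mean over the numeric dimensions. The helper names are hypothetical, and the assumption that every non-boolean value is numeric is based only on this single example.

```python
def parse_general_score(raw: dict) -> dict:
    """Convert string values to bool or float."""
    parsed = {}
    for name, value in raw.items():
        text = str(value).strip().lower()
        if text in ("true", "false"):
            parsed[name] = text == "true"
        else:
            # Assumption: every other value is a numeric rating.
            parsed[name] = float(text)
    return parsed


def mean_rating(parsed: dict):
    """Average the numeric ratings, ignoring boolean flags."""
    ratings = [v for v in parsed.values() if not isinstance(v, bool)]
    return sum(ratings) / len(ratings) if ratings else None


general = {"Instructiveness": "5", "Cleanliness": "5", "isIncorrect": "false",
           "Toxicity": "false", "Richness": "5", "Fluency": "5", "knowledge": "5"}
scores = parse_general_score(general)
print(scores["Toxicity"], mean_rating(scores))  # False 5.0
```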

Problem Timeliness Evaluation

- Applicable dataset type: Text > single-turn Q&A.

- Parameter description

Type of content to be labeled: Determines whether the question is time-sensitive and provides the reason. You need to select a model. The score can only be 0 or 1. The value 1 indicates that the question is time-sensitive, and the value 0 indicates that it is not.

- Parameter configuration example

- Example

{"context":"1-1-2 Where is the capital of Jiangsu Province?","target":"Nanjing","timeliness_classification":0}

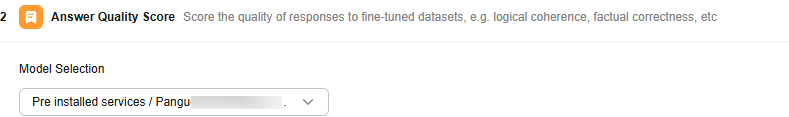

Answer Quality Scoring

- Applicable dataset type: Text > single-turn Q&A.

- Parameter description

Type of content to be labeled: Scores the quality of answers to fine-tuning datasets, such as logical consistency and fact correctness. You need to select a model.

- Parameter configuration example

- Example

{"context":"1-2-1 Where is the capital of China? ","target":"Beijing","answer score":{"Logical coherence":10,"Comprehensive score":10,"User requirements satisfied":10,"Completeness":10,"Fact correctness":10}}

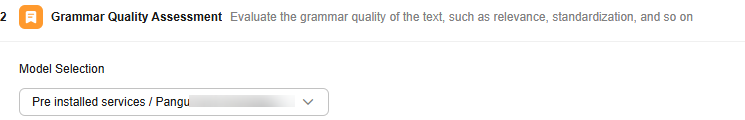

Syntax Quality Evaluation

- Applicable dataset type: Text > single-turn Q&A.

- Parameter description

Type of content to be labeled: Evaluates the syntax quality of the text, for example, relevance and standardization. You need to select a model.

- Parameter configuration example

- Example

{"target":"Beijing","context":"1-2-1 Where is the capital of China?","grammar score":{"Unrelated reply":0,"Fact error":0,"Logical error":0,"Non-standard language":0,"Sentence truncation":0,"Improper multi-language mixing":0,"Meaningless repetition":0}}

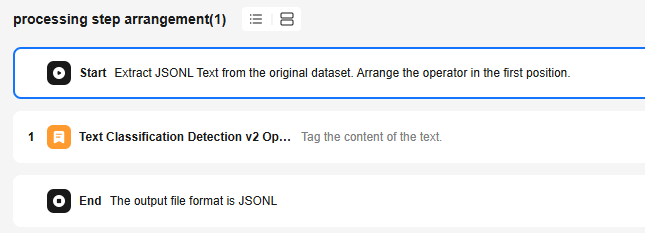

Text Classification Label v2

- Applicable dataset types: Text > pre-trained text, single-turn Q&A, single-turn Q&A (with a persona), and Q&A sorting.

- Parameter description:

Type of content to be labeled: Classifies the text, such as cars, sports, and healthcare. The supported languages include Chinese and English.

- Parameter configuration example:

- Example

{"text":"Author: Wang Junli, Wu Meng, Ultrasound Department, Zhongnan Hospital of Wuhan University\nFemale patient, 28 years old, found a right breast mass for half a year, and visited the hospital due to pain 6 days ago. The cesarean section was performed 3 years ago. Physical examination: No fever, no skin erythema or swelling in the breast area, and no nipple discharge were observed. A 2 cm mass was palpable in the lower outer quadrant of the right breast, with firm consistency and marked tenderness. No enlarged lymph nodes were palpable in the right axilla. \nUltrasonic Examination: An irregular hypoechoic area measuring approximately 2.1 cm × 1.4 cm × 1.1 cm is observed in the lower quadrant of the right breast, with unclear boundaries (Figure 1). CDFI shows minimal blood flow signals, presenting an arterial spectrum with a resistance index of 0.64. \nUltrasound finding: A solid lesion in the lower outer quadrant of the right breast (BI-RADS 4b; inflammatory changes cannot be excluded). MRI examination: An irregular high-signal mass was observed in the lower outer quadrant of the right breast on T2 fat-suppressed sequence (Fig. 2), with marked heterogeneous signal intensity and enhancement. The time-signal intensity curve showed a washout pattern. \nMRI finding: An irregular mass in the lower outer quadrant of the right breast. Biopsy is recommended. Molybdenum target examination: Irregular high-density mass effect (BI-RADS 4b) was found in the post-milk gap and thoracic muscle surface in the lower right milk quadrant. The patient underwent right breast excision and fascial tissue flap reconstruction. Pathological findings: Shuttle cell tumor (Figure 3); combined with morphological and immunohistochemical analysis, the diagnosis suggests invasive fibromatosis of the breast. \nDiscussion\nInvasive breast fibromatosis is a tumor that originates within the breast, accounting for approximately 0.2% of all breast disease cases. The cause is not clear, and it is often associated with genetic predisposition, surgery, or estrogen. It is common in women of childbearing age, and there are no characteristic clinical manifestations. \nThe pathological structure is characterized by the proliferation of fibroblasts, myofibroblasts, and collagen fibers. Immunohistochemical analysis reveals that the tumor cells are positive for Vimentin and SMA. Ultrasound reveals a hypoechoic mass with an irregular shape and indistinct borders, often appearing sawtooth or horn-like. The mass typically extends along the long axis and may involve the posterior chest muscle layer. There are minimal internal blood flow signals. \nCurrently, this disease is mainly treated by surgery. Patients need to follow up for a long time after surgery. If the disease recurs, they need to perform surgery again or use radiotherapy or hormone treatment. This disease needs to be differentiated from spindle cell carcinoma and scar tissue. ","tag":null,"domain_specific_l1":"medicine\/medical","domain_specific_l2":"oncology","domain_specific_confidence":0.429}

Science Technology Text Detection

- Applicable dataset types: Text > pre-trained text, single-turn Q&A, single-turn Q&A (with a persona), and Q&A sorting.

- Parameter description:

Type of content to be labeled: Detects the industry of the text and whether the text is scientific data, such as report analysis in the finance industry and technical research in the healthcare industry. You need to select a model. Pangu-NLP-N1-32K-3.2.36.3 is recommended.

- Parameter configuration example:

- Example

{"system":"Witty and humorous","context":"Additionally, incomplete fitting of the stent components may further increase the risk of thrombosis and restenosis. \nAdditionally, the incomplete apposition of stent components can further exacerbate risks associated with thrombosis and restenosis.","target":"ccccccc","ori_response":"It belongs to medical science.<|im_end|>","industry_category": "Medical","science_classification": "Science and technology data"}
