Migrating Incremental Data

Migrate incremental data, that is, data added, modified, or deleted in the source databases since the previous migration was completed, to Huawei Cloud DLI.

Precautions

- MgC allows you to narrow the migration scope to specific partitions in MaxCompute using a template. You must enter table names in the template in lowercase letters, because MaxCompute converts table names to lowercase during table creation. If the table names in the template are in uppercase, the tables cannot be identified and the migration will fail.

- Querying Information Schema of MaxCompute with SQL statements returns data that lags by about 3 hours, because Information Schema is not updated in real time. As a result, a table newly added to the source database may not appear in Information Schema immediately, which can lead to inconsistencies in the data tables after migration.

Prerequisites

- You have completed all preparations.

- A source connection has been created.

- Target connections have been created.

- At least one full data migration has been completed.

- You have been added to the whitelist that allows JAR programs to access DLI metadata. If you have not been added, contact technical support.

Procedure

- Sign in to the MgC console. In the navigation pane, under Project, select your big data migration project from the drop-down list.

- In the navigation pane on the left, choose Migrate > Big Data Migration.

- In the upper right corner of the page, click Create Migration Task.

- Select MaxCompute for Source Component, Data Lake Insight (DLI) for Target Component, Incremental data migration for Task Type, and click Next.

- Configure parameters required for creating an incremental data migration task based on Table 1.

Table 1 Parameters for creating an incremental data migration task

Columns: Area, Parameter, Configuration

Basic Settings

Task Name

The default name is Incremental-data-migration-from-MaxCompute-to-DLI- followed by 4 random characters (letters and digits). You can also enter a custom name.

MgC Agent

Select the MgC Agent you connected to MgC in Preparations.

Source Settings

Source Connection

Select the source connection you created.

MaxCompute Parameters (Optional)

The parameters are optional and usually left blank. If needed, you can configure the parameters by referring to MaxCompute Documentation.

Data Scope

Migration Filter

- Time: Select which incremental data to migrate based on when it was last changed. If you select this option, you need to set parameters such as Time Range, Filter Partitions, and Define Scope.

- Custom: Migrate incremental data in specified partitions. If you select this option, perform the following steps to specify the partitions to be migrated:

- Under Include Partitions, click Download Template to download the template in CSV format.

- Open the downloaded CSV template file with Notepad.

CAUTION:

Do not use Excel to edit the CSV template file. The template file edited and saved in Excel cannot be identified by MgC.

- Retain the first line in the CSV template file. From the second line onwards, enter the information about tables to be migrated in the format of {MaxCompute-project-name},{table-name},{partition-field},{partition-key}. {MaxCompute-project-name} refers to the name of the MaxCompute project where the data to be migrated is managed. {table-name} refers to the data table to be migrated.

NOTICE:

- Use commas (,) to separate elements in each line. Do not use spaces or other separators.

- After adding the information about a table, press Enter to start a new line.

- After all table information is added, save the changes to the CSV file.

- Under Include Partitions, click Add File and upload the edited and saved CSV file to MgC.
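As an alternative to hand-editing in Notepad, the rows can be generated with a short script. The following is a minimal sketch, not an MgC tool: the function name, the file path, and the example project, table, and partition values are hypothetical, and UTF-8 encoding is an assumption. It relies only on the line format described above and on the lowercase rule for table names from Precautions.

```python
from pathlib import Path


def append_partition_rows(template_path, rows):
    """Append {project},{table},{partition-field},{partition-key} lines.

    The existing content of the template, including its first line, is kept.
    """
    path = Path(template_path)
    text = path.read_text(encoding="utf-8")  # encoding is an assumption
    lines = []
    for project, table, field, key in rows:
        # Trim each element so no spaces surround the commas,
        # and lowercase the table name as required in Precautions.
        parts = [project.strip(), table.strip().lower(), field.strip(), key.strip()]
        if any(not p or "," in p for p in parts):
            raise ValueError(f"invalid row: {parts}")
        lines.append(",".join(parts))
    # Make sure the appended rows start on a new line.
    if text and not text.endswith("\n"):
        text += "\n"
    path.write_text(text + "\n".join(lines) + "\n", encoding="utf-8")
```

For example, `append_partition_rows("template.csv", [("my_project", "Sales_Orders", "dt", "20240606")])` would add the line `my_project,sales_orders,dt,20240606` below the existing content. All four values here are placeholders.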

Time Range

Select a T-N option to limit the migration to the incremental data generated within a specific time period (24 × N hours) before the task start time (T). For example, if you select T-1 and the task was executed at 14:50 on June 6, 2024, the system migrates incremental data generated from 14:50 on June 5, 2024 to 14:50 on June 6, 2024.

If you select Specified Date, only incremental data generated on the specified date is migrated.
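The T-N window can be checked with a few lines of arithmetic. This is a minimal sketch of the rule stated above (a window of 24 × N hours ending at the task start time T). The function name is hypothetical, and how MgC treats the exact boundary instants is not specified here.

```python
from datetime import datetime, timedelta


def t_minus_n_window(task_start, n):
    """Return (start, end) of the 24 x N hour window that ends at task start time T."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return task_start - timedelta(hours=24 * n), task_start


# T-1 for a task executed at 14:50 on June 6, 2024
start, end = t_minus_n_window(datetime(2024, 6, 6, 14, 50), 1)
print(start, "to", end)  # 2024-06-05 14:50:00 to 2024-06-06 14:50:00
```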

Filter Partitions

Decide whether to filter partitions to be migrated by update time or by creation time. The default value is By update time.

- Select By update time to migrate the data that was changed in the specified period.

- Select By creation time to migrate the data that was created in the specified period.

By database

Enter the names of databases where incremental data needs to be migrated in the Include Databases text box. Click Add to add more entries. A maximum of 10 databases can be added. If you do not enter a database name, all source databases will be migrated by default.

To exclude certain tables from the migration, download the template in CSV format, add information about these tables to the template, and upload the template to MgC. For details, see steps 2 to 5 under By table.

By table

- Download the template in CSV format.

- Open the downloaded CSV template file with Notepad.

CAUTION:

Do not use Excel to edit the CSV template file. The template file edited and saved in Excel cannot be identified by MgC.

- Retain the first line in the CSV template file. From the second line onwards, enter the information about tables to be migrated in the format of {MaxCompute-project-name},{table-name}. {MaxCompute-project-name} refers to the name of the MaxCompute project to be migrated. {table-name} refers to the data table to be migrated.

NOTICE:

- Use commas (,) to separate the MaxCompute project name and the table name in each line. Do not use spaces or other separators.

- After adding the information about a table, press Enter to start a new line.

- After all table information is added, save the changes to the CSV file.

- Upload the edited and saved CSV file to MgC.
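Before uploading, the edited file can be checked against the formatting rules above. The following is a minimal sketch under stated assumptions: the function name is hypothetical, the check is not part of MgC, and applying the lowercase rule from Precautions to this table list is an assumption.

```python
def check_table_lines(csv_text):
    """Return a list of (line_number, problem) for lines after the first line."""
    problems = []
    # The first line of the template is retained as-is, so start at line 2.
    for number, line in enumerate(csv_text.splitlines()[1:], start=2):
        if not line:
            continue  # ignore empty lines, such as a trailing one
        fields = line.split(",")
        if len(fields) != 2 or not all(fields):
            problems.append((number, "expected {MaxCompute-project-name},{table-name}"))
        elif any(ch.isspace() for ch in line):
            problems.append((number, "contains whitespace"))
        elif fields[1] != fields[1].lower():
            problems.append((number, "table name is not lowercase"))
    return problems
```

An empty result means every line has exactly two comma-separated elements, no spaces, and a lowercase table name.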

Target Settings

Target Connection

Select the DLI connection with a general queue created in Creating a Target Connection.

CAUTION: Do not select a DLI connection with a SQL queue configured.

Custom Parameters

Set the parameters as required. For details about the supported custom parameters, see Configuration parameters and Custom parameters.

- If the migration is performed over the Internet, set the following four parameters:

- spark.dli.metaAccess.enable: Enter true.

- spark.dli.job.agency.name: Enter the name of the DLI agency you configured.

- mgc.mc2dli.data.migration.dli.file.path: Enter the OBS path for storing the migration-dli-spark-1.0.0.jar package. For example, obs://mgc-test/data/migration-dli-spark-1.0.0.jar

- mgc.mc2dli.data.migration.dli.spark.jars: Enter the OBS paths of the fastjson-1.2.54.jar and datasource.jar packages. The value is passed as an array. Each path must be enclosed in double quotation marks, and paths must be separated with commas (,). For example: ["obs://mgc-test/data/datasource.jar","obs://mgc-test/data/fastjson-1.2.54.jar"]

- If the migration is performed over a private network, set the following eight parameters:

- spark.dli.metaAccess.enable: Enter true.

- spark.dli.job.agency.name: Enter the name of the DLI agency you configured.

- mgc.mc2dli.data.migration.dli.file.path: Enter the OBS path for storing the migration-dli-spark-1.0.0.jar package. For example, obs://mgc-test/data/migration-dli-spark-1.0.0.jar

- mgc.mc2dli.data.migration.dli.spark.jars: Enter the OBS paths of the fastjson-1.2.54.jar and datasource.jar packages. The value is passed as an array. Each path must be enclosed in double quotation marks, and paths must be separated with commas (,). For example: ["obs://mgc-test/data/datasource.jar","obs://mgc-test/data/fastjson-1.2.54.jar"]

- spark.sql.catalog.mc_catalog.tableWriteProvider: Enter tunnel.

- spark.sql.catalog.mc_catalog.tableReadProvider: Enter tunnel.

- spark.hadoop.odps.end.point: Enter the VPC endpoint of the region where the source MaxCompute service is provisioned. For details about the MaxCompute VPC endpoint in each region, see Endpoints in different regions (VPC). For example, if the source MaxCompute service is located in Hong Kong, China, enter http://service.cn-hongkong.maxcompute.aliyun-inc.com/api.

- spark.hadoop.odps.tunnel.end.point: Enter the VPC Tunnel endpoint of the region where the source MaxCompute service is located. For details about the MaxCompute VPC Tunnel endpoint in each region, see Endpoints in different regions (VPC). For example, if the source MaxCompute service is located in Hong Kong, China, enter http://dt.cn-hongkong.maxcompute.aliyun-inc.com.
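To keep the two parameter sets consistent, the values can be assembled in a script before they are entered on the console. This is a minimal sketch only: the function name and the agency name `dli_agency` are hypothetical, and the script does not call any MgC API. It simply builds the four or eight key-value pairs listed above and formats the JAR list as an array of double-quoted paths.

```python
import json


def build_custom_parameters(agency_name, migration_jar, extra_jars, private_endpoints=None):
    """Return the custom parameters as a dict of parameter name to value."""
    params = {
        "spark.dli.metaAccess.enable": "true",
        "spark.dli.job.agency.name": agency_name,
        "mgc.mc2dli.data.migration.dli.file.path": migration_jar,
        # Array format: double-quoted paths separated by commas, without spaces.
        "mgc.mc2dli.data.migration.dli.spark.jars": json.dumps(extra_jars, separators=(",", ":")),
    }
    if private_endpoints is not None:
        # Private network: four more parameters, eight in total.
        endpoint, tunnel_endpoint = private_endpoints
        params.update({
            "spark.sql.catalog.mc_catalog.tableWriteProvider": "tunnel",
            "spark.sql.catalog.mc_catalog.tableReadProvider": "tunnel",
            "spark.hadoop.odps.end.point": endpoint,
            "spark.hadoop.odps.tunnel.end.point": tunnel_endpoint,
        })
    return params


params = build_custom_parameters(
    "dli_agency",  # hypothetical agency name
    "obs://mgc-test/data/migration-dli-spark-1.0.0.jar",
    ["obs://mgc-test/data/datasource.jar", "obs://mgc-test/data/fastjson-1.2.54.jar"],
    private_endpoints=(
        "http://service.cn-hongkong.maxcompute.aliyun-inc.com/api",
        "http://dt.cn-hongkong.maxcompute.aliyun-inc.com",
    ),
)
for name, value in params.items():
    print(f"{name} = {value}")
```

Omitting `private_endpoints` yields only the four parameters needed for migration over the Internet.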

Migration Settings

Large Table Migration Rules

NOTE: This parameter is available only when Migration Filter is set to Time.

Control how a large table is split into multiple migration subtasks. You are advised to retain the default settings, but you can change them as needed.

Decide whether to select Split Subtasks by Minimum Partition Change. If this option is selected, a migration subtask will be created for each lowest-level incremental partition.

Subtask Merging Rules

Define the logic for generating subtasks. When the combined size of partitions or tables in a subtask reaches the limit you configure here, a new subtask is generated. The process then repeats, with accumulation continuing until the limit is reached again.

For example, if the limit is set to 1 GB, when the combined size of some partitions or tables in a subtask reaches 1 GB, the system will assign these partitions or tables to the current subtask. The remaining partitions or tables will be split into a new subtask, with accumulation continuing until 1 GB is reached again, and this process is repeated.
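The accumulation rule can be illustrated with a simple greedy grouping. This is one reading of the description above, not the MgC implementation: the function name, the table names, and the sizes are hypothetical, and the sketch ignores other settings that may also affect grouping.

```python
def group_into_subtasks(items, limit_bytes):
    """Group (name, size_in_bytes) pairs into subtasks.

    Items accumulate in the current subtask until their combined size
    reaches the limit; the next item then starts a new subtask.
    """
    subtasks, current, total = [], [], 0
    for name, size in items:
        current.append(name)
        total += size
        if total >= limit_bytes:
            subtasks.append(current)
            current, total = [], 0
    if current:
        subtasks.append(current)  # leftovers that never reached the limit
    return subtasks


MB = 1024 * 1024
tables = [("t1", 600 * MB), ("t2", 500 * MB), ("t3", 300 * MB), ("t4", 800 * MB), ("t5", 100 * MB)]
print(group_into_subtasks(tables, 1024 * MB))  # [['t1', 't2'], ['t3', 't4'], ['t5']]
```

With a 1 GB limit, t1 and t2 together reach the limit and form the first subtask, t3 and t4 form the second, and t5 is left in a final, smaller subtask.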

Concurrency

Set the number of concurrent migration subtasks. The default value is 3. The value ranges from 1 to 10.

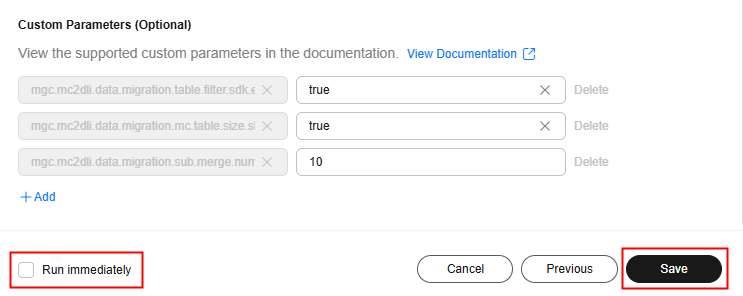

Custom Parameters (Optional)

- mgc.mc2dli.data.migration.table.filter.sdk.enable: indicates whether MaxCompute fetches tables using SDK or SQL statements. The default value is false. To migrate partitioned tables, set this parameter to true.

- mgc.mc2dli.data.migration.mc.table.size.skip: indicates whether to query the table size. The default value is true.

- mgc.mc2dli.data.migration.sub.merge.number: indicates the total number of tables or partitions that can be assigned to a subtask. The default value is 10.

For details about custom parameters, see Configuration parameters and Custom parameters.

- After the configuration is complete, execute the task.

- A migration task can be executed repeatedly. Each time a migration task is executed, a task execution is generated.

- You can click the task name to modify the task configuration.

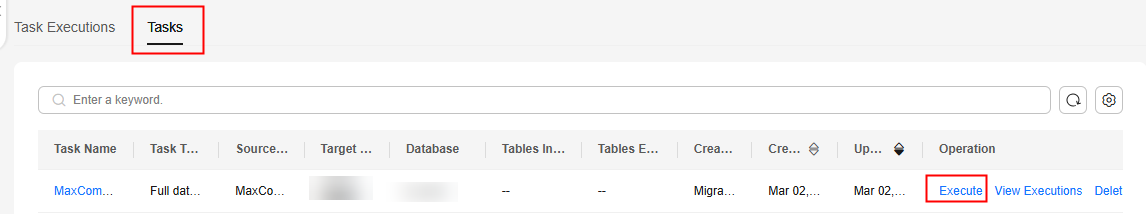

- You can select Run immediately and click Save to create the task and execute it immediately. You can view the created task on the Tasks page.

- You can also click Save to just create the task. You can view the created task on the Tasks page. To execute the task, click Execute in the Operation column.

- After the migration task is executed, click the Tasks tab, locate the task, and click View Executions in the Operation column. On the Task Executions tab, you can view the details of the running task execution and all historical executions.

Click Execute Again in the Status column to run the execution again.

Click View in the Progress column. On the displayed Progress Details page, view and export the task execution results.

- (Optional) After the data migration is complete, verify data consistency between the source and the target databases. For details, see Verifying the Consistency of Data Migrated from MaxCompute to DLI.
