From DMS Kafka to OBS

Entire databases can be migrated.

Entire DB

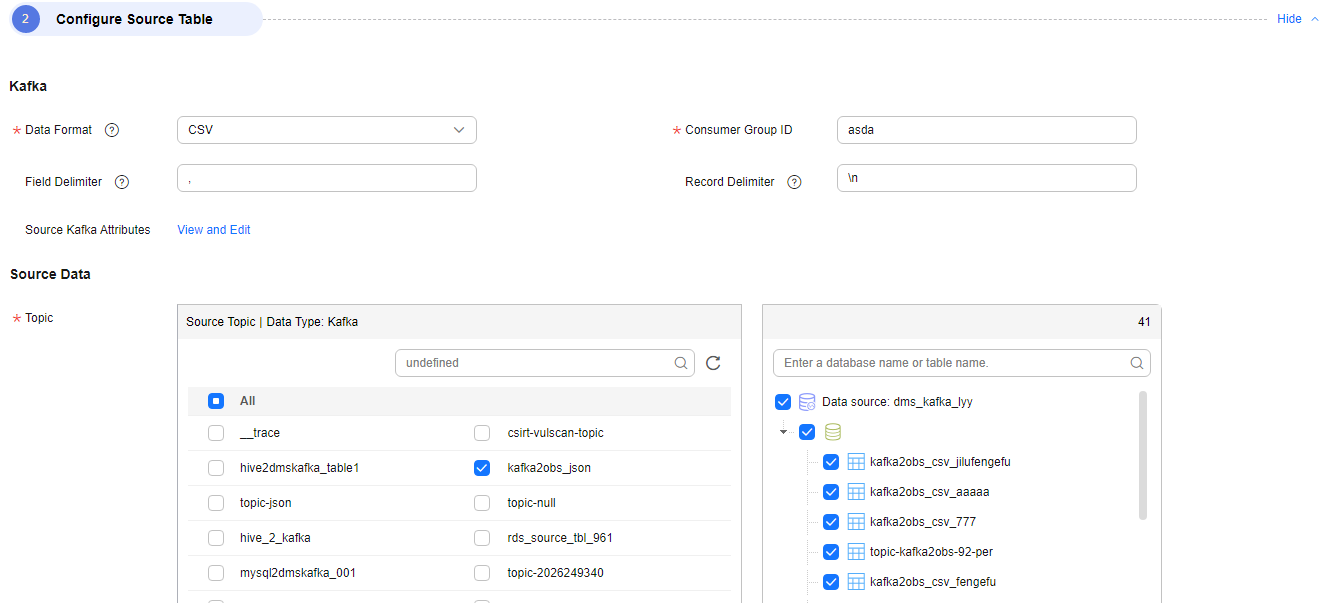

- Configure source parameters.

- Configure Kafka.

- Data Format: format of the message content in the source Kafka topic

Currently, the JSON, CSV, and TEXT formats are supported.

- JSON: Messages can be parsed in JSON format.

- CSV: Messages are parsed in CSV format using the specified field and record delimiters.

- TEXT: The entire message is synchronized as text.
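The following Python sketch shows how the three data formats differ when a single message value is read. It is a simplified model, not the integration job's actual parser. The function name parse_message is hypothetical, and the CSV branch assumes the default comma field delimiter and one record per message.

```python
import csv
import io
import json
from typing import Any


def parse_message(payload: bytes, data_format: str, field_delimiter: str = ",") -> Any:
    """Parse one Kafka message value according to the configured data format.

    Hypothetical helper for illustration; not the integration job's real parser.
    """
    text = payload.decode("utf-8")
    if data_format == "JSON":
        # JSON: the message body is parsed into structured key/value fields.
        return json.loads(text)
    if data_format == "CSV":
        # CSV: the body is split into columns on the field delimiter
        # (assumes one record per message).
        return next(csv.reader(io.StringIO(text), delimiter=field_delimiter))
    if data_format == "TEXT":
        # TEXT: the entire message is kept unchanged as a single string.
        return text
    raise ValueError(f"unsupported data format: {data_format}")


print(parse_message(b'{"id": 1, "name": "a"}', "JSON"))  # {'id': 1, 'name': 'a'}
print(parse_message(b"1,a,b", "CSV"))                     # ['1', 'a', 'b']
print(parse_message(b"1,a,b", "TEXT"))                    # 1,a,b
```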

- Consumer Group ID: ID of the consumer group of the real-time processing integration job

After a migration job consumes messages from a topic in the DMS Kafka cluster, you can view the configured consumer group ID on the consumer group management page of the Kafka cluster and query the consumption attribute group.id on the message query page. Kafka treats any client that consumes messages as a consumer, and multiple consumers form a consumer group, which Kafka provides as a scalable, fault-tolerant consumption mechanism. Configuring a consumer group is recommended.
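To illustrate why a consumer group scales and tolerates failures, the sketch below models how one topic's partitions might be divided among the members of a group. It is a simplified round-robin model under stated assumptions: in Kafka, assignment is coordinated by the group coordinator using a configurable assignor (for example, range, round-robin, or sticky), and the function assign_partitions and the consumer names are hypothetical.

```python
from typing import Dict, List


def assign_partitions(partitions: List[int], consumers: List[str]) -> Dict[str, List[int]]:
    """Round-robin partitions over the members of one consumer group (simplified)."""
    if not consumers:
        return {}
    members = sorted(consumers)
    assignment: Dict[str, List[int]] = {member: [] for member in members}
    for i, partition in enumerate(sorted(partitions)):
        # Each partition is owned by exactly one member of the group.
        assignment[members[i % len(members)]].append(partition)
    return assignment


partitions = [0, 1, 2, 3, 4, 5]
print(assign_partitions(partitions, ["c1", "c2", "c3"]))
# {'c1': [0, 3], 'c2': [1, 4], 'c3': [2, 5]}

# If c2 fails, its partitions are redistributed to the remaining members.
print(assign_partitions(partitions, ["c1", "c3"]))
# {'c1': [0, 2, 4], 'c3': [1, 3, 5]}
```

Adding members spreads the partitions more thinly, and removing one hands its partitions to the survivors, which is the scalability and fault tolerance the paragraph above refers to.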

- Field Delimiter: This parameter is available when Data Format is CSV.

The delimiter is used to separate columns in a CSV message. Only one character is allowed. The default value is a comma (,).

- Record Delimiter: This parameter is available when Data Format is CSV.

The delimiter is used to separate lines in a CSV message. The default value is a newline character \n.
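A minimal sketch of how the Field Delimiter and Record Delimiter could split one CSV message into rows and columns. It is illustrative only: the function split_csv_message is hypothetical, it drops empty records, and it does not handle quoted fields that contain delimiters, which the job's real CSV handling may treat differently.

```python
from typing import List


def split_csv_message(message: str, field_delimiter: str = ",",
                      record_delimiter: str = "\n") -> List[List[str]]:
    """Split a CSV message into records, then each record into columns.

    Naive sketch: quoted fields that contain delimiters are not handled.
    """
    if len(field_delimiter) != 1:
        # Only one character is allowed as the field delimiter.
        raise ValueError("field delimiter must be exactly one character")
    # Drop empty records, for example the one after a trailing record delimiter.
    records = [record for record in message.split(record_delimiter) if record]
    return [record.split(field_delimiter) for record in records]


print(split_csv_message("1|Alice\n2|Bob\n", field_delimiter="|"))
# [['1', 'Alice'], ['2', 'Bob']]
```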

- Source Kafka Attributes: You can add Kafka configuration items with the properties. prefix. The job automatically removes the prefix and passes the items to the underlying Kafka client, for example, properties.connections.max.idle.ms=600000.
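The prefix handling described above can be sketched as follows. The function name to_kafka_client_config is hypothetical, and the sketch assumes that attributes without the properties. prefix are simply ignored, which this page does not state.

```python
from typing import Dict

PREFIX = "properties."


def to_kafka_client_config(job_attributes: Dict[str, str]) -> Dict[str, str]:
    """Keep attributes that carry the properties. prefix and strip that prefix."""
    return {
        key[len(PREFIX):]: value
        for key, value in job_attributes.items()
        if key.startswith(PREFIX)  # assumption: unprefixed keys are ignored
    }


print(to_kafka_client_config({"properties.connections.max.idle.ms": "600000"}))
# {'connections.max.idle.ms': '600000'}
```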

- Add a data source.

Figure 1 Adding a data source

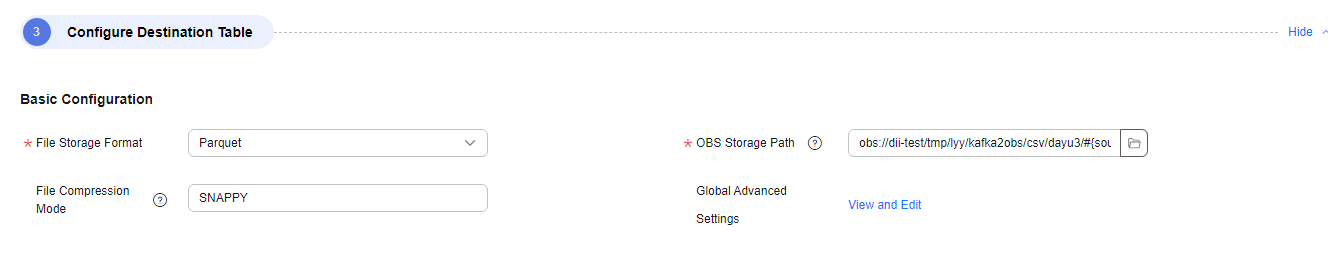

- Configure destination parameters.

Figure 2 Configuring destination parameters

- Basic Configuration:

- File Storage Format: The value can be Parquet, TextFile, or SequenceFile.

- OBS Storage Path: Specify the OBS path for storing the file.

You can use the built-in variable #{source_topic_name} in the path to write data from different source topics to different paths. Example: obs://bucket/dir/test.db/prefix_#{source_topic_name}_suffix/.
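A small sketch of how the #{source_topic_name} variable could resolve into per-topic paths. The function resolve_obs_path and the topic names orders and payments are hypothetical; only the variable syntax and the example path come from this page.

```python
TOPIC_VARIABLE = "#{source_topic_name}"


def resolve_obs_path(template: str, topic: str) -> str:
    """Replace every #{source_topic_name} occurrence with the source topic name."""
    return template.replace(TOPIC_VARIABLE, topic)


template = "obs://bucket/dir/test.db/prefix_#{source_topic_name}_suffix/"
for topic in ["orders", "payments"]:
    print(resolve_obs_path(template, topic))
# obs://bucket/dir/test.db/prefix_orders_suffix/
# obs://bucket/dir/test.db/prefix_payments_suffix/
```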

- File Compression Mode: compression mode of the file to be written. By default, the file is not compressed.

Parquet format: UNCOMPRESSED or SNAPPY

SequenceFile format: UNCOMPRESSED, SNAPPY, GZIP, LZ4, or BZIP2

TextFile format: UNCOMPRESSED
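The format and compression combinations listed above can be captured as a lookup table, for example to validate a job configuration before submission. This is a hypothetical helper, not part of the product, and the mapping assumes the combinations as listed in this section, with BZIP2 belonging to SequenceFile.

```python
from typing import Dict, FrozenSet

# File storage format -> allowed compression modes, as listed in this section.
SUPPORTED_COMPRESSION: Dict[str, FrozenSet[str]] = {
    "Parquet": frozenset({"UNCOMPRESSED", "SNAPPY"}),
    "SequenceFile": frozenset({"UNCOMPRESSED", "SNAPPY", "GZIP", "LZ4", "BZIP2"}),
    "TextFile": frozenset({"UNCOMPRESSED"}),
}


def check_compression(file_format: str, mode: str = "UNCOMPRESSED") -> str:
    """Return the mode if it is valid for the format, else raise ValueError."""
    allowed = SUPPORTED_COMPRESSION.get(file_format)
    if allowed is None:
        raise ValueError(f"unknown file storage format: {file_format}")
    if mode not in allowed:
        raise ValueError(f"{mode} is not supported for {file_format}; "
                         f"choose one of {sorted(allowed)}")
    return mode


print(check_compression("Parquet", "SNAPPY"))  # SNAPPY
print(check_compression("TextFile"))           # UNCOMPRESSED (the default)
```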

- Global advanced settings: Click View and Edit to configure advanced attributes.

auto-compaction: Data is written to a temporary file first. This parameter specifies whether to compact the generated temporary files after the checkpoint is complete.
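As a toy model of what auto-compaction changes, the sketch below represents each temporary file as a list of rows and returns the files left once a checkpoint completes. The function name and the merge-everything-into-one-file policy are assumptions made for illustration; the actual target file size and merge behavior are not described on this page.

```python
from typing import List

Row = str


def files_after_checkpoint(temp_files: List[List[Row]], auto_compaction: bool) -> List[List[Row]]:
    """Return the files left once a checkpoint completes.

    Each inner list stands for the rows written to one temporary file.
    """
    if not auto_compaction or len(temp_files) <= 1:
        # Compaction disabled, or nothing to merge: keep the files as written.
        return temp_files
    # Compaction enabled: merge the rows of all temporary files into one file.
    return [[row for file_rows in temp_files for row in file_rows]]


temp = [["r1", "r2"], ["r3"], ["r4"]]
print(len(files_after_checkpoint(temp, auto_compaction=False)))  # 3
print(files_after_checkpoint(temp, auto_compaction=True))        # [['r1', 'r2', 'r3', 'r4']]
```

The trade-off the model hints at: compaction leaves fewer, larger files on OBS at the cost of extra work after each checkpoint.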

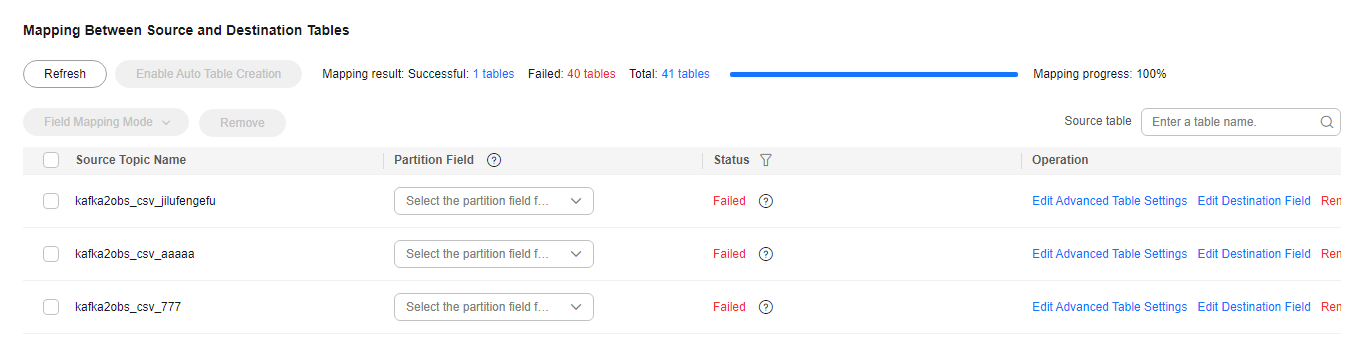

- Mapping Between Source and Destination Tables

Figure 3 Configuring the mapping between source and destination tables

- Advanced Table Settings: Click Edit Advanced Table Settings to configure attributes for a topic in the list.

auto-compaction: Data is written to a temporary file first. This parameter specifies whether to compact the generated temporary files after the checkpoint is complete.

- Edit Destination Field: Click Edit Destination Field in the Operation column to customize fields in the destination table after migration.

Table 1 Supported destination field values

| Type | Example |
| --- | --- |
| Source table field | - |
| Built-in variable | `__key__`, `__value__`, `__topic__`, `__partition__`, `__offset__`, `__timestamp__` |
| Manually assigned value | - |
| UDF | Flink built-in function used for data transformation, for example, ``CONCAT(CAST(NOW() as STRING), `col_name`)`` and `DATE_FORMAT(NOW(), 'yy')`. Field names must be enclosed in backquotes. |

You can customize a field name (for example, custom_defined_col), select the field type, and enter the field value.

You can add multiple fields at a time.
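The sketch below models how a destination row could be assembled from the three non-UDF value types in Table 1: a source table field, a built-in variable, or a manually assigned value. The KafkaRecord class, the build_row function, and the "source", "builtin", and "manual" tags are hypothetical; UDFs are evaluated by Flink and are not modeled here. The timestamp is assumed to be in epoch milliseconds, as Kafka record timestamps are, but the type the job actually writes is not documented on this page.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Tuple


@dataclass
class KafkaRecord:
    """Hypothetical view of one consumed Kafka message."""
    key: str
    value: str
    topic: str
    partition: int
    offset: int
    timestamp: int  # assumption: epoch milliseconds


def builtin_variables(record: KafkaRecord) -> Dict[str, Any]:
    """Expose the record metadata under the built-in variable names."""
    return {
        "__key__": record.key,
        "__value__": record.value,
        "__topic__": record.topic,
        "__partition__": record.partition,
        "__offset__": record.offset,
        "__timestamp__": record.timestamp,
    }


def build_row(record: KafkaRecord, source_fields: Dict[str, Any],
              destination_fields: List[Tuple[str, str, Any]]) -> Dict[str, Any]:
    """Build one destination row from (name, kind, spec) field definitions."""
    variables = builtin_variables(record)
    row: Dict[str, Any] = {}
    for name, kind, spec in destination_fields:
        if kind == "source":      # copy a parsed source table field
            row[name] = source_fields[spec]
        elif kind == "builtin":   # read Kafka metadata via a built-in variable
            row[name] = variables[spec]
        elif kind == "manual":    # use the manually assigned constant as-is
            row[name] = spec
        else:
            raise ValueError(f"unsupported field kind: {kind}")
    return row


record = KafkaRecord(key="k1", value='{"id": 7}', topic="orders",
                     partition=2, offset=42, timestamp=1700000000000)
row = build_row(record, {"id": 7}, [
    ("id", "source", "id"),
    ("src_topic", "builtin", "__topic__"),
    ("src_offset", "builtin", "__offset__"),
    ("custom_defined_col", "manual", "v1"),
])
print(row)
# {'id': 7, 'src_topic': 'orders', 'src_offset': 42, 'custom_defined_col': 'v1'}
```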
