LeaderWorkerSet Add-on

LLM inference often uses a distributed architecture that spans multiple nodes and GPUs. Tensor parallelism splits model parameters across GPUs, and pipeline parallelism assigns computing stages to different nodes, so that multiple devices perform inference collaboratively. Kubernetes Deployments and StatefulSets can be used to deploy distributed LLM inference services, but they have the following limitations:

- Deployments are designed for stateless applications. Although they can be used to deploy LLM inference services, it is difficult to manage the dependencies and lifecycle of pods. For example, during a rolling update, some pods of an inference instance may already be updated while others are not, which affects the availability of the inference service.

- StatefulSets manage stateful applications. The pods are deployed in sequence and have unique identities. However, the scaling and updates of StatefulSets are complex, which makes it difficult to implement fast auto scaling required by the LLM inference service. In addition, StatefulSets have strict policies for deleting and rebuilding pods, which may affect the quick recovery of the service.

To address these limitations, CCE standard and Turbo clusters provide the LeaderWorkerSet add-on. It introduces LeaderWorkerSet, a custom resource (CRD) designed for AI/ML inference, which is better suited than Deployments and StatefulSets to distributed LLM inference. It has the following advantages:

- Distributed inference optimization: Tensor parallelism and pipeline parallelism can be used together for efficient computing collaboration and zero-copy communication across pods.

- Lifecycle management by group: Auto scaling, rolling upgrades, and restarts are performed per group, which improves service availability.

- Identity and intelligent routing: Each LeaderWorkerSet (LWS) can be uniquely identified and supports intelligent routing based on KV cache affinity.

- Multi-tenant resource isolation: Each team or user can have an independent inference instance (Serve instance). This ensures resource isolation and flexible resource management.

The LeaderWorkerSet add-on is a good fit in the following scenarios:

- LLM distributed inference service: The add-on can be used in various LLM inference scenarios that require high performance and high throughput, such as online Q&A, text generation, chatbot, and AI-assisted content.

- High-performance multi-node inference acceleration: The add-on supports mainstream AI inference frameworks such as vLLM and SGLang and can fully utilize computing resources of multiple nodes to accelerate the inference process. It is especially suitable for scenarios with high requirements on inference latency, such as real-time recommendation and intelligent search.

- Session-aware inference service optimization: For applications (such as chatbots and intelligent customer services) that need to maintain session status, the LeaderWorkerSet and AI Inference Gateway can work together to implement session-based precise routing, which increases the KV cache hit rate, reduces repeated computing, and improves performance.

Basic Concepts

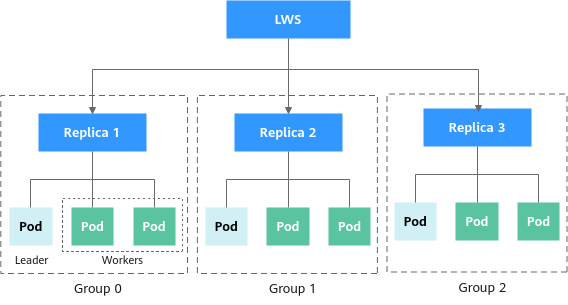

LeaderWorkerSet (LWS) is a Kubernetes workload type designed for AI and LLM inference scenarios. It groups multiple pods into a replica that works as one logical unit, so that the lifecycle of complex distributed inference tasks can be managed centrally. LWS greatly simplifies the elastic deployment and efficient O&M of AI inference services (such as vLLM and SGLang).

- Each LWS replica corresponds to an independent AI inference instance (Serve instance) that provides services externally.

- Each pod in a replica corresponds to a parallel slice of the Serve instance. For example, each tensor-parallel shard corresponds to a pod. Each pod has a unique ID, which is used for model sharding and communication.

- A replica is a group that consists of one leader pod and a set of worker pods. The leader usually handles service load and is also responsible for tasks such as instance initialization and scheduling. In some special scenarios, the leader only coordinates and manages resources and does not participate in inference computation.
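The group structure can be illustrated with a small Python sketch. It is not part of the add-on. It only reproduces the pod naming pattern that appears in the use case on this page (leader `<name>-<group>`, workers `<name>-<group>-<index>`), and the helper name `lws_pod_names` is hypothetical.

```python
from typing import Dict, List


def lws_pod_names(name: str, replicas: int, size: int) -> Dict[str, List[str]]:
    """Return {leader pod name: [worker pod names]} for an LWS.

    Assumes the naming pattern seen in this page's example output:
    the leader of group g is "<name>-<g>" and worker i (i >= 1) of that
    group is "<name>-<g>-<i>". `size` counts the leader plus its workers.
    """
    if replicas < 0 or size < 1:
        raise ValueError("replicas must be >= 0 and size must be >= 1")
    groups: Dict[str, List[str]] = {}
    for g in range(replicas):
        leader = f"{name}-{g}"
        groups[leader] = [f"{leader}-{i}" for i in range(1, size)]
    return groups


# Two Serve instances, each with one leader and one worker.
print(lws_pod_names("vllm", replicas=2, size=2))
# {'vllm-0': ['vllm-0-1'], 'vllm-1': ['vllm-1-1']}
```

Each dictionary key is one replica (one Serve instance), which is the unit that is scaled, upgraded, and restarted together.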

Prerequisites

- You have created a CCE standard or Turbo cluster of v1.27 or later.

- You have created nodes of the type required by your service and installed the corresponding add-on, and the node resources are sufficient:

- NPU services: NPU nodes with the add-on described in CCE AI Suite (Ascend NPU) installed

- GPU services: GPU nodes with the add-on described in CCE AI Suite (NVIDIA GPU) installed

Notes and Constraints

- The LeaderWorkerSet add-on cannot be upgraded online.

- Before uninstalling the add-on, you need to clear all CRD resources related to LWS.

- This add-on is being rolled out gradually. To check the regions where it is available, see the console.

- This add-on is in the open beta test (OBT) phase. You can try the latest add-on features, but the stability of this add-on version has not been fully verified, and the CCE SLA does not apply to this version.

Installing the Add-on

- Log in to the CCE console and click the cluster name to access the cluster console.

- In the navigation pane, choose Add-ons. On the displayed page, locate LeaderWorkerSet and click Install.

- In the lower right corner of the Install Add-on page, click Install. If Installed is displayed in the upper right corner of the add-on card, the installation is successful.

You can use tools such as kubectl to deploy AI inference workloads of the LWS type.

Uninstalling the Add-on

- Log in to the CCE console and click the cluster name to access the cluster console.

- In the navigation pane, choose Add-ons. On the displayed page, locate LeaderWorkerSet and click Uninstall.

- In the Uninstall Add-on dialog box, enter DELETE and click OK. If Not installed is displayed in the upper right corner of the add-on card, the add-on has been uninstalled.

Before uninstalling the add-on, clear all CRD resources related to LeaderWorkerSet.
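One way to confirm that nothing is left behind before uninstalling is to list the remaining LeaderWorkerSet objects and inspect the result. The Python sketch below only parses the JSON that `kubectl get ... -o json` prints. The helper name is hypothetical, and the plural resource name in the comment is an assumption derived from the API group used in the use case on this page.

```python
import json
from typing import List

# Command whose JSON output the helper below expects (run it yourself):
#   kubectl get leaderworkersets.leaderworkerset.x-k8s.io --all-namespaces -o json


def remaining_lws(kubectl_json: str) -> List[str]:
    """Return "namespace/name" for every LeaderWorkerSet in the JSON list."""
    items = json.loads(kubectl_json).get("items", [])
    return [f'{i["metadata"]["namespace"]}/{i["metadata"]["name"]}' for i in items]


# Hand-written sample in the shape of a kubectl JSON list (not real cluster output).
sample = '{"kind": "List", "items": [{"metadata": {"namespace": "default", "name": "vllm"}}]}'
print(remaining_lws(sample))  # ['default/vllm']
```

An empty list means that no LWS resources remain and the add-on can be uninstalled.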

Components

| Component | Description | Resource Type |
|---|---|---|
| leaderworkerset-lws-controller-manager | LWS resource controller, which manages resources in a cluster. | Deployment |

Use Case

The following steps deploy a vLLM distributed inference service on GPU nodes using LWS. For details, see Deploy LeaderWorkerSet of vLLM in the LeaderWorkerSet documentation. vLLM implements distributed inference based on Megatron-LM's tensor-parallel algorithm and uses the Ray framework to manage the distributed runtime.

In this example, pods in the cluster need public network access to pull the official vLLM image and to download the Llama-3.1-405B model weights using a Hugging Face token. You can configure an SNAT rule for the cluster so that pods can access the public network. You will be billed for the SNAT rule. For details, see NAT Gateway Price Calculator.

- Install kubectl on an existing ECS and access the cluster using kubectl. For details, see Accessing a Cluster Using kubectl.

- Create a YAML file named vllm-lws.yaml to deploy the vLLM distributed inference service.

vim vllm-lws.yaml

The file content is as follows:

    apiVersion: leaderworkerset.x-k8s.io/v1
    kind: LeaderWorkerSet
    metadata:
      name: vllm  # Name of the LeaderWorkerSet instance
    spec:
      replicas: 2  # Two Serve instances
      leaderWorkerTemplate:
        size: 2  # Each instance contains two pods (one leader and one worker) to handle concurrent inference loads.
        restartPolicy: RecreateGroupOnPodRestart
        leaderTemplate:  # Defines the leader pods.
          metadata:
            labels:
              role: leader  # Pod role
          spec:
            containers:
              - name: vllm-leader
                image: vllm/vllm-openai:latest  # Use the official vLLM image.
                env:
                  - name: HUGGING_FACE_HUB_TOKEN
                    value: <your-hf-token>  # Enter the Hugging Face token to obtain the Llama-3.1-405B model.
                command:  # Command used to load the Llama-3.1-405B model
                  - sh
                  - -c
                  - "bash /vllm-workspace/examples/online_serving/multi-node-serving.sh leader --ray_cluster_size=$(LWS_GROUP_SIZE); python3 -m vllm.entrypoints.openai.api_server --port 8080 --model meta-llama/Meta-Llama-3.1-405B-Instruct --tensor-parallel-size 8 --pipeline_parallel_size 2"
                resources:
                  limits:
                    nvidia.com/gpu: "8"  # Each pod can be mounted with a maximum of eight NVIDIA GPUs.
                    memory: 1124Gi
                    ephemeral-storage: 800Gi  # Ephemeral storage space used to cache model weights
                  requests:
                    ephemeral-storage: 800Gi
                    cpu: 125
                ports:
                  - containerPort: 8080
                readinessProbe:  # Readiness probe
                  tcpSocket:
                    port: 8080
                  initialDelaySeconds: 15
                  periodSeconds: 10
                volumeMounts:  # Mount a temporary storage volume to a leader pod.
                  - mountPath: /dev/shm
                    name: dshm
            volumes:  # Temporary storage volume
              - name: dshm
                emptyDir:
                  medium: Memory
                  sizeLimit: 15Gi
        workerTemplate:  # Defines the worker pods.
          spec:
            containers:
              - name: vllm-worker
                image: vllm/vllm-openai:latest
                # Command used to add the worker pods to the Ray cluster as worker nodes
                # and connect to the head node through $(LWS_LEADER_ADDRESS) to get ready
                # to participate in distributed inference tasks.
                command:
                  - sh
                  - -c
                  - "bash /vllm-workspace/examples/online_serving/multi-node-serving.sh worker --ray_address=$(LWS_LEADER_ADDRESS)"
                resources:
                  limits:
                    nvidia.com/gpu: "8"  # Each pod can be mounted with a maximum of eight NVIDIA GPUs.
                    memory: 1124Gi
                    ephemeral-storage: 800Gi
                  requests:
                    ephemeral-storage: 800Gi
                    cpu: 125
                env:
                  - name: HUGGING_FACE_HUB_TOKEN
                    value: <your-hf-token>
                volumeMounts:
                  - mountPath: /dev/shm
                    name: dshm
            volumes:
              - name: dshm
                emptyDir:
                  medium: Memory
                  sizeLimit: 15Gi
    ---
    apiVersion: v1  # Used to access the Serve instances in the cluster.
    kind: Service
    metadata:
      name: vllm-leader
    spec:
      ports:
        - name: http
          port: 8080
          protocol: TCP
          targetPort: 8080
      selector:
        leaderworkerset.sigs.k8s.io/name: vllm
        role: leader
      type: ClusterIP
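The sizing in this manifest follows from simple arithmetic: vLLM needs tensor-parallel size multiplied by pipeline-parallel size GPUs per Serve instance, and that product should equal the number of pods in a group multiplied by the GPUs per pod. A minimal Python sketch of that check follows. The function name is hypothetical, and the check is a sanity aid for editing the manifest, not something the add-on runs.

```python
def gpus_match(tensor_parallel: int, pipeline_parallel: int,
               group_size: int, gpus_per_pod: int) -> bool:
    """True if the GPUs required by vLLM equal the GPUs in one LWS group."""
    required = tensor_parallel * pipeline_parallel
    available = group_size * gpus_per_pod
    return required == available


# Values from the manifest: --tensor-parallel-size 8, --pipeline_parallel_size 2,
# size: 2, nvidia.com/gpu: "8".
print(gpus_match(8, 2, group_size=2, gpus_per_pod=8))  # True
```

If you change `size` or the GPU limit per pod, adjust the two parallelism flags so that the check still holds.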

- Create the above resources.

kubectl apply -f vllm-lws.yaml

Information similar to the following is displayed:

    leaderworkerset.leaderworkerset.x-k8s.io/vllm created
    service/vllm-leader created

- Verify that the pods are running.

kubectl get pod

If information similar to the following is displayed and the status of every pod is Running, the deployment is successful. vllm-0 and vllm-0-1 belong to one Serve instance, and vllm-1 and vllm-1-1 belong to the other. vllm-0 and vllm-1 are the leader pods.

    NAME       READY   STATUS    RESTARTS   AGE
    vllm-0     1/1     Running   0          2s
    vllm-0-1   1/1     Running   0          2s
    vllm-1     1/1     Running   0          2s
    vllm-1-1   1/1     Running   0          2s
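Because a Serve instance is usable only when all pods in its group are running, it can help to check the status per group rather than per pod. The following Python sketch parses text in the format printed by `kubectl get pod` and reports, for each leader, whether its whole group is Running. The function name is hypothetical, and the parsing assumes the pod naming shown in this step.

```python
from collections import defaultdict
from typing import Dict, List

SAMPLE = """\
NAME       READY   STATUS    RESTARTS   AGE
vllm-0     1/1     Running   0          2s
vllm-0-1   1/1     Running   0          2s
vllm-1     1/1     Running   0          2s
vllm-1-1   1/1     Running   0          2s
"""


def instances_ready(kubectl_output: str, lws_name: str) -> Dict[str, bool]:
    """Map each leader pod to True if every pod in its group is Running."""
    statuses: Dict[str, List[str]] = defaultdict(list)
    for line in kubectl_output.strip().splitlines()[1:]:  # skip the header row
        pod, _ready, status = line.split()[:3]
        if not pod.startswith(lws_name + "-"):
            continue  # pod does not belong to this LWS
        group = pod[len(lws_name) + 1:].split("-")[0]  # "0-1" -> "0"
        statuses[f"{lws_name}-{group}"].append(status)
    return {leader: all(s == "Running" for s in v) for leader, v in statuses.items()}


print(instances_ready(SAMPLE, "vllm"))
# {'vllm-0': True, 'vllm-1': True}
```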

- Access the vllm-leader instance through the ClusterIP Service.

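kubectl port-forward svc/vllm-leader 8080:8080

With the port forwarding running, send a completion request to http://localhost:8080/v1/completions. If a response similar to the following is returned, the vllm-leader instance can be accessed: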

    {
      "id": "cmpl-1bb34faba88b43f9862cfbfb2200949d",
      "object": "text_completion",
      "created": 1715138766,
      "model": "meta-llama/Meta-Llama-3.1-405B-Instruct",
      "choices": [
        {
          "index": 0,
          "text": " top destination for foodies, with",
          "logprobs": null,
          "finish_reason": "length",
          "stop_reason": null
        }
      ],
      "usage": {
        "prompt_tokens": 5,
        "total_tokens": 12,
        "completion_tokens": 7
      }
    }
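A response of this shape is what vLLM's OpenAI-compatible `/v1/completions` route returns. As a minimal sketch, the request could be sent from Python with only the standard library. The helper names, the default `max_tokens` of 7 (chosen to match `completion_tokens` above), and any prompt you pass are assumptions for illustration. The base URL assumes local port 8080 is forwarded to the vllm-leader Service.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8080"  # assumed local end of the kubectl port-forward


def build_completion_request(prompt: str, max_tokens: int = 7) -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible /v1/completions route."""
    payload = {
        "model": "meta-llama/Meta-Llama-3.1-405B-Instruct",
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": 0,
    }
    return urllib.request.Request(
        f"{BASE_URL}/v1/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(prompt: str) -> str:
    """Send the request and return the generated text of the first choice."""
    with urllib.request.urlopen(build_completion_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["text"]
```

With the port forwarding active, calling `complete("San Francisco is a")` (an arbitrary example prompt) returns the generated continuation, which corresponds to the `text` field in the response above.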

Release History

| Add-on Version | Supported Cluster Version | New Feature | Community Version |
|---|---|---|---|
| 0.6.1 | v1.27, v1.28, v1.29, v1.30, v1.31 | CCE standard and Turbo clusters support the LeaderWorkerSet add-on. | |
