Migrating Data from Hudi to MRS with CDM

Scenarios

Cloud Data Migration (CDM) is an efficient and easy-to-use service for batch data migration. Leveraging cloud-based big data migration and intelligent data lake solutions, CDM offers user-friendly functions for migrating data and integrating diverse data sources into a unified data lake. These capabilities simplify the complexities of data source migration and integration, significantly enhancing efficiency.

This section describes how to migrate data from Hudi clusters in an on-premises IDC or on a public cloud to Huawei Cloud MRS.

Solution Architecture

Building on the big data migration and intelligent data lake solution, CDM copies the Hudi table files from HDFS in the source cluster to HDFS in the destination MRS cluster, so the migration is performed as a file-level copy.

CDM supports both full migration and incremental migration. With CDM file migration, you implement full migration by copying all files, with Duplicate File Processing Method set to REPLACE. You implement incremental migration by setting Duplicate File Processing Method to Skip, so that files that already exist at the destination are not copied again.

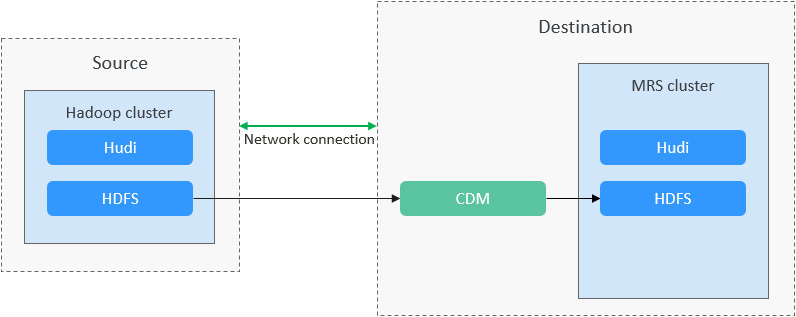

Figure 1 shows the solution for using CDM to migrate Hudi data to an MRS cluster.
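To make the difference between the two Duplicate File Processing Method settings concrete, the following shell sketch imitates them on local directories. It is only an analogy, not how CDM is implemented: copy_tree is a hypothetical helper, and it assumes that a duplicate is a file with the same relative path and the same size (check the To HDFS parameter description of your CDM version for the exact rule). Hidden directories such as the Hudi .hoodie metadata directory are copied like any other files, which the destination table needs in order to be readable as a Hudi table.

```shell
# Illustration only: a local-directory analogy for CDM's
# "Duplicate File Processing Method". copy_tree is a hypothetical helper.
# Assumption: a duplicate is a file with the same relative path and size.
#
# copy_tree MODE SRC DST
#   MODE=replace  copy every file, overwriting existing ones (full migration)
#   MODE=skip     leave duplicates untouched, copy the rest (incremental migration)
copy_tree() {
  local mode="$1" src="$2" dst="$3"
  # find also lists hidden entries, so the Hudi .hoodie metadata is included.
  find "$src" -type f | while IFS= read -r file; do
    rel=${file#"$src"/}
    target="$dst/$rel"
    if [ "$mode" = skip ] && [ -f "$target" ] &&
       [ "$(wc -c < "$file")" -eq "$(wc -c < "$target")" ]; then
      continue  # duplicate: keep the destination copy
    fi
    mkdir -p "$(dirname "$target")"
    cp "$file" "$target"
  done
}

# Example (hypothetical local paths):
#   copy_tree replace ./src_table ./dst_table   # full copy
#   copy_tree skip    ./src_table ./dst_table   # copy only new or resized files
```

Running copy_tree in skip mode repeatedly, as a scheduled incremental job would, transfers only new files and files whose size changed; running it in replace mode rewrites everything.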

Solution Advantages

- Easy-to-use: The wizard-based development interface eliminates the need for manual programming, allowing you to create migration tasks through intuitive, step-by-step configurations within minutes.

- High migration efficiency: CDM is built on a distributed computing framework that improves data migration and transmission performance, and it includes optimizations for writing to specific data sources.

- Real-time monitoring: You stay informed throughout the migration process with automatic real-time monitoring, alarm reporting, and notifications.

Impact on the System

- During the migration, data may become inconsistent if changes to the Hudi files in the source cluster are not synchronized to the destination cluster in time. You can use the verification tool to identify inconsistent data and then migrate the missing or changed data again.

- The migration may cause the performance of the source cluster to deteriorate, increasing the response time of source services. It is recommended that you migrate data during off-peak hours and properly configure resources, including compute, storage, and network resources, in the source cluster to ensure that the cluster can handle the migration workloads.
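The verification tool mentioned above is not described in this section. As a rough, do-it-yourself cross-check, you can also compare recursive HDFS listings of a table directory taken on both clusters. diff_listings below is a hypothetical helper; it assumes the default `hdfs dfs -ls -R` output layout (size in the fifth column, path in the eighth) and paths without spaces. It reports files that are missing at the destination or differ in size, and it compares sizes only, not file contents.

```shell
# diff_listings SRC_LISTING SRC_BASE DST_LISTING DST_BASE   (hypothetical helper)
# Each listing is saved output of "hdfs dfs -ls -R <table path>".
# Assumes the default column layout: $5 = size, $8 = path (no spaces in paths).
diff_listings() {
  awk -v sb="$2/" -v db="$4/" '
    # Strip the table base path so both clusters use the same relative names.
    function rel(p, base) {
      return index(p, base) == 1 ? substr(p, length(base) + 1) : p
    }
    # First file (destination): remember the size of every regular file.
    FILENAME == ARGV[1] { if ($1 ~ /^-/) size[rel($8, db)] = $5; next }
    # Second file (source): report regular files that are missing or differ in size.
    $1 ~ /^-/ {
      r = rel($8, sb)
      if (!(r in size)) print "MISSING " r
      else if (size[r] != $5) print "SIZE_DIFF " r
    }
  ' "$3" "$1"
}

# Example (hypothetical paths):
#   hdfs dfs -ls -R /tmp/hudi_src/hudi_table1 > src.txt    # on the source cluster
#   hdfs dfs -ls -R /user/hudi_dst/hudi_table1 > dst.txt   # on the destination cluster
#   diff_listings src.txt /tmp/hudi_src/hudi_table1 dst.txt /user/hudi_dst/hudi_table1
```

Directories are ignored because only lines whose permission string starts with `-` (regular files) are compared.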

Notes and Constraints

- Migrating a large volume of data places heavy demands on the network, and a running migration task may adversely affect other services. Migrate data during off-peak hours.

- This section uses Huawei Cloud CDM 2.9.2.200 as an example to describe how to migrate data. The operations may vary depending on the CDM version. For details, see the operation guide of the required version.

- For details about the data sources supported by CDM, see Supported Data Sources. If the data source is Apache HDFS, the recommended version is 2.8.X or 3.1.X. Before performing the migration, ensure that the data source supports migration.

Prerequisites

- The source and destination clusters can communicate with CDM.

- You have identified the tables to be migrated. For metadata-defined tables, you have obtained the database name and table name. For path-defined tables, you have obtained the table path.
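Because the migration copies files over HDFS, you also need the storage path of a metadata-defined table. One way to look it up is Spark SQL's DESCRIBE FORMATTED statement, whose output includes a Location row. table_location below is a hypothetical helper that reads that output on stdin; it assumes the spark-sql CLI prints rows as tab-separated columns with the path in the second column, so check the raw output on your cluster first.

```shell
# table_location   (hypothetical helper)
# Reads "DESCRIBE FORMATTED" output on stdin and prints the table's storage path.
# Assumes tab-separated rows such as: Location<TAB>hdfs://.../hudi_table1<TAB>
table_location() {
  awk -F'\t' '$1 ~ /^Location/ {
    gsub(/^[ \t]+|[ \t]+$/, "", $2)   # trim padding around the path
    print $2
    exit
  }'
}

# Example, on a client node after loading the environment variables (and kinit):
#   spark-sql --master yarn -e "DESCRIBE FORMATTED hudi_test.hudi_table1" 2>/dev/null |
#     table_location
```

For path-defined tables this lookup is unnecessary, because you already have the table path.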

Migrating Existing Hudi Data Using CDM

- Log in to the CDM console.

- Create a CDM cluster. The security group, VPC, and subnet of the CDM cluster must be the same as those of the destination MRS cluster to ensure that the CDM cluster can communicate with the MRS cluster.

- On the Cluster Management page, locate the row containing the desired cluster and click Job Management in the Operation column.

- On the Links tab page, click Create Link.

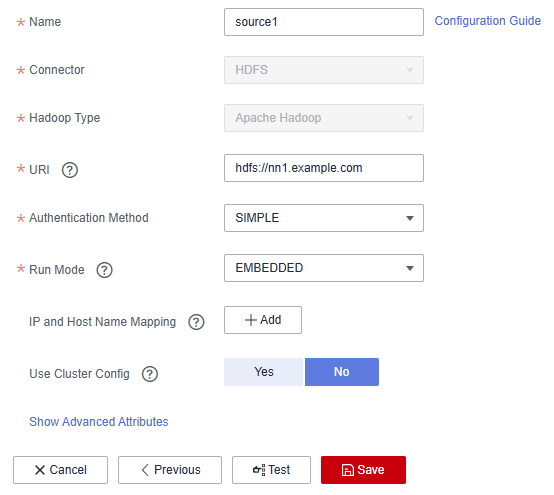

- Add a link to the source cluster by referring to Creating a Link Between CDM and a Data Source. Select a connector based on your cluster. For example, select Apache Hadoop. For details about link parameters, see Apache HDFS.

Figure 2 Link to the source cluster

- On the Links tab page, click Create Link.

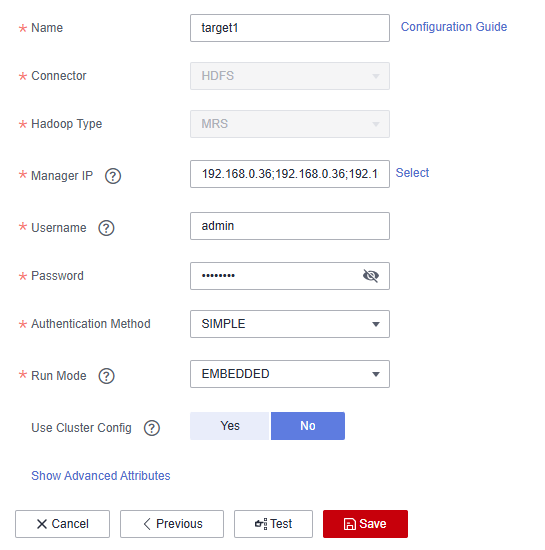

- Add a link to the destination cluster by referring to Creating a Link Between CDM and a Data Source. Select a connector based on your cluster. For example, select MRS HDFS. For details about link parameters, see MRS HDFS.

Figure 3 Link to the destination cluster

- Choose Job Management and click the Table/File Migration tab. Then, click Create Job.

- Select the source and destination links.

- Job Name: Enter a custom job name, which can contain 1 to 256 characters consisting of letters, underscores (_), and digits.

- Source Link Name: Select the HDFS link of the source cluster. Data is exported from this link when the job is running.

- Destination Link Name: Select the HDFS link of the destination cluster. Data is imported to this link when the job is running.

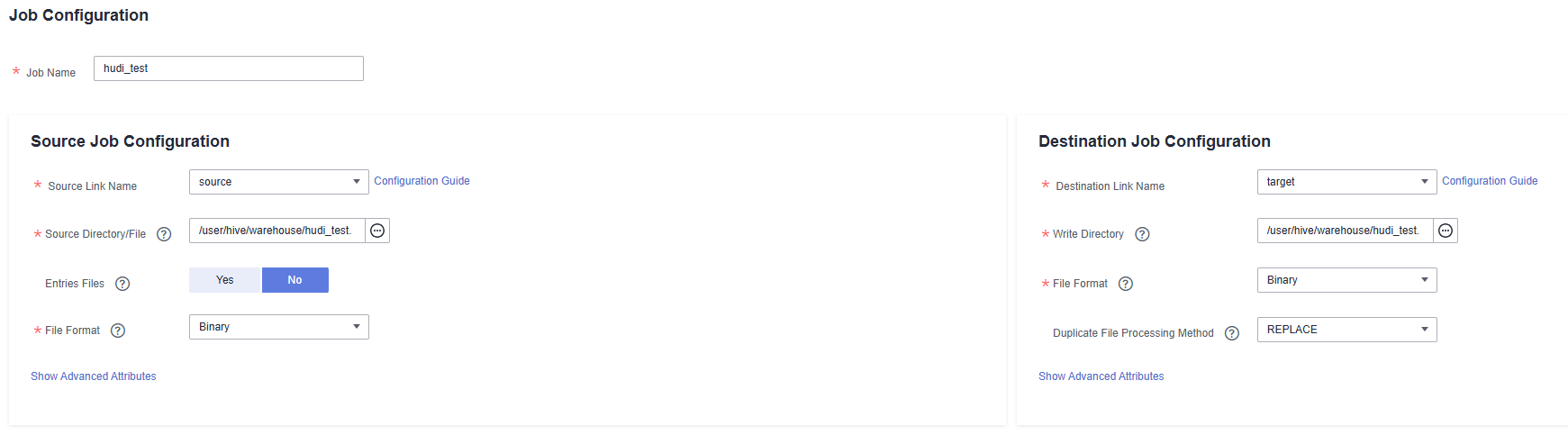
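If you generate job names in a script, a quick local check against the Job Name rule above can catch invalid names before you enter them in the console. is_valid_job_name is a hypothetical helper, and it assumes that "letters" means ASCII letters only.

```shell
# is_valid_job_name NAME   (hypothetical helper)
# Succeeds if NAME has 1 to 256 characters, all of them ASCII letters,
# digits, or underscores (the Job Name rule; ASCII-only is an assumption).
is_valid_job_name() {
  case $1 in
    '' | *[!A-Za-z0-9_]*) return 1 ;;   # empty, or contains a disallowed character
  esac
  [ "${#1}" -le 256 ]                   # enforce the maximum length
}

# Example:
#   is_valid_job_name hudi_migration_01 && echo valid    # prints "valid"
#   is_valid_job_name hudi-migration    || echo invalid  # prints "invalid"
```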

- Set job parameters for the source and destination links.

Configure source job parameters by referring to From HDFS. You can set Directory Filter and File Filter to specify the directories and files to be migrated.

Configure destination job parameters by referring to To HDFS.

- If you use CDM to perform full data migration, select REPLACE for Duplicate File Processing Method in the Destination Job Configuration area.

- If you use CDM to perform incremental data migration, select Skip for Duplicate File Processing Method in the Destination Job Configuration area.

Figure 4 Hudi job parameters

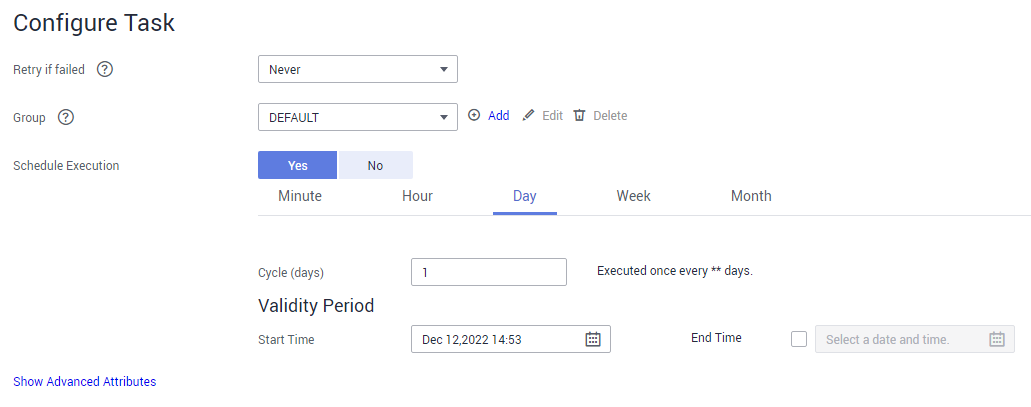

- Click Next. The task configuration page is displayed.

- If you need to periodically migrate new data to the destination cluster, configure a scheduled task on this page. Alternatively, you can configure a scheduled task later.

- If no new data needs to be migrated periodically, skip the configurations on this page and click Save.

Figure 5 Task configuration

- Choose Job Management and click the Table/File Migration tab. Click Run in the Operation column of the job to be executed to start migrating Hudi data. Wait until the job execution is complete.

- Check data after the migration.

- Log in to the node where the client is installed as user root and run the following command:

cd Client installation directory

- Run the following commands to load environment variables:

source bigdata_env

source Hudi/component_env

- If Kerberos authentication has been enabled for the cluster (in security mode), run the following command for user authentication. If Kerberos authentication is disabled for the cluster (in normal mode), user authentication is not required.

kinit Component service user

- Start Spark SQL and query the migrated table data.

spark-sql --master yarn

select count(*) from hudi_test.hudi_table1;
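To compare the result with the source, you can run the same query on a client node of the source cluster and check that the two counts match. The sketch below wraps the query in a hypothetical row_count helper and adds a hypothetical compare_counts helper for the two numbers. It assumes that spark-sql -e prints only the result row on stdout, with log and timing output on stderr (which is discarded); verify this on your client before relying on it. Equal row counts are a quick sanity check, not proof that the data is identical.

```shell
# row_count DB.TABLE   (hypothetical helper)
# Run after "source bigdata_env", "source Hudi/component_env" and, in
# security mode, kinit. Assumes the count is the last line on stdout.
row_count() {
  spark-sql --master yarn -e "select count(*) from $1" 2>/dev/null | tail -n 1
}

# compare_counts SOURCE_COUNT DESTINATION_COUNT   (hypothetical helper)
compare_counts() {
  if [ "$1" -eq "$2" ]; then
    echo "OK: $1 rows"
  else
    echo "MISMATCH: source=$1 destination=$2"
    return 1
  fi
}

# Example: run row_count on each cluster's client node, then compare, e.g.
#   row_count hudi_test.hudi_table1   # prints the row count
#   compare_counts 1000 1000          # prints "OK: 1000 rows"
```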


- (Optional) If new data in the source cluster needs to be periodically migrated to the destination cluster, configure a scheduled task for incremental data migration until all services are migrated to the destination cluster.

- On the Cluster Management page of the CDM console, choose Job Management and click the Table/File Migration tab.

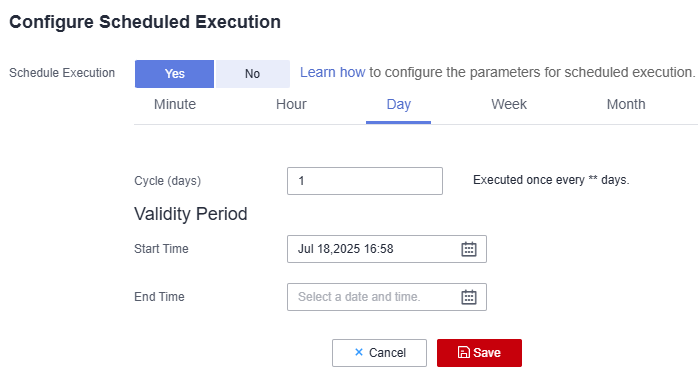

- In the Operation column of the migration job, click More and select Configure Scheduled Execution.

- Enable the scheduled job execution function, configure the execution cycle based on service requirements, and set the end time of the validity period to the time after all services are migrated to the new cluster.

Figure 6 Configuring scheduled execution
