Using Ray, Docker, and vLLM to Manually Deploy a DeepSeek-R1 or DeepSeek-V3 Model on Multi-GPU Linux ECSs

Scenarios

DeepSeek-V3 and DeepSeek-R1 are two high-performance large language models launched by DeepSeek. DeepSeek-R1 is a reasoning model designed for math, code generation, and complex logical reasoning. Reinforcement learning (RL) is applied to this model to improve its reasoning performance. DeepSeek-V3 focuses on general tasks such as natural language processing, knowledge Q&A, and content creation. It aims to balance high performance and low cost and is suitable for applications such as intelligent customer service and personalized recommendation. This section describes how to use vLLM to quickly deploy a DeepSeek-R1 or DeepSeek-V3 model.

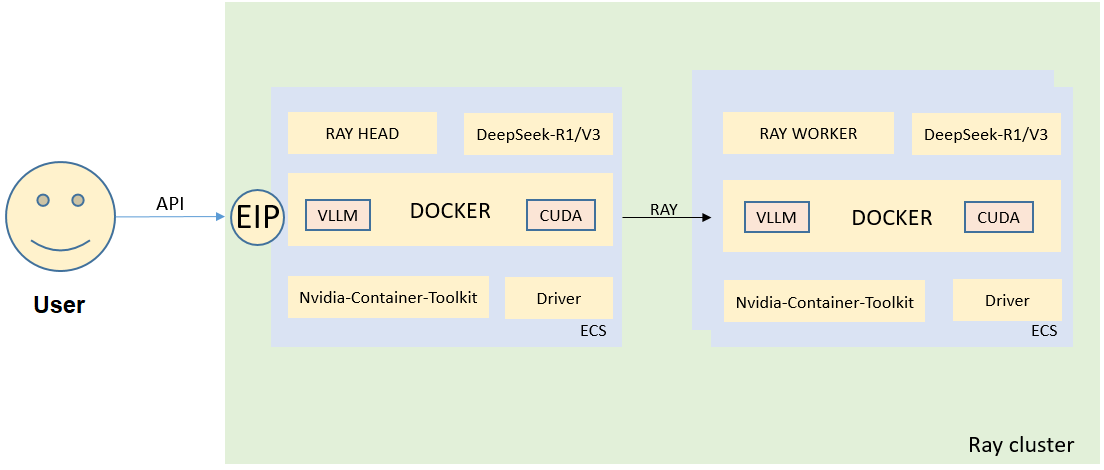

Solution Architecture

Advantages

Deploying DeepSeek-R1 and DeepSeek-V3 manually with Ray, Docker, and vLLM makes each model dependency explicit and lets you evaluate the inference performance of the models directly.

Resource Planning

| Resource | Description | Cost |
|---|---|---|
| VPC | VPC CIDR block: 192.168.0.0/16 | Free |
| VPC subnet | | Free |
| Security group | Inbound rule: | Free |
| ECS | | The following resources generate costs: For billing details, see Billing Mode Overview. |

| No. | Model Name | Minimum Flavor | GPU | Nodes |
|---|---|---|---|---|
| 0 | DeepSeek-R1, DeepSeek-V3 | p2s.16xlarge.8 | V100 (32 GiB) × 8 | 8 |
| | | p2v.16xlarge.8 | V100 (16 GiB) × 8 | 16 |
| | | pi2.4xlarge.4 | T4 (16 GiB) × 8 | 16 |

Contact Huawei Cloud technical support to select GPU ECSs suitable for your deployment.
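As a rough sanity check on the node counts in the table, the following sketch multiplies out the aggregate GPU memory of each configuration and compares it with the 642 GB model size quoted in the download step of this section. The 0.9 factor mirrors the --gpu_memory_utilization value used in the vllm serve step. The helper name and the comparison itself are illustrative assumptions. The check only compares raw capacity with the on-disk size; actual memory use also depends on the data type, KV cache, and activations.

```python
# Rough capacity check for the flavors listed in the table.
# Assumption: GPU memory is given in GiB and the model size in decimal GB.

GIB = 1024 ** 3
GB = 1000 ** 3

CONFIGS = {
    "p2s.16xlarge.8": {"gpu_mem_gib": 32, "gpus_per_node": 8, "nodes": 8},
    "p2v.16xlarge.8": {"gpu_mem_gib": 16, "gpus_per_node": 8, "nodes": 16},
    "pi2.4xlarge.4": {"gpu_mem_gib": 16, "gpus_per_node": 8, "nodes": 16},
}

MODEL_SIZE_GB = 642  # on-disk size quoted in the download step


def usable_gpu_memory_gb(cfg, utilization=0.9):
    """Aggregate GPU memory across all nodes, scaled by the utilization cap."""
    total_bytes = cfg["gpu_mem_gib"] * cfg["gpus_per_node"] * cfg["nodes"] * GIB
    return total_bytes * utilization / GB


for flavor, cfg in CONFIGS.items():
    usable = usable_gpu_memory_gb(cfg)
    print(f"{flavor}: {usable:.0f} GB usable, "
          f"{usable - MODEL_SIZE_GB:.0f} GB above the on-disk model size")
```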

Manually Deploying a DeepSeek-R1 or DeepSeek-V3 Model Using Ray, Docker, and vLLM

To use Ray, Docker, and vLLM to manually deploy a DeepSeek-R1 or DeepSeek-V3 model on multi-GPU Linux ECSs, do as follows:

- Create two GPU ECSs.

- Check the GPU driver and CUDA versions.

- Install Ray.

- Install Docker.

- Install the NVIDIA Container Toolkit.

- Install dependencies, such as modelscope.

- Download a Docker image.

- Download the run_cluster.sh script.

- Download the DeepSeek-R1 or DeepSeek-V3 model file.

- Start the head node and all worker nodes of the Ray cluster.

- In the container on the head node, run vllm serve to start the large model.

- Call a model API to test the model performance.

Implementation Procedure

- Create two GPU ECSs.

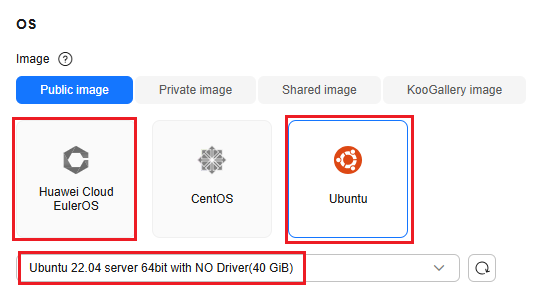

- Select the public image Huawei Cloud EulerOS 2.0 or Ubuntu 22.04 without a driver installed. Ubuntu 22.04 is used as an example.

Figure 2 Selecting an image

- Select Auto assign for EIP. EIPs will be assigned for downloading dependencies and calling model APIs.


- Check the GPU driver and CUDA versions.

Install driver version 535 and CUDA 12.2. For details, see Manually Installing a Tesla Driver on a GPU-accelerated ECS.
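After the driver is installed, the versions can be checked with nvidia-smi. The following is a minimal sketch that extracts the driver and CUDA versions from the nvidia-smi header and compares them with the versions named in this step. The function names are hypothetical, and the embedded sample line only illustrates the header layout; it is not output captured from an ECS.

```python
import re
import subprocess


def parse_versions(smi_output):
    """Extract (driver_version, cuda_version) from nvidia-smi header text."""
    driver = re.search(r"Driver Version:\s*([\d.]+)", smi_output)
    cuda = re.search(r"CUDA Version:\s*([\d.]+)", smi_output)
    return (driver.group(1) if driver else None,
            cuda.group(1) if cuda else None)


def meets_requirements(driver, cuda, driver_major="535", cuda_version="12.2"):
    """True if the driver major version and the CUDA version match this guide."""
    if driver is None or cuda is None:
        return False
    return driver.split(".")[0] == driver_major and cuda == cuda_version


def check_local_gpu():
    """Run nvidia-smi on this machine and report the result (not called here)."""
    out = subprocess.run(["nvidia-smi"], capture_output=True,
                         text=True, check=True).stdout
    driver, cuda = parse_versions(out)
    return driver, cuda, meets_requirements(driver, cuda)


# Demo on an illustrative header line.
sample = "| NVIDIA-SMI 535.54.03    Driver Version: 535.54.03    CUDA Version: 12.2  |"
print(parse_versions(sample), meets_requirements(*parse_versions(sample)))
```

On a GPU ECS, call check_local_gpu() to run the same check against the real nvidia-smi output.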

- Install Ray.

- Install Python 3 and pip.

apt-get install -y python3 python3-pip

- Install Ray.

pip install -U ray

- Verify the installation.

python3 -c "import ray; ray.init()"


- Install Docker.

- Update the package index and install dependencies.

apt-get update
apt-get install -y ca-certificates curl gnupg lsb-release

- Add Docker's official GPG key.

mkdir -p /etc/apt/keyrings
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

- Set the APT source for Docker.

echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu \
  $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

- Install Docker Engine.

apt update
apt install docker-ce docker-ce-cli containerd.io docker-compose-plugin

- Configure registry mirrors for Docker Hub.

cat <<EOF > /etc/docker/daemon.json
{
  "registry-mirrors": [
    "https://docker.m.daocloud.io",
    "https://registry.cn-hangzhou.aliyuncs.com"
  ]
}
EOF
systemctl restart docker

- Check whether Docker is installed successfully.

docker --version
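The registry mirror step writes /etc/docker/daemon.json from scratch, which replaces any settings already in that file. If the file may already contain settings, merging is safer. The following is a minimal sketch, assuming the same two mirror URLs as in this guide; the function names are hypothetical.

```python
import json
from pathlib import Path

MIRRORS = [
    "https://docker.m.daocloud.io",
    "https://registry.cn-hangzhou.aliyuncs.com",
]


def merge_mirrors(config, mirrors):
    """Return a copy of config with mirrors added, keeping all existing keys."""
    merged = dict(config)
    existing = list(merged.get("registry-mirrors", []))
    for url in mirrors:
        if url not in existing:
            existing.append(url)
    merged["registry-mirrors"] = existing
    return merged


def update_daemon_json(path="/etc/docker/daemon.json"):
    """Read, merge, and rewrite daemon.json (run as root; not called here)."""
    p = Path(path)
    text = p.read_text() if p.exists() else ""
    config = json.loads(text) if text.strip() else {}
    p.write_text(json.dumps(merge_mirrors(config, MIRRORS), indent=2) + "\n")


# Demo on an in-memory configuration with one pre-existing key.
print(json.dumps(merge_mirrors({"log-driver": "json-file"}, MIRRORS), indent=2))
```

After calling update_daemon_json(), restart Docker with systemctl restart docker so that the change takes effect.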


- Install the NVIDIA Container Toolkit.

Ubuntu 22.04 is used as an example. For details about how to install the NVIDIA Container Toolkit on other OSs, see Installing the NVIDIA Container Toolkit.

- Add NVIDIA's official GPG key and the APT source for the NVIDIA Container Toolkit.

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \
  && curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \
    sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \
    sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

- (Optional) Enable the experimental packages in the APT source for the NVIDIA Container Toolkit.

sed -i -e '/experimental/ s/^#//g' /etc/apt/sources.list.d/nvidia-container-toolkit.list

- Update the package index and install the NVIDIA Container Toolkit.

apt update
apt install -y nvidia-container-toolkit

- Configure Docker to use the NVIDIA Container Toolkit.

nvidia-ctk runtime configure --runtime=docker
systemctl restart docker
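The nvidia-ctk runtime configure command updates /etc/docker/daemon.json so that Docker can use the NVIDIA runtime. The following is a minimal sketch of a check that the file contains an nvidia entry under runtimes. The function names are hypothetical, and the sample dictionary is an assumed shape used only for the demo; inspect the real file on your ECS.

```python
import json
from pathlib import Path


def has_nvidia_runtime(config):
    """True if the parsed daemon.json registers a runtime named 'nvidia'."""
    return "nvidia" in config.get("runtimes", {})


def check_daemon_json(path="/etc/docker/daemon.json"):
    """Load daemon.json from disk and run the check (not called here)."""
    return has_nvidia_runtime(json.loads(Path(path).read_text()))


# Demo on an assumed configuration shape.
sample = {
    "registry-mirrors": ["https://docker.m.daocloud.io"],
    "runtimes": {"nvidia": {"args": [], "path": "nvidia-container-runtime"}},
}
print(has_nvidia_runtime(sample))
```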


- Install dependencies, such as modelscope.

- Upgrade pip.

python3 -m pip install --upgrade pip -i https://mirrors.tuna.tsinghua.edu.cn/pypi/web/simple

- Install modelscope.

pip install modelscope -i https://mirrors.tuna.tsinghua.edu.cn/pypi/web/simple

ModelScope is an open-source model platform in China. Downloading models from ModelScope is fast if you are in China. If you are outside China, download models from Hugging Face instead.


- Download a Docker image.

You can download the latest container image provided by vLLM or the image provided by the Huawei Cloud heterogeneous computing team.

- Download the latest official vLLM image.

docker pull vllm/vllm-openai:latest

- Download an image provided by the Huawei Cloud heterogeneous computing team.

docker pull swr.cn-north-4.myhuaweicloud.com/hgcs/vllm0.7.3-pt312-ray2.43-cuda12.2:latest

The vLLM version is v0.7.3. It will continue to be updated.


- Download the run_cluster.sh script.

Download the run_cluster.sh file from https://github.com/vllm-project/vllm/blob/main/examples/online_serving/run_cluster.sh to start the Ray cluster.
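The link above points to the GitHub page that displays the file, so fetching it directly with a command-line tool returns HTML rather than the script. The following is a minimal sketch that rewrites the page URL into its raw.githubusercontent.com form and downloads the script. The function names are hypothetical, and the sketch assumes that the file still exists at this path on the main branch.

```python
import urllib.request

BLOB_URL = ("https://github.com/vllm-project/vllm/blob/main/"
            "examples/online_serving/run_cluster.sh")


def to_raw_url(blob_url):
    """Rewrite a github.com 'blob' page URL into its raw-content URL."""
    prefix = "https://github.com/"
    if not blob_url.startswith(prefix) or "/blob/" not in blob_url:
        raise ValueError(f"not a GitHub blob URL: {blob_url}")
    owner_repo, path = blob_url[len(prefix):].split("/blob/", 1)
    return f"https://raw.githubusercontent.com/{owner_repo}/{path}"


def download(blob_url=BLOB_URL, dest="run_cluster.sh"):
    """Fetch the raw script to dest (needs internet access; not called here)."""
    urllib.request.urlretrieve(to_raw_url(blob_url), dest)


print(to_raw_url(BLOB_URL))
```

Call download() on each node that will join the Ray cluster so that run_cluster.sh is available in the working directory.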

- Download the DeepSeek-R1 or DeepSeek-V3 model file.

- Create a script for downloading a model.

vim download_models.py

Write the following content into the script:

from modelscope import snapshot_download
model_dir = snapshot_download('deepseek-ai/DeepSeek-R1', cache_dir='/root', revision='master')

The model name DeepSeek-R1 is used as an example. You can change it to DeepSeek-V3. The local directory for storing the model is /root. You can change it as needed.

- Download a model.

python3 download_models.py

The total size of the model is 642 GB. The download may take 24 hours or longer, depending on the EIP bandwidth.
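To plan for the download, the transfer time can be estimated from the model size and the EIP bandwidth. The following sketch does that arithmetic for a few bandwidth values. The bandwidth values are illustrative assumptions, and real throughput is usually lower than the nominal bandwidth.

```python
MODEL_SIZE_GB = 642  # size quoted above, in decimal gigabytes


def download_hours(size_gb, bandwidth_mbps):
    """Hours needed to transfer size_gb at bandwidth_mbps (megabits per second)."""
    bits = size_gb * 1e9 * 8
    seconds = bits / (bandwidth_mbps * 1e6)
    return seconds / 3600


# Illustrative bandwidths; substitute the bandwidth of your own EIP.
for mbps in (50, 100, 300):
    print(f"{mbps} Mbit/s: about {download_hours(MODEL_SIZE_GB, mbps):.1f} hours")
```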


- Start the head node and all worker nodes of the Ray cluster.

- Start the head node of the Ray cluster.

bash run_cluster.sh ${image-name} ${IP-address-of-the-head-node} --head ${model-directory} -e VLLM_HOST_IP=${IP-address-of-the-head-node} -e ${communications-library-environment-variable}

Example:

bash run_cluster.sh swr.cn-north-4.myhuaweicloud.com/hgcs/vllm0.7.3-pt312-ray2.43-cuda12.2 192.168.200.249 --head /root/deepseek-ai/DeepSeek-R1 -e VLLM_HOST_IP=192.168.200.249 -e GLOO_SOCKET_IFNAME=eth0 &

- Wait until a container is started and access it.

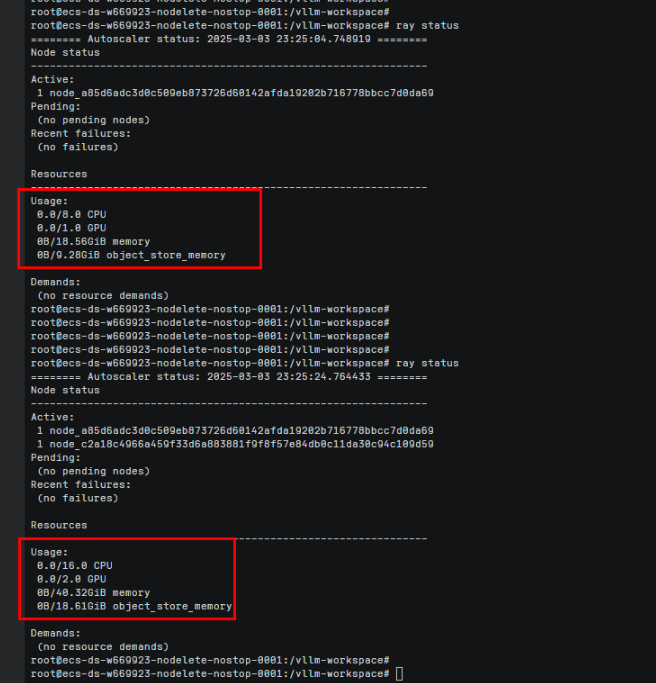

docker exec -it ${container-name} /bin/bash

- Check the Ray cluster status.

ray status

- Start all worker nodes in the Ray cluster.

bash run_cluster.sh ${image-name} ${IP-address-of-the-head-node} --worker ${model-directory} -e VLLM_HOST_IP=${IP-address-of-the-current-node} -e ${communications-library-environment-variable}

Example:

bash run_cluster.sh swr.cn-north-4.myhuaweicloud.com/hgcs/vllm0.7.3-pt312-ray2.43-cuda12.2 192.168.200.249 --worker /root/deepseek-ai/DeepSeek-R1 -e VLLM_HOST_IP=192.168.200.211 -e GLOO_SOCKET_IFNAME=eth0 &

- Wait until a container is started and access it.

docker exec -it ${container-name} /bin/bash

- Check the Ray cluster status.

ray status
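The head and worker commands differ only in the role flag and in the VLLM_HOST_IP value, which is easy to get wrong when there are many workers. The following is a minimal sketch that generates both command forms from one helper, using the example image, IP addresses, and model directory from this step. The helper name is hypothetical, and the worker list contains only the one example worker shown above.

```python
import shlex


def run_cluster_cmd(image, head_ip, role, model_dir, node_ip, ifname="eth0"):
    """Build the run_cluster.sh argument list for a head or worker node."""
    if role not in ("head", "worker"):
        raise ValueError("role must be 'head' or 'worker'")
    return [
        "bash", "run_cluster.sh", image, head_ip, f"--{role}", model_dir,
        "-e", f"VLLM_HOST_IP={node_ip}",           # IP address of the node itself
        "-e", f"GLOO_SOCKET_IFNAME={ifname}",      # NIC used by the comms library
    ]


IMAGE = "swr.cn-north-4.myhuaweicloud.com/hgcs/vllm0.7.3-pt312-ray2.43-cuda12.2"
HEAD_IP = "192.168.200.249"
MODEL_DIR = "/root/deepseek-ai/DeepSeek-R1"
WORKER_IPS = ["192.168.200.211"]  # extend with the IP address of each worker

print(shlex.join(run_cluster_cmd(IMAGE, HEAD_IP, "head", MODEL_DIR, HEAD_IP)))
for worker_ip in WORKER_IPS:
    print(shlex.join(run_cluster_cmd(IMAGE, HEAD_IP, "worker", MODEL_DIR, worker_ip)))
```

Each printed line is meant to be run on the corresponding node; the second positional argument is always the head node IP address, while VLLM_HOST_IP is the IP address of the node running the command.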


- In the container on the head node, run vllm serve to start the large model.

- Access the container on the head node.

- Run the large model.

vllm serve ${model-mapping-address} --served_model_name ${model-name} --tensor-parallel-size ${GPU_NUM} --gpu_memory_utilization 0.9 --max_model_len 20480 --dtype float16 --enforce-eager

${model-mapping-address} is the path inside the container to which the run_cluster.sh script maps the model directory. By default, the value is /root/.cache/huggingface/. If you change the container path in the script, change the value of ${model-mapping-address} accordingly.

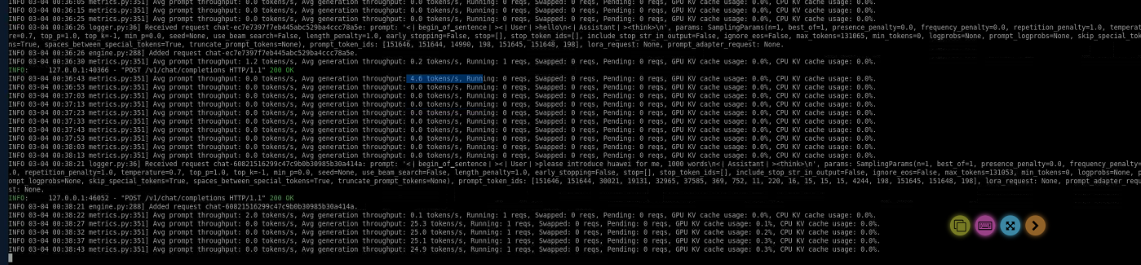
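Loading a model of this size takes a while, so it helps to wait until the server lists the model before sending requests. The following is a minimal sketch that polls the OpenAI-compatible /v1/models endpoint on port 8000, the same address used by the test requests in this guide. The function names, timeout, and polling interval are illustrative assumptions.

```python
import json
import time
import urllib.request


def model_ids(payload):
    """Return the model IDs listed in a /v1/models response body."""
    return [item["id"] for item in payload.get("data", [])]


def wait_for_model(name, base_url="http://localhost:8000",
                   timeout_s=1800, interval_s=10):
    """Poll /v1/models until name is served or timeout_s elapses (not called here)."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(f"{base_url}/v1/models", timeout=5) as resp:
                if name in model_ids(json.load(resp)):
                    return True
        except (OSError, ValueError):
            pass  # server not reachable yet, or the response was not valid JSON
        time.sleep(interval_s)
    return False


# Demo on an assumed response shape.
sample = {"object": "list", "data": [{"id": "DeepSeek-R1", "object": "model"}]}
print(model_ids(sample))
```

In the head node container, wait_for_model("DeepSeek-R1") returns True once the name passed to --served_model_name appears in the list.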

- Call a model API to test the model performance.

- Call an API to chat.

curl http://localhost:8000/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "DeepSeek-R1",
    "messages": [{"role": "user", "content": "hello\n"}]
  }'

- If a streaming conversation is required, add the stream parameter.

curl http://localhost:8000/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "DeepSeek-R1",
    "messages": [{"role": "user", "content": "hello\n"}],
    "stream": true
  }'

You can also use an EIP to call the model API from a local Postman client or your own service.
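The same requests can be sent from your own service. The following is a minimal sketch of a client that uses only the Python standard library, assuming the server address and model name from the curl examples. The function names are hypothetical, and the stream parser assumes the OpenAI-style format in which each chunk arrives on a line that starts with "data:" and the stream ends with "data: [DONE]".

```python
import json
import urllib.request


def build_request(prompt, model="DeepSeek-R1", stream=False,
                  base_url="http://localhost:8000"):
    """Build a POST request for the chat completions endpoint."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    if stream:
        body["stream"] = True
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )


def delta_text(sse_line):
    """Return the text fragment carried by one 'data: ...' stream line, or ''."""
    line = sse_line.strip()
    if not line.startswith("data:"):
        return ""
    data = line[len("data:"):].strip()
    if data == "[DONE]":
        return ""
    choices = json.loads(data).get("choices") or []
    if not choices:
        return ""
    return choices[0].get("delta", {}).get("content") or ""


def stream_chat(prompt):
    """Print the reply as it arrives (needs a running server; not called here)."""
    with urllib.request.urlopen(build_request(prompt, stream=True)) as resp:
        for raw in resp:
            print(delta_text(raw.decode("utf-8")), end="", flush=True)
    print()


# Demo of the parser on an illustrative stream line.
print(delta_text('data: {"choices": [{"delta": {"content": "Hi"}}]}'))
```

To call the API from outside the ECS, pass base_url with the EIP of the head node instead of localhost.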

