Deploying a Quantized DeepSeek Model with Ollama on a Single Server (Linux)

Scenarios

Quantization reduces the numerical precision of model parameters, for example by converting 32-bit floating-point weights into 8-bit or 4-bit integers. This compresses the model and reduces the GPU memory and compute needed to run it, which improves inference efficiency and lowers energy consumption. The trade-off is some loss of model accuracy. This section describes how to quickly deploy a quantized DeepSeek model with Ollama.
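To make the memory savings concrete, the following back-of-the-envelope sketch estimates how much storage the weights alone need for a given parameter count and bit width. It assumes every parameter uses exactly the stated number of bits. It ignores the KV cache, activations, runtime overhead, and the per-block scale factors that real quantization formats store, so actual GPU memory use is higher. The function name estimate_weight_gib is hypothetical.

```shell
# estimate_weight_gib PARAMS_IN_BILLIONS BITS_PER_WEIGHT
# Hypothetical helper: prints the approximate size of the model weights
# in GiB (weights only, no KV cache or runtime overhead).
estimate_weight_gib() {
    awk -v p="$1" -v b="$2" 'BEGIN {
        bytes = p * 1e9 * b / 8              # total bits / 8 = bytes
        printf "%.2f\n", bytes / (1024 ^ 3)  # bytes -> GiB
    }'
}

# Compare FP32, 8-bit, and 4-bit storage for a 7B-parameter model.
for bits in 32 8 4; do
    printf '7B @ %2s-bit: %s GiB\n' "$bits" "$(estimate_weight_gib 7 "$bits")"
done
```

For 7 billion parameters this gives about 26.08 GiB at 32 bits, 6.52 GiB at 8 bits, and 3.26 GiB at 4 bits, which helps explain why the smaller quantized models can run on a single 16 GiB GPU.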

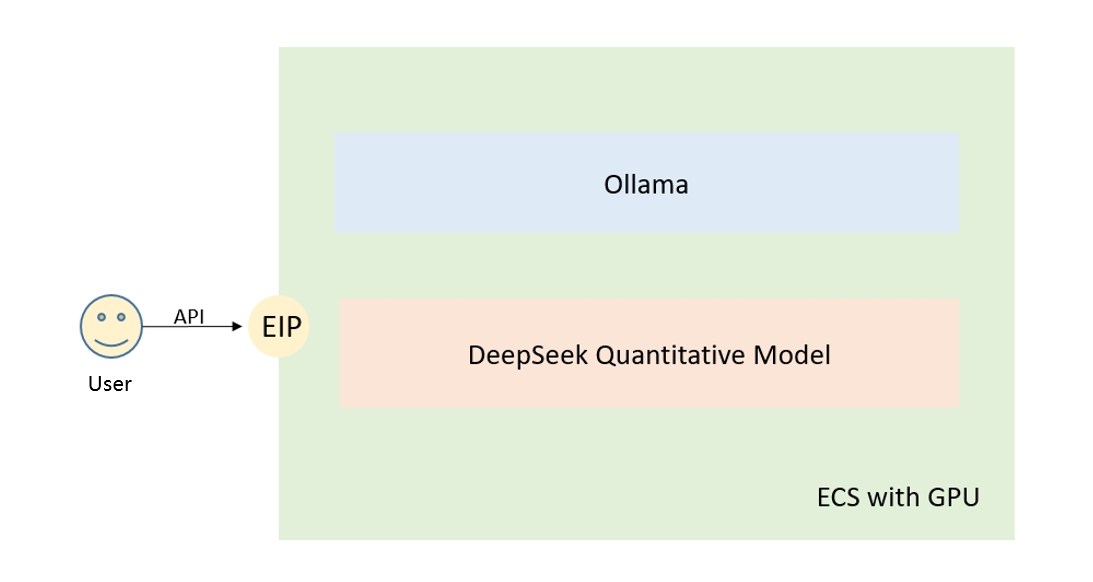

Solution Architecture

Advantages

Deploying distilled DeepSeek models from scratch with Ollama gives you a thorough understanding of the model runtime dependencies. With only a small amount of resources, you can quickly and efficiently connect the model to production services and gain finer control over performance and cost.

Resource Planning

| Resource | Description | Cost |
|---|---|---|
| VPC | VPC CIDR block: 192.168.0.0/16 | Free |
| VPC subnet | | Free |
| Security group | Inbound rule: | Free |
| ECS | | The following resources generate costs: For billing details, see Billing Mode Overview. |

| No. | Model Name | Minimum Flavor | GPU |
|---|---|---|---|
| 0 | deepseek-r1:7b, deepseek-r1:8b | p2s.2xlarge.8 | V100 (32 GiB) × 1 |
| | | p2v.4xlarge.8 | V100 (16 GiB) × 1 |
| | | pi2.4xlarge.4 | T4 (16 GiB) × 1 |
| | | g6.18xlarge.7 | T4 (16 GiB) × 1 |
| 1 | deepseek-r1:14b | p2s.4xlarge.8 | V100 (32 GiB) × 1 |
| | | p2v.8xlarge.8 | V100 (16 GiB) × 1 |
| | | pi2.8xlarge.4 | T4 (16 GiB) × 1 |
| 2 | deepseek-r1:32b | p2s.8xlarge.8 | V100 (32 GiB) × 1 |
| | | p2v.16xlarge.8 | V100 (16 GiB) × 2 |
| 3 | deepseek-r1:70b | p2s.16xlarge.8 | V100 (32 GiB) × 2 |

Contact Huawei Cloud technical support to select GPU ECSs suitable for your deployment.
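Once the ECS is running and the NVIDIA driver is installed, you can compare the GPUs actually present with the flavor table above. The following sketch reads the per-GPU memory.total values that nvidia-smi prints (in MiB, without units) and reports the GPU count and total memory. The function name summarize_gpus is hypothetical.

```shell
# summarize_gpus: hypothetical helper. Reads one memory.total value (MiB)
# per line from stdin and prints the GPU count and total GPU memory.
summarize_gpus() {
    awk 'NF { n++; sum += $1 } END { printf "%d GPU(s), %d MiB total\n", n, sum }'
}

# On the ECS (requires the NVIDIA driver):
# nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits | summarize_gpus
```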

Deploying a DeepSeek Distillation Model with Ollama

To manually deploy a quantized DeepSeek model on a Linux ECS with Ollama, do as follows:

Implementation Procedure

- Create a GPU ECS.

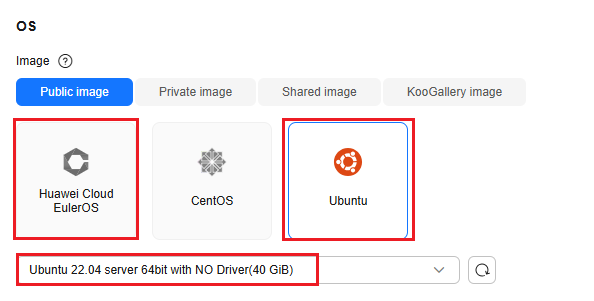

- Select the public image Huawei Cloud EulerOS 2.0 or Ubuntu 22.04 without a driver installed.

Figure 2 Selecting an image

- Select Auto assign for EIP. An EIP will be assigned for downloading dependencies and calling model APIs.


- Check the GPU driver and CUDA versions.

Install driver version 535 and CUDA 12.2. For details, see Manually Installing a Tesla Driver on a GPU-accelerated ECS.
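nvidia-smi reports the installed driver version. The "CUDA Version" in its banner is the newest CUDA version that the driver supports, while `nvcc --version` reports the installed CUDA toolkit, provided the toolkit's bin directory is on PATH. The sketch below checks only the driver's major version against the 535 recommended here; the function names driver_major and check_driver are hypothetical.

```shell
# driver_major VERSION: prints the part before the first dot (535.161.08 -> 535).
driver_major() {
    printf '%s\n' "${1%%.*}"
}

# check_driver REQUIRED_MAJOR [VERSION]
# Hypothetical helper. If VERSION is omitted, it is read from nvidia-smi
# (first GPU only). Returns non-zero if the major version does not match.
check_driver() {
    required="$1"
    version="${2:-$(nvidia-smi --query-gpu=driver_version --format=csv,noheader | head -n 1)}"
    if [ "$(driver_major "$version")" = "$required" ]; then
        echo "OK: driver $version"
    else
        echo "Unexpected driver: $version (expected $required.x)" >&2
        return 1
    fi
}
```

On the ECS, run `check_driver 535` after installing the driver.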

- Install Ollama.

- Download the Ollama installation script.

curl -fsSL https://ollama.com/install.sh -o ollama_install.sh
chmod +x ollama_install.sh

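- Install Ollama. The sed command rewrites the download URLs in the script so that Ollama v0.5.7 is fetched from GitHub Releases.

sed -i 's|https://ollama.com/download/|https://github.com/ollama/ollama/releases/download/v0.5.7/|' ollama_install.sh
sh ollama_install.sh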

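The sed edit in the installation step can be wrapped in a small function so that the pinned release becomes a parameter. This is a sketch: the function name pin_ollama_version is hypothetical, and it assumes, as the original sed command does, that the install script downloads from the https://ollama.com/download/ prefix and that the GitHub release publishes assets under the same file names. Check the release page before pinning a version other than v0.5.7.

```shell
# pin_ollama_version VERSION SCRIPT
# Hypothetical helper: rewrites the download base URL in the Ollama install
# script so binaries are fetched from the GitHub release VERSION (e.g. v0.5.7).
pin_ollama_version() {
    version="$1"
    script="$2"
    sed -i "s|https://ollama.com/download/|https://github.com/ollama/ollama/releases/download/${version}/|g" "$script"
}
```

For example, `pin_ollama_version v0.5.7 ollama_install.sh && sh ollama_install.sh`. Afterwards, `ollama --version` prints the installed version, and on systemd-based images the installer registers an `ollama` service whose state `systemctl status ollama` shows.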

- Download the large model file.

Download only the models you need, choosing sizes that match your GPU flavor in the Resource Planning table.

ollama pull deepseek-r1:7b
ollama pull deepseek-r1:14b
ollama pull deepseek-r1:32b
ollama pull deepseek-r1:70b
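When you pull several sizes, a small loop keeps the commands in one place and stops at the first failed download. This is a sketch; the function name pull_models is hypothetical, and it uses only the `ollama pull` and `ollama list` commands.

```shell
# pull_models SIZE...
# Hypothetical helper: pulls deepseek-r1:<SIZE> for each size given
# (for example 7b 14b), stopping at the first failure.
pull_models() {
    for size in "$@"; do
        echo "Pulling deepseek-r1:${size}"
        ollama pull "deepseek-r1:${size}" || return 1
    done
    ollama list   # show the models now stored locally
}
```

For example, on a 16 GiB T4 flavor from the table, run `pull_models 7b 8b`.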

- Run the large model using Ollama.

Run the model that you downloaded. This opens an interactive chat session; enter /bye to exit.

ollama run deepseek-r1:7b
ollama run deepseek-r1:14b
ollama run deepseek-r1:32b
ollama run deepseek-r1:70b
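`ollama run` also accepts a prompt as an argument, in which case it prints the reply and exits. That is convenient for a non-interactive check in a script. The sketch below is hypothetical (the function name smoke_test and the prompt text are illustrative) and fails if the model returns no output.

```shell
# smoke_test MODEL
# Hypothetical helper: sends one prompt non-interactively and fails
# if the command errors or the model returns nothing.
smoke_test() {
    model="$1"
    reply=$(ollama run "$model" "Reply with one short sentence.") || return 1
    if [ -z "$reply" ]; then
        echo "No output from $model" >&2
        return 1
    fi
    printf '%s\n' "$reply"
}
```

For example, `smoke_test deepseek-r1:7b`. Afterwards, `ollama ps` lists the loaded models; its PROCESSOR column shows whether a model runs fully on the GPU or is partly offloaded to the CPU.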

- Call a model API to verify that the model works. In addition to its native API under /api, Ollama provides OpenAI-compatible endpoints under /v1.

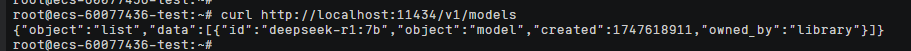

- Call an API to list the models available on the server.

curl http://localhost:11434/v1/models

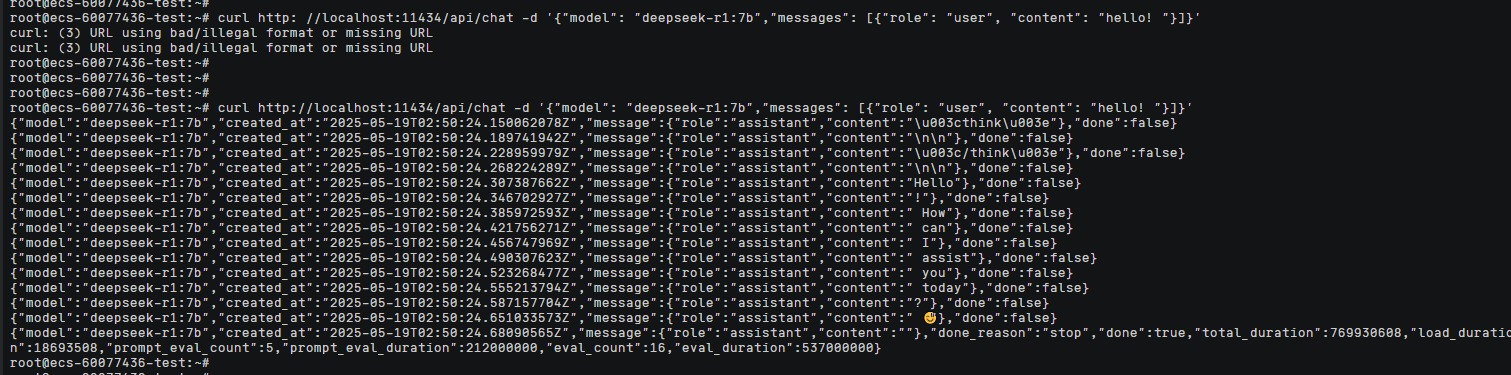
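To use the model list in a script, you can extract only the model IDs from the /v1/models response. This sketch avoids extra tools by using grep and sed, assuming the compact JSON (no spaces around colons) that Ollama returns; if jq is installed, it is the more robust choice. The function name list_model_ids is hypothetical.

```shell
# list_model_ids [BASE_URL]
# Hypothetical helper: prints the "id" of every model returned by the
# OpenAI-compatible /v1/models endpoint, one per line.
list_model_ids() {
    base_url="${1:-http://localhost:11434}"
    curl -fsS "$base_url/v1/models" |
        grep -o '"id":"[^"]*"' |
        sed 's/^"id":"//; s/"$//'
}
```

Run `list_model_ids` on the ECS, or pass `http://<EIP>:11434` (placeholder) from another machine.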

- Call an API to chat.

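curl http://localhost:11434/api/chat -d '{"model": "deepseek-r1:7b","messages": [{"role": "user", "content": "hello!"}]}'

By default, /api/chat streams the reply as a sequence of JSON objects, one per line. Add "stream": false to the request body to receive a single JSON response instead.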

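The model is deployed and verified. You can now call the model API through the EIP from Postman on your local computer or from your own service. Ollama listens only on 127.0.0.1:11434 by default, so remote calls also require setting OLLAMA_HOST=0.0.0.0 in the environment of the Ollama service and adding a security group inbound rule that allows TCP port 11434.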
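The same kind of request can be sent from your own service through the OpenAI-compatible /v1/chat/completions endpoint. This sketch assumes the Ollama server accepts connections on the EIP at port 11434; the function name chat_completion is hypothetical. It builds the JSON body by hand and escapes only backslashes and double quotes, so it is not suitable for prompts that contain newlines or other control characters; a JSON tool such as jq is more robust.

```shell
# chat_completion HOST MODEL PROMPT
# Hypothetical helper: sends one non-streaming request to the
# OpenAI-compatible /v1/chat/completions endpoint and prints the raw JSON.
# HOST is "address:port", for example <EIP>:11434.
chat_completion() {
    host="$1"
    model="$2"
    # Minimal JSON escaping: backslashes and double quotes only (no newlines).
    prompt=$(printf '%s' "$3" | sed 's/\\/\\\\/g; s/"/\\"/g')
    curl -fsS "http://$host/v1/chat/completions" \
        -H "Content-Type: application/json" \
        -d "{\"model\":\"$model\",\"messages\":[{\"role\":\"user\",\"content\":\"$prompt\"}],\"stream\":false}"
}
```

For example, `chat_completion <EIP>:11434 deepseek-r1:7b "hello!"`, where `<EIP>` is a placeholder for your ECS's EIP. The reply text is in `choices[0].message.content` of the response.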


Related Operations

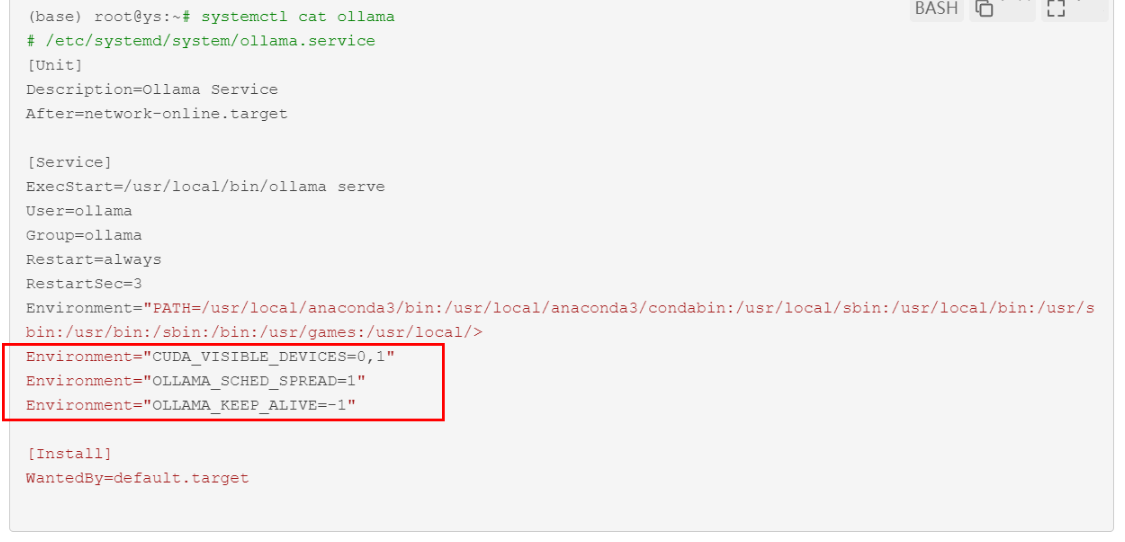

- To run on multiple GPUs, add the following parameters to the Ollama service and set CUDA_VISIBLE_DEVICES to the IDs of the GPUs being used.

vim /etc/systemd/system/ollama.service

- Restart Ollama.

systemctl daemon-reload
systemctl stop ollama.service
systemctl start ollama.service
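Instead of editing the unit file generated by the installer, you can place CUDA_VISIBLE_DEVICES in a systemd drop-in file, the same kind of file that `systemctl edit ollama.service` creates. A drop-in keeps your change separate from the unit file if the installer rewrites it later. This is a sketch; the function name write_gpu_override is hypothetical.

```shell
# write_gpu_override DIR GPU_IDS
# Hypothetical helper: writes a systemd drop-in (DIR/override.conf) that
# limits the Ollama service to the given GPUs, for example GPU_IDS="0,1".
write_gpu_override() {
    dir="$1"
    gpu_ids="$2"
    mkdir -p "$dir"
    cat > "$dir/override.conf" <<EOF
[Service]
Environment="CUDA_VISIBLE_DEVICES=$gpu_ids"
EOF
}
```

For example, as root run `write_gpu_override /etc/systemd/system/ollama.service.d 0,1`, then run the daemon-reload and restart commands above. `systemctl show ollama.service --property=Environment` should then include CUDA_VISIBLE_DEVICES=0,1.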
