Running a SparkSubmit Job

Spark is an open-source parallel data processing framework that helps you quickly develop big data applications for offline processing, stream processing, and interactive analysis. spark-submit is a script provided by Spark for deploying and running Spark applications on different clusters. You can use it to submit compiled Spark jobs to YARN or run them locally. You package the application code and its dependencies into a JAR file (or provide Python files), and spark-submit hands the job to the cluster's resource manager, which allocates resources to run it.

MRS allows you to submit and run your own programs and obtain the results. This section describes how to submit a SparkSubmit job in an MRS cluster.

You can create a job online and submit it for running on the MRS console, or submit a job in CLI mode on the MRS cluster client.

Prerequisites

- You have uploaded the program packages and data files required for running jobs to OBS or HDFS.

- If the job program needs to read and analyze data in the OBS file system, you need to configure storage-compute decoupling for the MRS cluster. For details, see Configuring Storage-Compute Decoupling for an MRS Cluster.

Notes and Constraints

- When the policy of the user group to which an IAM user belongs changes from MRS ReadOnlyAccess to MRS CommonOperations, MRS FullAccess, or MRS Administrator, or vice versa, wait five minutes after user synchronization so that the System Security Services Daemon (SSSD) cache on the cluster nodes can refresh. Submit jobs on the MRS console only after the new policy takes effect. Otherwise, job submission may fail.

- If the IAM username contains spaces (for example, admin 01), you cannot create jobs on the MRS console.

Submitting a Job

You can create and run jobs online using the management console or submit jobs by running commands on the cluster client.

- Prepare the application and data.

This section uses the spark-examples sample program provided by the cluster as an example. The sample program provides multiple functions. You can obtain it from the MRS cluster client at Client installation directory/Spark/spark/examples/jars/spark-examples-XXX.jar. Depending on the cluster version, the Spark folder may be named Spark2x instead; use the actual folder name. Upload the sample program to a directory in HDFS or OBS. For details, see Uploading Application Data to an MRS Cluster.

Program class name: The class name is determined by your program. In this example, the class is org.apache.spark.examples.SparkPi, which estimates the value of Pi (π).
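If you prefer to locate and upload the sample JAR from a shell on the client node, the following bash sketch finds it under either folder name. It assumes the directory layout described above. The function name find_examples_jar and the HDFS directory /user/testuser/program in the usage comment are hypothetical examples; run the upload only after loading the client environment variables (and running kinit if Kerberos authentication is enabled).

```shell
#!/usr/bin/env bash
# Sketch: print the path of the spark-examples JAR under an MRS client directory.
# Assumption: the Spark folder is named Spark or Spark2x, depending on the version.
find_examples_jar() {
  local client_dir="$1" folder jar
  for folder in Spark Spark2x; do
    for jar in "$client_dir/$folder"/spark/examples/jars/spark-examples*.jar; do
      # Without nullglob, an unmatched pattern stays literal, so confirm the file exists.
      if [ -f "$jar" ]; then
        printf '%s\n' "$jar"
        return 0
      fi
    done
  done
  return 1
}

# Usage on the client node (hypothetical HDFS directory):
# jar=$(find_examples_jar /opt/Bigdata/client)
# hdfs dfs -mkdir -p /user/testuser/program
# hdfs dfs -put "$jar" /user/testuser/program/
```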

- Log in to the MRS console.

- On the Active Clusters page, select a running cluster and click its name to switch to the cluster details page.

- On the Dashboard page, click Synchronize on the right side of IAM User Sync to synchronize IAM users.

Perform this step only when Kerberos authentication is enabled for the cluster.

After IAM user synchronization, wait for five minutes before submitting a job. For details about IAM user synchronization, see Synchronizing IAM Users to MRS.

- Click Job Management. On the displayed job list page, click Create.

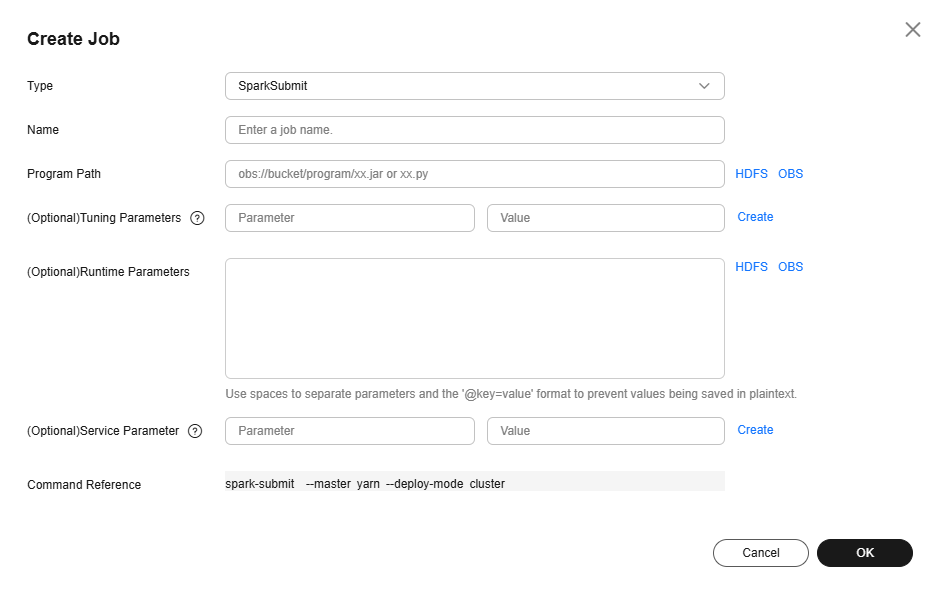

- In Type, select SparkSubmit. Configure other job information.

Figure 1 Adding a Spark job

Table 1 Job parameters

- Name: Job name. It can contain 1 to 128 characters. Only letters, digits, hyphens (-), and underscores (_) are allowed.
  Example: spark_job

- Program Path: Path of the program package to be executed. You can enter the path or click HDFS or OBS to select a file.
  - The path can contain a maximum of 1,023 characters. It cannot contain special characters (;|&>,<'$) and cannot be left blank or consist only of spaces.
  - An OBS program path must start with obs://, for example, obs://wordcount/program/XXX.jar. An HDFS program path must start with hdfs://, for example, hdfs://hacluster/user/XXX.jar.
  - The SparkSubmit job execution program must end with .jar or .py.
  Example: obs://mrs-demotest/program/spark-examples_XXX.jar

- Tuning Parameters: (Optional) Optimization parameters, such as threads, memory, and vCPUs, that optimize resource usage and improve job execution performance. Table 2 lists the common program parameters of Spark jobs. Configure them based on the execution program and cluster resources.
  Example: --class org.apache.spark.examples.SparkPi

- Runtime Parameters: (Optional) Key parameters for program execution. They are defined by the user's program; MRS is only responsible for loading them. Separate multiple parameters with spaces. The value can contain a maximum of 150,000 characters and can be left blank. It cannot contain special characters such as ;|&><'$.
  CAUTION: When entering a parameter that contains sensitive information (for example, a login password), you can add an at sign (@) before the parameter name to encrypt the parameter value. This prevents the sensitive information from being persisted in plaintext. When you view job information on the MRS console, the sensitive information is displayed as *.
  Example: username=testuser @password=User password

- Service Parameter: (Optional) Service parameters for the job. Changes made here apply only to the current job. To make permanent changes for the entire cluster, modify the cluster component parameters as described in Modifying the Configuration Parameters of an MRS Cluster Component.
  For example, if decoupled storage and compute is not configured for the MRS cluster and jobs need to access OBS using an AK/SK, you can add the following service parameters:
  - fs.obs.access.key: key ID for accessing OBS.
  - fs.obs.secret.key: key corresponding to the key ID for accessing OBS.

- Command Reference: Commands submitted to the background for execution when the job is submitted.
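Before filling in the form, you may want to pre-check the Name, Program Path, and Runtime Parameters values. The following bash sketch encodes the constraints listed in Table 1. The helper names are hypothetical, and the console's own validation remains authoritative.

```shell
#!/usr/bin/env bash
# Sketch: pre-check values against the constraints listed in Table 1.
# These helpers only mirror the documented rules; the console performs the real validation.

# Returns 0 if $1 contains any single character listed in $2.
contains_any_char() {
  local text="$1" chars="$2" i
  for (( i = 0; i < ${#chars}; i++ )); do
    [[ "$text" == *"${chars:i:1}"* ]] && return 0
  done
  return 1
}

# Name: 1 to 128 letters, digits, hyphens (-), or underscores (_).
is_valid_job_name() {
  [[ "$1" =~ ^[A-Za-z0-9_-]{1,128}$ ]]
}

# Program Path: 1 to 1,023 characters, none of ;|&>,<'$,
# an obs:// or hdfs:// prefix, and a .jar or .py suffix.
is_valid_program_path() {
  local path="$1"
  (( ${#path} >= 1 && ${#path} <= 1023 )) || return 1
  contains_any_char "$path" ";|&>,<'\$" && return 1
  [[ "$path" == obs://* || "$path" == hdfs://* ]] || return 1
  [[ "$path" == *.jar || "$path" == *.py ]]
}

# Runtime Parameters: at most 150,000 characters (blank is allowed), none of ;|&><'$.
is_valid_runtime_params() {
  (( ${#1} <= 150000 )) || return 1
  ! contains_any_char "$1" ";|&><'\$"
}
```

For example, is_valid_program_path obs://mrs-demotest/program/spark-examples_XXX.jar succeeds, while a path that contains a semicolon or ends in .zip is rejected.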

Table 2 Spark job running program parameters

- --conf: Spark job configuration in property=value format. For more information about parameters required for submitting Spark jobs, see https://spark.apache.org/docs/latest/submitting-applications.html.
  Example: spark.executor.memory=2G

- --conf spark.yarn.maxAppAttempts: Maximum number of attempts YARN makes to run the Spark application, including the first attempt. For example, if this parameter is set to 1, the application runs only once and is not retried. The value should not exceed the global maximum number of attempts configured in YARN.
  Example: 1

- --driver-memory: Amount of memory allocated to the driver process of the Spark job. For example, to allocate 2 GB, set this parameter to 2g; to allocate 512 MB, set it to 512m.
  Example: 2g

- --num-executors: Number of executors to launch for the Spark job.
  Example: 5

- --executor-cores: Number of CPU cores used by each executor.
  Example: 2

- --executor-memory: Amount of memory used by each executor. For example, to allocate 2 GB, set this parameter to 2g; to allocate 512 MB, set it to 512m.
  Example: 2g

- --class: Name of the main class of the job. It is determined by your program and is usually the class that contains the main method.
  Example: org.apache.spark.examples.SparkPi

- --files: Files to upload for the job, such as user-defined configuration files or data files stored in OBS or HDFS. Separate multiple files with commas (,). Spark places these files in the working directory of each executor.

- --jars: JAR files to include on the driver and executor classpaths. Separate multiple JAR files with commas (,).
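As a worked example of the memory format and of how the Table 2 options combine, the following bash sketch converts sizes in MB to the g/m notation and assembles a Tuning Parameters string. The helpers to_spark_mem and build_tuning_params and their argument order are hypothetical; adjust the values to your program and cluster resources.

```shell
#!/usr/bin/env bash
# Sketch: convert a memory size in MB into the format used by
# --driver-memory and --executor-memory (2048 -> 2g, 512 -> 512m).
to_spark_mem() {
  local mb="$1"
  if (( mb > 0 && mb % 1024 == 0 )); then
    echo "$(( mb / 1024 ))g"
  else
    echo "${mb}m"
  fi
}

# Sketch: assemble a Tuning Parameters string from common Table 2 options.
# Arguments (hypothetical helper): main class, number of executors,
# cores per executor, executor memory in MB, driver memory in MB.
build_tuning_params() {
  local main_class="$1" executors="$2" cores="$3" executor_mb="$4" driver_mb="$5"
  printf '%s\n' "--class $main_class --num-executors $executors --executor-cores $cores --executor-memory $(to_spark_mem "$executor_mb") --driver-memory $(to_spark_mem "$driver_mb")"
}

# Usage with the example values from Table 2:
# build_tuning_params org.apache.spark.examples.SparkPi 5 2 2048 2048
```

Whole gigabytes are written with the g suffix and anything else falls back to m, which keeps the value exact.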

- Confirm job configuration information and click OK.

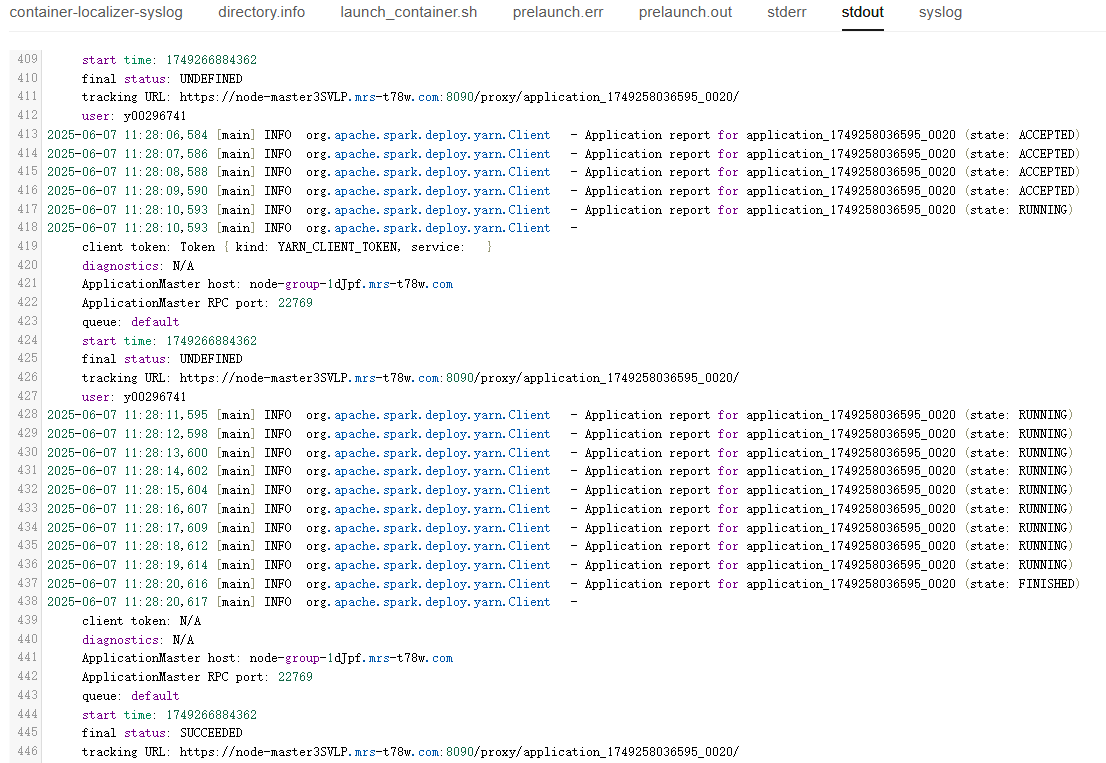

- After the job is submitted, you can view its running status and execution result in the job list. After the job status changes to Completed, you can view the results produced by the program.

In this sample program, you can click View Log to view the detailed execution process of the Spark job.

Figure 2 Viewing the job execution result

During job execution, you can click View Log or choose More > View Details to view program execution details. If the job execution is abnormal or fails, you can locate the fault based on the error information.

A created job cannot be modified. If you need to execute the job again, you can click Clone to quickly copy the created job and adjust required parameters.

Submitting a Job Using the Cluster Client

- Prepare the application and data.

This section uses the spark-examples sample program provided by the cluster as an example. The sample program provides multiple functions. You can obtain it from the MRS cluster client at Client installation directory/Spark/spark/examples/jars/spark-examples-XXX.jar. Depending on the cluster version, the Spark folder may be named Spark2x instead; use the actual folder name. Upload the sample program to a directory in HDFS or OBS. For details, see Uploading Application Data to an MRS Cluster.

Program class name: The class name is determined by your program. In this example, the class is org.apache.spark.examples.SparkPi, which estimates the value of Pi (π).

- If Kerberos authentication has been enabled for the current cluster, create a service user with job submission permissions on FusionInsight Manager in advance. For details, see Creating an MRS Cluster User.

In this example, create human-machine user testuser, and associate the user with user group supergroup and role System_administrator.

- Install an MRS cluster client.

For details, see Installing an MRS Cluster Client.

By default, a client for job submission is preinstalled in the MRS cluster, and you can use it directly. In MRS 3.x or later, the default client installation path is /opt/Bigdata/client on the Master node. In versions earlier than MRS 3.x, the default client installation path is /opt/client on the Master node.
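The version rule above can be expressed as a small bash helper, which may be handy in scripts that run against clusters of different versions. This is a sketch: default_client_dir is a hypothetical name, it takes an MRS version string such as 3.1.0 as input, and how you obtain that string is left to you.

```shell
#!/usr/bin/env bash
# Sketch: default client installation path on the Master node, per the rule above.
# Input: an MRS version string such as 3.1.0 or 2.1.0.
default_client_dir() {
  # Keep only the major version (everything before the first dot).
  local major="${1%%.*}"
  [[ "$major" =~ ^[0-9]+$ ]] || return 1
  if (( major >= 3 )); then
    echo /opt/Bigdata/client
  else
    echo /opt/client
  fi
}
```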

- Log in to the node where the client is located as the MRS cluster client installation user.

For details, see Logging In to an MRS Cluster Node.

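- Run the following command to go to the client installation directory. If the client is installed elsewhere (for example, /opt/client in versions earlier than MRS 3.x), use the actual path: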

cd /opt/Bigdata/client

Run the following command to load the environment variables:

source bigdata_env

If Kerberos authentication is enabled for the current cluster, run the following command to authenticate the user. If Kerberos authentication is disabled for the current cluster, you do not need to run the kinit command.

kinit testuser
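The commands above can be wrapped in a bash function so that a script stops at the first failing step. This is a sketch under stated assumptions: load_client_env is a hypothetical name, bigdata_env is assumed to sit in the client directory as described above, and kinit prompts for the password interactively. Call the function in the current shell (not in a subshell) so that the loaded variables remain available.

```shell
#!/usr/bin/env bash
# Sketch: run the client setup steps above and stop at the first failure.
# Arguments: client installation directory, and optionally a Kerberos principal.
# Pass the principal only if Kerberos authentication is enabled for the cluster.
load_client_env() {
  local client_dir="$1" principal="${2:-}"
  cd "$client_dir" || return 1
  # Load the client environment variables (the later steps rely on SPARK_HOME).
  source ./bigdata_env || return 1
  if [[ -n "$principal" ]]; then
    # kinit prompts for the user's password.
    kinit "$principal" || return 1
  fi
}

# Usage (MRS 3.x default path, Kerberos-enabled cluster):
# load_client_env /opt/Bigdata/client testuser && cd "$SPARK_HOME"
```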

- Run the following command to switch to the Spark component directory:

cd $SPARK_HOME

- Run the following command to submit a Spark job:

./bin/spark-submit --master yarn --deploy-mode client --class org.apache.spark.examples.SparkPi examples/jars/spark-examples_*.jar 10

The command output contains a line similar to the following:

... Pi is roughly 3.1402231402231404

spark-submit parameter description:

- --master: cluster manager to which the job is submitted. In this example, yarn is used.

- --deploy-mode: running mode of the Spark driver. The value can be client or cluster. In this example, the client mode is used to submit the job.

- --class: main class name of the application, which is determined by the application being run.

- examples/jars/spark-examples_*.jar: program run by the Spark job.

- 10: argument passed to the program. For SparkPi, it is the number of slices (partitions) used for the calculation.

- --conf: additional configuration properties of the Spark job. If a keytab file is used for user authentication, you can set the following parameters:

  - spark.yarn.principal: name of the user who submits the job.

  - spark.yarn.keytab: keytab file for user authentication.

For more information about parameters required for submitting Spark jobs, see https://spark.apache.org/docs/latest/submitting-applications.html.
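To switch between client and cluster mode, or to add keytab authentication without retyping the command, the arguments can be built in a bash array as sketched below. build_submit_args and SUBMIT_ARGS are hypothetical names, and the keytab path in the usage comment is a placeholder for your own file.

```shell
#!/usr/bin/env bash
# Sketch: build the spark-submit arguments from the step above in a bash array.
# Arguments: deploy mode (client or cluster), main class, application JAR,
# principal and keytab (pass empty strings to skip keytab authentication),
# followed by any arguments for the program itself.
build_submit_args() {
  (( $# >= 5 )) || return 1
  local deploy_mode="$1" main_class="$2" app_jar="$3" principal="$4" keytab="$5"
  shift 5
  SUBMIT_ARGS=(--master yarn --deploy-mode "$deploy_mode" --class "$main_class")
  if [[ -n "$principal" && -n "$keytab" ]]; then
    SUBMIT_ARGS+=(--conf "spark.yarn.principal=$principal" --conf "spark.yarn.keytab=$keytab")
  fi
  # spark-submit options must come before the application JAR;
  # everything after the JAR is passed to the program.
  SUBMIT_ARGS+=("$app_jar" "$@")
}

# Usage from $SPARK_HOME (the keytab path is a hypothetical placeholder):
# build_submit_args cluster org.apache.spark.examples.SparkPi \
#   "$(ls examples/jars/spark-examples_*.jar)" testuser /opt/testuser/user.keytab 10
# ./bin/spark-submit "${SUBMIT_ARGS[@]}"
```

In cluster mode the driver runs inside YARN, so a line such as "Pi is roughly ..." appears in the application's YARN logs rather than in the terminal.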

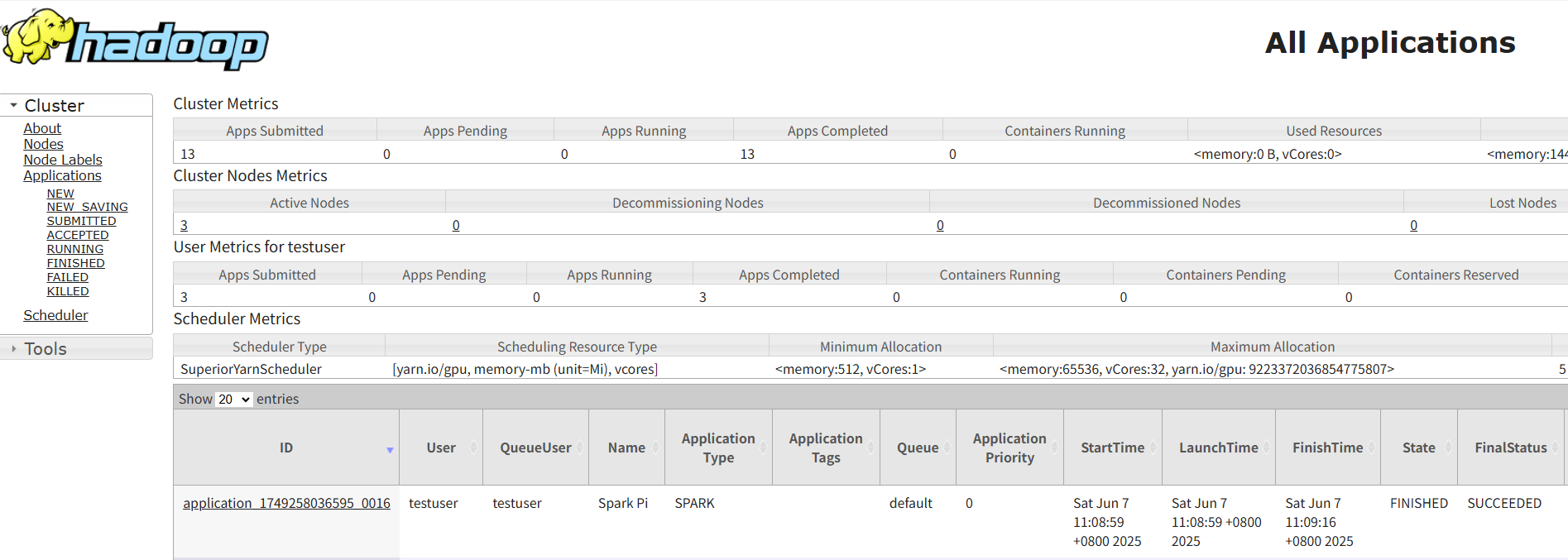

- Log in to FusionInsight Manager as user testuser, choose Cluster > Services > Yarn, and click the hyperlink on the right of ResourceManager Web UI to access the YARN Web UI. Click the application ID of the job to view the job running information and related logs.

Figure 3 Viewing Spark job details
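From the client node, you can also retrieve an application's logs with the YARN CLI. The sketch below pulls the first application ID out of saved spark-submit output and passes it to yarn logs -applicationId. It assumes the output contains an ID of the form application_<digits>_<digits>, the format YARN uses for application IDs. extract_app_id and submit.log are hypothetical names. Note that yarn logs generally returns aggregated logs only when log aggregation is enabled, typically after the application finishes.

```shell
#!/usr/bin/env bash
# Sketch: read spark-submit output on stdin and print the first YARN application ID.
extract_app_id() {
  grep -oE 'application_[0-9]+_[0-9]+' | head -n 1
}

# Usage on the client node (submit.log is a hypothetical file name):
# ./bin/spark-submit --master yarn --deploy-mode client \
#   --class org.apache.spark.examples.SparkPi examples/jars/spark-examples_*.jar 10 2>&1 | tee submit.log
# app_id=$(extract_app_id < submit.log)
# yarn logs -applicationId "$app_id"
```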

Helpful Links

- For details about the differences between the client and cluster modes of Spark jobs, see What Are the Differences Between the Client Mode and Cluster Mode of Spark Jobs?

- If Kerberos authentication is enabled for a cluster but IAM users have not been synchronized, an error is reported when you submit a job. For details about how to handle the error, see What Can I Do If the System Displays a Message Indicating that the Current User Does Not Exist on Manager When I Submit a Job?

- For more Spark job troubleshooting cases, see Job Management FAQs and Spark Troubleshooting.

- For more MRS application development sample programs, see MRS Developer Guide.
