Developing a Spark Job

This section describes how to develop a Spark job on Data Development.

Scenario Description

In most cases, SQL is used to analyze and process data in Data Lake Insight (DLI). However, SQL often cannot express complex processing logic. In such cases, a Spark job can be used instead. This section uses an example to demonstrate how to submit a Spark job on Data Development.

The general submission procedure is as follows:

- Create a DLI cluster. The Spark job runs on the physical resources of this cluster.

- Obtain the demo JAR package of the Spark job and associate it with a resource on Data Development.

- Create a Data Development job and submit it using the DLI Spark node.

Preparations

Obtaining the Spark Job Code

The Spark job code used in this example comes from the Maven repository (download address: Spark job code). Download spark-examples_2.10-1.1.1.jar. The Spark job calculates an approximate value of π.

- After obtaining the JAR package of the Spark job code, upload it to the OBS bucket. In this example, the save path is s3a://dlfexample/spark-examples_2.10-1.1.1.jar.

- In the navigation tree of the console, choose . Create a resource named spark-example on Data Development and associate it with the JAR package uploaded to OBS in the previous step.

Figure 1 Creating a resource
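
For orientation, the upstream SparkPi example estimates π with a Monte Carlo method: it samples random points in a square and counts how many fall inside the inscribed unit circle. The sketch below is a local, single-process Python illustration of that idea, not the code packaged in the JAR. The function name `estimate_pi` and its parameters are chosen here for illustration only. The real job spreads the sampling across Spark partitions.

```python
import random


def estimate_pi(num_samples: int, seed: int = 0) -> float:
    """Estimate pi by sampling points uniformly in the square [-1, 1] x [-1, 1]."""
    rng = random.Random(seed)  # seeded so repeated runs give the same estimate
    inside = 0
    for _ in range(num_samples):
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        # Count the point if it lies inside the unit circle.
        if x * x + y * y <= 1.0:
            inside += 1
    # The circle-to-square area ratio is pi / 4, so scale the hit rate by 4.
    return 4.0 * inside / num_samples


if __name__ == "__main__":
    print("Pi is roughly", estimate_pi(200_000))
```

The accuracy improves slowly with the number of samples, which is why the distributed version benefits from running many samples in parallel.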

Submitting a Spark Job

Create a job on Data Development and submit the Spark job through the job's DLI Spark node.

- Create an empty DLF job named job_spark.

Figure 2 Creating a job

- Go to the job development page, drag the DLI Spark node to the canvas, and click the node to configure node properties.

Figure 3 Configuring node properties

Description of key properties:

- DLI Cluster Name: Name of the Spark cluster created in Preparations.

- Job Running Resource: Maximum CPU and memory resources that can be used when a DLI Spark node is running.

- Major Job Class: Main class of a DLI Spark node. In this example, the main class is org.apache.spark.examples.SparkPi.

- JAR Package: The spark-example resource created in Preparations.

- After the job orchestration is complete, click

to test the job.

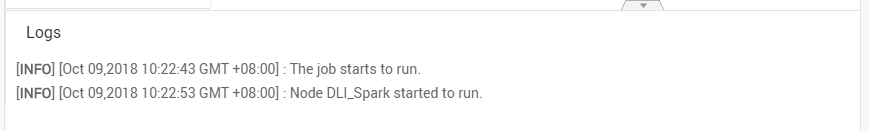

Figure 4 Job logs (for reference only)

to test the job.

Figure 4 Job logs (for reference only)

- If no problems are recorded in logs, click

to save the job.

to save the job.
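
As a rough analogy, the key node properties described above correspond to arguments that would be passed to `spark-submit` on a self-managed Spark installation. The sketch below only illustrates that mapping. The DLI Spark node is configured in the console, not through this command. The helper name `build_spark_submit` and the memory and core values are hypothetical placeholders.

```python
from typing import List


def build_spark_submit(main_class: str, jar_path: str,
                       executor_memory: str, executor_cores: int) -> List[str]:
    """Build a spark-submit argument list from values like the node properties."""
    return [
        "spark-submit",
        "--class", main_class,                    # cf. Major Job Class
        "--executor-memory", executor_memory,     # cf. Job Running Resource (memory)
        "--executor-cores", str(executor_cores),  # cf. Job Running Resource (CPU)
        jar_path,                                 # cf. JAR Package
    ]


if __name__ == "__main__":
    cmd = build_spark_submit(
        main_class="org.apache.spark.examples.SparkPi",
        jar_path="s3a://dlfexample/spark-examples_2.10-1.1.1.jar",
        executor_memory="4g",   # placeholder value, not taken from the example
        executor_cores=2,       # placeholder value, not taken from the example
    )
    print(" ".join(cmd))
```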
