Using Kettle to Import Data

Kettle is an open-source ETL tool. You can use Kettle to extract, transform, import, and load data.

During large-scale data migration, the data import plug-in provided by Kettle imports about 1,500 records per second, which results in a long migration time. In the same environment, the custom data import plug-in integrated with dws-client imports over 7,500 records per second, which is 5 to 15 times faster. These figures are for reference only. Actual throughput depends on factors such as table size, column count, and cluster load.

Therefore, when using Kettle to migrate data, you can use the dws-client-integrated custom import plug-in to greatly improve the data migration speed.
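To make the difference concrete, the following minimal sketch converts the two reference throughput figures into an estimated import time. The workload of 100 million records is a hypothetical value chosen for illustration, and the calculation assumes constant throughput, which real migrations rarely achieve.

```python
def estimated_hours(total_records: int, records_per_second: float) -> float:
    """Return the estimated import time in hours at a constant throughput."""
    if records_per_second <= 0:
        raise ValueError("records_per_second must be positive")
    return total_records / records_per_second / 3600


# Hypothetical workload (not a figure from this guide).
TOTAL_RECORDS = 100_000_000

# Reference throughput figures quoted above, in records per second.
built_in = estimated_hours(TOTAL_RECORDS, 1500)  # plug-in provided by Kettle
custom = estimated_hours(TOTAL_RECORDS, 7500)    # dws-client plug-in, lower bound

print(f"Kettle plug-in:     {built_in:.1f} h")
print(f"dws-client plug-in: {custom:.1f} h")
```

Under these assumptions, the import takes roughly 18.5 hours with the plug-in provided by Kettle and about 3.7 hours with the dws-client plug-in.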

Preparing the Kettle Environment

- Install JDK 11 or later and configure environment variables.

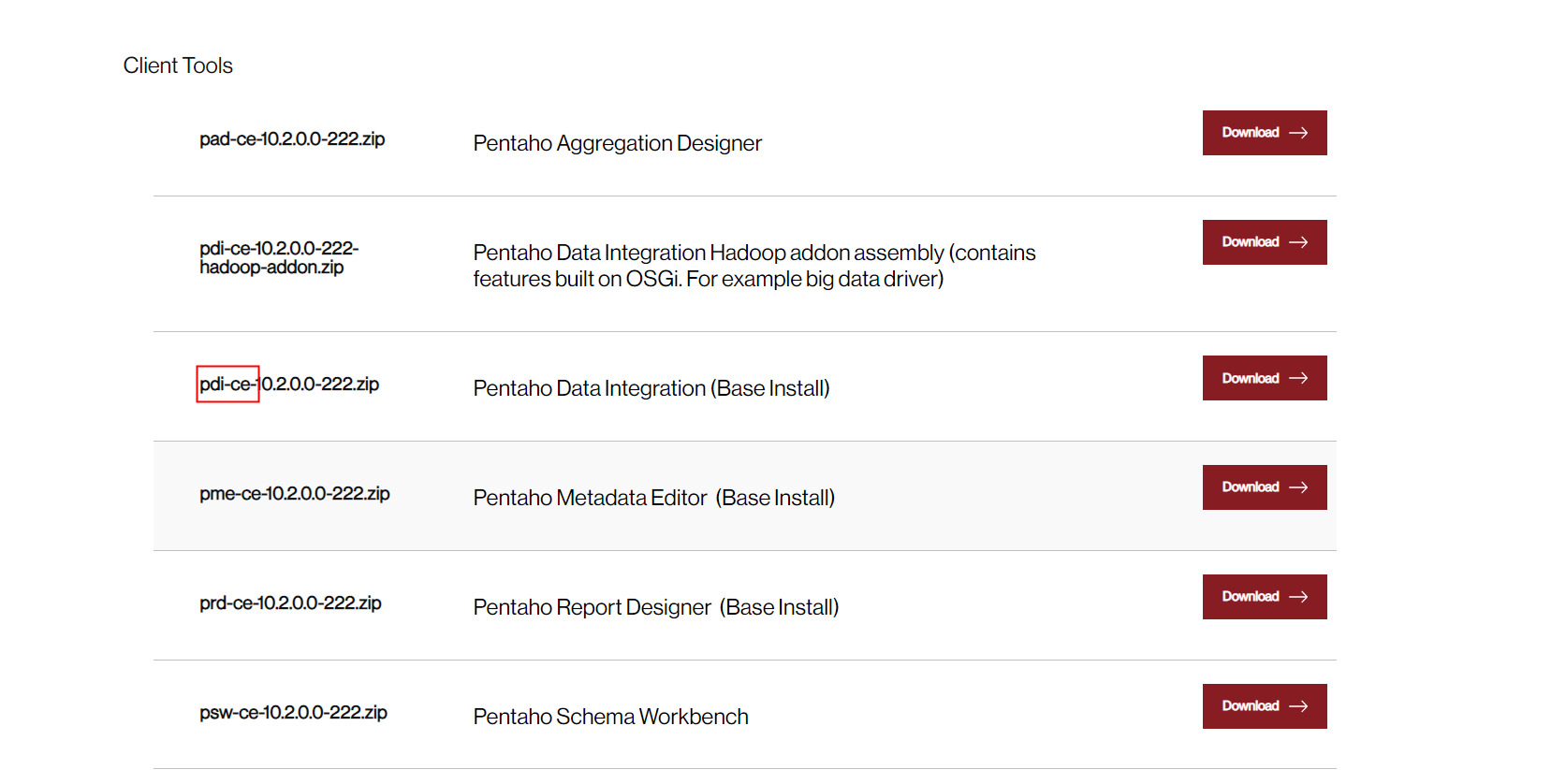

- Download Kettle from the official website.
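Before starting Kettle, it can help to confirm that the JDK found on the PATH meets the JDK 11 requirement. The following sketch runs `java -version` and extracts the major version. The function names are hypothetical helpers, and the parsing assumes the usual `version "..."` banner format printed by common JDK distributions.

```python
import re
import subprocess
from typing import Optional

REQUIRED_MAJOR = 11  # minimum JDK version stated in this guide


def parse_java_major(version_output: str) -> Optional[int]:
    """Extract the major Java version from `java -version` output."""
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        return None
    first = int(match.group(1))
    # Legacy numbering: "1.8.0_281" means Java 8.
    if first == 1 and match.group(2) is not None:
        return int(match.group(2))
    return first


def installed_java_major() -> Optional[int]:
    """Run `java -version`; return the major version, or None if unavailable."""
    try:
        result = subprocess.run(
            ["java", "-version"], capture_output=True, text=True, check=False
        )
    except OSError:
        return None  # java is not on the PATH or cannot be executed
    # The JVM normally prints its version banner to stderr.
    return parse_java_major(result.stderr + result.stdout)


if __name__ == "__main__":
    major = installed_java_major()
    if major is None:
        print("Java was not found or its version could not be parsed.")
    elif major < REQUIRED_MAJOR:
        print(f"Java {major} found; JDK {REQUIRED_MAJOR} or later is required.")
    else:
        print(f"Java {major} found; the requirement is met.")
```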

Installing the dws-client Custom Plug-in

- Download dws-client plug-ins.

- Download dws-kettle-plugin.jar.

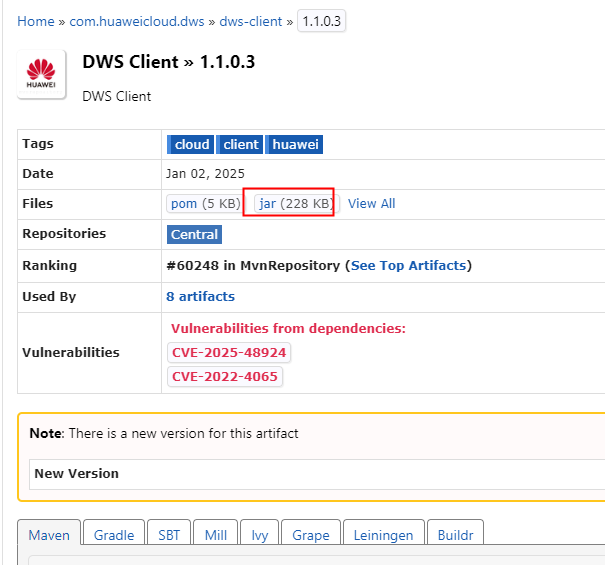

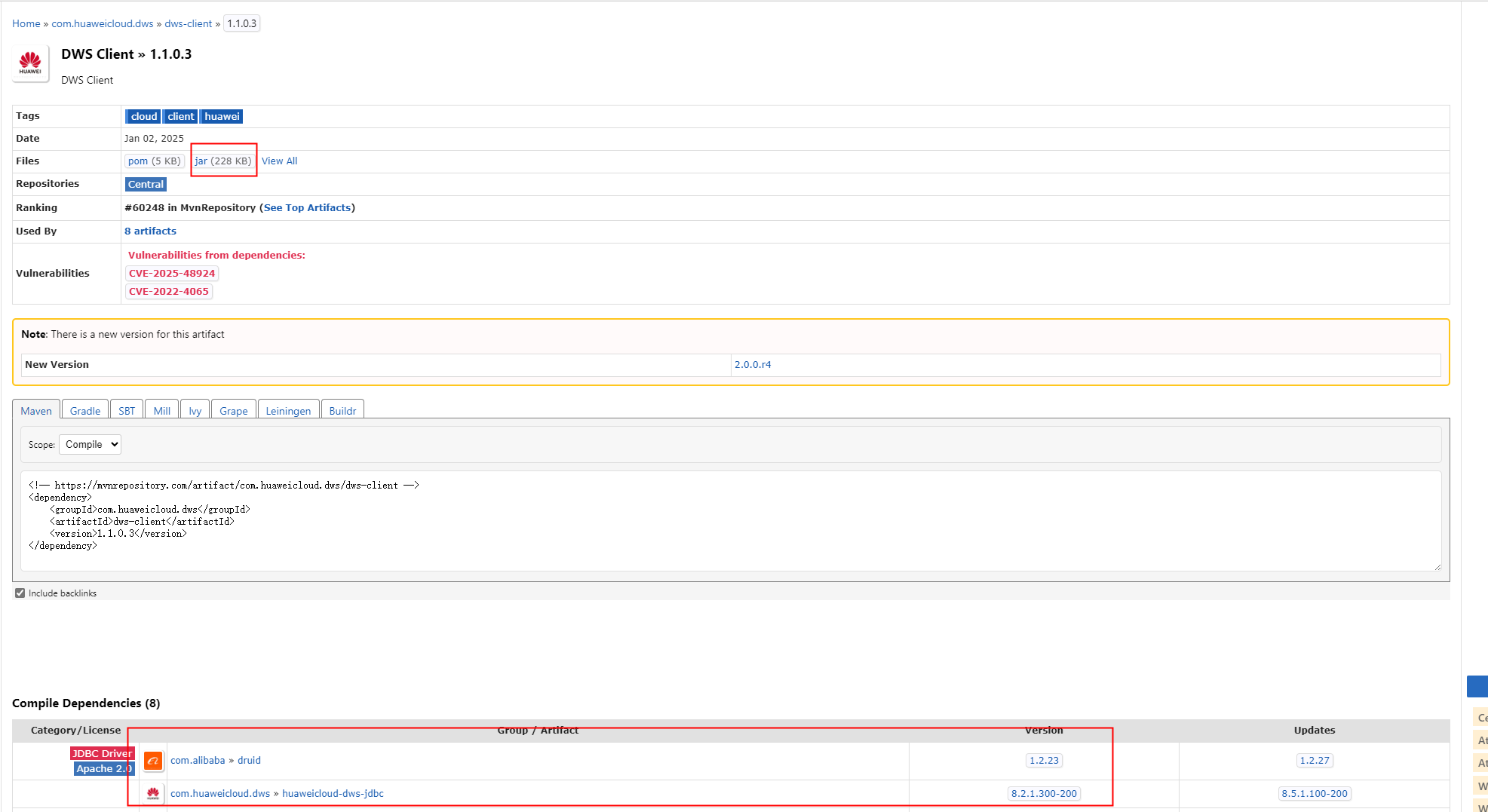

- dws-client.jar: Use 1.1.0.3 version.

- druid.jar: Select the version on which dws-client depends, and obtain druid.jar 1.1.0.3.

You must select the version on which dws-client depends. Otherwise, compatibility issues may occur.

- Download huaweicloud-dws-jdbc.jar and choose the version that is compatible with dws-client. For example, if you are using dws-client 1.1.0.3, choose huaweicloud-dws-jdbc.jar 1.1.0.3.

- Create a directory, for example, dws-plugin, in the Kettle installation directory data-integration\plugins.

- Place dws-kettle-plugin-xxx.jar in the data-integration\plugins\dws-plugin directory.

- Create the lib folder in data-integration\plugins\dws-plugin and place dws-client-xxx.jar and druid-xxx.jar in data-integration\plugins\dws-plugin\lib.

- Place huaweicloud-dws-jdbc-xxx.jar in data-integration\lib.
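The placement steps above can be sketched as a small script that copies each downloaded JAR file to the directory named in this section. This is an illustrative helper, not part of Kettle or dws-client: the function names are hypothetical, and the `xxx` in the file names is the same version placeholder used in the steps above.

```python
import shutil
from pathlib import Path
from typing import Dict, Iterable

PLUGIN_DIR = Path("plugins") / "dws-plugin"

# JAR name prefix -> target directory, relative to data-integration.
DESTINATIONS = {
    "dws-kettle-plugin": PLUGIN_DIR,
    "dws-client": PLUGIN_DIR / "lib",
    "druid": PLUGIN_DIR / "lib",
    "huaweicloud-dws-jdbc": Path("lib"),
}


def destination_for(jar_name: str) -> Path:
    """Return the relative target directory for a JAR file name."""
    if jar_name.endswith(".jar"):
        for prefix, relative_dir in DESTINATIONS.items():
            if jar_name.startswith(prefix):
                return relative_dir
    raise ValueError(f"unexpected file: {jar_name}")


def install_jars(data_integration: Path, jars: Iterable[Path]) -> Dict[str, Path]:
    """Copy each JAR into place, creating directories as needed."""
    placed = {}
    for jar in jars:
        target_dir = data_integration / destination_for(jar.name)
        target_dir.mkdir(parents=True, exist_ok=True)
        placed[jar.name] = Path(shutil.copy2(jar, target_dir))
    return placed


if __name__ == "__main__":
    # Print the planned layout without touching the file system.
    for name in (
        "dws-kettle-plugin-xxx.jar",
        "dws-client-xxx.jar",
        "druid-xxx.jar",
        "huaweicloud-dws-jdbc-xxx.jar",
    ):
        print(f"{name} -> {Path('data-integration') / destination_for(name)}")
```

To copy real files, call `install_jars` with the path of the `data-integration` directory and the paths of the downloaded JAR files.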

Using Kettle to Import Data

- In the Kettle installation directory pdi-ce-10.2.0.0-222\data-integration, double-click Spoon.bat (on Linux, run spoon.sh) to open Spoon, and then choose Create > Conversion to create a conversion task.

If error information is displayed when Spoon starts, the Java version is incorrect. In this case, reinstall Java.

- Add the Table Input node and configure the database connection, tables to be migrated, and table fields. When creating a database connection, you can click Test on the corresponding page to check whether the connection parameters are correctly set. After configuring the SQL statements corresponding to the tables to be migrated and the table fields, you can click Preview to view the preview data to be migrated.

- Add the DWS Table Output node, and configure the database connection, destination table, mapping between destination table fields and data source fields, and other parameters. The DWS Table Output node supports only PostgreSQL as the data source.

- Save the conversion task and start the task.

- View the running result, and check whether the total number of migrated records and the detailed data in the destination table match the source data.
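The record-count part of this check can be scripted. The sketch below compares `COUNT(*)` results through any two Python DB-API connections. The function names are hypothetical, and the demo uses in-memory `sqlite3` databases as stand-ins for the real source and destination, so substitute connections from your own database drivers. A matching count is necessary but not sufficient, so spot-check the detailed data as well.

```python
import re
import sqlite3

# Accept plain or schema-qualified identifiers only, because table names
# cannot be passed as query parameters.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)?$")


def count_rows(connection, table: str) -> int:
    """Return the number of rows in a table using a DB-API connection."""
    if not _IDENTIFIER.match(table):
        raise ValueError(f"unsafe table name: {table!r}")
    cursor = connection.cursor()
    try:
        cursor.execute(f"SELECT COUNT(*) FROM {table}")
        return cursor.fetchone()[0]
    finally:
        cursor.close()


def counts_match(source_conn, target_conn, source_table: str, target_table: str) -> bool:
    """Return True if the source and destination tables have equal row counts."""
    return count_rows(source_conn, source_table) == count_rows(target_conn, target_table)


if __name__ == "__main__":
    # Stand-in databases; "orders" is a hypothetical table name.
    source = sqlite3.connect(":memory:")
    target = sqlite3.connect(":memory:")
    for conn in (source, target):
        conn.execute("CREATE TABLE orders (id INTEGER)")
        conn.executemany("INSERT INTO orders VALUES (?)", [(1,), (2,), (3,)])
    print("Row counts match:", counts_match(source, target, "orders", "orders"))
```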
