Deploying an NGC Container Environment to Create a Deep Learning Development Environment

Scenarios

Huawei Cloud supports deploying deep learning frameworks in Docker containers. NVIDIA Docker can be used to start GPU-accelerated containers. You can download container images from NVIDIA GPU Cloud (NVIDIA NGC) and run them on Huawei Cloud GPU ECSs.

This section uses the TensorFlow deep learning framework as an example. It describes how to deploy an NGC container environment on a GPU ECS so that a ready-to-use deep learning development environment is available without manual installation.

Background

NGC container images are a series of optimized Docker container images provided by NVIDIA. They have the following features:

- Pre-configured environment: These container images integrate CUDA, cuDNN, deep learning frameworks, and other related dependencies. You do not need to configure a complex environment yourself; you can pull the images and run them directly.

- GPU acceleration: These images are optimized to make full use of NVIDIA GPUs, which speeds up deep learning, large-scale data processing, and high-performance computing tasks.

- Optimized design: Container images are optimized for specific tasks (such as deep learning frameworks and AI tasks) to ensure performance and compatibility.

- Multiple deep learning frameworks: NVIDIA provides container images of multiple common deep learning frameworks, including TensorFlow, PyTorch, MXNet, and Caffe. You can select one as required.

Advantages

- No need to manually configure the environment: Container images have integrated CUDA, cuDNN, deep learning frameworks (such as TensorFlow and PyTorch), and other common tool libraries.

- Easy migration: You can run container images in different cloud environments to ensure consistency between the development and production environments.

- Version control: NGC provides container images of multiple versions (for example, different TensorFlow or PyTorch versions). You can select the version that meets your requirements.

Deploying an NGC Container Environment to Create a Deep Learning Development Environment

To deploy an NGC container environment to create a deep learning development environment, do as follows:

Procedure

- Create a GPU ECS.

- Log in to the ECS console and open the Buy ECS page.

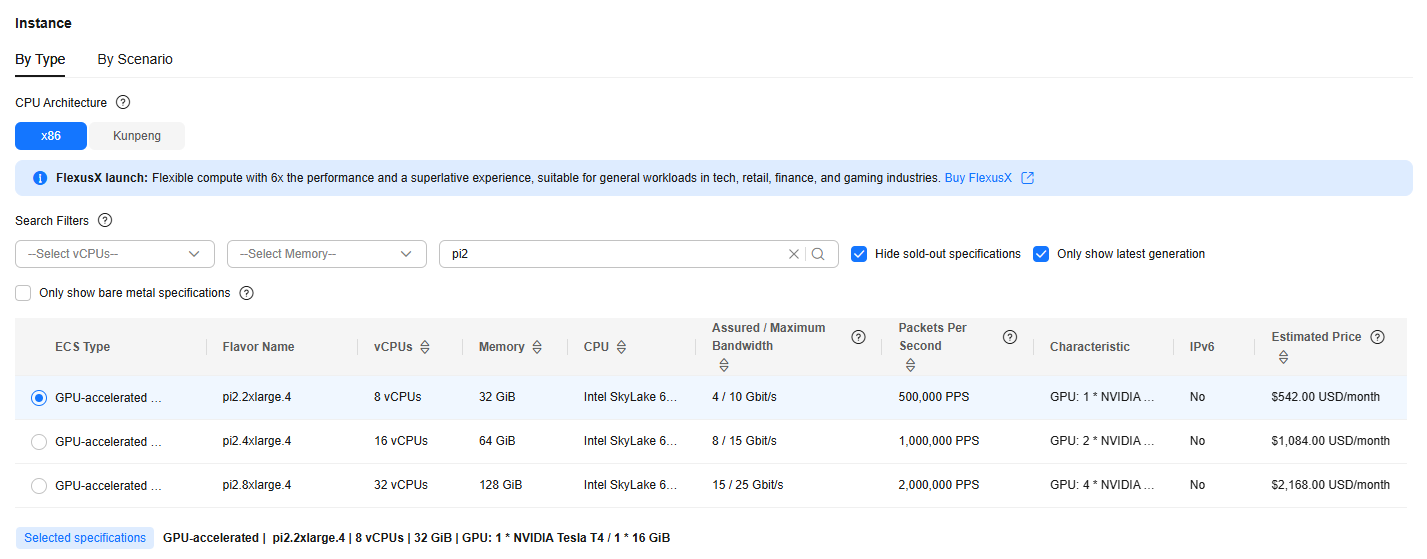

- Select a GPU-accelerated ECS (for example, Pi2, Pi3, or Pi5 ECS that supports NVIDIA Tesla T4, A30, or L2 GPUs).

The following uses pi2.2xlarge.4 as an example.

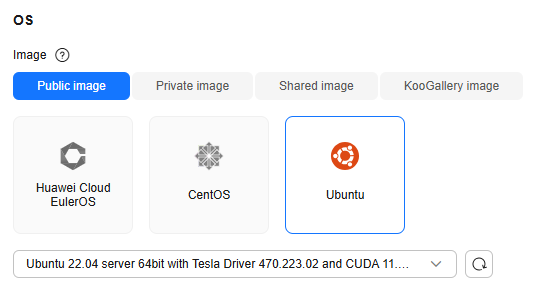

- Select an image that has the CUDA and NVIDIA driver installed.

The following uses Ubuntu 22.04 server 64bit with Tesla Driver 470.223.02 and CUDA 11.4 as an example.

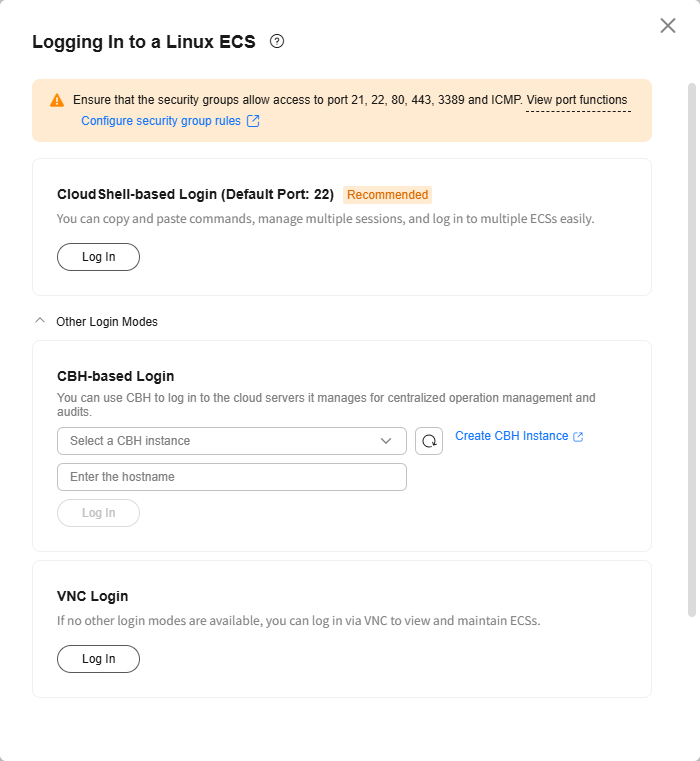

- Remotely log in to the GPU ECS (using VNC or CloudShell).

The following uses VNC login as an example.
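After logging in, you may want to confirm that the driver and CUDA versions of the selected image are what you expect. The following is a minimal Python sketch. It assumes that `nvidia-smi` is on the PATH and reads the banner line it prints (`Driver Version: ... CUDA Version: ...`). The helper name `parse_nvidia_smi_banner` is hypothetical. Note that the CUDA Version shown by `nvidia-smi` is the highest CUDA version the driver supports, not a separately installed toolkit.

```python
import re
import shutil
import subprocess


def parse_nvidia_smi_banner(text):
    """Extract (driver_version, cuda_version) from nvidia-smi output, or return None."""
    driver = re.search(r"Driver Version:\s*([\d.]+)", text)
    cuda = re.search(r"CUDA Version:\s*([\d.]+)", text)
    if driver is None or cuda is None:
        return None
    return driver.group(1), cuda.group(1)


def main():
    # nvidia-smi is installed together with the NVIDIA driver.
    if shutil.which("nvidia-smi") is None:
        print("nvidia-smi not found: the NVIDIA driver may not be installed.")
        return
    result = subprocess.run(["nvidia-smi"], capture_output=True, text=True)
    if result.returncode != 0:
        print("nvidia-smi failed:", result.stderr.strip())
        return
    print("Driver / CUDA versions:", parse_nvidia_smi_banner(result.stdout))


if __name__ == "__main__":
    main()
```

On the example image, the parsed pair should correspond to Tesla Driver 470.223.02 and CUDA 11.4.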

- Install Docker.

NGC containers are based on Docker. You need to install Docker and NVIDIA Docker to use GPU-accelerated containers.

The following describes how to install Docker on Ubuntu. For details about how to install Docker on other Linux distributions, Windows, or Mac, see https://docs.docker.com/get-docker/.

- Update the package index and install Docker.

sudo apt update
sudo apt install -y docker.io

- Start Docker and set it to start automatically at boot.

sudo systemctl start docker
sudo systemctl enable docker

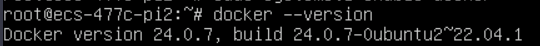

- Verify the Docker installation.

docker --version

If information similar to the following is displayed, Docker is installed.

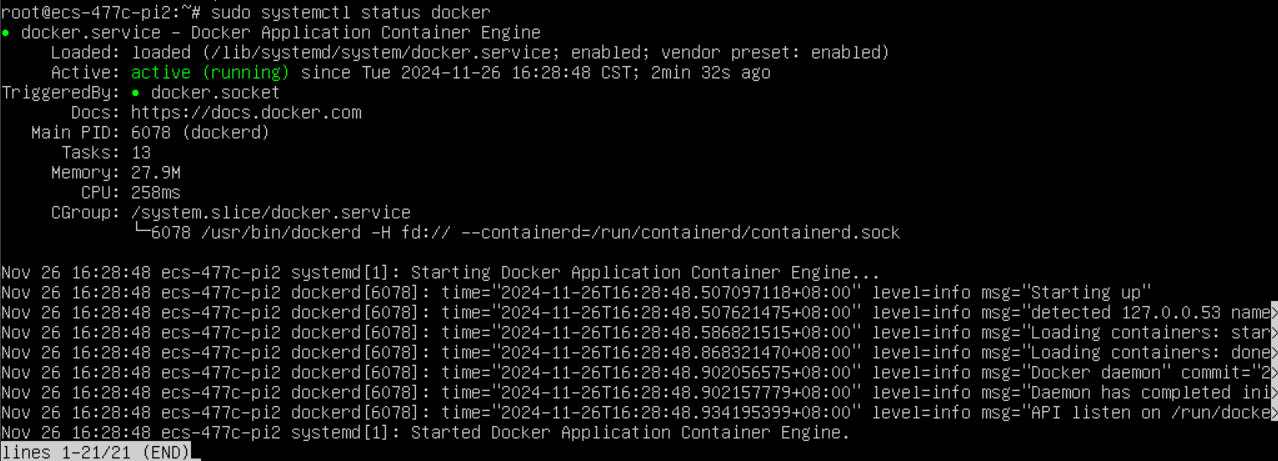

- Verify the Docker status.

sudo systemctl status docker
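The two verification steps above can also be scripted. The following is a minimal Python sketch. It assumes that `docker --version` prints a line of the form `Docker version X, build Y`, and it uses `systemctl is-active`, which prints `active` for a running unit. The function names are hypothetical.

```python
import re
import shutil
import subprocess


def parse_docker_version(text):
    """Return the version from 'Docker version X, build Y' output, or None."""
    match = re.search(r"Docker version ([^,\s]+)", text)
    return match.group(1) if match else None


def is_active(systemctl_output):
    """'systemctl is-active <unit>' prints exactly 'active' for a running unit."""
    return systemctl_output.strip() == "active"


def main():
    if shutil.which("docker") is None:
        print("docker not found on PATH")
        return
    version = subprocess.run(["docker", "--version"], capture_output=True, text=True)
    print("Docker version:", parse_docker_version(version.stdout))
    if shutil.which("systemctl") is not None:
        state = subprocess.run(
            ["systemctl", "is-active", "docker"], capture_output=True, text=True
        )
        print("docker service active:", is_active(state.stdout))


if __name__ == "__main__":
    main()
```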


- Install NVIDIA Docker.

- Add the NVIDIA Docker repository.

# Obtain and add the NVIDIA Docker GPG key.

curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add -

# Add the NVIDIA Docker repository.

distribution=$(. /etc/os-release;echo $ID$VERSION_ID)
curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list
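The `distribution` variable is the `ID` field of `/etc/os-release` concatenated with `VERSION_ID`; on Ubuntu 22.04 this is `ubuntu22.04`. The following minimal Python sketch reproduces that value so you can check which repository list URL the `curl` command will fetch. The parsing is simplified and assumes the usual `KEY=value` format with optional shell-style quoting. The helper names are hypothetical.

```python
import shlex
from pathlib import Path


def parse_os_release(text):
    """Parse KEY=value lines from an os-release file into a dict."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        try:
            parts = shlex.split(value)  # removes shell-style quoting
            fields[key] = parts[0] if parts else ""
        except ValueError:
            fields[key] = value.strip("\"'")  # fallback for malformed quoting
    return fields


def nvidia_docker_list_url(os_release_text):
    fields = parse_os_release(os_release_text)
    # Same value as the shell expression: . /etc/os-release; echo $ID$VERSION_ID
    distribution = fields.get("ID", "") + fields.get("VERSION_ID", "")
    return f"https://nvidia.github.io/nvidia-docker/{distribution}/nvidia-docker.list"


if __name__ == "__main__":
    path = Path("/etc/os-release")
    if path.exists():
        print(nvidia_docker_list_url(path.read_text()))
```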

- Install NVIDIA Docker.

sudo apt update

# Install nvidia-docker2 and related dependencies.

sudo apt install nvidia-docker2

# Restart Docker.

sudo systemctl restart docker

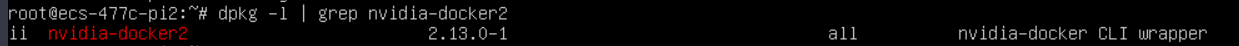
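On typical installations, the nvidia-docker2 package registers a runtime named `nvidia` in `/etc/docker/daemon.json`. This is an assumption about the package's default configuration, so verify it on your system. The following minimal Python sketch checks for that entry after Docker is restarted. The function name is hypothetical.

```python
import json
from pathlib import Path


def has_nvidia_runtime(daemon_json_text):
    """Return True if a Docker daemon.json registers a runtime named 'nvidia'."""
    try:
        config = json.loads(daemon_json_text)
    except json.JSONDecodeError:
        return False
    runtimes = config.get("runtimes", {}) if isinstance(config, dict) else {}
    return isinstance(runtimes, dict) and "nvidia" in runtimes


if __name__ == "__main__":
    path = Path("/etc/docker/daemon.json")
    try:
        text = path.read_text()
    except OSError as exc:
        print(f"cannot read {path}: {exc}")
    else:
        print("nvidia runtime registered:", has_nvidia_runtime(text))
```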

- Verify the NVIDIA Docker configuration.

# Check whether nvidia-docker2 has been installed.

dpkg -l | grep nvidia-docker2

If the output contains a line that starts with ii and lists nvidia-docker2, the installation is successful.
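The `dpkg -l | grep nvidia-docker2` check can also be done programmatically. The following minimal Python sketch parses `dpkg -l` output and reports whether a package has the `ii` (desired: install, status: installed) state. The function name `is_installed` is hypothetical.

```python
import shutil
import subprocess


def is_installed(dpkg_listing, package):
    """Return True if `dpkg -l` output shows `package` with the 'ii' status."""
    for line in dpkg_listing.splitlines():
        fields = line.split()
        # Package names may carry an architecture suffix such as ':amd64'.
        if len(fields) >= 2 and fields[0] == "ii" and fields[1].split(":")[0] == package:
            return True
    return False


if __name__ == "__main__":
    if shutil.which("dpkg") is not None:
        listing = subprocess.run(["dpkg", "-l"], capture_output=True, text=True).stdout
        print("nvidia-docker2 installed:", is_installed(listing, "nvidia-docker2"))
```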


- Pull an NGC container image.

- Use Docker to pull a required container image.

# Pull a TensorFlow container image.

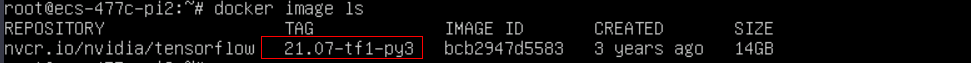

docker pull nvcr.io/nvidia/tensorflow:xx.xx-py3

In the image tag, xx.xx is the NGC container release (year and month), and the rest of the tag identifies the TensorFlow major version and the Python version, for example, 21.07-tf1-py3.
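Before pulling, you can check that a tag has the expected shape and build the full image reference. The following minimal Python sketch handles only the common forms `YY.MM-tfN-pyN` and `YY.MM-pyN`. Tags with extra suffixes are rejected, so treat the pattern as a simplifying assumption. The helper names are hypothetical.

```python
import re

# Simplified pattern: release (YY.MM), optional TensorFlow major version, Python major version.
NGC_TF_TAG = re.compile(r"^(?P<release>\d{2}\.\d{2})-(?:(?P<tf>tf[12])-)?py(?P<py>\d)$")


def parse_ngc_tf_tag(tag):
    """Split a TensorFlow NGC tag into (release, tf_major or None, python_major)."""
    match = NGC_TF_TAG.match(tag)
    if match is None:
        raise ValueError(f"unrecognized tag: {tag!r}")
    return match.group("release"), match.group("tf"), match.group("py")


def image_ref(tag, repo="nvcr.io/nvidia/tensorflow"):
    """Validate the tag and return the full reference passed to docker pull."""
    parse_ngc_tf_tag(tag)
    return f"{repo}:{tag}"


if __name__ == "__main__":
    print(image_ref("21.07-tf1-py3"))
```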

- The CUDA version in the TensorFlow image must be supported by the NVIDIA driver installed on the GPU ECS. Otherwise, the TensorFlow development environment cannot be deployed. For details about the mapping between TensorFlow image versions, CUDA versions, and GPU ECS driver versions, see https://docs.nvidia.com/deeplearning/frameworks/tensorflow-release-notes/index.html.

- It may take a long time to download the TensorFlow container image. Please wait.
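Once you have looked up the minimum driver version for your image in the release notes, a numeric comparison avoids mistakes such as comparing version strings alphabetically. The following is a minimal Python sketch. The minimum value used below is illustrative only; take the real value from the TensorFlow release notes for your image.

```python
def version_tuple(version):
    """Convert a dotted version such as '470.223.02' into (470, 223, 2)."""
    return tuple(int(part) for part in version.split("."))


def driver_meets_minimum(installed, minimum):
    """True if the installed driver version is at least the required minimum."""
    a, b = version_tuple(installed), version_tuple(minimum)
    width = max(len(a), len(b))
    # Pad with zeros so that '470.223' compares equal to '470.223.0'.
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


if __name__ == "__main__":
    installed = "470.223.02"  # driver of the example image in this section
    required = "470.42.01"    # illustrative value; read yours from the release notes
    print("driver OK:", driver_meets_minimum(installed, required))
```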

- Check the details of the downloaded TensorFlow container image.

docker image ls


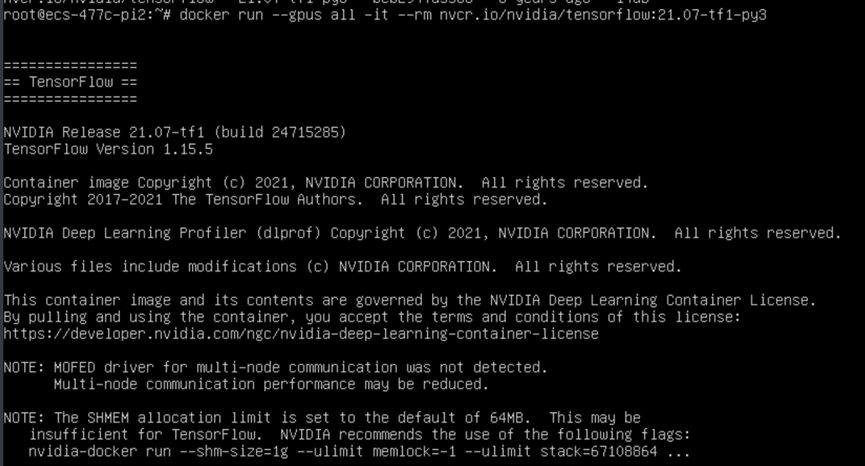
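To check for one specific image instead of reading the whole table, you can ask `docker image ls` to print one `repository:tag` per line using its `--format` option. The following minimal Python sketch does this. The function name `image_present` is hypothetical, and the tag is the example used in this section.

```python
import shutil
import subprocess


def image_present(listing, image_ref):
    """Check whether 'repository:tag' appears in one-per-line image listing output."""
    return image_ref in {line.strip() for line in listing.splitlines()}


if __name__ == "__main__":
    ref = "nvcr.io/nvidia/tensorflow:21.07-tf1-py3"  # example tag from this section
    if shutil.which("docker") is not None:
        result = subprocess.run(
            ["docker", "image", "ls", "--format", "{{.Repository}}:{{.Tag}}"],
            capture_output=True,
            text=True,
        )
        print(ref, "present:", image_present(result.stdout, ref))
```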

- Run the NGC container.

Run the following command to start the TensorFlow container:

docker run --gpus all -it --rm nvcr.io/nvidia/tensorflow:xx.xx-py3

For example:

docker run --gpus all -it --rm nvcr.io/nvidia/tensorflow:21.07-tf1-py3
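If you launch the container from scripts, building the argument list explicitly avoids shell-quoting problems. The following minimal Python sketch assembles the same `docker run` options used above: `--gpus all` exposes all GPUs, `-it` gives an interactive terminal, and `--rm` removes the container on exit. The optional `mounts` parameter, which maps to Docker's `-v host:container` option, is an illustrative addition not covered in this section, and the function name is hypothetical.

```python
import shlex
import subprocess


def docker_run_command(image, gpus="all", interactive=True, remove=True, mounts=None):
    """Build the argv for `docker run`; mounts is an optional {host_path: container_path} dict."""
    cmd = ["docker", "run", "--gpus", gpus]
    if interactive:
        cmd.append("-it")  # interactive session with a terminal
    if remove:
        cmd.append("--rm")  # delete the container when it exits
    for host_path, container_path in (mounts or {}).items():
        cmd += ["-v", f"{host_path}:{container_path}"]
    cmd.append(image)
    return cmd


if __name__ == "__main__":
    cmd = docker_run_command("nvcr.io/nvidia/tensorflow:21.07-tf1-py3")
    print(shlex.join(cmd))
    # To actually start the container from an interactive terminal:
    # subprocess.run(cmd)
```

Printing the command with `shlex.join` reproduces the example command line above, which is a convenient way to review it before running it.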

- Test TensorFlow.

Run the following commands in sequence to test TensorFlow:

python

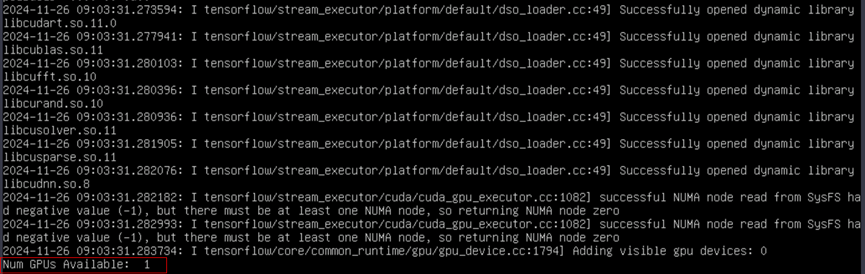

import tensorflow as tf
print("Num GPUs Available: ", len(tf.config.experimental.list_physical_devices('GPU')))

Check the number of GPUs in the output. A value greater than 0 indicates that TensorFlow in the container can use the GPUs of the ECS.
