Scaling Options for DWS with a Coupled Storage-Compute Architecture

Scalability is a critical feature for cloud services. It refers to a service's ability to increase or decrease compute and storage resources to meet changing demand, achieving a balance between performance and cost.

Typically, a distributed architecture offers the following types of scalability:

- Scale-out (horizontal scaling)

With a scale-out, more nodes are added to an existing system to increase storage and compute capacities. For DWS, this means expanding the cluster. To ensure proper resource utilization, make sure the nodes you add use the same specifications as the nodes already in the cluster.

- Scale-in (horizontal scaling)

Scale-in is the opposite of scale-out. With a scale-in, nodes are removed from an existing system to decrease storage and compute capacities and by doing so, increase resource utilization. DWS is deployed by security ring, which means DWS clusters are scaled in or out by security ring as well. We will talk about security rings in more detail in a later section.

- Scale-up (vertical scaling)

With a scale-up, more CPUs, memory, disks, or NICs are added to existing servers to increase the corresponding capacities. In some cases, lower-capacity hardware is replaced with higher-capacity hardware. This is also referred to as a hardware upgrade, which may sometimes entail an OS upgrade.

- Scale-down (vertical scaling)

Scale-down is the opposite of scale-up. With a scale-down, the hardware of an existing system is downgraded to match demand.

DWS offers various auto-scaling capabilities. You can adjust storage and computing resources by modifying hardware configurations (such as disks, memory, CPUs, and NICs) or by scaling distributed nodes. Additionally, you can scale out or scale up the cluster and adjust its topology.

A Closer Look at DWS Cluster Topology

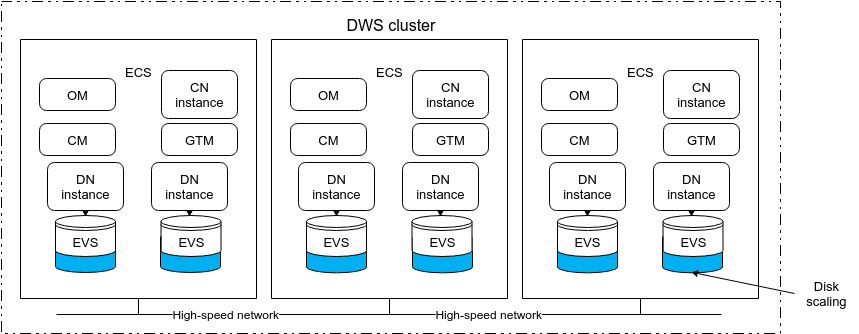

To fully understand the scalability of DWS, one needs to understand DWS's typical cluster topology. The following figure shows a simplified ECS+EVS deployment structure of DWS.

- ECSs provide compute resources, including CPUs and memory. DWS database instances (such as CNs and DNs) are deployed on ECSs.

- EVS provides storage resources. An EVS disk is attached to each DN.

- All ECSs in a DWS cluster are within the same VPC to ensure high-speed connections between them.

- All the database instances deployed on ECSs form a distributed, massively parallel processing database (MPPDB) cluster to provide data analysis and processing capabilities as a whole.

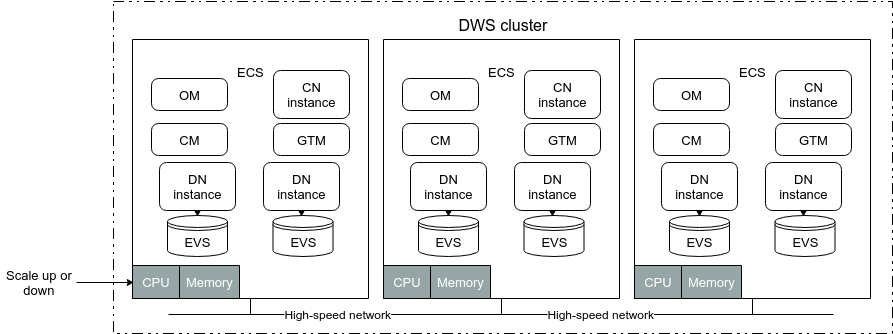

Once you have had a good look at the typical topology of a DWS cluster, you can better understand DWS's scalability features. At present, DWS offers the following scaling options: disk scaling, node flavor change, cluster scale-out, cluster scale-in, cluster resizing, and CN addition or deletion, as illustrated by the figure below:

Disk Scaling

- With disk scaling, the size of all EVS disks attached to all ECSs in a cluster is changed. This option can be used to quickly scale disk capacity.

- Disk capacity can only be scaled up, and not down.

- Disk scaling is a lightweight operation that typically can be completed within 5 to 10 minutes. It does not entail data migration or the restarting of services, so it does not interrupt services. Nonetheless, you are advised to perform this operation during off-peak hours.

- In DWS's coupled storage-compute architecture, disk scaling is supported for clusters that use EVS disks. The cluster version must be 8.1.1.203 or later.

- For details, see Disk Capacity Expansion of an EVS Cluster.

Changing the Node Flavor

- This operation changes the flavor of all ECSs in a cluster. It can be used to quickly change CPU and memory specifications.

- A flavor is a preset resource template of a combination of a specific number of vCPUs and memory. For example, the flavor dwsx.16xlarge includes 64 vCPUs and 512 GB memory.

- Changing the node flavor is a lightweight operation that typically can be completed within 5 to 10 minutes. It does not involve data migration, but services need to be restarted once, which interrupts services for a few minutes. You are advised to perform this operation during off-peak hours.

- In DWS's coupled storage-compute architecture, flavor changes are supported for clusters that use EVS disks. The cluster version must be 8.1.1.300 or later.

- For details, see Changing the Node Flavor.
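To make the idea of a flavor as a preset resource template more concrete, here is a minimal Python sketch. It is not a DWS API: the `Flavor` class, the `cluster_compute` helper, and the `example.small` flavor are hypothetical names invented for illustration. Only `dwsx.16xlarge` (64 vCPUs, 512 GB memory) comes from the text above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flavor:
    """A preset combination of vCPUs and memory for one ECS."""
    name: str
    vcpus: int
    memory_gb: int


def cluster_compute(flavor: Flavor, node_count: int) -> dict:
    """Total vCPUs and memory when every ECS in the cluster uses `flavor`."""
    if node_count < 1:
        raise ValueError("a cluster needs at least one node")
    return {
        "vcpus": flavor.vcpus * node_count,
        "memory_gb": flavor.memory_gb * node_count,
    }


# Flavor taken from the example in the text.
DWSX_16XLARGE = Flavor("dwsx.16xlarge", vcpus=64, memory_gb=512)

# Hypothetical smaller flavor, invented for this sketch only.
EXAMPLE_SMALL = Flavor("example.small", vcpus=16, memory_gb=128)

# A flavor change applies to all ECSs, so the node count stays the same.
before = cluster_compute(EXAMPLE_SMALL, node_count=3)
after = cluster_compute(DWSX_16XLARGE, node_count=3)
print(before, "->", after)
```

Because the flavor change applies to every ECS at once, the cluster's total compute capacity scales with the per-node flavor while the number of nodes, and therefore the data distribution, stays unchanged.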

Scaling Out a Cluster

Cluster scale-out is a typical horizontal scaling scenario for MPPDBs, where homogeneous nodes are added to an existing cluster to increase capacity. DWS 2.0 uses coupled storage and compute, so a cluster scale-out expands both compute and storage capacities.

To balance the load and achieve optimal performance, metadata replication and data redistribution are performed during a cluster scale-out. Therefore, the time needed to complete a cluster scale-out is positively correlated with the number of database objects as well as the data size. To ensure reliability, new nodes are automatically added to security rings. This is why at least three nodes must be added for a scale-out operation.

Versions 8.1.1 and later support online scale-out. During an online scale-out, DWS does not restart and continues to provide services. During data redistribution, you can still perform insert, update, and delete operations on tables, although data updates may be blocked for a short period of time. Redistribution consumes large amounts of CPU and I/O resources and can significantly affect job performance, so you are advised to perform redistribution when services are stopped or during periods of light load. A phase-by-phase approach is recommended for cluster scale-out: perform high-concurrency redistribution during periods of light load, and stop redistribution or perform low-concurrency redistribution during periods of heavy load.
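The load-based recommendation above can be sketched as a small decision function. This is only an illustration: the function name, the load metric (a utilization ratio between 0 and 1), and the 0.3 and 0.7 thresholds are assumptions chosen for the example, not DWS settings.

```python
def pick_redistribution_mode(load: float, light: float = 0.3, heavy: float = 0.7) -> str:
    """Choose a redistribution mode from the current cluster load.

    `load` is a utilization ratio in [0, 1]. The `light` and `heavy`
    thresholds are hypothetical values picked for this sketch.
    """
    if not 0.0 <= load <= 1.0:
        raise ValueError("load must be between 0 and 1")
    if load < light:
        return "high-concurrency"  # light load: redistribute aggressively
    if load < heavy:
        return "low-concurrency"   # moderate load: keep redistribution gentle
    return "paused"                # heavy load: stop redistribution


# Hypothetical load samples over a day, keyed by hour.
hourly_load = {2: 0.10, 9: 0.55, 14: 0.85, 23: 0.20}
plan = {hour: pick_redistribution_mode(load) for hour, load in hourly_load.items()}
print(plan)
```

Splitting the decision into three tiers is one possible reading of the guidance. A simpler scheme could use only two tiers and always pause under heavy load.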

Cluster scale-out can be performed phase by phase or in one-click mode.

A phase-by-phase approach separates a scale-out operation into three phases: adding ECSs, adding nodes, and data redistribution. You can schedule the scale-out tasks in a way that can minimize the risk of service interruption.

A one-click scale-out, on the other hand, is more convenient for users.

| Approach | Characteristics | Impact |
|---|---|---|
| Phase-by-phase scale-out | A scale-out operation is divided into three phases: adding ECSs, adding nodes, and data redistribution. You can schedule each phase for the most appropriate time and perform the phases separately. | The risk of service interruption can be minimized. |
| One-click scale-out | During a one-click scale-out, adding ECSs, adding nodes, and redistributing data are all performed automatically. | It is more convenient for users. |

DWS Cluster Security Ring

A security ring is the minimum set of nodes required for the horizontal deployment of multi-replica DNs. Cluster scale-out and scale-in are both performed by security ring. The main idea behind security rings is fault isolation. Any fault that occurs within a security ring stays within that ring.

DWS uses a primary-standby-secondary architecture, so a security ring contains at least three nodes. When a fault occurs within a ring, it has no impact on nodes outside that ring. With the minimum ring of three nodes, the scope of impact is the smallest (3 nodes), and the impact on each node in the faulty ring is 1/(N-1), where N is the number of nodes in the ring, that is, 1/2. At the other extreme, the entire cluster is a single security ring. If a fault occurs within this ring, the scope of impact is the largest (the entire cluster), but the impact on each node in the ring is the smallest, again 1/(N-1).

A common practice is to form an N+1 ring, where each node evenly distributes its N replicas to the remaining N nodes in the same ring. When a fault occurs in the ring, the scope of impact in the entire cluster is N+1 nodes, and the impact on each node in the ring is 1/N.
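The trade-off between the scope of a fault and its per-node impact can be checked with a few lines of Python. This is a minimal sketch: the function name is invented for the example, and it reads "impact on each node" as the share of the failed node's work that each other node in the ring takes over, which is an interpretation of the text rather than a documented DWS metric.

```python
from fractions import Fraction


def fault_impact(ring_size: int) -> tuple:
    """Return (nodes in scope, impact per node) for one node failure in a ring.

    For a ring of `ring_size` nodes, the fault stays inside the ring and
    the impact on each node is 1 / (ring_size - 1).
    """
    if ring_size < 2:
        raise ValueError("a ring needs at least two nodes")
    return ring_size, Fraction(1, ring_size - 1)


# Minimum ring of three nodes: small scope, large per-node impact.
print(fault_impact(3))   # scope 3, impact 1/2

# An N+1 ring with N = 4 has five nodes: the impact per node is 1/N.
print(fault_impact(5))   # scope 5, impact 1/4
```

Larger rings spread the load of a failed node more thinly, at the cost of a wider blast radius.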

Scaling In a Cluster

- Cluster scale-in is also a typical horizontal scaling scenario for MPPDBs, where some of the nodes of an existing cluster are removed to reduce capacity. A cluster scale-in reduces both compute and storage capacities.

- Each DWS cluster physically consists of multiple ECSs. To improve reliability, a set number of ECSs (typically three) form a logical security ring, so each DWS cluster consists of a number of security rings. A cluster scale-in is performed by security ring, and the security rings at the end of the cluster are removed first.

- A cluster scale-in involves data migration. Data on the removed nodes needs to be redistributed to the remaining nodes. This means the time needed to complete a cluster scale-in is positively correlated with the number of database objects as well as the data size.

- DWS's coupled storage-compute architecture supports cluster scale-in. Versions 8.1.1.300 and later support online scale-in. During an online scale-in, DWS does not restart and continues to provide services. During data redistribution, you can still perform insert, update, and delete operations on tables, although data updates may be blocked for a short period of time. Redistribution consumes large amounts of CPU and I/O resources and can significantly affect job performance, so you are advised to perform redistribution when services are stopped or during periods of light load.
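The ring-by-ring removal described in this section can be sketched as follows. The helper names, the node names, the assumption that rings are formed from consecutive nodes in cluster order, and the rule that at least one ring must remain are all illustrative choices for this example, not documented DWS behavior.

```python
def group_into_rings(nodes: list, ring_size: int = 3) -> list:
    """Split an ordered node list into consecutive security rings."""
    if len(nodes) % ring_size != 0:
        raise ValueError("node count must be a multiple of the ring size")
    return [nodes[i:i + ring_size] for i in range(0, len(nodes), ring_size)]


def scale_in(nodes: list, rings_to_remove: int, ring_size: int = 3) -> tuple:
    """Remove whole rings from the end of the cluster.

    Returns (kept_rings, removed_rings). Data on the removed rings would
    then have to be redistributed to the kept rings.
    """
    rings = group_into_rings(nodes, ring_size)
    if not 0 < rings_to_remove < len(rings):
        raise ValueError("must remove at least one ring and keep at least one")
    return rings[:-rings_to_remove], rings[-rings_to_remove:]


# Hypothetical nine-node cluster, that is, three rings of three nodes.
cluster = [f"node{i}" for i in range(1, 10)]
kept, removed = scale_in(cluster, rings_to_remove=1)
print("kept:", kept)
print("removed:", removed)
```

Working in whole rings keeps every remaining ring complete, so the replicas within each kept ring stay intact after the scale-in.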

Adding or Deleting CNs

- Adding or deleting coordinator nodes (CNs) is another way of cluster scaling in DWS.

- CNs are an important component of DWS. They provide interfaces to external applications, optimize global execution plans, distribute execution plans to data nodes (DNs), and merge the results from each node into a single result set.

- CN capacities determine the entire cluster's concurrency handling capability. By adding more CNs, you increase the cluster's concurrency handling capability.

- CNs use a multi-active architecture. To ensure data consistency, if data on some CNs is damaged, DDL services will be blocked. To quickly restore DDL services, you can remove the faulty CNs.

- DWS supports adding or deleting CNs in 8.1.1 and later versions.

- When a CN is added, metadata needs to be synchronized, so the time it takes to add a CN depends on the metadata size. In 8.1.3 and later, CNs can be added and deleted online. During CN addition, DWS does not restart and continues to provide services. DDL services are blocked for a short period of time (with no error reported). No other services are affected.

Resizing a Cluster

- Cluster resizing allows you to perform horizontal and vertical scaling at the same time, including cluster scale-out and scale-in, as well as scale-up and scale-down. The cluster topology can also be adjusted.

- Cluster resizing relies on multiple node groups and data redistribution. During cluster resizing, a new cluster is created based on the new resource requirements and cluster planning. Then, data is redistributed from the old cluster to the new cluster. Once data migration is complete, services are migrated to the new cluster, and after that, the old cluster is released.

- Cluster resizing involves data migration. Data on the nodes in the old cluster needs to be redistributed to the nodes in the new cluster, with the data still available in the old cluster. The time it takes to resize a cluster is positively correlated with the number of database objects as well as the data size.

- DWS supports cluster resizing, but agents must be upgraded to 8.2.0.2. Currently, during cluster resizing, the old cluster can only support read-only services. Online service capabilities can be expected later.

- For details, see Changing All Specifications.

Comparing Different Scaling Options

The table below compares different scaling options for DWS.

| Option | Scaled Object | Scope | Impact | Version Requirement |
|---|---|---|---|---|
| Disk scaling | Disk capacity | EVS disks attached to all ECSs in a cluster | Can be completed within 5 to 10 minutes. There is no need to restart services, so it has no impact on services. Should be performed during off-peak hours. | Cluster version: 8.1.1.203 or later |
| Changing the node flavor | Compute capacity | The flavor (CPU cores and memory size) of all ECSs in a cluster | Can be completed within 5 to 10 minutes. Services need to be restarted once, which interrupts services for a few minutes. Should be performed during off-peak hours. | Cluster version: 8.1.1.300 or later |
| Cluster scale-out | Disk and compute capacities | Adding homogeneous ECSs in a distributed architecture | Online scale-out is supported. During an online scale-out, DWS does not restart and can continue to provide services. The duration is positively correlated with the number of database objects as well as the data size. | Cluster version: all versions. Online scale-out is supported in 8.1.1 and later. |
| Cluster scale-in | Disk and compute capacities | Removing some of the ECSs in a distributed architecture | Online scale-in is supported. During an online scale-in, DWS does not restart and can continue to provide services. The duration is positively correlated with the number of database objects as well as the data size. | Cluster version: 8.1.1.300 or later |
| Cluster resizing | Disk and compute capacities, and cluster topology | Using a new ECS flavor (new hardware specifications) and new cluster topology to create a new cluster, and redistributing data between the old and new clusters | The duration is positively correlated with the number of database objects as well as the data size. Read-only services can be provided during cluster resizing. | Agent version: 8.2.0.2 or later |
| Adding or deleting CNs | CN instances | Adding CNs to enhance concurrency, or removing faulty CNs to quickly restore DDL services | Online addition and deletion of CNs is supported. During CN addition, DWS does not restart and can continue to provide services. | Cluster version: 8.1.1 or later (Online addition and deletion of CNs is supported in 8.1.3 and later.) |
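The version requirements above can be checked programmatically by comparing dotted version strings numerically instead of as text. The sketch below is illustrative only: the option keys and function names are made up for the example, and cluster resizing is left out because its requirement applies to the agent version rather than the cluster version.

```python
# Minimum cluster versions, taken from the comparison above.
# The dictionary keys are hypothetical labels chosen for this sketch.
MIN_VERSION = {
    "disk scaling": "8.1.1.203",
    "node flavor change": "8.1.1.300",
    "online scale-out": "8.1.1",
    "online scale-in": "8.1.1.300",
    "CN addition or deletion": "8.1.1",
    "online CN addition or deletion": "8.1.3",
}


def parse_version(version: str) -> tuple:
    """Turn '8.1.1.203' into (8, 1, 1, 203) for numeric comparison."""
    return tuple(int(part) for part in version.split("."))


def supports(cluster_version: str, option: str) -> bool:
    """Return True if `cluster_version` meets the minimum for `option`."""
    have = parse_version(cluster_version)
    need = parse_version(MIN_VERSION[option])
    # Pad the shorter tuple with zeros so that '8.1.1' equals '8.1.1.0'.
    width = max(len(have), len(need))
    have += (0,) * (width - len(have))
    need += (0,) * (width - len(need))
    return have >= need


print(supports("8.1.1.203", "disk scaling"))
print(supports("8.1.1.100", "node flavor change"))
```

Comparing integer tuples avoids the pitfalls of plain string comparison, where "8.1.10" would sort before "8.1.3".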

Application Scenarios for Different Scaling Options

Table 3 describes when to use each scaling option.

| Category | Problem to Solve | Recommended Scaling Option | Impact on Services | Estimated Duration |
|---|---|---|---|---|
| Storage | Insufficient storage space. CPU, memory, and disk I/O capacities are sufficient. | Increase disk capacity. | Online services can be maintained. | No need for data migration. Can be completed within 5 to 10 minutes. |
| Storage | Excessive storage space, which needs to be reduced to cut costs. CPU, memory, and disk I/O capacities are sufficient. | Create a cluster with smaller disk capacity (but otherwise unchanged), and migrate data to the new cluster by performing a DR switchover. | Data becomes read-only during the DR switchover, which typically takes less than 30 minutes. | The duration is positively correlated with the data size. |
| Compute | Insufficient CPU or memory capacity | Use a larger ECS flavor. | The cluster needs to restart once. | No need for data migration. Can be completed within 5 to 10 minutes. |
| Compute | Insufficient disk I/O | Create a cluster with smaller disk capacity (but otherwise unchanged), and migrate data to the new cluster by performing a DR switchover. | Data becomes read-only during the DR switchover, which typically takes less than 30 minutes. | The duration is positively correlated with the data size. |
| Distributed compute and storage | Insufficient distributed capabilities due to insufficient nodes | Scale out the cluster. | Online services can be maintained (partially impacted). | Data migration is needed. The duration is positively correlated with the sizes of metadata as well as service data. |
| Distributed compute and storage | Too many nodes, leading to a high cost | Scale in the cluster. | Online services can be maintained (partially impacted). | Data migration is needed. The duration is positively correlated with the size of service data. |
| Cluster topology | Change both the cluster topology and node flavor (the number of DNs changes). | Resize the cluster. | Read-only services | Data migration is needed. The duration is positively correlated with the sizes of metadata as well as service data. |
| Cluster topology | Change both the cluster topology and node flavor (the number of DNs remains the same). | Perform a cluster DR switchover and data migration. | Online services can be maintained (partially impacted). | Data migration is needed. The duration is positively correlated with the size of service data. |
| | Insufficient concurrency support | Add CNs. | Online services can be maintained (partially impacted). | Data migration is needed. The duration is positively correlated with the size of metadata. |
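Part of the guidance above can be expressed as a simple lookup from the problem to the recommended option. The sketch below covers only a subset of the rows, and the problem keys and the `recommend` function are hypothetical names invented for the example. The recommended options and service impacts are copied from the table.

```python
# (recommended option, impact on services) for a subset of the rows above.
# The dictionary keys are illustrative labels, not DWS terminology.
RECOMMENDATIONS = {
    "insufficient storage": (
        "Increase disk capacity.",
        "Online services can be maintained.",
    ),
    "insufficient CPU or memory": (
        "Use a larger ECS flavor.",
        "The cluster needs to restart once.",
    ),
    "insufficient nodes": (
        "Scale out the cluster.",
        "Online services can be maintained (partially impacted).",
    ),
    "too many nodes": (
        "Scale in the cluster.",
        "Online services can be maintained (partially impacted).",
    ),
    "insufficient concurrency": (
        "Add CNs.",
        "Online services can be maintained (partially impacted).",
    ),
}


def recommend(problem: str) -> str:
    """Return a one-line recommendation for a known problem key."""
    try:
        option, impact = RECOMMENDATIONS[problem]
    except KeyError:
        raise ValueError(f"no recommendation recorded for: {problem!r}") from None
    return f"{option} Impact: {impact}"


print(recommend("insufficient CPU or memory"))
```

A lookup like this could serve as the first step of a runbook, with the estimated duration and version requirements checked separately before the operation is scheduled.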
