kube-prometheus-stack

Introduction

The Cloud Native Cluster Monitoring add-on (formerly kube-prometheus-stack) uses Prometheus-operator and Prometheus and provides easy-to-use, end-to-end Kubernetes cluster monitoring.

This plug-in connects monitoring data to the monitoring center so that you can view the data and configure alarms on the console.

Open-source community: https://github.com/prometheus/prometheus

Scenario

- If you need to report resource pool metrics to a third-party platform, you can install this plug-in. If this plug-in is not installed, metrics will be reported to Huawei Cloud AOM.

If this scenario does not apply, you do not need to install the plug-in.

Constraints

By default, the kube-state-metrics component of the plug-in does not collect all labels or annotations of Kubernetes resources.

Permissions

The node-exporter component of this plug-in needs to read Docker info data from the /var/run/docker.sock socket file on the host to monitor Docker disk space.

The cap_dac_override privilege is required for running node-exporter to read Docker info data.

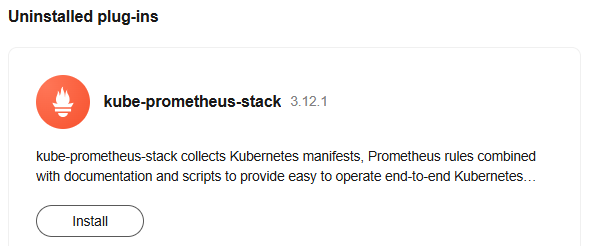

Installing a Plug-in

- Log in to the ModelArts console. In the navigation pane on the left, choose Standard Cluster under Resource Management.

- Click the resource pool name to access its details page.

- On the resource pool details page, click the Plug-ins tab.

- Locate the plug-in to be installed in the list and click Install.

Figure 1 Installing a plug-in

Table 1 Parameters

Parameter | Sub-Parameter | Description

Data Storage Configuration (Select at Least One Item)

Report Monitoring Data to a Third-Party Platform

To report Prometheus data to a third-party monitoring system, enter the address and token of that system and specify whether to skip certificate authentication.

- Data Reporting Address: Enter the complete RemoteWrite address of a third-party platform or Huawei Cloud Prometheus instance.

- Authentication Mode: Select the authentication mode used to report monitoring data, then enter the credentials that the selected mode requires, such as the username and password of the third-party platform.
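As a rough illustration of what these fields correspond to, the sketch below builds one entry shaped like the `remote_write` section of an open-source Prometheus configuration. It assumes the plug-in maps the console fields onto that structure, which this page does not confirm. The function name `build_remote_write` and the example URL are hypothetical.

```python
import json
from urllib.parse import urlparse


def build_remote_write(url, username=None, password=None, token=None,
                       skip_tls_verify=False):
    """Return a dict shaped like one entry of Prometheus' `remote_write` list.

    Hypothetical helper: the plug-in's internal format is not documented here.
    """
    parsed = urlparse(url)
    # A "complete" address needs at least a scheme and a host.
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"not a complete RemoteWrite address: {url!r}")
    if (username is None) != (password is None):
        raise ValueError("basic auth needs both a username and a password")
    if token is not None and username is not None:
        raise ValueError("choose either basic auth or a token, not both")

    entry = {"url": url}
    if username is not None:
        entry["basic_auth"] = {"username": username, "password": password}
    elif token is not None:
        entry["authorization"] = {"type": "Bearer", "credentials": token}
    if skip_tls_verify:
        # Corresponds to skipping certificate checks; avoid in production.
        entry["tls_config"] = {"insecure_skip_verify": True}
    return entry


if __name__ == "__main__":
    # Placeholder endpoint and credentials, for illustration only.
    example = build_remote_write(
        "https://prometheus.example.com/api/v1/write",
        username="monitor", password="secret",
    )
    print(json.dumps({"remote_write": [example]}, indent=2))
```

Validating the address before submitting it catches the most common mistake, a host name entered without the scheme or write path.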

Local data storage

Local data storage: Monitoring data is stored within the cluster for local metric-based queries. This will use significant CPU, memory, and disk resources. The amount is directly proportional to the size of the cluster and the number of custom metrics being used.

No local data storage: Monitoring data is stored outside of the cluster, in either AOM or a third-party monitoring system, to save cluster resources.

If this function is enabled, monitoring data is stored on attached EVS or DSS disks so that functions relying on local monitoring data (for example, HPA using custom metrics) can run properly. To use the HPA function, enable this option. Created storage volumes are billed and count towards the storage quota. If you uninstall the plug-in, data on the EVS disk is automatically deleted.

Once this function is enabled, you need to set the EVS disk type and capacity.


Specifications

Plug-in Version

Specify the version of the plug-in to be deployed.

Plug-in Specifications

Preset:

- Demo: applicable to clusters with fewer than 100 Pods

- Small: applicable to clusters with fewer than 2,000 Pods

- Medium: applicable to clusters with fewer than 5,000 Pods

- Large: applicable to clusters with more than 5,000 Pods

Custom: Set the CPU and memory quotas as required. Ensure the cluster has sufficient node resources. Otherwise, plug-in instances will fail to be scheduled.
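The preset thresholds above can be expressed as a small lookup. This is a minimal sketch, not part of the plug-in: the function name `preset_for_pod_count` is hypothetical, and because the list does not say which preset covers exactly 5,000 Pods, the sketch assumes Large for that case.

```python
def preset_for_pod_count(pods):
    """Suggest a preset specification from the number of Pods in the cluster."""
    if pods < 0:
        raise ValueError("pod count cannot be negative")
    if pods < 100:
        return "Demo"
    if pods < 2000:
        return "Small"
    if pods < 5000:
        return "Medium"
    # Assumption: exactly 5,000 Pods is treated as Large; the source only
    # says "more than 5,000".
    return "Large"


if __name__ == "__main__":
    for count in (50, 1500, 4000, 12000):
        print(count, preset_for_pod_count(count))
```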

High Availability

If Local data storage is selected, prometheusOperator, prometheus-server, alertmanager, thanosSidecar, thanosQuery, adapter, and kubeStateMetrics are deployed in multi-instance mode.

If Local data storage is not selected, prometheusOperator, prometheus-lightweight, and kubeStateMetrics are deployed in multi-instance mode.

The supported deployment modes vary depending on the component. For details, see Components.

Configuration List

Detailed configuration of the selected specifications.

Parameter Settings

Collection Period (s)

If the cluster scale is large (≥ 200 nodes or ≥ 10,000 Pods), set this parameter to 60 or 30.

Data Retention Period

Set the data retention period.

Advanced Settings

- Node-Exporter Listening Port: This port uses the host network and allows Prometheus to collect metrics from the node where the port is located. If the port conflicts with another application port, you can modify it.

- Scheduling Policy: Components can run on nodes whose taints they are configured to tolerate.

After Prometheus is upgraded, you can configure node affinity and taint tolerance for the Prometheus plug-in group on this page. Currently, only taint key-level tolerance is supported, which allows components to run on nodes with a matching taint key.

You can add multiple scheduling policies. Policies with specific component names take priority over the global policy. By default, a scheduling policy is disabled if its node affinity key or toleration taint key is not configured.

- Read "Usage Notes" and select I have read and understand the preceding information.

- Click OK.
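The key-level taint tolerance and policy priority described under Scheduling Policy can be sketched as follows. In Kubernetes, a toleration with `operator: Exists` and a key matches every taint with that key regardless of its value. Whether the plug-in generates exactly this form is an assumption. The `GLOBAL` marker, the policy dictionary layout, and all function names are hypothetical, and the check is simplified: it treats every taint as blocking and ignores taint effects.

```python
GLOBAL = "*"  # hypothetical marker for the global scheduling policy


def to_k8s_toleration(taint_key):
    """Key-level toleration: matches any taint with this key, whatever its value."""
    return {"key": taint_key, "operator": "Exists"}


def tolerates_node(tolerations, node_taints):
    """Simplified check: every taint on the node must have a tolerated key."""
    tolerated_keys = {
        t["key"] for t in tolerations if t.get("operator") == "Exists"
    }
    return all(taint["key"] in tolerated_keys for taint in node_taints)


def effective_policy(component, policies):
    """A policy naming the component wins over the global policy."""
    return policies.get(component, policies.get(GLOBAL))


if __name__ == "__main__":
    policies = {
        GLOBAL: {"toleration_keys": ["dedicated"]},
        "prometheus": {"toleration_keys": ["monitoring"]},
    }
    policy = effective_policy("prometheus", policies)
    tolerations = [to_k8s_toleration(k) for k in policy["toleration_keys"]]
    node_taints = [{"key": "monitoring", "value": "true", "effect": "NoSchedule"}]
    print(tolerates_node(tolerations, node_taints))
```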

Components

| Component | Description | Deployment Mode | Resource Type |
|---|---|---|---|
| prometheusOperator (workload name: prometheus-operator) | Deploys and manages the Prometheus Server based on custom resource definitions (CRDs), and monitors and processes the events related to these CRDs. It is the control center of the entire system. | All | Deployment |
| prometheus (workload name: prometheus-server) | A Prometheus Server cluster deployed by the operator based on the Prometheus CRDs that can be regarded as StatefulSets. | All | StatefulSet |
| alertmanager (workload name: alertmanager-alertmanager) | Alarm center of the plug-in. It receives alarms sent by Prometheus and manages alarm information by deduplicating, grouping, and distributing. | Local data storage enabled | StatefulSet |
| thanosSidecar | Available only in HA mode. Runs with prometheus-server in the same pod to implement persistent storage of Prometheus metric data. | Local data storage enabled | Container |
| thanosQuery | Entry for PromQL query when Prometheus is in HA scenarios. It can delete duplicate metrics from Store or Prometheus. | Local data storage enabled | Deployment |
| adapter (workload name: custom-metrics-apiserver) | Aggregates custom metrics to the native Kubernetes API server. | Local data storage enabled | Deployment |
| kubeStateMetrics (workload name: kube-state-metrics) | Converts the Prometheus metric data into a format that can be identified by Kubernetes APIs. By default, the kube-state-metrics component does not collect all labels or annotations of Kubernetes resources. To collect such information, see Collecting All Labels and Annotations of a Pod. If the components run in multiple pods, only one pod provides metrics. | All | Deployment |
| nodeExporter (workload name: node-exporter) | Deployed on each node to collect node monitoring data. | All | DaemonSet |
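The Deployment Mode column can be read as a lookup from the storage setting to the set of components you should expect in the cluster. The sketch below only encodes the table rows; the names `COMPONENTS` and `deployed_components` are hypothetical. It also assumes that "All" means the component is deployed whether or not local data storage is enabled.

```python
LOCAL_ONLY = "Local data storage enabled"

# component name -> (deployment mode, resource type), copied from the table
COMPONENTS = {
    "prometheusOperator": ("All", "Deployment"),
    "prometheus": ("All", "StatefulSet"),
    "alertmanager": (LOCAL_ONLY, "StatefulSet"),
    "thanosSidecar": (LOCAL_ONLY, "Container"),
    "thanosQuery": (LOCAL_ONLY, "Deployment"),
    "adapter": (LOCAL_ONLY, "Deployment"),
    "kubeStateMetrics": ("All", "Deployment"),
    "nodeExporter": ("All", "DaemonSet"),
}


def deployed_components(local_storage):
    """List the components expected for the given local data storage setting."""
    return [
        name
        for name, (mode, _resource_type) in COMPONENTS.items()
        if mode == "All" or local_storage
    ]


if __name__ == "__main__":
    print(deployed_components(local_storage=False))
    print(deployed_components(local_storage=True))
```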
