Creating a Third-Party Model Evaluation Dataset

A high-performing model must generalize well: it should perform well not only on the data it was trained on but also on unseen data. Model evaluation is how you verify this. When collecting evaluation datasets, make sure the data is independent and randomly sampled so that it is representative of real-world data. This avoids biasing the evaluation result and reflects the model's performance across different scenarios more accurately. Evaluating a model on such a dataset helps developers understand the model's strengths and weaknesses and identify directions for optimization.
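As an illustration of the independence and randomness requirement, the following minimal sketch holds out a random subset of records for evaluation and checks that it does not overlap with the remaining data. The function name `split_holdout`, the 20% fraction, and the fixed seed are assumptions made for this example and are not part of ModelArts Studio.

```python
import random

def split_holdout(records, eval_fraction=0.2, seed=42):
    """Shuffle a de-duplicated copy of the records and hold out a fraction for evaluation."""
    # Drop exact duplicates so the same sample cannot land on both sides of the split.
    unique = list(dict.fromkeys(records))
    rng = random.Random(seed)  # fixed seed (assumption) keeps the split reproducible
    rng.shuffle(unique)
    n_eval = max(1, int(len(unique) * eval_fraction))
    return unique[n_eval:], unique[:n_eval]

# Hypothetical records; the last one duplicates the first.
records = [f"question {i}" for i in range(10)] + ["question 0"]
train, evaluation = split_holdout(records)

# The two sets must not share any sample.
assert not set(train) & set(evaluation)
```

De-duplicating before the split is a deliberate choice here: a duplicate that appears in both sets would leak training data into the evaluation and inflate the result.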

Third-party models support manual evaluation and automatic evaluation.

- Manual evaluation: The answers generated by the model are scored manually against a manually created evaluation dataset and the evaluation items defined for it. After the evaluation is complete, an evaluation report is generated from the scoring results.

- Automatic evaluation: Two types of automatic evaluation are supported: rule-based and LLM-based.

  - Rule-based: The answers generated by the model are automatically evaluated based on rules (similarity/accuracy). You can use the professional datasets preset in the evaluation template or customize evaluation datasets. For details about the supported preset datasets, see Table 1.

  - LLM-based: An LLM automatically scores the output of the model under evaluation. This type is suitable for open-ended or complex Q&A scenarios. Two modes are available: grading mode and comparison mode.
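To make the idea of rule-based scoring concrete, the sketch below computes an exact-match accuracy and an average string similarity between model answers and reference answers. It is only an illustration under stated assumptions: the platform's actual similarity and accuracy rules are not specified here, and the normalization (trimming and lowercasing) and the use of `difflib.SequenceMatcher` are choices made for this example.

```python
from difflib import SequenceMatcher

def exact_match_accuracy(predictions, references):
    """Fraction of predictions equal to the reference after trimming and lowercasing."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    hits = sum(
        p.strip().lower() == r.strip().lower()
        for p, r in zip(predictions, references)
    )
    return hits / len(references)

def mean_similarity(predictions, references):
    """Average character-level similarity ratio, each in the range [0, 1]."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    ratios = [SequenceMatcher(None, p, r).ratio() for p, r in zip(predictions, references)]
    return sum(ratios) / len(ratios)

# Hypothetical model answers and reference answers.
predictions = ["Paris", "4", "blue whale"]
references = ["paris", "5", "Blue whale"]

accuracy = exact_match_accuracy(predictions, references)
similarity = mean_similarity(predictions, references)
```

Accuracy suits questions with a single correct answer, while a similarity score tolerates small wording differences in longer answers.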

Table 1 Preset evaluation sets

| Type | Input Dataset | Description |
| --- | --- | --- |
| General knowledge and skills | Common sense | Evaluates the model's mastery of basic knowledge and information about daily life, including history, geography, and culture. |
| General knowledge and skills | Mathematical capability | Evaluates the model's capability of solving mathematical problems, including arithmetic operations, algebraic equation solving, and geometric shape analysis. |
| General knowledge and skills | Logical reasoning | Evaluates the model's capability of making reasonable inferences and analyses based on known information, involving logical thinking processes such as deduction and induction. |
| General knowledge and skills | Chinese capability | Evaluates the advanced knowledge and inference capabilities of large models in the context of Chinese language and culture. |
| Domain knowledge | Finance | Evaluates the capabilities of large models in the financial field, including quick understanding and interpretation of complex financial concepts, risk prediction and data analysis, investment suggestions, and financial decision-making support. |

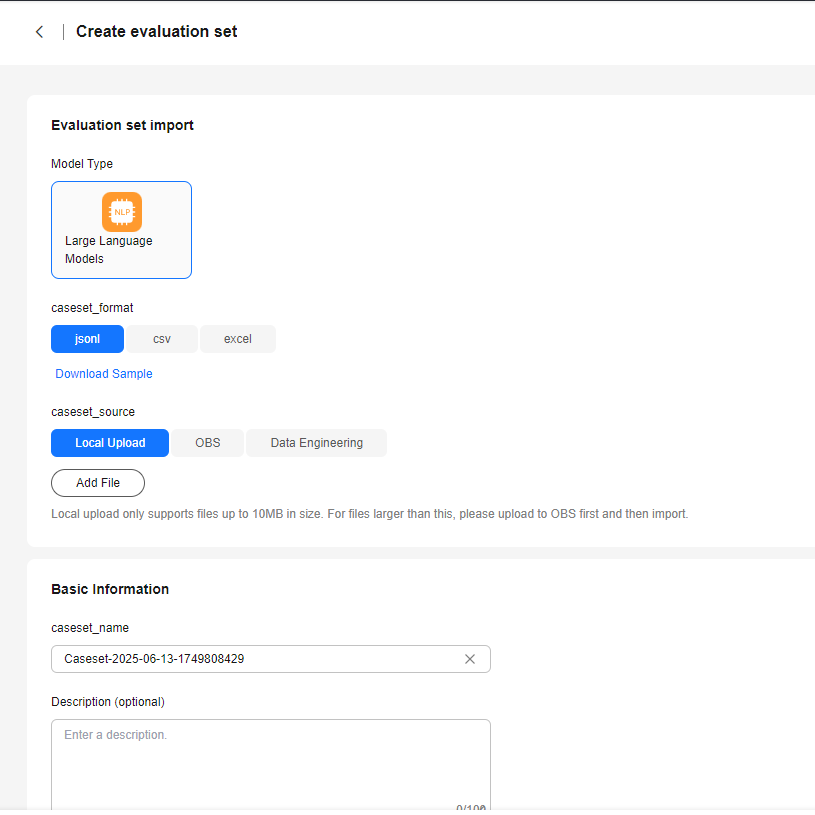

Importing Evaluation Datasets from a Local PC, OBS, or Data Engineering

- Log in to ModelArts Studio and access the required workspace.

- In the navigation pane, choose Evaluation Center > Evaluation Set Management. Click Create evaluation set in the upper right corner.

- On the Create evaluation set page, select the required model type, file format, and import source, and click Add File to upload the data file. For details, see Table 2.
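Before uploading a data file, it can help to check locally that the file is well formed. The sketch below writes a small JSONL file and validates that every line is a JSON object containing the expected keys. The field names `question` and `answer` are placeholders chosen for this example, not the format required by the platform; use the fields listed in the format tables of this guide.

```python
import json
import os
import tempfile

# Placeholder field names (assumption); replace with the fields your format requires.
REQUIRED_KEYS = ("question", "answer")

def write_jsonl(rows, path):
    """Write one JSON object per line, keeping non-ASCII characters readable."""
    with open(path, "w", encoding="utf-8") as f:
        for row in rows:
            f.write(json.dumps(row, ensure_ascii=False) + "\n")

def validate_jsonl(path, required_keys):
    """Return a list of (line_number, problem) tuples; an empty list means no problems found."""
    problems = []
    with open(path, encoding="utf-8") as f:
        for number, line in enumerate(f, start=1):
            if not line.strip():
                problems.append((number, "blank line"))
                continue
            try:
                obj = json.loads(line)
            except json.JSONDecodeError as err:
                problems.append((number, f"invalid JSON: {err.msg}"))
                continue
            if not isinstance(obj, dict):
                problems.append((number, "not a JSON object"))
                continue
            missing = [key for key in required_keys if key not in obj]
            if missing:
                problems.append((number, f"missing keys: {missing}"))
    return problems

rows = [
    {"question": "What is 2 + 3?", "answer": "5"},
    {"question": "Name the largest planet.", "answer": "Jupiter"},
]
path = os.path.join(tempfile.mkdtemp(), "eval_set.jsonl")
write_jsonl(rows, path)
problems = validate_jsonl(path, REQUIRED_KEYS)
```

An empty `problems` list only shows that the file parses and has the expected keys; it does not guarantee that the platform accepts the file.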

Creating a Dataset Using Data Engineering

The procedure for creating an evaluation dataset is the same as that for creating a training dataset, so this section gives only a brief outline of the steps. For details, see Using Data Engineering to Build a Third-Party Model Dataset.

- Log in to ModelArts Studio and access the required workspace.

- In the navigation pane, choose Data Engineering > Data Acquisition. On the Import Task page, click Create Import Job in the upper right corner.

- On the Create Import Job page, select the required dataset type, file format, and import source, and select the storage path to upload the data file.

Table 3 lists the formats supported by the NLP model evaluation dataset.

- After the data file is uploaded, enter the dataset name and description, and click Create Now.

- In the navigation pane, choose Data Engineering > Data Publishing > Publish Task. On the displayed page, click Create Data Publish Task in the upper right corner.

- On the Create Data Publish Task page, select a dataset modality and a dataset file.

- Click Next, select a format, enter the name, select the dataset visibility, and click OK.

To evaluate a Pangu model, you need to publish the dataset in Pangu format.

Setting Prompts

Set a prompt template for the evaluation set.

- Binding prompts during evaluation set import

Import an NLP model evaluation set in Excel, CSV, or JSONL format. Fill in the prompt template and prompt name columns in the uploaded Excel file.

- Setting a prompt template for an existing evaluation set

Click Evaluation Set Management. On the Custom Evaluation Dataset page, select the evaluation set and click the evaluation set name. The evaluation set details page is displayed.

Select a category name in the Configure prompt words area, and then click Configure prompt words.
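Conceptually, a prompt template wraps each evaluation sample in fixed instructions before it is sent to the model. The sketch below shows this idea with Python string formatting. The `{question}` placeholder syntax and the field name are assumptions for illustration only; the placeholder syntax that the platform expects in its prompt template column may differ.

```python
def render_prompt(template, sample):
    """Fill a prompt template with the fields of one evaluation sample."""
    return template.format(**sample)

# Hypothetical template and sample.
template = "Answer the following question concisely.\nQuestion: {question}\nAnswer:"
sample = {"question": "What is the capital of France?"}

prompt = render_prompt(template, sample)
```

Keeping the template separate from the samples means the same evaluation set can be reused with different instructions.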
