The LLM node lets you call a large language model (LLM) from a workflow. You configure a deployed model for the node, write a prompt, and set parameters so that the model performs a specific task.

The LLM node is optional. If your workflow does not need one, skip this section.

To configure an LLM node, perform the following steps:

- Click Add Node at the bottom of the canvas, drag the LLM node from the node drawer to the canvas, and click the node to open the node configuration page.

- Configure the LLM node by referring to Table 1.

Table 1 LLM node configuration

| Configuration Type | Parameter Name | Description |
| --- | --- | --- |
| Parameter configuration | Input params | Input parameters required for model processing. Their values are dynamically inserted into the prompt. The default input parameter name is query.<br>- Param name: The value can contain only letters, digits, and underscores (_), and cannot start with a digit.<br>- Type and Value: Type can be set to ref or literal. With ref, you select an output parameter of a previous node in the workflow. With literal, user-defined content is passed to the LLM; whatever the previous node outputs, that output is not passed to the LLM. |
| | Output params | Parses the output of the LLM node and provides it to the subsequent node.<br>- Param name: The name must contain at least one character and can contain only letters (A–Z and a–z), digits (0–9), and underscores (_). NOTE: The LLM node has a built-in output parameter rawOutput, which holds the original, unparsed output of the node and can be referenced directly by the subsequent nodes connected to the LLM node. A user-defined output parameter cannot use the same name as rawOutput.<br>- Param type: type of the output parameter. The options are String, Integer, Number, Boolean, Object, and Array.<br>- Description: description of the output parameter.<br>- Output format: The supported output formats are Text, Markdown, and JSON.<br>Text: original content output by the LLM. Only one parameter is supported. The default parameter name is raw_output and can be changed.<br>Markdown: Select this option when the model is expected to output content in Markdown format. Only one parameter is supported. The default parameter name is raw_output and can be changed.<br>JSON: The model must respond in JSON format. Multiple parameters can be added. |
| Model configuration | Model selection | Select an LLM that has been configured in the model access module. |
| | Top P | During generation, the model considers the most probable words, in descending order of probability, until their cumulative probability reaches the Top P value, and selects only from that set. A lower Top P value restricts the model to high-probability words and reduces the diversity of the output; a higher value increases it. You are advised not to adjust this parameter together with the Temperature parameter. |
| | Temperature | Controls the randomness of the generated result. A higher temperature makes the output more diverse and creative. A lower temperature makes the output adhere more closely to the instructions but reduces its diversity. |
| Prompt configuration | Prompt | The prompt instructs the model on how to complete the task. When configuring a prompt, use the {{variable}} format to reference the input parameters defined on the current node. Each placeholder is replaced with the parameter value before the prompt is passed to the model. |
| | Memory | Whether to enable the memory function. When enabled, the content of multi-turn conversations is recorded. It is disabled by default. |
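
To make the input parameter rules and the {{variable}} substitution concrete, the following Python sketch validates a parameter name, resolves ref and literal inputs, and renders a prompt. It is an illustration only, not the platform's implementation. The function names (`is_valid_param_name`, `resolve_inputs`, `render_prompt`), the dictionary layout of the parameters, the `start.query` reference key, the tolerance for whitespace inside the braces, and the error raised for an undefined placeholder are all assumptions.

```python
import re

# Documented rule for input parameter names: letters, digits, and underscores
# only, and the name must not start with a digit.
_PARAM_NAME = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")
# Matches {{name}}; allowing whitespace inside the braces is an assumption.
_PLACEHOLDER = re.compile(r"\{\{\s*([A-Za-z_][A-Za-z0-9_]*)\s*\}\}")


def is_valid_param_name(name: str) -> bool:
    """Return True if the whole name satisfies the documented naming rule."""
    return _PARAM_NAME.fullmatch(name) is not None


def resolve_inputs(input_params: dict, upstream_outputs: dict) -> dict:
    """Turn each input parameter into a concrete value.

    'ref' looks the value up among the outputs of previous nodes;
    'literal' uses the user-defined value and ignores upstream outputs.
    """
    resolved = {}
    for name, spec in input_params.items():
        if not is_valid_param_name(name):
            raise ValueError(f"invalid parameter name: {name!r}")
        if spec["type"] == "ref":
            resolved[name] = upstream_outputs[spec["value"]]
        elif spec["type"] == "literal":
            resolved[name] = spec["value"]
        else:
            raise ValueError(f"unknown parameter type: {spec['type']!r}")
    return resolved


def render_prompt(template: str, values: dict) -> str:
    """Replace every {{name}} placeholder with the resolved parameter value."""
    def substitute(match):
        name = match.group(1)
        if name not in values:
            raise KeyError(f"prompt references undefined input parameter: {name}")
        return str(values[name])
    return _PLACEHOLDER.sub(substitute, template)


# Hypothetical workflow data, for illustration only.
upstream_outputs = {"start.query": "What is nucleus sampling?"}
input_params = {
    "query": {"type": "ref", "value": "start.query"},
    "tone": {"type": "literal", "value": "concise"},
}
values = resolve_inputs(input_params, upstream_outputs)
prompt = render_prompt("Answer in a {{tone}} style: {{query}}", values)
print(prompt)  # Answer in a concise style: What is nucleus sampling?
```

In this sketch, the ref parameter picks up whatever the previous node produced, while the literal parameter always carries the fixed text, which mirrors the distinction described in Table 1.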

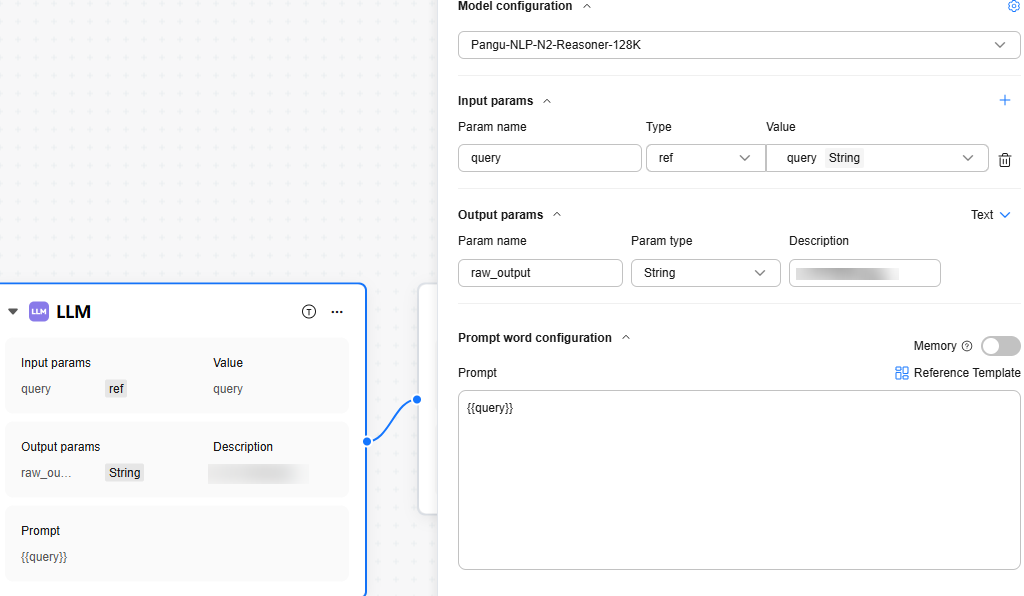

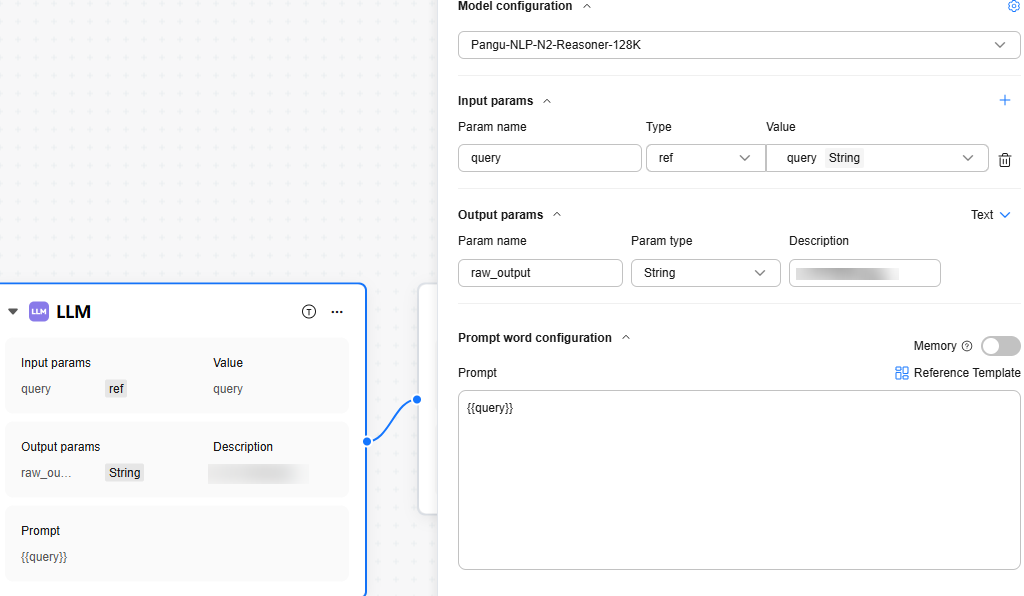

Figure 1 LLM node configuration example
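
The effect of the Temperature and Top P parameters in Table 1 can be illustrated on a toy next-word distribution. The sketch below is a simplified, assumed model of how these settings are commonly applied (temperature scaling followed by top-p filtering); the deployed model may implement them differently. The vocabulary, the logit values, and the function names are hypothetical.

```python
import math


def softmax_with_temperature(logits: dict, temperature: float) -> dict:
    """Convert raw scores to probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())  # subtract the maximum for numerical stability
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_p_filter(probs: dict, top_p: float) -> dict:
    """Keep the most probable words until their cumulative probability
    reaches top_p, then renormalize the kept probabilities."""
    kept = {}
    cumulative = 0.0
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    for tok, p in ranked:
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}


# Hypothetical scores for four candidate words.
logits = {"cat": 2.0, "dog": 1.0, "fish": 0.0, "rock": -1.0}

sharp = softmax_with_temperature(logits, 0.5)  # low temperature: peaked
flat = softmax_with_temperature(logits, 2.0)   # high temperature: flatter
candidates = top_p_filter(softmax_with_temperature(logits, 1.0), 0.9)

print({tok: round(p, 3) for tok, p in sharp.items()})
print({tok: round(p, 3) for tok, p in flat.items()})
print(sorted(candidates))
```

With these toy values, the lower temperature concentrates probability on the top word, the higher temperature spreads it out, and a Top P of 0.9 removes the least likely word from the candidate set.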

- After completing the configuration, click OK.

- Connect the LLM node to other nodes.
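
When the output format is JSON, subsequent nodes can reference the parsed output parameters as well as the built-in rawOutput. The following Python sketch shows one way such parsing could work. It is an assumption-laden illustration, not the platform's implementation: the function name `parse_llm_output`, the mapping from the documented parameter types to Python types, and the errors raised for missing or mistyped fields are all hypothetical.

```python
import json

# Assumed mapping from the documented parameter types to Python checks.
# bool is excluded from Integer and Number because Python treats it as an int.
_TYPE_CHECKS = {
    "String": lambda v: isinstance(v, str),
    "Integer": lambda v: isinstance(v, int) and not isinstance(v, bool),
    "Number": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
    "Boolean": lambda v: isinstance(v, bool),
    "Object": lambda v: isinstance(v, dict),
    "Array": lambda v: isinstance(v, list),
}


def parse_llm_output(raw_text: str, output_params: dict) -> dict:
    """Parse a JSON response into the declared output parameters.

    output_params maps each parameter name to its declared type.
    The unparsed text is always returned under 'rawOutput'.
    """
    if "rawOutput" in output_params:
        raise ValueError("rawOutput is reserved for the built-in output parameter")
    data = json.loads(raw_text)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")

    result = {"rawOutput": raw_text}
    for name, param_type in output_params.items():
        if name not in data:
            raise KeyError(f"model response is missing output parameter: {name}")
        value = data[name]
        if not _TYPE_CHECKS[param_type](value):
            raise TypeError(f"output parameter {name!r} is not of type {param_type}")
        result[name] = value
    return result


# Hypothetical model response and output parameter declarations.
raw = '{"sentiment": "positive", "score": 0.92, "tags": ["billing", "refund"]}'
declared = {"sentiment": "String", "score": "Number", "tags": "Array"}
outputs = parse_llm_output(raw, declared)
print(outputs["sentiment"], outputs["score"], outputs["tags"])
```

A downstream node would then read `outputs["sentiment"]` or fall back to `outputs["rawOutput"]` when it needs the unparsed text.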