Upgrading the Version of an OpenSearch Cluster

In CSS, you can upgrade the version of an OpenSearch cluster to use feature and performance enhancements or fix known issues.

| Upgrade Type | Scenario | Upgrade Process |
|---|---|---|
| Same-version upgrade | Update the cluster's kernel patches. The cluster is upgraded to the latest image of the current version to fix known issues or optimize performance. For example, if the cluster version is 1.3.6(1.3.6_24.3.3_0102), upon a same-version upgrade, the cluster will be upgraded to the latest image 1.3.6(1.3.6_24.3.4_0109) of version 1.3.6. (The version numbers used here are examples only.) | Nodes in clusters are upgraded one by one to avoid interrupting services. |
| Cross-version upgrade | Upgrade a cluster to the latest image of the target version to gain new features or consolidate versions. For example, if the cluster version is 1.3.6(1.3.6_24.3.3_1224), upon a cross-version upgrade, the cluster will be upgraded to the latest image 2.19.0(2.19.0_25.9.0_1107) of version 2.19.0. (The version numbers used here are examples only.) | Nodes in clusters are upgraded one by one to avoid interrupting services. |

Version Support

Constraints

- A maximum of 20 clusters can be upgraded at the same time.

- During an upgrade, the data on the node being upgraded must be migrated to other nodes. The data migration timeout is 48 hours per node, and the upgrade fails if this timeout expires. If the cluster holds a large amount of data, manually adjust the data migration rate and avoid migrating during peak hours.

Upgrade Impact

Before upgrading a cluster, it is essential to assess the potential impacts and review operational recommendations. This enables proper scheduling of the upgrade time, ensuring a smooth upgrade process and minimizing service interruptions.

- Impact on performance

The nodes of a cluster are upgraded one at a time to ensure service continuity. However, data migration that occurs during the upgrade consumes I/O performance, and taking individual nodes offline still has some impact on the overall cluster performance.

To minimize this impact, adjust the data migration rate based on the cluster's traffic cycle: increase the rate during off-peak hours to shorten the task duration, and decrease it before peak hours arrive to protect cluster performance. The data migration rate is determined by the indices.recovery.max_bytes_per_sec parameter. The default value of this parameter is the number of vCPUs multiplied by 8 MB/s. For example, for four vCPUs, the default data migration rate is 32 MB/s. You can adjust it based on service requirements.

    PUT /_cluster/settings
    {
      "transient": {
        "indices.recovery.max_bytes_per_sec": "128MB"
      }
    }

- Impact on request handling

During the upgrade, nodes are upgraded one at a time. Requests sent to the node that is being upgraded may fail. To mitigate this impact, the following measures may be taken:

- Use a VPC endpoint or a dedicated load balancer to handle access requests to your cluster, which makes sure that requests are automatically routed to available nodes.

- Enable an exponential backoff & retry mechanism on the client (configure three retries).

- Perform the upgrade during off-peak hours.

- Rebuilding OpenSearch Dashboards and Cerebro

OpenSearch Dashboards and Cerebro will be rebuilt during the upgrade, making them temporarily unavailable. Additionally, due to cross-version compatibility issues, OpenSearch Dashboards may become unavailable during the upgrade. These problems will go away once the upgrade is completed.

- Characteristics of the upgrade process

Once started, an upgrade task cannot be stopped until it succeeds or fails. An upgrade failure affects only a single node and does not interrupt services if the data has replicas. If necessary, you can restore a node that failed to upgrade by following Replacing Specified Nodes for an OpenSearch Cluster.
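The client-side retry recommended under "Impact on request handling" can be sketched as follows. This is a minimal illustration, not a CSS or OpenSearch client API: the function name `call_with_backoff`, its parameters, and the choice of `ConnectionError` as the retryable failure are all assumptions. It retries a failed call up to three times, doubling the maximum wait each time and adding random jitter so that clients do not retry in lockstep.

```python
import random
import time


def call_with_backoff(send, retries=3, base_delay=0.5, max_delay=8.0, sleep=time.sleep):
    """Call send() and retry on ConnectionError with exponential backoff.

    send       -- hypothetical zero-argument callable that issues one request
    retries    -- number of retries after the first attempt (three, as advised above)
    base_delay -- upper bound of the first wait, in seconds (assumed value)
    max_delay  -- cap on any single wait, in seconds (assumed value)
    sleep      -- injectable sleep function, useful for testing
    """
    attempt = 0
    while True:
        try:
            return send()
        except ConnectionError:
            if attempt >= retries:
                # Retries exhausted: surface the last error to the caller.
                raise
            # The wait ceiling doubles on each attempt: 0.5 s, 1 s, 2 s, ...
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            # Full jitter: wait a random time between 0 and the ceiling.
            sleep(random.uniform(0, ceiling))
            attempt += 1
```

In practice, `send` would wrap a single request to the cluster, and only failures that are safe to repeat (for example, connection errors on idempotent requests) should be retried.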

Upgrade Duration

The following formula can be used to estimate how long it takes to upgrade a cluster:

Upgrade duration (min) = 15 (min) x Total number of nodes to be upgraded + Data migration duration (min)

where,

- 15 minutes is how long non-data migration operations (e.g., initialization and image upgrade) typically take per node. It is an empirical value.

- The total number of nodes is the sum of the number of data nodes, master nodes, client nodes, and cold data nodes in the cluster.

Data migration duration (min) = Total data size (MB)/[Total number of vCPUs of the data nodes x 8 (MB/s) x 60 (s) x Concurrency]

where,

- Typically, when concurrency is higher than 1, the actual data migration rate can only reach half of the throughput intended by the configured concurrency, depending on the cluster load.

- 8 MB/s indicates that each vCPU can process 8 MB of data per second. It is an empirical value.

The formulas above give estimates under ideal conditions. In practice, it is advisable to add a buffer of 20% to 30%.
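As a worked example, the two formulas above can be written as a small calculator. This is a sketch only: the function and parameter names are made up for illustration, and the 15-minute and 8 MB/s constants are the empirical values quoted above. It does not model the caveat that concurrency above 1 may deliver only about half of the intended throughput.

```python
def default_recovery_rate_mb_per_s(vcpus):
    """Default data migration rate: number of vCPUs multiplied by 8 MB/s."""
    return vcpus * 8


def migration_minutes(total_data_mb, data_node_vcpus, concurrency=1, per_vcpu_mb_s=8):
    """Data migration duration (min), following the formula above."""
    return total_data_mb / (data_node_vcpus * per_vcpu_mb_s * 60 * concurrency)


def estimate_upgrade_minutes(node_count, total_data_mb, data_node_vcpus,
                             concurrency=1, per_node_overhead_min=15):
    """Upgrade duration (min) = 15 x nodes to upgrade + data migration duration."""
    return (per_node_overhead_min * node_count
            + migration_minutes(total_data_mb, data_node_vcpus, concurrency))


if __name__ == "__main__":
    # Hypothetical cluster: 3 data nodes with 4 vCPUs each (12 vCPUs in total),
    # 3 master nodes, and 576,000 MB of data to migrate.
    ideal = estimate_upgrade_minutes(node_count=6, total_data_mb=576_000,
                                     data_node_vcpus=12)
    print(ideal)  # 190.0 minutes under ideal conditions
    # Apply the recommended 20% to 30% buffer.
    print(round(ideal * 1.2), "to", round(ideal * 1.3), "minutes with buffer")
```

For this hypothetical cluster, migration takes 576,000 / (12 x 8 x 60) = 100 minutes and the per-node operations take 6 x 15 = 90 minutes.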

Pre-Upgrade Check

To ensure a successful upgrade, you must check the items listed in the following table before performing an upgrade.

| Check Item | Check Method | Description | Normal Status |
|---|---|---|---|
| Cluster status | System check | After an upgrade task is started, the system automatically checks the cluster status. Clusters whose status is green or yellow can work properly and have no unallocated primary shards. | The cluster is available and there are no ongoing tasks. |
| Node quantity | System check | After an upgrade task is started, the system automatically checks node quantities. To ensure service continuity, there must be at least two nodes of each type in each AZ of the cluster. For a cluster with master nodes, there must be at least two data nodes. For a cluster without master nodes, the number of data nodes plus cold data nodes must be at least three. |  |
| Disk capacity | System check | After an upgrade task is started, the system automatically checks the disk capacity. During the upgrade, nodes are brought offline one by one and then new nodes are created. Ensure that the total disk capacity of the remaining nodes is sufficient for handling all the data of the cluster, and that the nodes' disk usage stays below 80%. | After a single node is brought offline, the total disk capacity of the remaining nodes is sufficient for handling all the data of the cluster, and the nodes' disk usage stays below 80%. |
| Data replicas | System check | Check that the remaining data nodes and cold data nodes can hold the maximum number of primary and replica shards of the indexes in the cluster. There should be no unallocated replicas during the upgrade. | Number of data nodes + Number of cold data nodes > Maximum number of index replicas + 1 |
| Data backup | System check | Before the upgrade, back up data to prevent data loss caused by upgrade failures. When submitting an upgrade task, you can choose whether to check for full index snapshots. | Data has been backed up. |
| Resources | System check | After an upgrade task is started, the system automatically checks resources. Resources will be created during the upgrade. Ensure that resources are available. | Resources are available and sufficient. |
| Custom plugins | System and manual check | Perform this check only if custom plugins are installed in the source cluster. If a cluster has a custom plugin, upload all plugin packages of the target version on the plugin management page before the upgrade. The uploaded packages are then installed on the new nodes during the upgrade; if they are not uploaded, the custom plugins are lost after the cluster is upgraded. After an upgrade task is started, the system automatically checks whether the custom plugin package has been uploaded, but you need to check whether the uploaded plugin package is correct. NOTE: If the uploaded plugin package is incorrect or incompatible, it cannot be automatically installed during the upgrade, and the upgrade task fails. To restore the cluster, you can terminate the upgrade task and restore the node that failed to upgrade by following Replacing Specified Nodes for an OpenSearch Cluster. After the upgrade is complete, the status of the custom plugin is reset to Uploaded. | The plugin package of the cluster to be upgraded has been uploaded to the plugin list. |
| Custom configurations | System check | During the upgrade, the system automatically synchronizes the content of the cluster configuration file opensearch.yml. | Clusters' custom configurations are not lost after the upgrade. |
| Non-standard operations | Manual check | Check whether non-standard operations have been performed in the cluster. Non-standard operations are manual operations that are not recorded. They cannot be carried over automatically during the upgrade. Examples include modifications to the opensearch_dashboards.yml configuration file, system settings, and return routes. | Some non-standard operations are compatible. For example, the modification of a security plugin can be retained through metadata, and the modification of system configuration can be retained using images. Some non-standard operations, such as the modification of the opensearch_dashboards.yml file, cannot be retained, and you must back up the file in advance. |
| Compatibility check | System and manual check | After a cross-version upgrade task is started, the system automatically checks whether the source and target versions have incompatible configurations. If a custom plugin is installed for a cluster, you must manually check the plugin's compatibility with the target version. | Configurations before and after the cross-version upgrade are compatible. |
| Check Cluster Loads | System and manual check | If the cluster is heavily loaded, there is a high probability that the upgrade will get stuck or fail. You are advised to check the cluster load before the upgrade and perform the upgrade only during off-peak hours. You can also choose to check the cluster load while configuring upgrade information. |  |
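Two of the checks in the table above are simple arithmetic and can be pre-evaluated before submitting an upgrade task. The sketch below is an illustration under stated assumptions, not the check that CSS itself runs: the function names are hypothetical, and the disk check assumes the worst case in which the node with the largest disk is the one taken offline.

```python
def replicas_ok(data_nodes, cold_data_nodes, max_index_replicas):
    """Data replicas rule: data nodes + cold data nodes > max index replicas + 1."""
    return data_nodes + cold_data_nodes > max_index_replicas + 1


def disk_ok(node_capacities_gb, total_data_gb, max_usage=0.80):
    """Disk capacity rule: with one node offline, the remaining nodes must hold
    all cluster data while staying below 80% disk usage.

    Assumption: the largest node is the one removed (worst case), and usage is
    computed against the combined capacity of the remaining nodes.
    """
    remaining_gb = sum(node_capacities_gb) - max(node_capacities_gb)
    if remaining_gb <= 0:
        # A single-node cluster has nowhere to migrate data to.
        return False
    return total_data_gb / remaining_gb < max_usage


if __name__ == "__main__":
    # Hypothetical cluster: three 500 GB data nodes, indexes with one replica.
    print(replicas_ok(data_nodes=3, cold_data_nodes=0, max_index_replicas=1))
    print(disk_ok([500, 500, 500], total_data_gb=700))
```

With three 500 GB nodes, 700 GB of data fits on the remaining 1,000 GB at 70% usage, while 900 GB would exceed the 80% threshold.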

Performing the Upgrade Task

- Log in to the CSS management console.

- In the navigation pane on the left, choose Clusters > OpenSearch.

- In the cluster list, click the name of the target cluster. The cluster information page is displayed.

- Click the Cluster Snapshots tab, and perform a full data backup. For details, see Manually Creating a Snapshot.

When creating an upgrade task, you can choose to check whether the full index data has been backed up using snapshots. This helps to prevent data loss in case of an upgrade failure.

- Click the Version Upgrade tab, and set upgrade parameters.

Table 4 Upgrade parameters

Parameter

Description

Upgrade Type

Select an upgrade type.

- Same-version upgrade: upgrade kernel patches to the latest images within the current cluster version.

- Cross-version upgrade: upgrade a cluster to the latest image of the target version.

Target Image

Image of the target version. When you select an image, the image name and target version details are displayed.

The supported target versions are displayed in the drop-down list of Target Image. If no target image is available, possible causes are as follows:

- The current cluster is of the latest version.

- The current cluster was created before 2023 and has vector indexes.

- The new version images are unavailable in the current region.

- The current cluster does not support the upgrade type you have selected.

Agency

After a node is upgraded, NICs need to be reattached to it. To do that, you must have the permission to access VPC resources. By configuring an IAM agency, you can authorize CSS to access its VPC resources through an associated account.

- If you are configuring an agency for the first time, click Automatically Create IAM Agency to create css-upgrade-agency.

- If an IAM agency was automatically created earlier, you can click One-click authorization to automatically remove the permissions granted by the VPC Administrator role or the VPC FullAccess system policy and replace them with the following custom policies for finer-grained permission control.

"vpc:subnets:get", "vpc:ports:*"

- To use Automatically Create IAM Agency and One-click authorization, the following minimum permissions are required:

"iam:agencies:listAgencies", "iam:roles:listRoles", "iam:agencies:getAgency", "iam:agencies:createAgency", "iam:permissions:listRolesForAgency", "iam:permissions:grantRoleToAgency", "iam:permissions:listRolesForAgencyOnProject", "iam:permissions:revokeRoleFromAgency", "iam:roles:createRole"

- To use an IAM agency, the following minimum permissions are required:

"iam:agencies:listAgencies", "iam:agencies:getAgency", "iam:permissions:listRolesForAgencyOnProject", "iam:permissions:listRolesForAgency"

- Click Submit.

- In the displayed dialog box, configure upgrade check and acceleration options.

- Select whether to enable Check Full Index Snapshot.

When enabled, CSS checks for valid snapshots by matching indexes by name. Snapshots help prevent potential data loss caused by upgrade failures.

CSS cannot check the content or backup times of snapshots. You should manually check existing snapshots. If the most recent one is over one month old, create a new snapshot.

- Select whether to enable Check Cluster Loads.

During an upgrade, data migration and node restarts consume cluster resources and increase loads. When this option is enabled, CSS evaluates the cluster's overload risk, which reduces the likelihood of an upgrade failure caused by overload.

The check items are as follows:

- nodes.thread_pool.search.queue < 1000: Check that the maximum number of requests in the search queue is less than 1000.

- nodes.thread_pool.write.queue < 200: Check that the maximum number of requests in the write queue is less than 200.

- nodes.process.cpu.percent < 90: Check that the maximum CPU usage of cluster nodes is less than 90%.

- nodes.os.cpu.load_average/Number of vCPUs < 80%: Check that the number of running processes plus the number of processes waiting for CPUs is less than 80% of the total number of vCPUs.

If any of the results is abnormal, wait until the load drops or actively optimize it before performing the upgrade.

- Set Data migration concurrency control.

Increasing the data migration concurrency can accelerate the upgrade process, but faster data migration leads to higher I/O usage. A higher concurrency is likely to lead to higher cluster load, which may impact cluster performance. You are advised to retain the default value 1. The value should not exceed half of the number of data nodes.


- Click OK to start the pre-upgrade check. The system automatically performs the check based on Pre-Upgrade Check, and if the check is successful, proceeds to the upgrade.

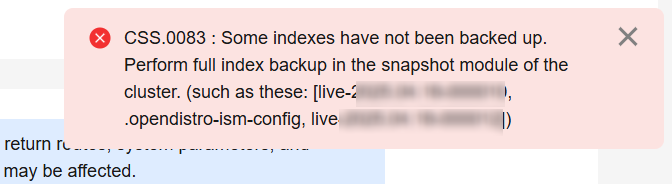

If the upgrade check fails, error information is displayed in the upper right corner of the console. Adjust the cluster configuration accordingly and try again.

Figure 1 Upgrade check errors

When Task Status in the task list below changes to Successful, the upgrade is completed.

- Confirm the upgrade result.

In the cluster list, find the target cluster, and check the cluster version in the Version column to see if the upgrade is successful.
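The thresholds listed under Check Cluster Loads, and the concurrency limit under Data migration concurrency control, can also be evaluated by hand before submitting the task. The following is a minimal sketch under stated assumptions: the per-node dictionary keys (`search_queue`, `write_queue`, `cpu_percent`, `load_average`, `vcpus`) and the function names are hypothetical, and how the metrics are collected is left out.

```python
SEARCH_QUEUE_LIMIT = 1000    # nodes.thread_pool.search.queue < 1000
WRITE_QUEUE_LIMIT = 200      # nodes.thread_pool.write.queue < 200
CPU_PERCENT_LIMIT = 90       # nodes.process.cpu.percent < 90
LOAD_PER_VCPU_LIMIT = 0.80   # nodes.os.cpu.load_average / vCPUs < 80%


def check_cluster_load(nodes):
    """Return the names of failed checks; an empty list means every check passes.

    nodes -- non-empty list of dicts with the hypothetical keys search_queue,
             write_queue, cpu_percent, load_average, and vcpus (one dict per node)
    """
    if not nodes:
        raise ValueError("at least one node is required")
    failures = []
    # Each check compares the worst (maximum) value across all nodes.
    if max(n["search_queue"] for n in nodes) >= SEARCH_QUEUE_LIMIT:
        failures.append("nodes.thread_pool.search.queue")
    if max(n["write_queue"] for n in nodes) >= WRITE_QUEUE_LIMIT:
        failures.append("nodes.thread_pool.write.queue")
    if max(n["cpu_percent"] for n in nodes) >= CPU_PERCENT_LIMIT:
        failures.append("nodes.process.cpu.percent")
    if max(n["load_average"] / n["vcpus"] for n in nodes) >= LOAD_PER_VCPU_LIMIT:
        failures.append("nodes.os.cpu.load_average")
    return failures


def concurrency_within_limit(concurrency, data_node_count):
    """Concurrency should be at least 1 and at most half the number of data nodes."""
    return 1 <= concurrency <= data_node_count / 2
```

If `check_cluster_load` returns any names, wait for the load to drop or optimize it before starting the upgrade, as described in the steps above.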

Checking the Upgrade Task

On the upgrade page, you can check the current upgrade task in the Upgrade Records area to learn the task progress and status.

Expand the task list and click View Progress to check the upgrade progress and node status.

If Task Status is Failed, you can retry the task or terminate it.

- Retry the task: Click Retry in the Operation column.

- Terminate the task: Click Terminate in the Operation column. Before terminating a cross-version upgrade task, ensure that none of the nodes have been upgraded.

After an upgrade task is terminated, nodes that failed to upgrade remain in their current state, while those that were successfully upgraded are not rolled back to the old version. As a result, nodes in the same cluster may run different versions. You are advised to restart the upgrade as soon as the relevant issues are resolved, so that all nodes run the same version.
