NPU Topology-aware Affinity Scheduling on a Single Node

NPU topology-aware affinity scheduling on a single node is a resource management technology based on the hardware topology of Ascend AI processors. By optimizing NPU allocation and network path selection, it reduces compute resource fragmentation and network congestion and maximizes NPU compute utilization. This can significantly improve the execution efficiency of AI training and inference jobs and enables efficient scheduling and management of Ascend compute resources.

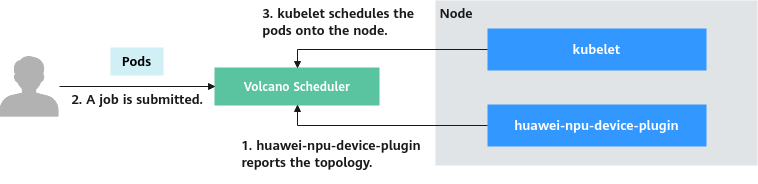

In a CCE standard or Turbo cluster, NPU topology-aware affinity scheduling on a single node requires multiple components to work together, as follows:

- huawei-npu-device-plugin in the CCE AI Suite (Ascend NPU) add-on queries and reports the topology of NPUs on each node.

- After a job is submitted, Volcano Scheduler selects the optimal node and NPU allocation scheme based on the job requirements and the NPU topology.

- After the pods are scheduled onto the node, kubelet allocates NPUs according to that allocation scheme.

Figure 1 How NPU topology-aware affinity scheduling is implemented

Principles of NPU Topology-aware Affinity Scheduling

Because hardware models use different interconnection architectures, the type of NPU topology-aware affinity scheduling that is supported depends on the hardware model. However, all types of scheduling follow these principles:

- Primary principle: Ensure high-speed network channels.

- Secondary principle: Minimize resource fragmentation.

The following interconnection terms are used in the table below:

- Huawei Cache Coherent System (HCCS) is a high-speed bus used for interconnection between CPUs and NPUs.

- Peripheral Component Interconnect Express (PCIe) is a high-speed serial computer expansion bus standard for connecting a computer's motherboard with peripherals.

- Serial Input/Output (SIO) is a serial communication method between a master device and a slave device that uses two lines: one data line and one clock line.

| Hardware Type | Interconnection Mode | Affinity Policy Type | Constraints |
|---|---|---|---|
| Snt9A | There are eight NPUs (NPUs 0 to 7) on a node. NPUs 0 to 3 form one HCCS ring, and NPUs 4 to 7 form another. Each HCCS ring is a small-network group, and the two groups are interconnected through PCIe. Network transmission within a group is faster than between groups. | Only small-network group affinity scheduling is supported. | |
| Snt9B | There are eight NPUs on a node. The NPUs use a star topology and are interconnected through HCCS. | Only basic affinity scheduling is supported. Nodes with higher resource usage are preferentially selected. | |
| Snt9C | There are eight training cards on a node. Each training card forms a die and has two NPUs. NPUs on the same training card are interconnected through SIO, and training cards are interconnected through HCCS. Network transmission within a die is faster than between dies. | Only die affinity scheduling is supported, in one of two modes: hard affinity or no affinity. | |
| Snt3P | There are eight NPUs on a node. The NPUs use a star topology and are interconnected through PCIe. | Only basic affinity scheduling is supported. Nodes with higher resource usage are preferentially selected. | |
| Snt3P IDUO2 | There are four inference cards on a node. Each inference card forms a die and has two NPUs. NPUs on the same inference card are interconnected through HCCS, and inference cards are interconnected through PCIe. Network transmission within a die is faster than between dies. | Only die affinity scheduling is supported, in one of two modes: hard affinity or no affinity. | |
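The two principles can be illustrated with a best-fit selection over the groups on one node. The following is a simplified sketch, not the algorithm of the cce-gpu-topology plugins: the ring layout is the Snt9A layout from the table, while the function `pick_group` and its tie-breaking rule (prefer the qualifying group with the fewest free NPUs) are assumptions.

```python
from typing import Dict, List, Optional, Set

def pick_group(free: Dict[str, Set[int]], request: int) -> Optional[List[int]]:
    """Hypothetical sketch: pick NPUs for a request inside a single group.

    free maps a group name (for example, an HCCS ring) to its free NPU IDs.
    Primary principle: only groups that can hold the whole request qualify,
    so all allocated NPUs share the high-speed link.
    Secondary principle: among qualifying groups, choose the one with the
    fewest free NPUs (best fit), which keeps larger groups intact.
    """
    candidates = [g for g, npus in free.items() if len(npus) >= request]
    if not candidates:
        return None  # no single group can hold the request
    best = min(candidates, key=lambda g: (len(free[g]), g))
    return sorted(free[best])[:request]

# Snt9A layout: ring0 = NPUs 0-3, ring1 = NPUs 4-7; NPU 0 is already in use.
free_npus = {"ring0": {1, 2, 3}, "ring1": {4, 5, 6, 7}}
print(pick_group(free_npus, 2))  # [1, 2]
print(pick_group(free_npus, 4))  # [4, 5, 6, 7]
```

For the 2-NPU request, the sketch uses the partially occupied ring0 and leaves ring1 whole, so a later 4-NPU request can still stay inside one HCCS ring.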

Prerequisites

- A CCE standard or Turbo cluster has been created. The cluster version requirements depend on the type of affinity scheduling:

  - Die affinity scheduling: The cluster version must be v1.23.18, v1.25.13, v1.27.10, v1.28.8, v1.29.4, v1.30.1, or later.

  - Small-network group affinity scheduling: The cluster version must be v1.23 or later.

- There are nodes of the corresponding type in the cluster. Snt9 nodes cannot be purchased directly for CCE standard or Turbo clusters. Instead, purchase Ascend Snt9 nodes in ModelArts in advance; CCE then automatically accepts and manages them. For details, see Creating a Standard Dedicated Resource Pool.

- CCE AI Suite (Ascend NPU) of v2.1.23 or later has been installed. For details about how to install the add-on, see CCE AI Suite (Ascend NPU).

- The Volcano Scheduler add-on has been installed. For details about the add-on version requirements, see Table 1. For details about how to install the add-on, see Volcano Scheduler.
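The cluster version rules above can be checked mechanically. The following is a hypothetical helper, not a CCE tool. It assumes that "or later" means any minor version newer than v1.30 qualifies, and that minor versions not listed (such as v1.24) do not; it also only accepts plain `vX.Y[.Z]` strings.

```python
import re
from typing import Tuple

# Minimum patch release per minor version for die affinity scheduling,
# taken from the prerequisites list above.
DIE_AFFINITY_MIN_PATCH = {
    (1, 23): 18, (1, 25): 13, (1, 27): 10,
    (1, 28): 8, (1, 29): 4, (1, 30): 1,
}

def parse_version(version: str) -> Tuple[int, int, int]:
    """Parse 'v1.28.8' or 'v1.23' into (major, minor, patch)."""
    m = re.fullmatch(r"v?(\d+)\.(\d+)(?:\.(\d+))?", version.strip())
    if not m:
        raise ValueError(f"unrecognized version: {version!r}")
    major, minor, patch = m.groups()
    return int(major), int(minor), int(patch or 0)

def supports_die_affinity(version: str) -> bool:
    major, minor, patch = parse_version(version)
    if (major, minor) in DIE_AFFINITY_MIN_PATCH:
        return patch >= DIE_AFFINITY_MIN_PATCH[(major, minor)]
    # Assumption: minors newer than v1.30 qualify; unlisted older minors do not.
    return (major, minor) > (1, 30)

def supports_small_network_group(version: str) -> bool:
    major, minor, _ = parse_version(version)
    return (major, minor) >= (1, 23)

print(supports_die_affinity("v1.28.8"), supports_die_affinity("v1.28.7"))  # True False
```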

Notes and Constraints

In a single pod, only one container can request NPU resources, and init containers cannot request NPU resources. Otherwise, the pod cannot be scheduled.
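These pod-level constraints can be pre-checked before a job is submitted. The following hypothetical validator (`check_npu_pod` is not a CCE or Volcano API) works on a pod spec expressed as a Python dict. Besides the constraint above, it applies the per-container NPU counts listed in the Use Case section, and it only knows the two resource names mentioned there: `huawei.com/ascend-1980` (Snt9C limits) and `huawei.com/ascend-310` (Snt3P IDUO2 limits). Other node types that use the same resource name may allow different counts.

```python
from typing import Any, Dict, List

# Allowed NPU counts per container, from the Use Case section of this page.
ALLOWED_NPU_COUNTS = {
    "huawei.com/ascend-1980": {1, 2, 4, 8, 16},  # Snt9C nodes
    "huawei.com/ascend-310": {1, 2, 4, 8},       # Snt3P IDUO2 nodes
}

def npu_requests(container: Dict[str, Any]) -> Dict[str, int]:
    """Return {resource_name: count} for the NPU resources of one container."""
    res = container.get("resources") or {}
    requests = res.get("requests") or {}
    limits = res.get("limits") or {}
    found = {}
    for name in ALLOWED_NPU_COUNTS:
        if name in requests or name in limits:
            # If only limits is set, Kubernetes defaults requests to it; for
            # extended resources the two must be equal when both are set.
            found[name] = int(requests.get(name, limits.get(name)))
    return found

def check_npu_pod(pod_spec: Dict[str, Any]) -> List[str]:
    """Return the constraint violations of a pod spec (empty list means OK)."""
    errors = []
    for c in pod_spec.get("initContainers") or []:
        if npu_requests(c):
            errors.append(f"init container {c.get('name')!r} requests NPUs")
    npu_containers = []
    for c in pod_spec.get("containers") or []:
        reqs = npu_requests(c)
        if reqs:
            npu_containers.append(c.get("name"))
        for name, count in reqs.items():
            if count not in ALLOWED_NPU_COUNTS[name]:
                errors.append(f"{c.get('name')!r}: {name}={count} is not one of "
                              f"{sorted(ALLOWED_NPU_COUNTS[name])}")
    if len(npu_containers) > 1:
        errors.append(f"only one container may request NPUs, found {npu_containers}")
    return errors

# The container from the Volcano job in the Use Case section.
pod = {
    "containers": [{
        "name": "running",
        "resources": {"requests": {"cpu": 1, "huawei.com/ascend-310": 2},
                      "limits": {"cpu": 1, "huawei.com/ascend-310": 2}},
    }],
}
print(check_npu_pod(pod))  # []
```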

Enabling NPU Topology-aware Affinity Scheduling

The configuration differs depending on the type of affinity scheduling.

Only Snt9A nodes support small-network group affinity scheduling. Configure this function as needed.

- Log in to the CCE console and click the cluster name to access the cluster console. In the navigation pane, choose Settings, and then click the Scheduling tab.

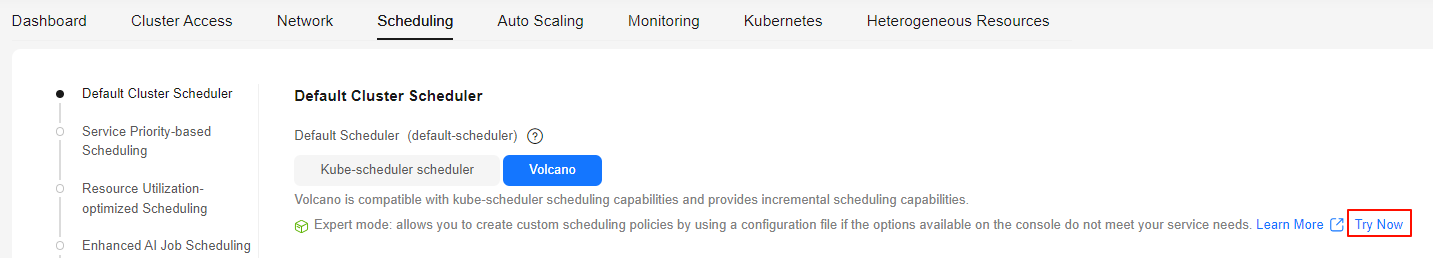

- Set Default Cluster Scheduler to Volcano. After Volcano Scheduler is enabled, enable small-network group affinity scheduling.

- (Optional) In Expert mode, click Try Now and set gpuaffinitytopologyaware.weight for the cce-gpu-topology-priority plugin to specify the priority weight of each small-network group. Before configuring the priority weights, ensure that the cce-gpu-topology-priority plugin has been configured.

```yaml
...
tiers:
- plugins:
  - name: priority
  - enableJobStarving: false
    enablePreemptable: false
    name: gang
  - name: conformance
- plugins:
  - enablePreemptable: false
    name: drf
  - name: predicates
  - name: nodeorder
- plugins:
  - name: cce-gpu-topology-predicate
  - name: cce-gpu-topology-priority  # If this plugin exists, cce-gpu-topology-priority has been configured. If not, add it.
    arguments:
      # Configure the priority weight.
      gpuaffinitytopologyaware.weight: 10
  - name: xgpu
- plugins:
  - name: nodelocalvolume
  - name: nodeemptydirvolume
  - name: nodeCSIscheduling
  - name: networkresource
...
```

Table 2 Parameters

| Parameter | Example Value | Description |
|---|---|---|
| arguments.gpuaffinitytopologyaware.weight | 10 | Priority weight. The value ranges from 0 to 2147483647. A higher weight indicates that a node with a higher NPU usage in the group is more likely to be selected to reduce resource fragments. |

- In the lower right corner of the tab, click Confirm Settings. In the displayed dialog box, confirm the modification and click Save.
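Table 2 states only the direction of the weight's effect: a higher weight makes nodes whose small-network group already has higher NPU usage more attractive. The following sketch illustrates that idea with a hypothetical score, `weight * used / total`; the function `group_score` and the formula are assumptions, not the documented algorithm of cce-gpu-topology-priority.

```python
MAX_WEIGHT = 2147483647  # upper bound of the weight range in Table 2

def group_score(weight: int, used: int, total: int) -> float:
    """Hypothetical score: the weight scaled by the group's NPU usage ratio."""
    if not 0 <= weight <= MAX_WEIGHT:
        raise ValueError("weight must be between 0 and 2147483647")
    if total <= 0 or not 0 <= used <= total:
        raise ValueError("used must be between 0 and total, and total must be positive")
    return weight * used / total

# Two candidate nodes for a 1-NPU request, each with a 4-NPU small-network
# group that still has room: (used NPUs, total NPUs) in that group.
nodes = {"node-a": (3, 4), "node-b": (1, 4)}
ranked = sorted(nodes, key=lambda n: group_score(10, *nodes[n]), reverse=True)
print(ranked)  # ['node-a', 'node-b']
```

Packing the request onto node-a fills its group, while node-b keeps three free NPUs in one group for larger requests. In this sketch, a weight of 0 gives every group a score of 0, so usage no longer influences the ranking.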

Snt9B and Snt3P nodes support basic affinity scheduling. Configure this function as needed.

- Log in to the CCE console and click the cluster name to access the cluster console. In the navigation pane, choose Settings, and then click the Scheduling tab.

- Set Default Cluster Scheduler to Volcano. After Volcano Scheduler is enabled, enable basic affinity scheduling. No other configuration is required.

- In the lower right corner of the tab, click Confirm Settings. In the displayed dialog box, confirm the modification and click Save.

Only Snt9C and Snt3P IDUO2 nodes support die affinity scheduling. Configure this function as needed.

- Log in to the CCE console and click the cluster name to access the cluster console. In the navigation pane, choose Settings, and then click the Scheduling tab.

- In the Volcano Scheduler configuration, die affinity topology scheduling is enabled by default. You can take the following steps to verify it.

- Set Default Cluster Scheduler to Volcano and click Expert mode > Try Now.

Figure 2 Expert mode > Try Now

- In the YAML file, check the parameters. Die affinity topology scheduling depends on the cce-gpu-topology-predicate and cce-gpu-topology-priority plugins, which are enabled by default in the Volcano Scheduler configuration. If the following parameters do not exist, manually configure them.

```yaml
...
tiers:
- plugins:
  - name: priority
  - enableJobStarving: false
    enablePreemptable: false
    name: gang
  - name: conformance
- plugins:
  - enablePreemptable: false
    name: drf
  - name: predicates
  - name: nodeorder
- plugins:
  - name: cce-gpu-topology-predicate
  - name: cce-gpu-topology-priority
  - name: xgpu
- plugins:
  - name: nodelocalvolume
  - name: nodeemptydirvolume
  - name: nodeCSIscheduling
  - name: networkresource
...
```

- After the cce-gpu-topology-predicate plugin is configured, hard affinity is used by default. The following parameters can be configured for the cce-gpu-topology-predicate and cce-gpu-topology-priority plugins.

```yaml
...
tiers:
- plugins:
  - name: priority
  - enableJobStarving: false
    enablePreemptable: false
    name: gang
  - name: conformance
- plugins:
  - enablePreemptable: false
    name: drf
  - name: predicates
  - name: nodeorder
- plugins:
  - name: cce-gpu-topology-predicate
    arguments:
      # No affinity is configured.
      npu-die-affinity: none
  - name: cce-gpu-topology-priority
    arguments:
      # Configure the priority weight, which is only applied in hard affinity.
      gpuaffinitytopologyaware.weight: 10
  - name: xgpu
- plugins:
  - name: nodelocalvolume
  - name: nodeemptydirvolume
  - name: nodeCSIscheduling
  - name: networkresource
...
```

Table 3 Parameters

| Parameter | Example Value | Description |
|---|---|---|
| arguments.npu-die-affinity | none | Die affinity scheduling. The options are required (hard affinity is used) and none (no affinity is configured). If this parameter is not specified, hard affinity is used by default. |
| arguments.gpuaffinitytopologyaware.weight | 10 | Priority weight, which is only applied in hard affinity. The value ranges from 0 to 2147483647. A higher weight indicates that a node with a higher NPU usage in the die is more likely to be selected to reduce resource fragments. |

- Click Save in the lower right corner.


- In the lower right corner of the tab, click Confirm Settings. In the displayed dialog box, confirm the modification and click Save.
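The defaults in Table 3 can be summarized as a small resolver. This is a hypothetical helper (`resolve_die_affinity` is not part of Volcano or CCE). It takes the `arguments` maps of the two plugins as Python dicts and applies the documented rules: hard affinity when npu-die-affinity is absent, and the weight ignored unless hard affinity is in effect.

```python
from typing import Any, Dict, Optional, Tuple

VALID_MODES = {"required", "none"}
MAX_WEIGHT = 2147483647

def resolve_die_affinity(predicate_args: Optional[Dict[str, Any]],
                         priority_args: Optional[Dict[str, Any]]) -> Tuple[str, Optional[int]]:
    """Return (mode, effective_weight) according to Table 3.

    - npu-die-affinity defaults to "required" (hard affinity) when absent.
    - gpuaffinitytopologyaware.weight only takes effect under hard affinity,
      so it is reported as None when the mode is "none" or it is not set.
    """
    mode = (predicate_args or {}).get("npu-die-affinity", "required")
    if mode not in VALID_MODES:
        raise ValueError(f"unsupported npu-die-affinity: {mode!r}")
    weight = (priority_args or {}).get("gpuaffinitytopologyaware.weight")
    if weight is not None and not 0 <= int(weight) <= MAX_WEIGHT:
        raise ValueError("weight must be between 0 and 2147483647")
    if mode == "required" and weight is not None:
        return mode, int(weight)
    return mode, None

# Arguments from the example configuration above.
print(resolve_die_affinity({"npu-die-affinity": "none"},
                           {"gpuaffinitytopologyaware.weight": 10}))  # ('none', None)
```

With the example configuration, the weight of 10 has no effect because npu-die-affinity is set to none.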

Use Case

The following uses die affinity scheduling as an example. Assume that an Snt3P IDUO2 node has four inference cards, each with two NPUs. Three NPUs remain available on two of the cards: two NPUs on one card and one NPU on the other. Create a Volcano job with one pod that requests two NPUs to verify that both NPUs are allocated from the same, fully idle inference card.
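Hard affinity can be read as a filter on candidate NPU sets. The following sketch enumerates every 2-NPU allocation from the free NPUs in this scenario and keeps those allowed in each mode. It assumes the numbering described later in this section (NPUs 0 and 1 on one card, NPUs 2 and 3 on the next, and so on) and, hypothetically, that the single free NPU on the partially used card is NPU 1. The rule for requests larger than one die is also an assumption.

```python
from itertools import combinations
from typing import Iterable, List, Tuple

DIE_SIZE = 2  # each Snt3P IDUO2 inference card (die) has two NPUs

def die_of(npu: int) -> int:
    """NPUs 0-1 are on card 0, NPUs 2-3 on card 1, and so on."""
    return npu // DIE_SIZE

def satisfies(alloc: Tuple[int, ...], mode: str) -> bool:
    """Check one candidate allocation against the die affinity mode."""
    if mode == "none":
        return True  # no affinity: any set of free NPUs is acceptable
    dies = {die_of(n) for n in alloc}
    if len(alloc) <= DIE_SIZE:
        # A request that fits in one die must not span dies.
        return len(dies) == 1
    # Assumption: larger requests must occupy whole dies only.
    return len(alloc) == len(dies) * DIE_SIZE

def candidates(free: Iterable[int], request: int, mode: str) -> List[Tuple[int, ...]]:
    """All allocations of `request` free NPUs that the mode allows."""
    return [c for c in combinations(sorted(free), request) if satisfies(c, mode)]

free_npus = [1, 2, 3]  # hypothetical: NPU 1 free on card 0, NPUs 2-3 free on card 1
print(candidates(free_npus, 2, "required"))  # [(2, 3)]
print(candidates(free_npus, 2, "none"))      # [(1, 2), (1, 3), (2, 3)]
```

Under hard affinity, only the fully idle card remains a valid choice, which is the behavior this use case verifies.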

- Create a Volcano job for executing tasks.

- Create a YAML file for the Volcano job.

```bash
vim volcano-job.yaml
```

Below is the file content. Only one container in a pod can request NPU resources, and init containers cannot request NPUs. Otherwise, the pod cannot be scheduled.

```yaml
apiVersion: batch.volcano.sh/v1alpha1
kind: Job
metadata:
  name: job-test
spec:
  maxRetry: 10000        # Maximum number of retries when the job fails
  schedulerName: volcano
  tasks:
  - replicas: 1
    name: worker
    maxRetry: 10000
    template:
      metadata:
      spec:
        containers:
        - image: busybox
          command: ["/bin/sh", "-c", "sleep 1000000"]
          imagePullPolicy: IfNotPresent
          name: running
          resources:
            requests:
              cpu: 1
              "huawei.com/ascend-310": 2
            limits:
              cpu: 1
              "huawei.com/ascend-310": 2
        restartPolicy: OnFailure
```

- The parameters in resources.requests are described as follows:

  - "huawei.com/ascend-1980": the number of NPUs requested on Snt9C nodes. The value can be 1, 2, 4, 8, or 16.

  - "huawei.com/ascend-310": the number of NPUs requested on Snt3P IDUO2 nodes. The value can be 1, 2, 4, or 8.


- Create the Volcano job.

```bash
kubectl apply -f volcano-job.yaml
```

Information similar to the following is displayed:

```
job.batch.volcano.sh/job-test created
```

- Check whether the pod is successfully scheduled.

```bash
kubectl get pod
```

If the following information is displayed, the Volcano job has been executed and the pod has been scheduled:

```
NAME                READY   STATUS    RESTARTS   AGE
job-test-worker-0   1/1     Running   0          20s
```


- Check the NPUs allocated to the pod.

```bash
kubectl describe pod job-test-worker-0
```

Information similar to the following is displayed:

```
Name:             job-test-worker-0
Namespace:        default
Priority:         0
Service Account:  default
Node:             192.168.147.31/192.168.147.31
Start Time:       Mon, 09 Sep 2024 21:23:01 +0800
Labels:           volcano.sh/job-name=job-test
                  volcano.sh/job-namespace=default
                  volcano.sh/queue-name=default
                  volcano.sh/task-index=0
                  volcano.sh/task-spec=worker
Annotations:      huawei.com/AscendReal: Ascend310-2,Ascend310-3
                  huawei.com/kltDev: Ascend310-2,Ascend310-3
                  scheduling.cce.io/gpu-topology-placement: huawei.com/ascend-1980=0x0c
```

On the Snt3P IDUO2 node, NPUs are numbered 0, 1, 2, and so on by default. NPU 0 and NPU 1 are on the same inference card, NPU 2 and NPU 3 are on the same inference card, and so on. According to the command output, NPU 2 and NPU 3 (Ascend310-2 and Ascend310-3) are allocated to the pod. The two NPUs are on the same inference card, which meets the die affinity scheduling policy.
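The scheduling.cce.io/gpu-topology-placement annotation appears to encode the allocated NPUs as a hexadecimal bitmask: 0x0c is binary 1100, so bits 2 and 3 are set, which matches Ascend310-2 and Ascend310-3 in the huawei.com/AscendReal annotation. This interpretation is inferred from the output above rather than from a documented format, and `decode_placement` is a hypothetical helper that handles a single `resource=0xMASK` entry only.

```python
def decode_placement(annotation: str) -> dict:
    """Decode a single 'resource=0xMASK' value into the indices of the set bits."""
    name, _, mask = annotation.strip().partition("=")
    bits = int(mask, 16)  # int() accepts the 0x prefix when the base is 16
    return {name: [i for i in range(bits.bit_length()) if (bits >> i) & 1]}

print(decode_placement("huawei.com/ascend-1980=0x0c"))
# {'huawei.com/ascend-1980': [2, 3]}
```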
