Creating a Data Quality Job

You can create quality jobs to apply the created rules to existing tables.

Procedure

- On the DataArts Studio console, locate a workspace and click DataArts Quality.

- (Optional) In the left navigation pane, choose and create a directory. If a directory exists, you do not need to create one. Note that rule templates, quality jobs, and comparison job are in the same directory.

Currently, you can create a directory using either of the following methods:

Click and enter a directory name in the displayed dialog box. In this way, you can create a maximum of seven layers of directories.

Figure 1 Creating a directory

and enter a directory name in the displayed dialog box. In this way, you can create a maximum of seven layers of directories.

Figure 1 Creating a directory You can also click

You can also click to synchronize the subjects in DataArts Architecture as directories. (Only published subjects can be synchronized.) The synchronized directories are consistent with the published subjects in DataArts Architecture and are displayed by layer, such as

to synchronize the subjects in DataArts Architecture as directories. (Only published subjects can be synchronized.) The synchronized directories are consistent with the published subjects in DataArts Architecture and are displayed by layer, such as  and

and  .

.

- The directories you create are not affected by the synchronization. If the name of a created directory conflicts with that of a subject:

- If they conflict during the first synchronization, a subject layer (such as

and

and  ) is added to the name of the directory.

) is added to the name of the directory. - If they conflict after the subject is modified, the synchronization fails.

- If they conflict during the first synchronization, a subject layer (such as

- Changes to subjects or subject layers in DataArts Architecture cannot be automatically synchronized. You must click

again to synchronize them.

again to synchronize them.

If a subject or subject layer in DataArts Architecture is deleted and synchronized to DataArts Quality, the corresponding directory will not be deleted. Instead, the subject attributes will be deleted from the directory.

- After the synchronization is complete, the system automatically displays the synchronization result details. You can view the names of the subjects that fail to be synchronized.

Figure 2 Synchronizing subjects from DataArts Architecture

- The directories you create are not affected by the synchronization. If the name of a created directory conflicts with that of a subject:

- On the Quality Jobs page, click Create. In the dialog box displayed, set the parameters based on Table 1.

Table 1 Quality job parameters Parameter

Description

*Job Name

Quality job name

Description

Information to better identify the quality job. It cannot exceed 1,024 characters.

Tag

Select desired tags from the list of tags that were defined Data Map. If Data Map is disabled, tags do not take effect.

*Directory

Directory for storing the quality job. You can select a created directory. For details about how to create a directory, see (Optional) Creating a Directory.

*Job Level

The options are Warning, Minor, Major, and Critical. The job level determines the template for sending notification messages.

Issue Handler

Handler of the issues detected by the quality job

Timeout

Timeout duration. Enter a value from 5 to 1440. The unit is minute. If this parameter is left empty or the default value 360 is used, the timeout duration is 6 hours by default and can be changed.

- Click Next to go to the Define Rule page, on which each rule card corresponds to a subjob. Click

on the rule card and configure it based on Table 2. You can also add more quality rules and click Next to apply them to a created database or table.

Figure 3 Configuring rules for a quality job

on the rule card and configure it based on Table 2. You can also add more quality rules and click Next to apply them to a created database or table.

Figure 3 Configuring rules for a quality job

Table 2 Parameters for configuring a rule Parameter

Sub-parameter

Description

Basic Information

Subjob Name

In the job execution result, each rule corresponds to a subjob. You are advised to set the subjob information so that you can view the job execution result and locate faults through logs more easily.

Description

Information to better identify the subjob It cannot exceed 1,024 characters.

Object

Rule Type

Database rule, table rule, field rule, cross-field rule, cross-source rule, multi-table and multi-field rule, or custom rule configured for specific fields in a table.

NOTE:- If you select a cross-field rule, you need to configure both a data table and a reference table in Object Scope.

- Currently, cross-source rules support only field comparison jobs between MRS Hive and DWS based on Hetu connections.

- Before configuring cross-source rules, you need to create the MRS Hive and GaussDB data sources in Management Center. For details, see MRS Hive Connection Parameters and Open Gauss Connection Parameters.

Data Connection

Source and destination objects support the following data connection types: DWS, MRS Hive, MRS Spark, DLI, RDS (MySQL and PostgreSQL), Hetu, Oracle, Doris, MRS Spark (Hudi), and MRS ClickHouse.

Select a created data connection from the drop-down list box.

NOTE:- Rules are based on data connections. Therefore, you must create data connections in Management Center before creating data quality rules. For MRS Hive and MRS Spark data sources, if Rule Type is set to Table rule or Field rule, Connection Type must be set to Proxy connection (MRS API connection is not supported) during the creation of connections to these data sources.

- For MRS Hive connected through a proxy, select MRS API connection or Proxy connection.

- MRS API connection: An MRS API is used for submission. By default, historical jobs are submitted through MRS APIs. You are advised to keep the default settings when editing the job.

- Proxy connection: A username and a password are used for submission. You are advised to select this mode for new jobs to prevent job submission failures caused by permission issues.

- The strict mode of the MRS Hive component is not supported.

Database

Select the database to which the configured data quality rules are applied.

NOTE:- The database is tailored to the created data connection.

- When Rule Type is set to Database rule or Table rule, set the data object to the corresponding database.

- When Rule Type is set to Custom rule, select the corresponding database.

scheme

This parameter is displayed and needs to be set only when the data source has a scheme.

Data Table

Select the table to which the configured data quality rules apply.

NOTE:- The table is closely related to the database.

- When Rule Type is set to Table rule, set the data object to the corresponding table.

- When Rule Type is set to Custom rule, select the corresponding table.

SQL

This parameter is mandatory if you select Custom rule for Rule Type. Enter a complete SQL statement to define how to monitor the quality of data objects.

The semantics of SQL statements can be verified. The verification result is for reference only.

Default Value

Input parameters for the custom SQL statement. The sequence of the parameters must be consistent with that of the default parameter values (when a data quality job is executed in DataArts Factory).

NOTE:When a data quality operator is scheduled by a data development job, the parameter values defined in the data development job are preferentially used.

Field

This parameter is used only for abnormal tables.

Example: column1, column2, or column3

Failure Policy

Select Ignore rule errors as required.

Select Fields

This parameter is mandatory if you select Field rule for Rule Type. Select a field in the corresponding data table.

NOTE:Fields names containing only one letter (such as a, b, c, and d) cannot be verified.

Data Object

This parameter is not required if you select Custom rule for Rule Type. Otherwise, this parameter is mandatory. Select the data fields for reference.

When you select a table name, the search box is case sensitive.

Reference Data Object

This parameter is mandatory if you select Cross-field rule for Rule Type. Select a reference data field.

When you select a table name, the search box is case sensitive.

Dimension

This parameter is mandatory if you select Custom rule for Rule Type. It associates the custom rule with one of the six quality attributes, including completeness, validity, timeliness, consistency, accuracy, and uniqueness.

Output Description

This parameter is mandatory if you select Custom rule for Rule Type.

- Description of each column in the SQL result. The description corresponds to the output result defined by the SQL relationship. If the number of fields in the output result description is different from the number of output parameters of the SQL, the configuration cannot be saved and an error message is displayed.

- The output description can contain only letters, digits, underscores (_), hyphens (-), and spaces.

- For example, if the SQL is set to select max(${Column1}),min(${Column2}) from ${Schema_Table1}, the output result is Maximum value,Minimum value (pay attention to the input order). If there are multiple fields in the output description, separate them with commas (,). If Chinese commas are used in the command output, they will be automatically replaced with English commas when you save the configuration.

Quality Rule

Input Parameter

This parameter is mandatory when Rule Type is set to Multi-table and multi-field.

For example, you can enter input parameter Input_String1 and set the parameter value based on the site requirements.

NOTE:When Rule Type is set to Multi-table and multi-field and the rule template version is selected, the SQL statement is automatically displayed. The SQL statement contains the same number of parameters as the number of input parameters. If the SQL statement does not contain any parameters, you do not need to set input parameters.

Compute Engine

Queue Name

Select the engine for running the quality job. This parameter is valid only for Hive, DLI, or Hetu data connections. Enter a queue name.

If the connection type is Hetu, and the rule type is not database rule, the queue name is the resource queue name of the Hetu engine. To view the resource queue name of the Hetu engine, log in to FusionInsight Manager of MRS and click HetuEngine in the navigation pane. In the Basic Information area, click the HSConsole WebUI link and view the resource queue name of the Hetu engine in the computing instance list.

Rule Template

Template

Select a system or custom rule template.

NOTE:The template type is closely related to the rule type. For details, see Table 1. In addition to system rule templates, you can select the custom rule template created in Creating a Data Quality Rule.

If Rule Type is set to Field rule and Rule Template is set to Regular expression verification or Regular expression verification ignore null, the regular expression rule can contain a maximum of 1,024 characters.

Version

This parameter is required only when you select a custom rule template. Select the version of the published custom rule template.

SQL

After you select the template name and version, the SQL statement is automatically displayed.

Statistical Method

This parameter is mandatory when Rule Type is set to Table rule and a rule template related to volatility is selected for Template.

You can select Table Partition or Full Table.

If you select Table Partition, click Edit and select the partition fields of the required table. Select a date field in yyyyMMdd format from the table.

You can delete the table partition fields that you have selected.

Rule Weight

Set the weight for the rule based on the field level. The value is an integer from 1 to 9. The default value is 5.

Object Scope

Scanning Scope

You can select All or Partial. The default value is All.

If you want only part of data to be computed or quality jobs to be executed periodically based on a timestamp, you can set a WHERE condition for scanning.

You can transfer environment variables to data quality jobs.

If rules can be configured for multiple tables, the data range of each table can be set independently. If both Data Object and Reference Data Object are set, you need to configure the data to scan in the scanning scope.

WHERE Clause

Enter a WHERE clause. The system will scan the data that matches the clause.

NOTE:Add and at the beginning of the clause for syntax verification during SQL statement generation. Otherwise, a syntax error will be reported.

For example, if you want to filter out the data for which the value range of the age field is (18, 60], enter the following WHERE clause:and age > 18 and age <= 60

You can also enter a dynamic SQL expression. For example, if you want to filter out the data generated 24 hours ago based on the time field, enter the following WHERE clause:

and time >= (date_trunc('hour', now()) - interval '24 h') and time <= (date_trunc('hour', now()))DataArts Quality allows you to transfer parameters. You can enter a condition expression to transfer environment variables. The following is an example:

and p_date=${target_date}You can also transfer parameters from DataArts Factory to DataArts Quality. DataArts Quality can also proactively obtain parameters from DataArts Factory. This is supported for both system and custom rule templates.

Default Value

This parameter is required when you select Partial for Scanning Scope.

Enter the default values of the parameters in the where clause text box.

NOTE:The default parameter value is preferentially transferred by DataArts Factory. If the value is empty, the quality job may encounter an error.

After DataArts Factory transfers parameters to DataArts Quality and the job is executed, you can click View SQL to view the parameters and their values transferred by DataArts Factory.

Alarm Condition

Alarm Expression

Set this parameter if you want to set an alarm condition for the current rule. If you want to use the logical operations of multiple rules to set a unified alarm condition expression, you do not need to set this parameter. Instead, you can set it on the next Set Alarm page.

After the alarm conditions are configured, the system determines whether to generate an alarm based on the value of Parameter and the alarm condition. Apart from a single alarm expression, you can also use more complex alarm conditions consisting of logical operators. The alarm expression supports the following logical operators, which can be enclosed by "(" and ")".

- +: addition

- -: subtraction

- *: multiplying

- /: division

- ==: equal to

- !=: not equal to

- >: greater than

- <: less than

- >=: greater than or equal to

- <=: less than or equal to

- !: non

- ||: or

- &&: and

For example, if Rule Template is set to Null value, you can set this parameter as follows:

- If you want an alarm to be generated when the number of rows with a null value is greater than 10, enter ${1}>10 (${1} is the number of rows with a null value).

- If you want an alarm to be generated when the ratio of fields with a null value is greater than 80%, enter ${3}>0.8 (${3} is the ratio of fields with a null value).

- If you want an alarm to be generated when the number of rows with a null value is greater than 10 or the ratio of fields with a null value is greater than 80%, enter (${1}>10)||(${3}>0.8) (${1} is the number of rows with a null value, ${3} is the ratio of fields with a null value, and || indicates that an alarm will be generated if either of the conditions is met).

Parameter

The value of this parameter is obtained from the output of the rule template. If you can click a parameter, the expression of the parameter is displayed in Alarm Expression.

For example, if Template is set to Null value, ${1} is displayed in Alarm Expression when you click alarm parameter Null Value Rows.

Logical Operator

This parameter is optional. You can perform logical operations on the result of an alarm expression to generate more complex alarm conditions.

You can move the cursor between two alarm expressions in Alarm Expression and click one of the following operators to insert them. You can also manually enter an operator. The current expression supports the following logical operators which can be enclosed by brackets ().

- +: addition

- -: subtraction

- *: multiplying

- /: division

- ==: equal to

- !=: not equal to

- >: greater than

- <: less than

- >=: greater than or equal to

- <=: less than or equal to

- !: non

- ||: or

- &&: and

For example, if Template is set to Null value and you want an alarm to be generated when the number of rows with a null value is greater than 10 or the ratio of fields with a null value is greater than 80%, enter (${1}>10)||(${3}>0.8) for Alarm Expression (${1} is the number of rows with a null value, ${3} is the ratio of fields with a null value, and || indicates that an alarm will be generated if either of the conditions is met).

Score Quality

This parameter is mandatory if you select Custom rule for Rule Type.

If you enable quality scoring and set Schema (if the data source contains schemas) and Table Name, the name in the execution result is <Database name>.<Table name>, and the name of a subjob whose quality is not scored is <Database name>.custom-sql.

Scoring Formula

This parameter is mandatory if you select Custom rule for Rule Type.

Enter a scoring formula. You can try the scoring formula.

For example, you can enter ${1}/${2}. The parameters in the formula are the same as those in the alarm expression. The return value of the formula ranges from 0 to 1.

Weight Rule

This parameter is mandatory if you select Custom rule for Rule Type.

Set the weight of the rule. The value is an integer from 1 to 9. The default value is 5.

Generate Anomaly Data

Enable Generate Anomaly Data and click Select next to Anomaly Table to store the anomaly data that does not comply with the preset rules.

NOTE:- The following built-in templates cannot be used to generate anomaly data: the Table rows template for the Table rule, Average value, Total values, Max. value, and Min. value templates for the Field rule, and Multi-table and multi-field template.

- If periodic scheduling or re-execution is configured for a quality job, abnormal data detected in each instance scan is inserted into the anomaly table. You are advised to periodically delete the data in the anomaly table to reduce cost and ensure good performance.

- If Rule Type is set to Cross-source rule, you must enable Generate Anomaly Data.

Anomaly Table

Click Select Database and Schema and configure the prefix and suffix of the name of the table to export data to, select an existing table, and set abnormal fields. If no abnormal field is configured, all fields in the table are exported by default.

NOTE:You can set Anomaly Table to Prefix and suffix, Prefix, Suffix, or Existing table. The table prefix starts with a letter or underscore (_) and can only contain letters, digits, and underscores (_). The table suffix can contain only letters, digits, and underscores (_).

If you select Existing table for Anomaly Table, you need to select a table name. The database and schema are set by default. If no table name is selected, Database name.scheme.undefined is displayed.

When you set an anomaly table, the system adds suffix err to the table name by default.

Output Settings

- Output Rule Settings: If you select this option, the quality job settings will show up in the anomaly tables so that you can view the anomaly data sources with ease.

- Output null: If you select this option, and the preset rules are not complied, the null value will show up in anomaly tables.

- Clear abnormal data: If you select this option, historical abnormal data of the current sub-rule will be deleted. Exercise caution when performing this operation. When the data quality job is rerun, historical data in the abnormal table is cleared.

Anomaly Data Amount

You can choose to export all anomaly data or the specified amount of anomaly data.

Anomaly Table SQL

This parameter is mandatory if you select Custom rule for Rule Type. You need to enter a complete SQL statement to specify the abnormal data to be exported. The system can verify the semantics of the anomaly table SQL statement.

Viewing SQL Statement

You can click this to view the SQL statements of the anomaly table, including created and injected SQL statements.

View Duplicate Rules

Click it to view the following duplicate rules:

- Determine the rule repetition based on tables and fields.

- View the related sub-rules and quality jobs that already exist.

When configuring the rule, if you have set some parameters, you can click Save Locally in the lower right corner. Then message "The browser saved the file successfully locally." is displayed. After that, the Apply Local Draft button is displayed in the upper right corner of the page. You can click Apply Local Draft. In the displayed dialog box that shows the message "Applying the local draft will overwrite all configurations (including basic configurations). Continue?", click Yes. The local draft will overwrite all current configurations. If you exit the quality job creation page and enter the page again in the future, the local draft is still available.

- When you save a local draft, the system verifies the data. The data can be saved only when it meets the basic requirements. The local draft is no longer available after the browser is closed.

- If you save local drafts multiple times, the latest draft overwrites the previous one.

- Click Next and set alarm information. If you have configured an alarm expression in the previous step, the configured expression is automatically displayed. If there are two or more sub-rules, you can use either of the following methods to configure alarms:

- Use the alarm conditions of sub-rules to report alarms.

- Perform mathematical and logical operations on the alarm parameter values to generate a universal alarm expression to specify whether to report alarms for jobs.

The alarm expression supports the following logical operators, which can be enclosed by "(" and ")".- +: addition

- -: subtraction

- *: multiplying

- /: division

- ==: equal to

- !=: not equal to

- >: greater than

- <: less than

- >=: greater than or equal to

- <=: less than or equal to

- !: non

- ||: or

- &&: and

- Click Next and set the subscription information. If the SMN notification is required, enable Notification, and set Notification Type and Topic.

The notification type can be Alarm triggered or Run successfully. Currently, only SMS and email are available for subscribing to topics.

- After notification is enabled, a notification is sent for all the subjobs of the configured notification type. If you enable alarming, you do not need to set the notifications for failures. Alarms will be automatically reported if a task fails.

- If you enable Suppress Notifications, you can set the condition for sending an alarm notification, that is, the number of consecutive alarms within a certain period of time (minutes). The period ranges from 1 minute to 360 minutes, and the number of consecutive times ranges from 1 to 10.

- If you enable Notification Combination, notifications of all subjobs in the job are sent together. For example, if subjob A and B both fail, a job-level notification is sent based on the notification type. This function is disabled by default.

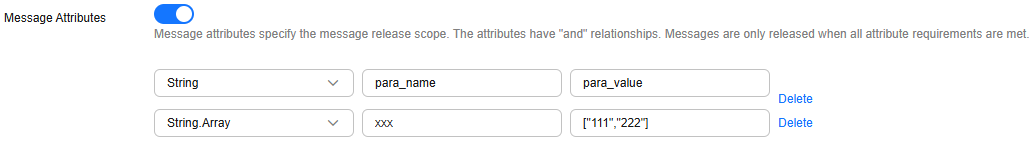

- If you enable Message Attributes, notifications will be sent based on the message attributes. Message attributes specify the message release scope. The system sends messages based on the configured attribute names and values. For example, you can configure different alarm receiving conditions for different personnel. You can configure a maximum of 10 String.Array values. For details about how to configure message attributes, see Using a Subscription Filtering Policy.

Figure 4 Configuring message attributes

- The constraints on SMN messages are as follows:

- The message attribute name can contain 1 to 32 characters, including only lowercase letters, digits, and underscores (_). It cannot start or end with an underscore (_), and cannot contain consecutive underscores (_). If the attribute type is String, the message attribute value is a string that can contain 1 to 32 characters, including only letters, digits, and underscores (_). If the attribute type is String.Array, the message attribute value is an array containing 1 to 10 strings. Each string in the array must be unique and contain 1 to 32 characters, including only letters, digits, and underscores (_). You can configure a maximum of 10 String.Array values.

- For details about the constraints on messages, see Publishing a Message.

- Click Next to go to the page where you can select a scheduling mode. Currently, Once and On schedule are supported. Set parameters for scheduling periodically by referring to Table 3. Click Submit.

- If Once is selected, a manual task instance is generated. A manual task has no dependency on scheduling and must be manually triggered.

- If On schedule is selected, a periodic instance is generated. A periodic instance is an instance snapshot that is automatically scheduled when a periodic task reaches the scheduled execution time.

- When a periodic task is scheduled once, an instance workflow is generated. You can perform routine O&M on scheduled instance tasks, such as viewing the running status, stopping and rerunning the scheduled tasks.

- Only MRS clusters that support job submission through an agency support periodic scheduling of quality jobs. MRS clusters that support job submission through an agency are as follows:

- MRS non-security cluster

- MRS security cluster whose version is later than 2.1.0, and that has MRS 2.1.0.1 or later installed

Table 3 Parameters Parameter

Description

Effective

Effective date of a scheduling task.

Cycle

The frequency at which a scheduling task is executed. Related parameters are:

- Minutes

- Hours

- Days

- Weeks

NOTE:

- If Cycle is set to Minutes or Hours, set the start time, end time, and interval for the scheduling task. Currently, the start time is in minute for stagger scheduling.

- If Cycle is set to Days, set a specified time when the scheduling task is enabled every day.

- If Cycle is set to Weeks, set Scheduling Time and Start from for the scheduling task, that is, XX o'clock XX minutes on XXX every week.

After a quality job is created, you can view it in the job list. You can also filter jobs by job name, creator, owner, table name, and time range. The system supports fuzzy search.

After a quality job is created, you can edit, delete, run, start scheduling, and stop scheduling it.

You cannot start scheduling a one-off quality job.

Running a Quality Job

To run a quality job, perform the following operations:

- In the left navigation pane on the DataArts Quality page, choose Quality Monitoring > Quality Jobs. In the right pane, locate a quality job.

- Click Run in the Operation column.

- In enterprise mode, select the development environment or production environment.

- Click OK.

Exporting Quality Jobs

You can export a maximum of 200 quality jobs. Each cell of the exported file can contain a maximum of 65,534 characters.

- In the left navigation pane on the DataArts Quality page, choose Quality Monitoring > Quality Jobs. In the right pane, select the quality jobs to export.

Figure 5 Export

- Click Export. The Export Quality Job dialog box is displayed.

Figure 6 Exporting Quality Jobs

- Click the Export Records tab to view the export result.

Figure 7 Export Records

- In the list of exported files, locate an exported quality job and click Download in the Operation column to download the Excel file of the job to the local PC.

Exporting All Quality Jobs

To export all quality jobs, perform the following operations: Each cell of the exported file can contain a maximum of 65,534 characters.

- Choose Quality Monitoring > Quality Jobs and click Export All.

Figure 8 Export All

- In the displayed Export Quality Job dialog box, click Export.

Figure 9 Exporting all quality jobs

- Click the Export Records tab to view the export result.

Figure 10 Export Records

- In the list of exported files, locate an exported quality job and click Download in the Operation column to download the Excel file of the job to the local PC.

Importing Quality Jobs

You can import a file containing a maximum of 4 MB data. Each cell of the file to be imported can contain a maximum of 65,534 characters.

- In the left navigation pane on the DataArts Quality page, choose Quality Monitoring > Quality Jobs. In the right pane, click Import. The Import Quality Job dialog box is displayed.

Figure 11 Importing quality jobs

- On the Import Configurations tab page, set Duplicate Name Policy.

- Terminate: If quality job names repeat, all quality jobs will fail to be imported.

- Skip: If quality job names repeat, the quality jobs will still be imported.

- Overwrite: If quality job names repeat, new jobs will replace existing ones with the same names.

If you select Overwrite, stop job scheduling before uploading a file. Otherwise, the upload will fail.

- Click Upload and select the prepared data file.

Edit the data file using either of the following methods:

- (Recommended) Click Export to export data and import the data to the system directly or import it after modification.

- Click Download Template, fill in the template with the data to import, and import the data to the system.

- Configure resource mapping for the data connection, cluster, catalog, and topic. If you do not configure the resource mapping, the original mapping is used by default.

Figure 12 Configuring the resource mapping

- Data Connection: Select the type of the imported data connection.

- Cluster: If the data connection type is DLI, select the corresponding queue.

- Directory: Select the directory where the imported quality job is stored.

- Topic: If SMN is configured, you need to select a topic.

- Click Import to import the Excel template to the system.

- Click the Import Records tab to view the import records.

Running Quality Jobs

You can run a maximum of 200 quality jobs.

- In the left navigation pane on the DataArts Quality page, choose Quality Monitoring > Quality Jobs. In the right pane, select the quality jobs you want to run.

- Above the job list, click More and select Run to run the selected quality jobs.

Figure 13 Running jobs

- In enterprise mode, select the development environment or production environment.

- Click OK.

Scheduling Quality Jobs

You can schedule a maximum of 200 quality jobs at a time.

- In the left navigation pane on the DataArts Quality page, choose Quality Monitoring > Quality Jobs. In the right pane, select the quality jobs you want to schedule.

- Above the job list, click More and select Start Schedule to schedule the selected quality jobs.

Figure 14 Scheduling jobs

Stopping Scheduling Quality Jobs

You can stop scheduling a maximum of 200 quality jobs at a time.

- In the left navigation pane on the DataArts Quality page, choose Quality Monitoring > Quality Jobs. In the right pane, select the quality jobs you want to stop scheduling.

- Above the job list, click More and select Stop Scheduling to stop scheduling the selected quality jobs.

Figure 15 Stopping scheduling jobs

Stopping Quality Jobs

You can stop a maximum of 200 quality jobs at a time.

Only quality jobs in Running state can be stopped.

- In the left navigation pane on the DataArts Quality page, choose Quality Monitoring > O&M. In the right pane, select the quality jobs you want to stop.

- Click Stop. In the displayed Stop Instance dialog box, confirm the instances to stop and click Yes.

Figure 16 Stopping instances

Figure 17 Stopping instances

Figure 17 Stopping instances

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.See the reply and handling status in My Cloud VOC.

For any further questions, feel free to contact us through the chatbot.

Chatbot