Big Data Reference Architecture

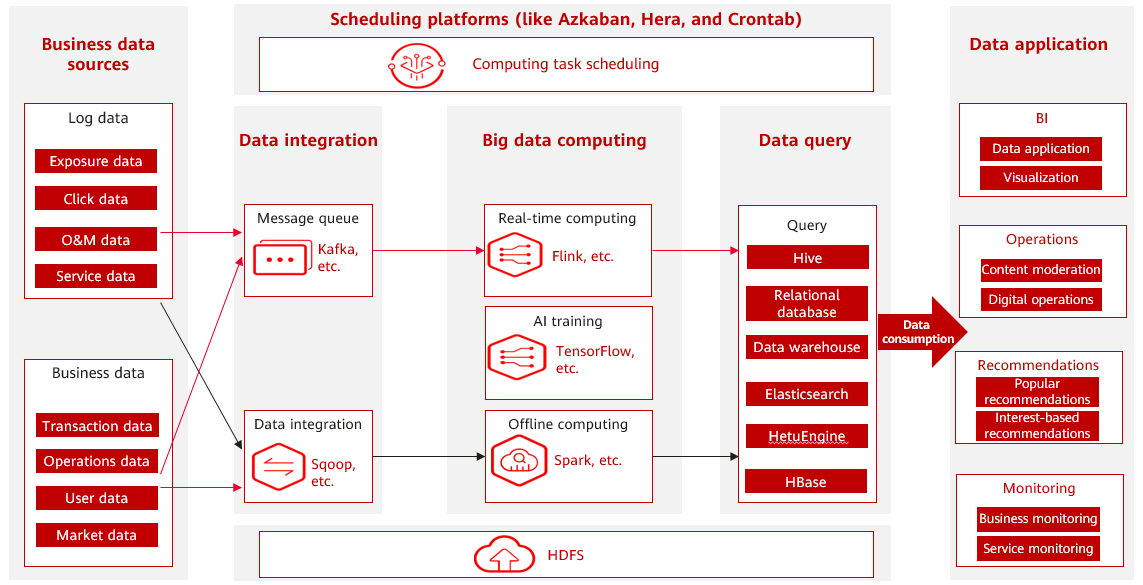

The preceding figure shows a typical big data architecture. Data integration, storage, computing, scheduling, query, and application together form a complete data flow.

A big data architecture usually includes the following core components and processes. Enterprises can use cloud services or build their own big data components as needed.

- Business data sources

Big data platforms collect information from various business data sources, such as sensors, website logs, mobile apps, and social media. Through data collection and extraction, raw data is gathered from these sources and transferred to the platform for subsequent processing and analysis.
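Extraction usually means turning raw source output, such as website log lines, into structured records before they are shipped to the platform. The sketch below assumes a hypothetical, simplified log format (`<ip> <timestamp> <method> <path> <status>`); real collectors handle many formats and transport the records to the platform, which is out of scope here.

```python
import re
from typing import Iterable, Iterator

# Hypothetical access-log format: "<ip> <timestamp> <method> <path> <status>"
LOG_PATTERN = re.compile(
    r"^(?P<ip>\S+) (?P<ts>\S+) (?P<method>[A-Z]+) (?P<path>\S+) (?P<status>\d{3})$"
)


def extract_records(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one dict per well-formed log line; silently skip lines that do not match."""
    for line in lines:
        match = LOG_PATTERN.match(line.strip())
        if match is None:
            continue  # malformed input is dropped here instead of being forwarded
        record = match.groupdict()
        record["status"] = int(record["status"])  # convert the status code to an integer
        yield record


# Illustrative input lines (made up for this example).
raw_lines = [
    "10.0.0.1 2024-05-01T10:00:00Z GET /index.html 200",
    "garbage line",
    "10.0.0.2 2024-05-01T10:00:05Z POST /api/order 500",
]
records = list(extract_records(raw_lines))
```

Using a generator keeps memory flat when the input is a large file or an unbounded stream of lines.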

- Data integration

Data integration is the process of combining and converting data from different sources. It includes operations such as data cleansing, preprocessing, format conversion, and merging, which keep the data consistent and accurate.
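The operations named above can be illustrated on two small sources that describe the same customers in different formats. This is a minimal sketch, assuming a hypothetical CSV export from a CRM system and a hypothetical JSON order feed; the field names (`customer_id`, `cust`, `amount`) are invented for the example.

```python
import csv
import io
import json

# Two hypothetical sources describing the same customers in different formats.
CRM_CSV = """customer_id,email
1, Alice@Example.com
2,bob@example.com
,missing-id@example.com
"""
ORDERS_JSON = '[{"cust": "1", "amount": "19.90"}, {"cust": "1", "amount": "5.10"}, {"cust": "2", "amount": "7"}]'


def load_customers(text):
    """Cleansing: drop rows without a key and normalize e-mail addresses."""
    customers = {}
    for row in csv.DictReader(io.StringIO(text)):
        cid = row["customer_id"].strip()
        if not cid:
            continue  # a record without a key cannot be merged reliably
        customers[cid] = {"customer_id": cid, "email": row["email"].strip().lower()}
    return customers


def load_order_totals(text):
    """Format conversion: parse JSON and turn string amounts into floats, summed per customer."""
    totals = {}
    for order in json.loads(text):
        cid = str(order["cust"]).strip()
        totals[cid] = totals.get(cid, 0.0) + float(order["amount"])
    return totals


def integrate(customers, totals):
    """Merging: join both sources on the customer ID."""
    return [
        {**customer, "total_spent": round(totals.get(cid, 0.0), 2)}
        for cid, customer in sorted(customers.items())
    ]


merged = integrate(load_customers(CRM_CSV), load_order_totals(ORDERS_JSON))
```

On a real platform the same steps would typically run as distributed jobs, but the logic of cleansing, converting, and joining on a shared key is the same.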

- Data storage

The big data platform needs efficient storage to hold massive amounts of data. Common solutions include distributed file systems such as HDFS and wide-column (column-family) databases such as HBase. These systems provide high reliability, scalability, and fault tolerance for large-scale data storage and access.
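A distributed file system such as HDFS achieves scalability and fault tolerance by splitting each file into fixed-size blocks and storing several replicas of every block on different nodes. The sketch below uses the HDFS defaults of a 128 MB block size and a replication factor of 3, but the placement is a deliberately simplified round-robin scheme; real HDFS uses a rack-aware placement policy. The node names are hypothetical.

```python
import math

BLOCK_SIZE = 128 * 1024 * 1024  # HDFS default block size (128 MB)
REPLICATION = 3                 # HDFS default replication factor


def plan_blocks(file_size, nodes, block_size=BLOCK_SIZE, replication=REPLICATION):
    """Split a file into blocks and assign each block to `replication` distinct nodes.

    Simplified round-robin placement for illustration only.
    """
    if replication > len(nodes):
        raise ValueError("replication factor exceeds the number of nodes")
    num_blocks = math.ceil(file_size / block_size)  # an empty file has no blocks
    plan = []
    for b in range(num_blocks):
        length = min(block_size, file_size - b * block_size)  # the last block may be shorter
        replicas = [nodes[(b + r) % len(nodes)] for r in range(replication)]
        plan.append({"block": b, "bytes": length, "replicas": replicas})
    return plan


# A 300 MB file on four hypothetical data nodes.
plan = plan_blocks(300 * 1024 * 1024, ["dn1", "dn2", "dn3", "dn4"])
```

Because every block lives on three different nodes, losing any single node still leaves at least two copies of each block readable.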

- Big data computing

Big data computing processes massive amounts of data in a distributed, parallel manner, either in batches or in real time. Hadoop (MapReduce), Spark, and Flink are key computing frameworks that distribute computation across a cluster and schedule the resulting tasks: Hadoop MapReduce is batch-oriented, Flink is designed primarily for stream processing, and Spark supports both. These frameworks can be used for complex computing and analysis tasks such as data processing, feature extraction, machine learning, and data mining.
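The core idea behind these frameworks, splitting work into independent pieces and combining the partial results, can be illustrated with the map, shuffle, and reduce phases of the classic word-count job. This is a single-machine sketch: threads stand in for cluster workers, and none of the function names belong to Hadoop, Spark, or Flink.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def map_phase(partition):
    """Map: emit (word, 1) for every word in one input partition."""
    return [(word.lower(), 1) for line in partition for word in line.split()]


def shuffle_phase(mapped_outputs):
    """Shuffle: group values by key across all map outputs."""
    groups = defaultdict(list)
    for pairs in mapped_outputs:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce: sum the values collected for each key."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(partitions, workers=4):
    # Partitions are mapped in parallel threads here; a real cluster runs them on separate nodes.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        mapped = list(pool.map(map_phase, partitions))
    return reduce_phase(shuffle_phase(mapped))


partitions = [
    ["big data needs big storage"],
    ["Spark and Flink process data"],
]
counts = word_count(partitions)
```

Each map call only sees its own partition, which is what lets a framework scale the map phase out to many machines; the shuffle is where data crosses the network in a real cluster.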

- Data query and analysis

Querying and analyzing the large volumes of data stored on the platform requires flexible, high-performance tools. Options include SQL query engines such as Apache Hive and distributed search and analytics engines such as Elasticsearch. These tools let you query, aggregate, and explore massive datasets to gain insights and make informed decisions.
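A typical analytical query aggregates detailed records into a summary. The sketch below uses Python's built-in `sqlite3` module as a local stand-in for a distributed SQL engine such as Hive; the `page_views` table and its columns are hypothetical. A basic `GROUP BY` query like this one is written much the same way in HiveQL, although Hive executes it as distributed jobs over files in the cluster.

```python
import sqlite3

# sqlite3 stands in for a distributed SQL engine; table and columns are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE page_views (user_id TEXT, page TEXT, duration_ms INTEGER)")
conn.executemany(
    "INSERT INTO page_views VALUES (?, ?, ?)",
    [
        ("u1", "/home", 1200),
        ("u2", "/home", 800),
        ("u1", "/cart", 3000),
        ("u3", "/home", 1000),
    ],
)

# Aggregate: number of views and average dwell time per page, busiest pages first.
rows = conn.execute(
    """
    SELECT page, COUNT(*) AS views, AVG(duration_ms) AS avg_ms
    FROM page_views
    GROUP BY page
    ORDER BY views DESC, page
    """
).fetchall()
conn.close()
```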

- Task scheduling

Big data platforms often run complex data workflows. Task scheduling systems such as Azkaban help manage and schedule data processing tasks. They let you define task dependencies, scheduling frequency, and retry policies so that tasks run reliably and on time.
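Two of the features a scheduler provides, dependency ordering and retries, can be sketched with Python's standard `graphlib.TopologicalSorter` (Python 3.9 or later). This is not Azkaban's API: the `run_workflow` function, the task names, and the simulated transient failure are hypothetical, and time-based triggering (scheduling frequency) is left out.

```python
from graphlib import TopologicalSorter


def run_workflow(tasks, dependencies, max_retries=2):
    """Run tasks in dependency order, retrying each failed task up to `max_retries` times.

    tasks: task name -> zero-argument callable
    dependencies: task name -> set of task names that must finish first
    """
    graph = {name: dependencies.get(name, set()) for name in tasks}
    log = []
    for name in TopologicalSorter(graph).static_order():  # raises CycleError on cyclic dependencies
        for attempt in range(1, max_retries + 2):
            try:
                tasks[name]()
                log.append((name, attempt, "ok"))
                break
            except Exception:
                log.append((name, attempt, "failed"))
        else:
            # Every attempt failed: stop so that downstream tasks never run on missing input.
            raise RuntimeError(f"task {name!r} failed after {max_retries + 1} attempts")
    return log


attempts = {"clean": 0}


def flaky_clean():
    """Hypothetical task that fails once with a simulated transient error."""
    attempts["clean"] += 1
    if attempts["clean"] == 1:
        raise OSError("simulated transient failure")


tasks = {"extract": lambda: None, "clean": flaky_clean, "load": lambda: None}
deps = {"clean": {"extract"}, "load": {"clean"}}
log = run_workflow(tasks, deps)
```

Here `clean` fails on its first attempt and succeeds on the retry, and `load` runs only after `clean` has finished.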

- Data application

Big data platforms aim to deliver useful data applications to the business. Examples include real-time reports, visual dashboards, intelligent recommendation systems, and fraud detection systems built on big data analysis. Combining analysis results with business processes enables data-driven decision-making and innovation.
