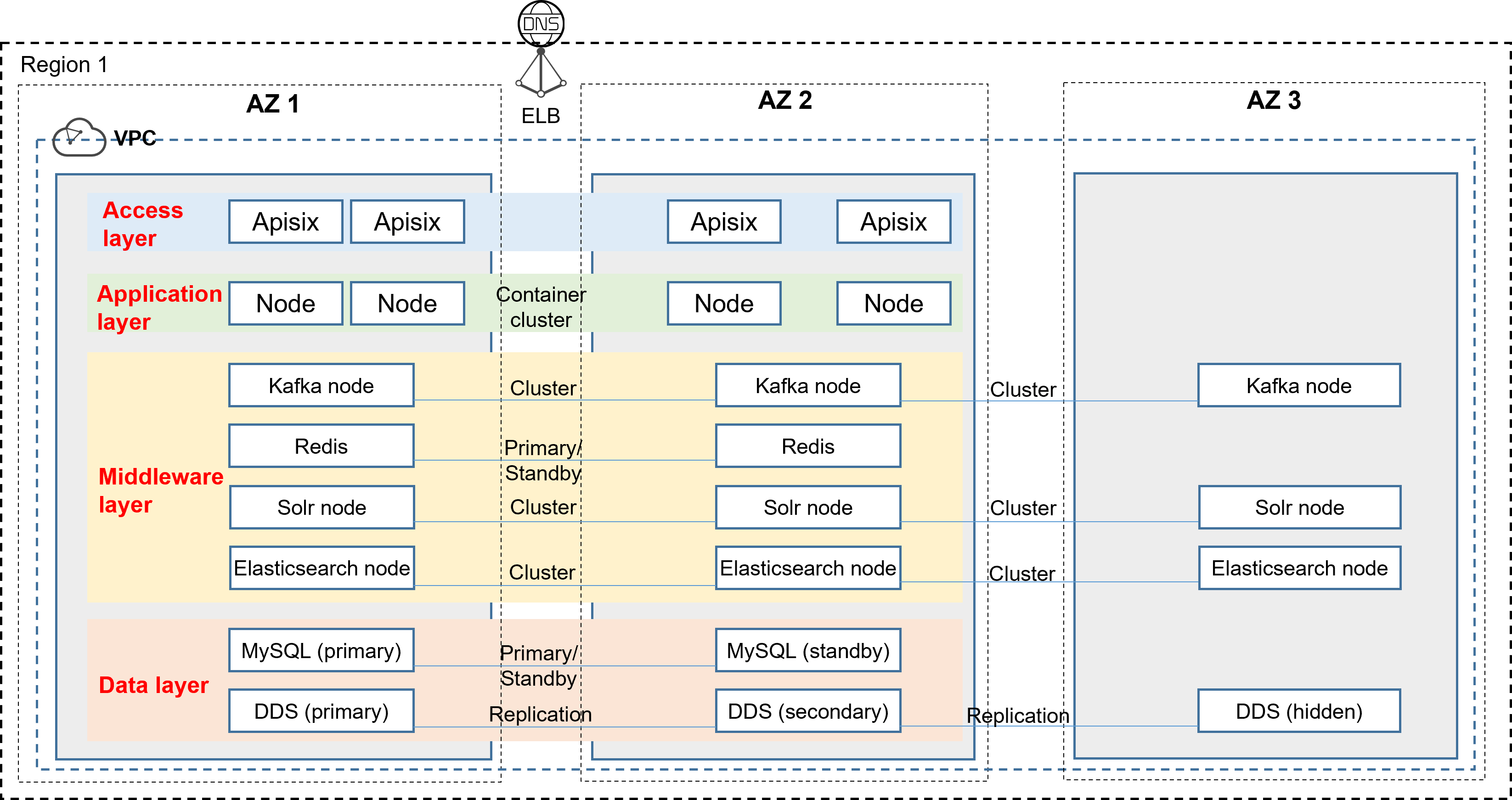

Cross-AZ HA Design Example

Cross-AZ high availability (HA) is one of the most important benefits of migrating IDCs to the cloud. It is cost-effective and convenient to set up, which makes it a common choice for enterprises after cloud migration. The following uses a large-scale retail e-commerce platform as an example to describe how to design cross-AZ HA after cloud migration.

- Access layer: APISIX instances are evenly distributed across two AZs. If one AZ fails, ELB can still forward traffic to the APISIX instances in the healthy AZ.

- Application layer: Applications are deployed in containers, and service nodes are distributed across AZs. Even if one AZ is abnormal, APISIX can forward traffic to the servers that are still healthy.

- Middleware layer: Kafka, Solr, and Elasticsearch are deployed in a three-AZ cluster. If any AZ is faulty, services are still available. Redis is deployed in primary/standby mode across two AZs.

- Data layer: MySQL databases are deployed in primary/standby mode across two AZs to implement HA. MongoDB uses replica sets or clusters across three AZs. If an AZ is faulty, other AZs provide services properly.

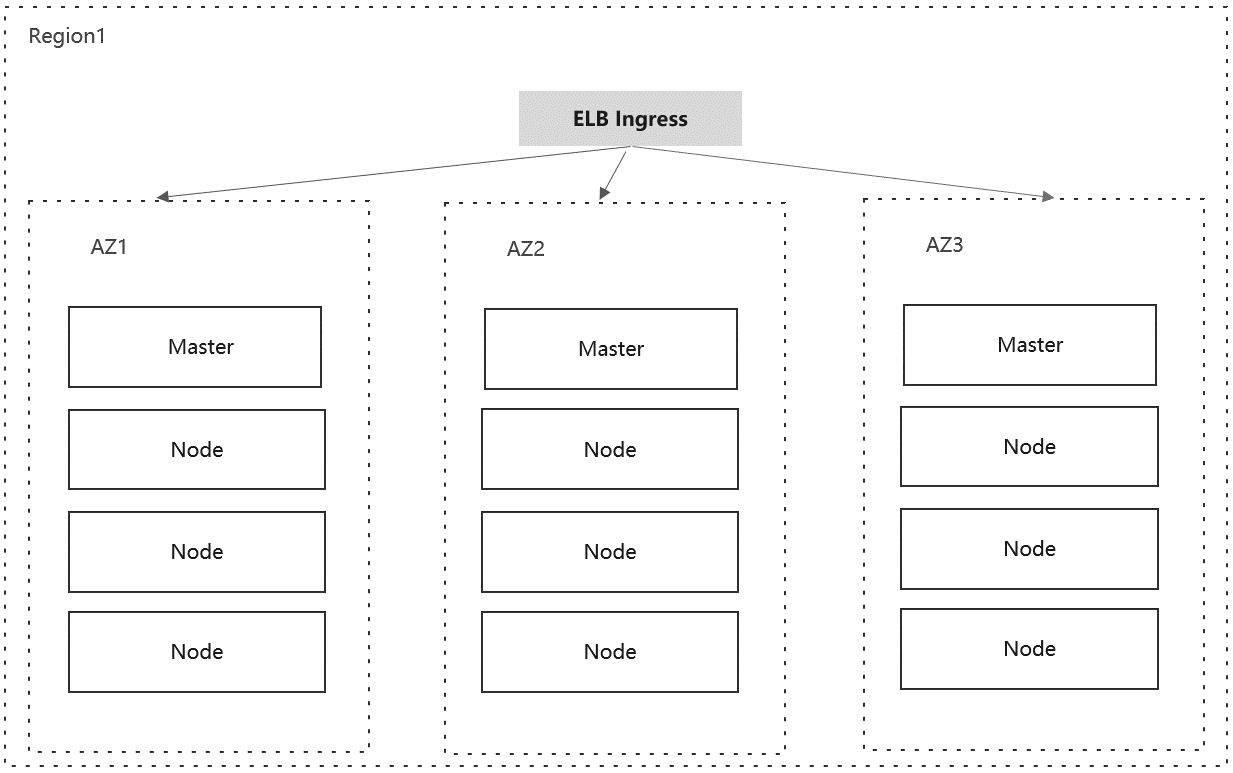

- Application layer - container cluster HA

- Master HA: Master nodes in the container cluster are evenly distributed in three AZs.

- Ingress gateway HA: If the multi-AZ function is enabled for load balancers, ELB ingresses support cross-AZ HA.

- Application HA: Kubernetes supports application HA. You can configure topologyKey to distribute pods across AZs.
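As a sketch of the application HA item above, the following Python snippet builds a Deployment manifest whose pods are spread across AZs. It assumes `topologySpreadConstraints` with the well-known node label `topology.kubernetes.io/zone` as the `topologyKey`. Pod anti-affinity is another Kubernetes mechanism that takes a `topologyKey`, and the design described here may use either. The name `web`, the image, and the replica count are hypothetical.

```python
import json

# Well-known Kubernetes node label that carries the AZ of a node.
ZONE_TOPOLOGY_KEY = "topology.kubernetes.io/zone"


def zone_spread_constraint(app_label, max_skew=1, strict=False):
    """Build one topologySpreadConstraints entry that spreads pods across AZs."""
    return {
        "maxSkew": max_skew,
        "topologyKey": ZONE_TOPOLOGY_KEY,
        # ScheduleAnyway is a soft preference; DoNotSchedule is a hard rule.
        "whenUnsatisfiable": "DoNotSchedule" if strict else "ScheduleAnyway",
        "labelSelector": {"matchLabels": {"app": app_label}},
    }


def deployment_manifest(name, image, replicas):
    """Build a Deployment manifest (hypothetical workload) with AZ spreading."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "topologySpreadConstraints": [zone_spread_constraint(name)],
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical application name and image, for illustration only.
    print(json.dumps(deployment_manifest("web", "nginx:stable", 6), indent=2))
```

The soft `ScheduleAnyway` setting is used by default in this sketch so that pods can still be rescheduled into the remaining AZs when one AZ is down. Whether a strict or soft rule fits better depends on the workload.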

Figure 2 Application HA design example

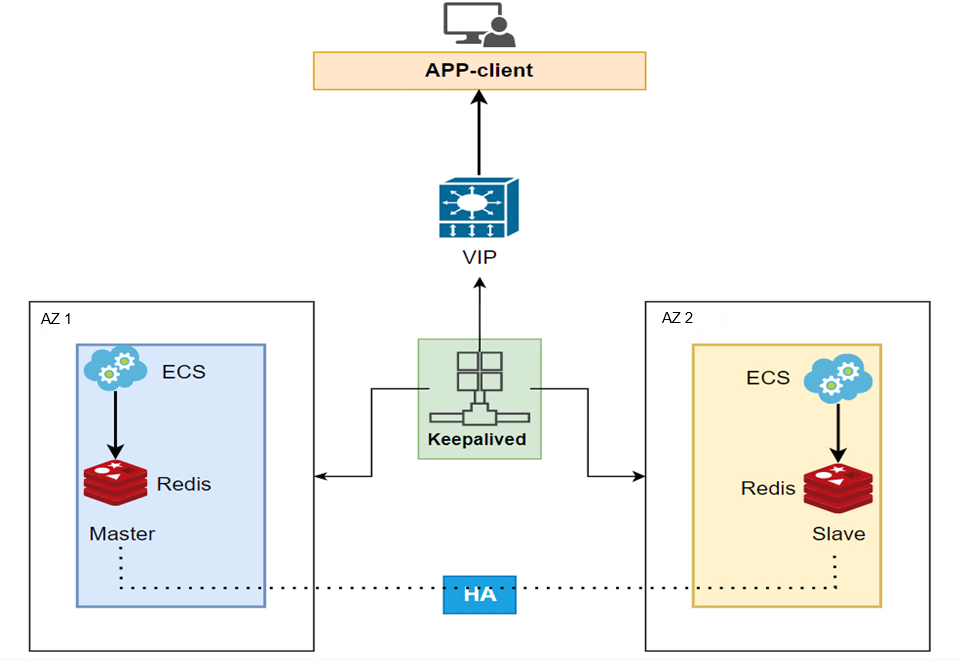

- Middleware layer - Redis HA

- Data is persisted on the primary node and synchronized to the standby node in real time. The standby node also persists a copy of the data.

- The primary and standby instances are deployed in different AZs with isolated power supplies and networks. If the equipment room hosting the primary node fails because of a power or network fault, the standby node takes over services, and clients can connect to it to read and write data.

- Redis clusters work with Keepalived to provide virtual IP addresses (VIPs), improving service availability.

Figure 3 Example of Redis HA design at the middleware layer

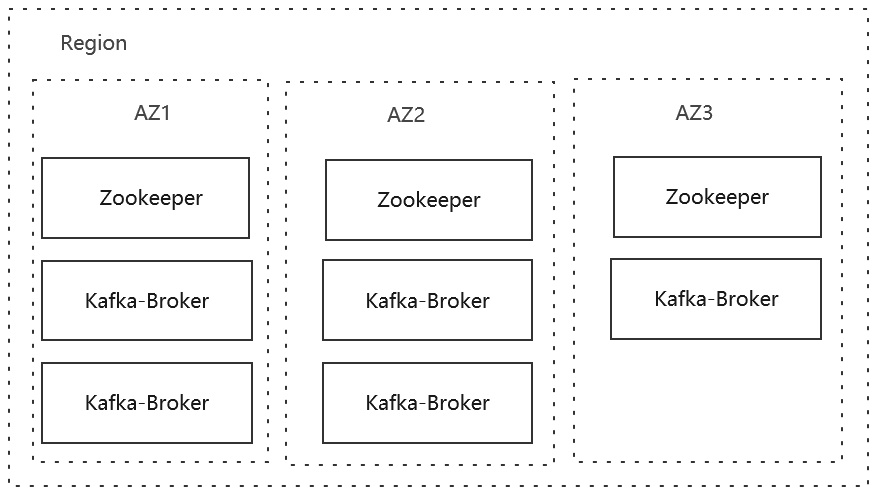

- Middleware layer - Kafka HA

- ZooKeeper HA: ZooKeeper nodes are deployed in three AZs, either with one node in each AZ or with five nodes placed as two in AZ 1, two in AZ 2, and one in AZ 3. If one AZ is unavailable, more than half of the nodes remain, so the cluster still has a quorum for election.

- Kafka-Broker data node HA: Kafka-Broker nodes are deployed in three AZs: two nodes in AZ 1, two in AZ 2, and one in AZ 3. At least three replicas are configured for each topic, and unclean.leader.election.enable is set to true, which allows an out-of-sync replica to become leader and so favors availability over durability. With the replicas spread across AZs, at least one replica remains available in the cluster if any AZ is down.
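The quorum reasoning behind the ZooKeeper layouts above can be checked with a few lines of arithmetic. The following is a minimal sketch, not part of any ZooKeeper API: the function name and the AZ labels are hypothetical, and it only tests whether a strict majority of nodes survives the loss of any single AZ.

```python
from typing import Dict


def has_quorum_after_az_loss(nodes_per_az: Dict[str, int]) -> bool:
    """Return True if a majority of nodes remains after losing any one AZ."""
    total = sum(nodes_per_az.values())
    majority = total // 2 + 1  # strict majority needed for leader election
    return all(total - lost >= majority for lost in nodes_per_az.values())


if __name__ == "__main__":
    layouts = {
        "one node per AZ": {"az1": 1, "az2": 1, "az3": 1},
        "2 + 2 + 1": {"az1": 2, "az2": 2, "az3": 1},
        "3 + 2 in two AZs": {"az1": 3, "az2": 2},
    }
    for name, layout in layouts.items():
        print(name, has_quorum_after_az_loss(layout))
```

Both three-AZ layouts pass the check, while a two-AZ layout such as 3 + 2 does not, because losing the larger AZ leaves fewer than a majority. This is why the quorum nodes are placed in three AZs rather than two.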

Figure 4 Example of Kafka HA design at the middleware layer

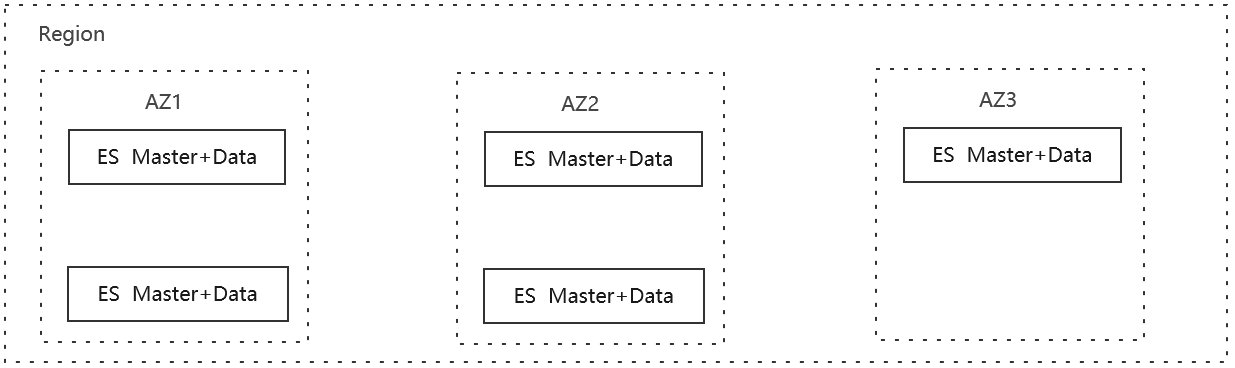

- Middleware layer - Elasticsearch HA

- Master HA: Elasticsearch master nodes are deployed in three AZs: two nodes in AZ 1, two in AZ 2, and one in AZ 3. If one AZ is unavailable, more than half of the master nodes remain, so the cluster still has a quorum for election.

- Data node: Elasticsearch data nodes are deployed in three AZs: two nodes in AZ 1, two in AZ 2, and one in AZ 3. At least two replicas are configured for each index shard, so each shard has three copies including the primary. If any AZ breaks down, the cluster still has a complete copy of the data to ensure HA.
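For illustration, the replica setting above could be expressed as Elasticsearch request bodies built in Python. This is a sketch under assumptions: it assumes the data nodes are tagged with a custom node attribute named `zone` (for example through `node.attr.zone`), which the original does not state, and the shard count is hypothetical. The setting names `number_of_replicas` and `cluster.routing.allocation.awareness.attributes` are standard Elasticsearch settings.

```python
import json


def index_settings(shards, replicas):
    """Body for creating an index with the given primary and replica counts."""
    return {
        "settings": {
            "index": {
                "number_of_shards": shards,
                "number_of_replicas": replicas,
            }
        }
    }


def awareness_settings(attribute="zone"):
    """Body for PUT /_cluster/settings that enables shard allocation awareness.

    Assumes each node declares the attribute, e.g. node.attr.zone: az1.
    """
    return {
        "persistent": {
            "cluster.routing.allocation.awareness.attributes": attribute
        }
    }


def copies_per_shard(replicas):
    """Total copies of each shard: the primary plus its replicas."""
    return 1 + replicas


if __name__ == "__main__":
    # Hypothetical index with 3 primary shards and 2 replicas per shard.
    print(json.dumps(index_settings(3, 2), indent=2))
    print(json.dumps(awareness_settings(), indent=2))
```

With two replicas, each shard has three copies, and allocation awareness asks Elasticsearch to place those copies in different zones where possible, so that one AZ failure still leaves a complete copy.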

Figure 5 Example of Elasticsearch HA design at the middleware layer

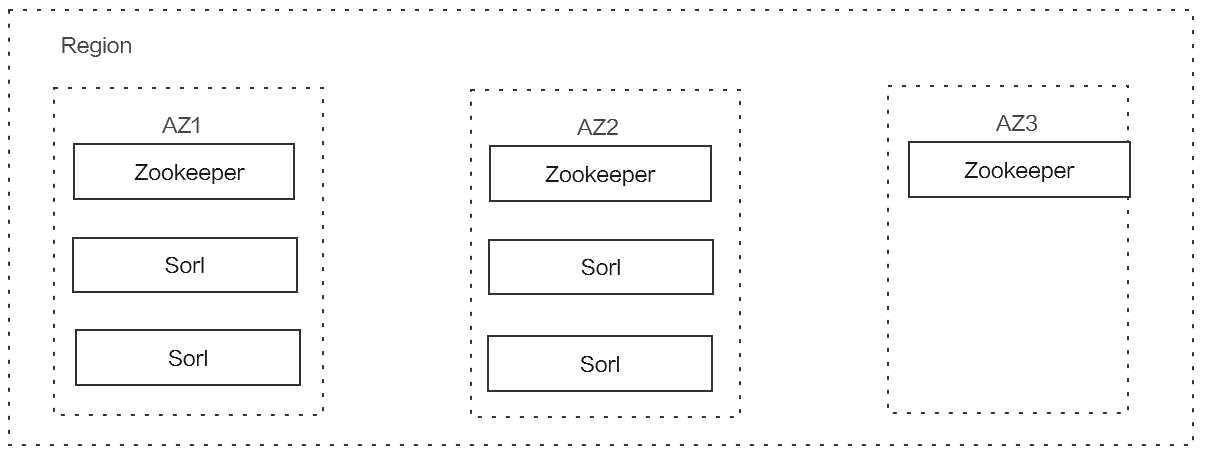

- Middleware layer - Solr HA

- ZooKeeper HA: ZooKeeper nodes are deployed in three AZs, either with one node in each AZ or with five nodes placed as two in AZ 1, two in AZ 2, and one in AZ 3. If one AZ is unavailable, more than half of the nodes remain, so the cluster still has a quorum for election.

- Solr data nodes: Solr data nodes are evenly distributed in two AZs. At least (N/2) + 1 replicas are set for index shards. If an AZ is down, the cluster still has a complete replica to ensure HA.

Figure 6 Example of Solr HA design at the middleware layer

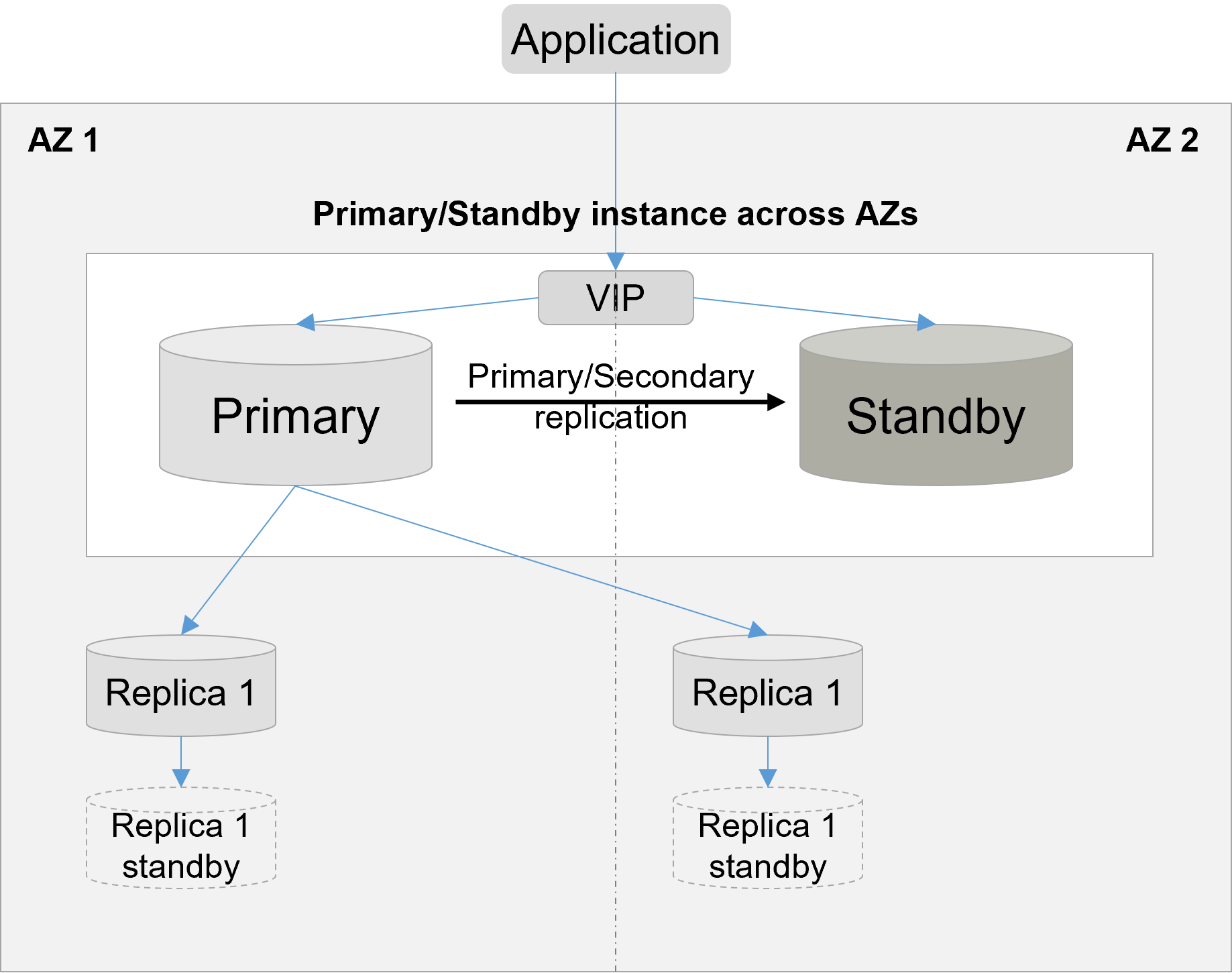

- Data layer - MySQL HA

- The primary and standby instances are deployed across AZs. Data is synchronized between them using the built-in replication features of MySQL.

- The primary/standby instances provide services through a VIP, and their actual IP addresses are inaccessible to tenants.

- A primary/standby switchover completes within seconds. During the switchover, the VIP moves to the new primary node, so applications are interrupted for only a few seconds.

- Read replicas can be attached to the instances and deployed across AZs.
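Because a switchover interrupts connections to the VIP for a few seconds, applications usually need to retry rather than fail immediately. The following is a minimal, driver-agnostic sketch of such a retry with exponential backoff. The helper `call_with_retry` and its parameters are hypothetical, and real database drivers raise their own exception types, which would be passed in through `retry_on`.

```python
import time


def call_with_retry(operation, attempts=5, base_delay=0.5,
                    retry_on=(ConnectionError,), sleep=time.sleep):
    """Call operation(), retrying on connection errors with exponential backoff.

    The delay doubles after each failed attempt: base_delay, 2x, 4x, ...
    The last failure is re-raised once all attempts are used up.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return operation()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the original error
            sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")


if __name__ == "__main__":
    state = {"calls": 0}

    def query_via_vip():
        # Stand-in for a real query sent to the VIP; it fails twice to
        # imitate the short interruption during a switchover.
        state["calls"] += 1
        if state["calls"] < 3:
            raise ConnectionError("VIP is switching to the new primary")
        return "ok"

    print(call_with_retry(query_via_vip, base_delay=0.1))
```

The total wait should be sized to cover the expected switchover time. Writes that are not idempotent need extra care before being retried.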

Figure 7 Example of MySQL HA design

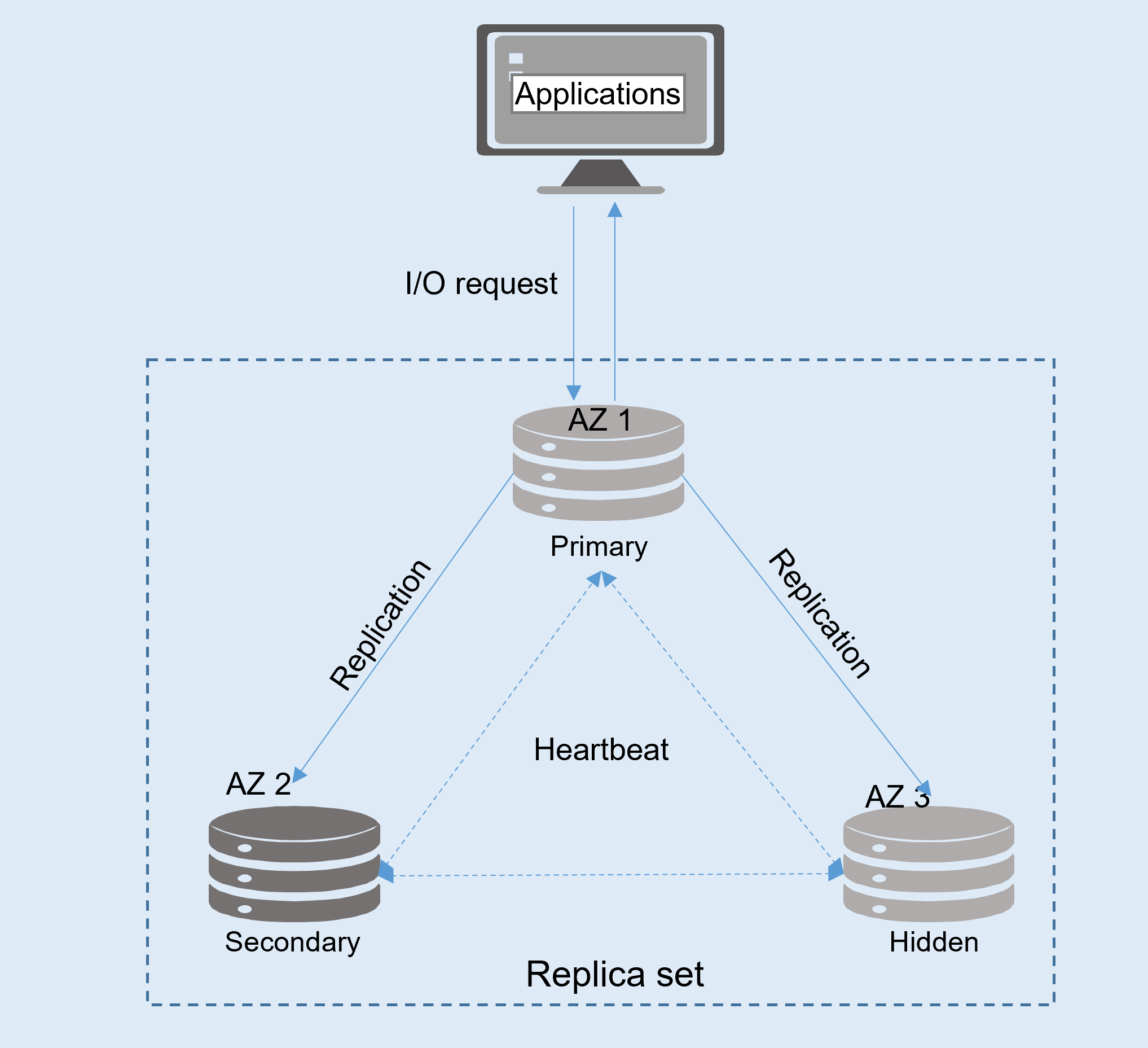

- Data layer - MongoDB HA

- DDS replica sets can be deployed across three AZs. A replica set has three nodes by default and supports a maximum of seven. Data is synchronized between the nodes using the built-in replication features of MongoDB.

- The Mongo client supports multiple server addresses and availability detection.

- If the AZ where the primary node is located is faulty, a new primary node is elected. If the secondary node is unavailable, the hidden node takes over services to ensure HA. Currently, three, five, or seven replicas can be configured.
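The multiple server addresses mentioned above are normally passed to the client as one connection string that lists every replica set member. The sketch below builds such a string in the standard MongoDB URI format. The host addresses, the replica set name `rs0`, and the database name are hypothetical placeholders, and a real deployment would also carry credentials.

```python
from urllib.parse import urlencode


def replica_set_uri(hosts, replica_set, database="", **options):
    """Build a MongoDB connection string that lists all replica set members.

    hosts is a list of (host, port) pairs, ideally one per AZ, so the client
    can still discover the replica set when one address is unreachable.
    """
    host_list = ",".join(f"{host}:{port}" for host, port in hosts)
    query = urlencode({"replicaSet": replica_set, **options})
    return f"mongodb://{host_list}/{database}?{query}"


if __name__ == "__main__":
    # Hypothetical node addresses, one in each of three AZs.
    members = [("10.0.1.10", 27017), ("10.0.2.10", 27017), ("10.0.3.10", 27017)]
    print(replica_set_uri(members, "rs0", database="shop",
                          readPreference="secondaryPreferred"))
```

A driver given this string monitors the listed members and routes writes to whichever node is currently primary, which is what lets the application follow an election after an AZ failure.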

Figure 8 Example of MongoDB HA design
