How Do I Reference a Python Script in a Spark Python Job?

Prerequisites

You have created an MRS Spark connection in Management Center, and its connection type is MRS API.

Procedure

- In the navigation pane of the DataArts Factory homepage, choose Data Development > Develop Script.

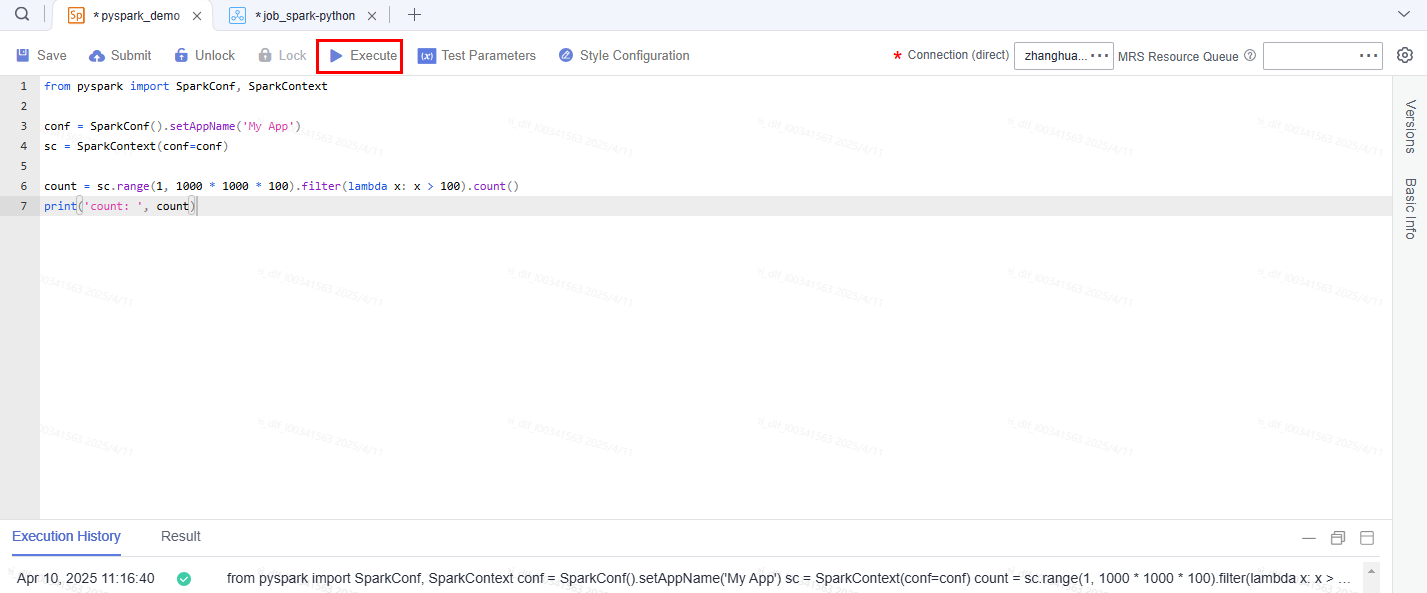

- Create a Spark Python script named pyspark_demo. Select the MRS Spark connection that has been created in Management Center.

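```python
from pyspark import SparkConf, SparkContext

# Configure and start the Spark application.
conf = SparkConf().setAppName('My App')
sc = SparkContext(conf=conf)

# Count the integers in [1, 100000000) that are greater than 100.
count = sc.range(1, 1000 * 1000 * 100).filter(lambda x: x > 100).count()
print('count: ', count)
```

- Save and submit the version.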

- Click Execute to run the script.

Figure 1 Executing the script

- View the script execution result.
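To judge whether the execution result is plausible, it can help to know what count the pyspark_demo script should report. The following is a minimal sketch in plain Python (no Spark required) that derives the expected value arithmetically. The helper name `expected_count` is hypothetical and not part of DataArts Factory or PySpark; the only assumption carried over from Spark is that `sc.range(start, end)` excludes the end value.

```python
def expected_count(start: int, end: int, threshold: int) -> int:
    """Return how many integers x in [start, end) satisfy x > threshold.

    Hypothetical helper for illustration only; it mirrors the
    range(...).filter(lambda x: x > threshold).count() chain in pyspark_demo.
    """
    first_kept = max(start, threshold + 1)  # smallest value that passes the filter
    return max(0, end - first_kept)         # end is exclusive, as in sc.range


# Same bounds as the pyspark_demo script: range(1, 1000 * 1000 * 100), filter x > 100.
demo_count = expected_count(1, 1000 * 1000 * 100, 100)
print('expected count:', demo_count)

# Cross-check the formula against a brute-force count on a small range.
assert expected_count(1, 1000, 100) == sum(1 for x in range(1, 1000) if x > 100)
```

If the script runs unmodified, the count in the execution log should match the value printed here (99999899).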

To reference the created script in a job, perform the following steps:

- Create a batch processing pipeline job.

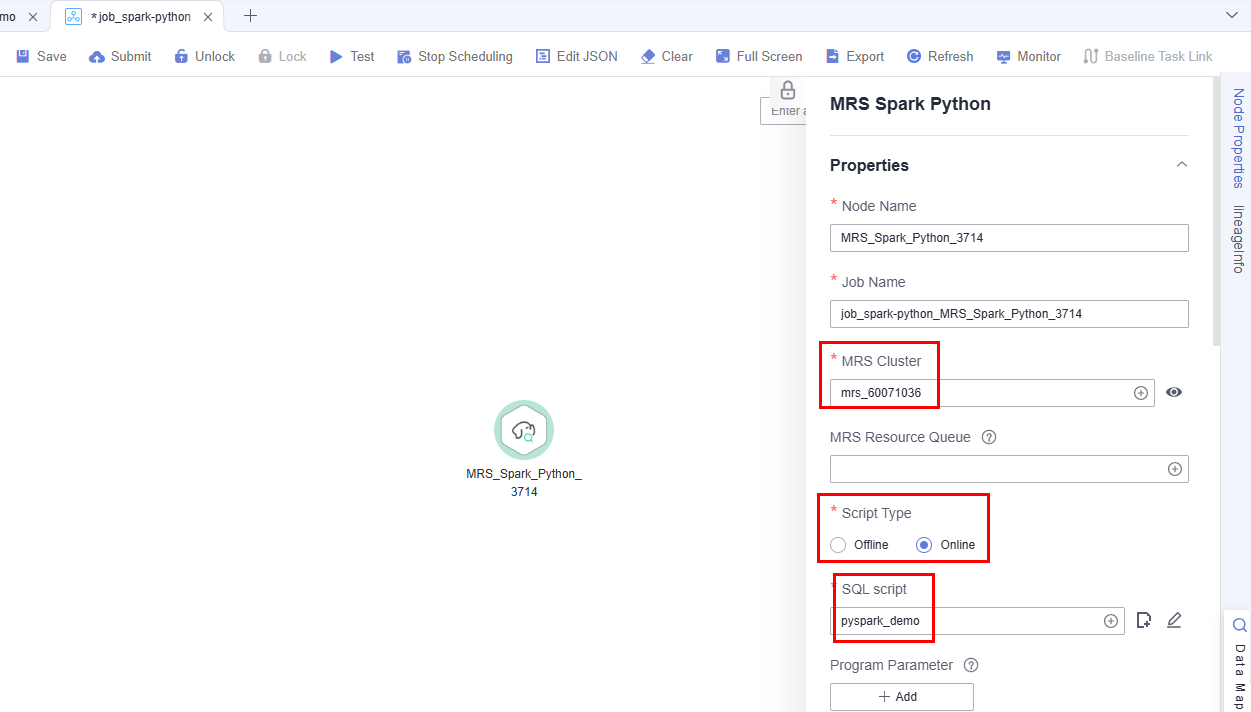

- Open the job, drag an MRS Spark Python node to the canvas, and configure parameters for the node.

MRS Cluster: Select the MRS cluster that you selected when creating the connection in Management Center.

Script Type: Select Online, and then select the pyspark_demo script you created.

Retain the default values for other parameters.

Figure 2 Configuring node parameters

- Select Run once or Run periodically for Scheduling Type.

- Save and submit the version.

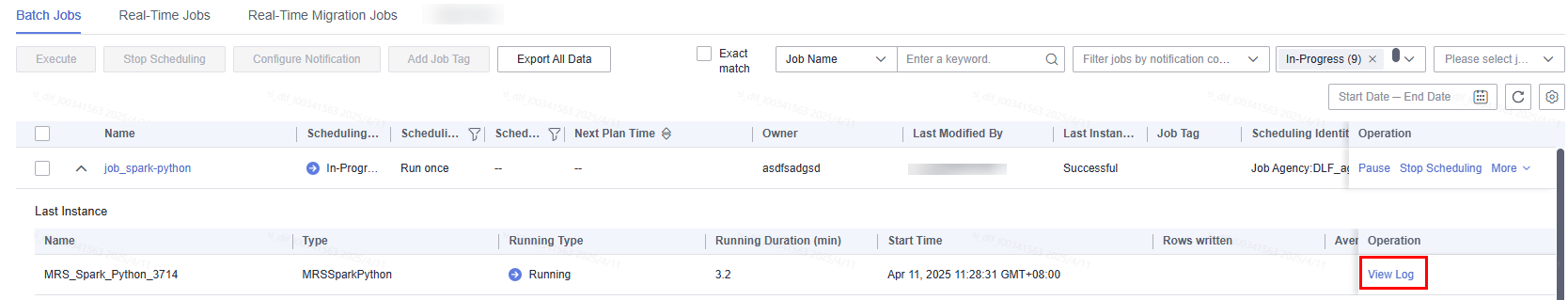

- Click Execute to run the job.

Figure 3 Viewing the execution result

- On the Job Monitoring page, view the job scheduling status and log.
