Format Requirements for Video Datasets

ModelArts Studio supports creating video datasets. When you create a video dataset, you can import data in several formats. Table 1 lists the format requirements.

|

File Content |

File Format |

Requirement |

|---|---|---|

|

Video |

MP4 or AVI |

|

|

Video + Annotation |

Video + JSON |

The following is an example.

For details about the annotation file in JSONL format, refer to the following: {"video_fn": "13/ad098173-af09-48fe-95c3-e72fd629688e.mp4" Relative path of the video,

"prompt": "A person pours a clear liquid from a bottle into a shot glass, then lifts the glass to their mouth and drinks the shot. The background includes a red coat and other indistinct background elements." Video synopsis generation (simplified),

"long_prompt": "A person is seen pouring a clear liquid from a green glass bottle into a small glass. The individual is wearing a white shirt with a lace collar and a beige cardigan. The background appears to be a cozy indoor setting, possibly a cafe or a restaurant, with red and white elements visible, such as a red coat hanging on the wall and a white table. The person carefully pours the liquid, ensuring it is filled to the brim of the glass. The liquid is clear and has some green leaves floating in it. The person then holds the glass up, possibly to show the contents or to prepare for a drink.", Video synopsis generation (detailed)

} |

|

Event detection |

Video + JSON |

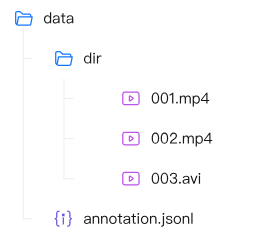

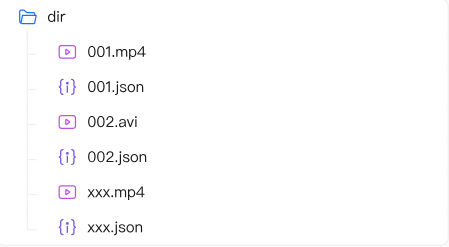

Data source samples must be in AVI or MP4 format, and annotation files must be in JSON format. The data source must contain two or more files in AVI or MP4 format. One video file can correspond to one or more annotation files. The duration of each video must be greater than 128s, the FPS must be greater than or equal to 10, and the test set and training set must contain videos. Supported video formats include MP4 and AVI. The duration of each video must be greater than 128s, and the FPS must be greater than or equal to 10. The annotation.json file is the annotation file. Import from OBS: The size of a single file cannot exceed 50 GB, and the number of files is not limited. The following is an example. Video + JSON (many-to-one)

Video + JSON (one-on-one)

For details about the annotation file in JSON format, refer to the following: {

'version': 'dataset_name_v.x.x',// Dataset version information.

'classes': [category1',category2', ...],// List of all category names. Each category corresponds to a label, which is used to mark events or actions in the video.

'database': {

'video_name':{

// Training set: train; test set: test.

'subset': 'train',

'duration': 1660.3, // Total video duration, in seconds.

'fps': 30.0,// Video frame rate.

'width': 720,// Video width, in pixels.

'height': 1280,// Video height, in pixels.

'ext': 'mp4',//Video file name extension.

// 34.5 and 42.4 indicate the start time and end time, respectively. The unit is second.

// label indicates the category. It must be an element in the classes list, indicating the event or action type corresponding to the video clip.

'annotations': [

{'label': 'category1', 'segment': [34.5, 42.4]},

{'label': 'category1', 'segment': [124.4, 142.9]},

...

]

},

'video_name':{

'subset': xxx,// Video file name, excluding the file name extension.

'duration': xxx,

'fps': xxx,

'width': xxx,

'height': xxx,

'ext': xxx,

'annotations': [

{'label': xxx, 'segment': xxx},

{'label': xxx, 'segment': xxx},

...

]

},

...

}

} |

|

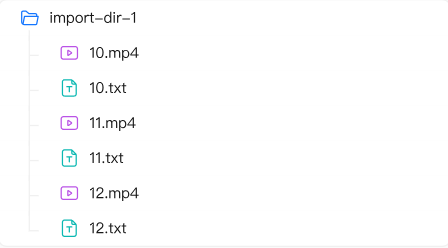

Video classification |

Video + TXT |
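The JSONL annotation file used by the Video + Annotation format stores one complete JSON object per line. The following Python sketch shows one way to produce and read back such a file. The helper names `write_jsonl_annotations` and `read_jsonl_annotations`, and the check that every record has the three example fields, are assumptions for illustration and are not part of ModelArts Studio.

```python
import json


def write_jsonl_annotations(records, out_path):
    """Write annotation records to a JSONL file, one JSON object per line.

    Hypothetical helper: each record is a dict with the keys shown in the
    Video + Annotation example (video_fn, prompt, long_prompt).
    """
    required = ("video_fn", "prompt", "long_prompt")
    with open(out_path, "w", encoding="utf-8") as f:
        for rec in records:
            missing = [key for key in required if key not in rec]
            if missing:
                raise ValueError(f"record {rec.get('video_fn')!r} is missing {missing}")
            # With the default indent=None, json.dumps emits no line breaks and
            # escapes newlines inside strings, so each record stays on one line.
            f.write(json.dumps(rec, ensure_ascii=False) + "\n")


def read_jsonl_annotations(path):
    """Parse a JSONL file back into a list of dicts, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

Because `json.dumps` escapes a newline inside a synopsis as `\n`, even a multi-line description occupies a single line in the output file, which is what keeps the file valid JSONL.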
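Several of the event detection rules in Table 1 can be checked locally before import. The following Python sketch validates a parsed annotation.json against those rules: at least two videos, `subset` set to `train` or `test` with both subsets used, an `mp4` or `avi` extension, a duration greater than 128 s, an FPS of at least 10, and every `label` drawn from `classes`. The function names are hypothetical, and the check that each segment satisfies `0 <= start < end <= duration` is an assumption not stated in the requirements. Python's `json` module rejects `//` comments, so the commented template above must have its comments and placeholders removed before it can be parsed.

```python
import json

# Rules taken from the event detection row of Table 1.
SUPPORTED_EXTS = {"mp4", "avi"}
SUBSETS = {"train", "test"}
MIN_DURATION_S = 128  # each video must be longer than 128 s
MIN_FPS = 10  # each video must have an FPS of at least 10


def validate_event_annotations(data):
    """Return a list of problems in a parsed annotation.json (empty means none found).

    Sketch only. The segment bounds check (0 <= start < end <= duration) is an
    assumption; it is not stated in the documented requirements.
    """
    errors = []
    classes = set(data.get("classes", []))
    database = data.get("database", {})
    if len(database) < 2:
        errors.append("the data source must contain two or more videos")

    seen_subsets = set()
    for name, video in database.items():
        subset = video.get("subset")
        if subset in SUBSETS:
            seen_subsets.add(subset)
        else:
            errors.append(f"{name}: subset must be 'train' or 'test', got {subset!r}")

        if str(video.get("ext", "")).lower() not in SUPPORTED_EXTS:
            errors.append(f"{name}: ext must be mp4 or avi")

        duration = video.get("duration", 0)
        if not duration > MIN_DURATION_S:
            errors.append(f"{name}: duration must be greater than {MIN_DURATION_S} s")
        if not video.get("fps", 0) >= MIN_FPS:
            errors.append(f"{name}: fps must be at least {MIN_FPS}")

        for i, ann in enumerate(video.get("annotations", [])):
            label = ann.get("label")
            if label not in classes:
                errors.append(f"{name}[{i}]: label {label!r} is not in classes")
            seg = ann.get("segment")
            if not (isinstance(seg, list) and len(seg) == 2):
                errors.append(f"{name}[{i}]: segment must be [start, end] in seconds")
            elif not 0 <= seg[0] < seg[1] <= duration:  # assumed bounds check
                errors.append(f"{name}[{i}]: segment {seg} is outside 0..{duration} s")

    # Both the training set and the test set must contain videos.
    for missing in sorted(SUBSETS - seen_subsets):
        errors.append(f"no video is assigned to the {missing} subset")
    return errors


def validate_annotation_file(path):
    """Load a comment-free annotation.json from disk and validate it."""
    with open(path, encoding="utf-8") as f:
        return validate_event_annotations(json.load(f))
```

Returning a list of messages instead of raising on the first problem lets you fix every issue in one pass before uploading the dataset.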
