From MySQL to DWS

This section describes how to configure the parameters of a real-time processing migration job for synchronizing data from one or more MySQL instances to a DWS database.

- Entire database: Synchronize multiple tables from a MySQL instance to multiple tables of a DWS instance in real time.

- Database and table shard: Synchronize multiple table shards of multiple database shards from a MySQL instance to an aggregation table on a DWS instance.

- DDL supports new columns.

Entire DB

- Configure source parameters.

- Select synchronization objects.

- Table-level synchronization: Synchronize multiple tables in multiple databases of a MySQL instance.

- Database-level synchronization: Synchronize all tables in multiple databases of a MySQL instance.

- Select the MySQL tables to be migrated.

Figure 1 Selecting tables

Both databases and tables can be customized. You can select one database and one table, or multiple databases and tables.

- Select synchronization objects.

- Configure destination parameters.

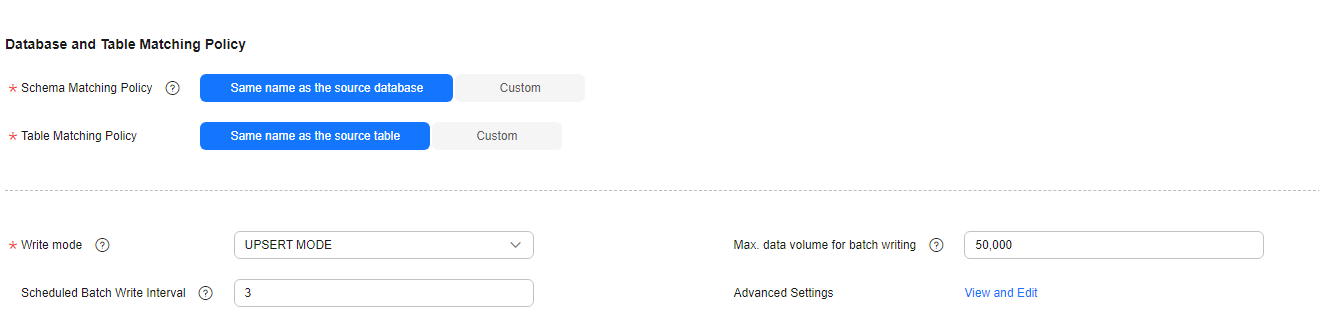

Figure 2 Configuring destination parameters

- Database and Table Matching Policy:

- Database Matching Policy: Configure a matching policy for the destination database so that data can be migrated to the destination database as planned.

You need to create the corresponding database in advance. Otherwise, automatic table creation may fail.

- Same name as the source database: Data will be migrated to the destination database with the same name as the source database.

- Custom: You can specify the destination database name or use #{source_db_name} to add a custom field to the source database name to change it to the name of the destination database of the DWS table.

- Table Matching Policy: Configure a matching policy for the destination table so that data can be migrated to the destination table as planned. This configuration item is unavailable for database-level synchronization.

You do not need to create the corresponding data table in advance. A table will be automatically created.

- Same name as the source table: Data will be migrated to the destination table with the same name as the source table.

- Custom: You can specify the destination table name or use the built-in parameter #{source_table_name} to add a prefix or suffix to the source table name to change it to the name of the destination Hudi table.

- Write mode: Select the data write mode.

UPSERT is the batch update mode, and COPY is the DWS-dedicated high-performance batch import mode.

- Max. data volume for batch writing: Set this parameter based on the table data size and job memory usage. The value range is 1 to 10,000,000.

- Scheduled Batch Write Interval: interval for writing data to the database, in seconds. The value range is 1 to 1,000.

- Advanced Settings: Set the following parameters to configure advanced properties for writing data.

- sink.buffer-flush.max-size: maximum memory for batch write based on the memory and data size configured for the job. The unit is MB.

- sink.case-sensitive: whether fields are case-sensitive. The value can be true or false. When the write mode is COPY MODE and the primary key name contains uppercase letters, set this parameter to true.

- Database Matching Policy: Configure a matching policy for the destination database so that data can be migrated to the destination database as planned.

- Mapping Between Source and Destination Tables: This configuration item is unavailable for database-level synchronization.

Figure 3 Configuring the mapping between source and destination tables

- Edit additional field: Click Edit Additional Field in the Operation column to add custom fields to the migrated DWS table.

Table 1 Supported additional field values Type

Example

Constant

-

Built-in variable

- Source host IP address: source.host

- Source schema name: mgr.source.schema

- Source table name: mgr.source.table

- Destination schema name: mgr.target.schema

- Destination table name: mgr.target.table

Field variable

-

UDF

- substring(#col, pos[, len]): obtains a substring of a specified length from the source column name. The substring range is [pos, pos+len).

- date_format(#col, time_format[, src_tz, dst_tz]): formats the source column name based on a specified time format. The time zone can be converted using src_tz and dst_tz.

- now([tz]): obtains the current time in a specified time zone.

- if(cond_exp, str1, str2): returns str1 if the condition expression cond_exp is met and returns str2 otherwise.

- concat(#col[, #str, ...]): concatenates multiple parameters, including source columns and strings.

- from_unixtime(#col[, time_format]): formats a Unix timestamp based on a specified time format.

- unix_timestamp(#col[, precision, time_format]): converts a time into a Unix timestamp of a specified time format and precision.

For a new table, you can add additional fields to the existing fields in the source table. You can customize the field name (for example, custom_defined_col), select the field type, and enter the field value.

You can add multiple additional fields at a time.

- Automatic table creation: Click Auto Table Creation to automatically create tables in the list based on the configured rules. After the tables are created, Existing table is displayed them.

- Edit additional field: Click Edit Additional Field in the Operation column to add custom fields to the migrated DWS table.

- Database and Table Matching Policy:

Database/Table Partition Scenario

Tables in different databases can be aggregated into one table and written to a destination table during synchronization. The structure of matched shards and tables must be consistent.

- Configure source parameters.

Figure 4 Adding a logical table

Logical Table Name: Enter the name of the table to be written to DWS.

Source Database Filter: You can enter a regular expression to filter all the database shards to be written to the destination GaussDB(DWS) aggregation table.

Source Table Filter: You can enter a regular expression to filter all the table shards in the source database shard to be written to the destination GaussDB(DWS) aggregation table.

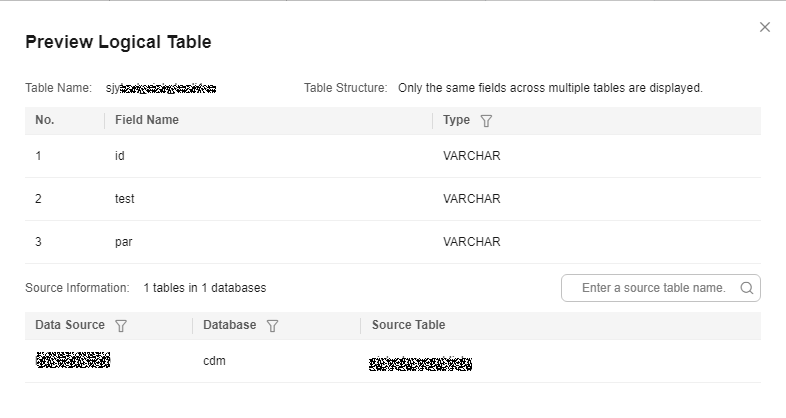

You can click Preview in the Operation column to preview an added logical table.

Preview the logical table. The more the source tables, the longer the waiting time.

Figure 5 Previewing the logical table

- Configure destination parameters.

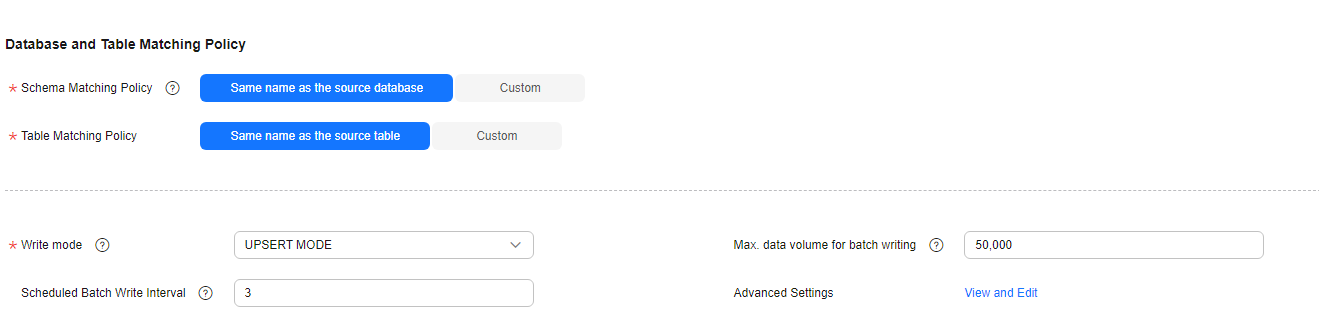

Figure 6 Configuring destination parameters

- Database and Table Matching Policy:

- Destination Database Name: Enter a custom destination database name.

- Table Matching Policy: The default value is the same as the name of the source logical table and cannot be changed.

- Write mode: Select the data write mode.

UPSERT is the batch update mode, and COPY is the DWS-dedicated high-performance batch import mode.

- Max. data volume for batch writing: Set this parameter based on the table data size and job memory usage. The value range is 1 to 10,000,000.

- Scheduled Batch Write Interval: interval for writing data to the database, in seconds. The value range is 1 to 1,000.

- Advanced Settings: Set the following parameters to configure advanced properties for writing data.

- sink.buffer-flush.max-size: maximum memory for batch write based on the memory and data size configured for the job. The unit is MB.

- sink.case-sensitive: whether fields are case-sensitive. The value can be true or false. When the write mode is COPY MODE and the primary key name contains uppercase letters, set this parameter to true.

- Mapping Between Source and Destination Tables

Figure 7 Configuring the mapping between source and destination tables

- Edit additional field: Click Edit Additional Field in the Operation column to add custom fields to the migrated DWS table.

Table 2 Supported additional field values Type

Example

Constant

-

Built-in variable

- Source host IP address: source.host

- Source schema name: mgr.source.schema

- Source table name: mgr.source.table

- Destination schema name: mgr.target.schema

- Destination table name: mgr.target.table

Field variable

-

UDF

- substring(#col, pos[, len]): obtains a substring of a specified length from the source column name. The substring range is [pos, pos+len).

- date_format(#col, time_format[, src_tz, dst_tz]): formats the source column name based on a specified time format. The time zone can be converted using src_tz and dst_tz.

- now([tz]): obtains the current time in a specified time zone.

- if(cond_exp, str1, str2): returns str1 if the condition expression cond_exp is met and returns str2 otherwise.

- concat(#col[, #str, ...]): concatenates multiple parameters, including source columns and strings.

- from_unixtime(#col[, time_format]): formats a Unix timestamp based on a specified time format.

- unix_timestamp(#col[, precision, time_format]): converts a time into a Unix timestamp of a specified time format and precision.

For a new table, you can add additional fields to the existing fields in the source table. You can customize the field name (for example, custom_defined_col), select the field type, and enter the field value.

You can add multiple additional fields at a time.

- Automatic table creation: Click Auto Table Creation to automatically create tables in the list based on the configured rules. After the tables are created, Existing table is displayed them.

- Edit additional field: Click Edit Additional Field in the Operation column to add custom fields to the migrated DWS table.

- Database and Table Matching Policy:

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.See the reply and handling status in My Cloud VOC.

For any further questions, feel free to contact us through the chatbot.

Chatbot