Developing a Batch Processing Single-Task SQL Job

This section describes how to develop and configure a job.

For details about how to develop a batch processing job in single-task mode, see sections Developing an SQL Script, Configuring Job Parameters, Data Table, Testing and Saving the Job, and Downloading or Dumping a Script Execution Result.

Prerequisites

- A job has been created. For details, see Creating a Job.

- You have locked the job. Otherwise, you must click Lock so that you can develop the job. A job you create or import is locked by you by default. For details, see the lock function.

Developing an SQL Script

- Log in to the DataArts Studio console by following the instructions in Accessing the DataArts Studio Instance Console.

- On the DataArts Studio console, locate a workspace and click DataArts Factory.

- In the left navigation pane of DataArts Factory, choose .

- In the job directory, double-click the name of a single-task job to access the job development page.

- On the right of the SQL editor, click Basic Info to configure basic information, properties, and advanced settings of the job. Table 1 lists the basic information, Table 2 lists the properties, and Table 3 lists the advanced settings.

Table 1 Basic job information Parameter

Description

Owner

An owner configured during job creation is automatically matched. This parameter value can be modified.

Job Agency

This parameter is available when Scheduling Identities is set to Yes.

After an agency is configured, the job interacts with other services as an agency during job execution.

Priority

Priority configured during job creation is automatically matched. This parameter value can be modified.

Execution Timeout

Timeout of the job instance. If this parameter is set to 0 or is not set, this parameter does not take effect. If the notification function is enabled for the job and the execution time of the job instance exceeds the preset value, the system sends a specified notification, and the job keeps running.

Exclude Waiting Time from Instance Timeout Duration

Whether to exclude the wait time from the instance execution timeout duration

If you select this option, the time to wait before an instance starts running is excluded from the timeout duration. You can modify this setting in Default Configuration > Exclude Waiting Time from Instance Timeout Duration.

If you do not select this option, the time to wait before an instance starts running is included in the timeout duration.

Custom Parameter

Set the name and value of the parameter.

Job Tag

Configure job tags to manage jobs by category.

Click Add to add a tag to the job. You can also select a tag configured in Managing Job Tags.

Table 2 Attributes of a batch processing single-task SQL job Property

Description

DLI SQL properties

DLI Data Directory

Select the DLI data directory.

- Default DLI data directory dli

- Metadata catalog that has been created in LakeFormation associated with DLI.

Database Name

Select a database.

If you select the default DLI data directory dli, select a DLI database and tables.

If you select a metadata catalog that has been created in LakeFormation associated with DLI, select a LakeFormation database and tables.

Queue Name

The queue set in the SQL script is selected by default. You can change another one.

You can create a resource queue using either of the following methods:- Click

. On the displayed Queue Management page of DLI, create a resource queue.

. On the displayed Queue Management page of DLI, create a resource queue. - Go to the DLI console to create a resource queue.

Record Dirty Data

Click

to specify whether to record dirty data.

to specify whether to record dirty data.- If you select

, dirty data will be recorded.

, dirty data will be recorded. - If you do not select

, dirty data will not be recorded.

, dirty data will not be recorded.

DLI Environmental Variable

- The environment variable must start with hoodie., dli.sql., dli.ext., dli.jobs., spark.sql., or spark.scheduler.pool.

- If the environment variable is dli.sql.autoBroadcastJoinThreshold, the value must be an integer. If the environment variable is dli.sql.shuffle.partitions, the value must be a positive integer.

- If the key of the environment variable is dli.sql.shuffle.partitions or dli.sql.autoBroadcastJoinThreshold, the environment variable cannot contain the greater than (>) or less than (<) sign.

- If a parameter with the same name is configured in both a job and a script, the parameter value configured in the job will overwrite that configured in the script.

NOTE:

When you run a DLI SQL script or test a DLI SQL single-task job in non-scheduling scenarios, the following parameters are enabled by default:

- spark.sql.adaptive.enabled: Adaptive Query Execution (AQE) is enabled so that Spark can dynamically optimize the query execution plan based on the characteristics of the data being processed and improve the performance by reducing the amount of data to be processed.

- spark.sql.adaptive.join.enabled: AQE is enabled for join operations. The optimal join algorithm is selected based on the data being processed to improve performance.

- spark.sql.adaptive.skewedJoin.enabled: AQE is enabled for skewed join operations. Skewed data can be automatically detected and the join algorithm is optimized accordingly to improve performance.

- spark.sql.mergeSmallFiles.enabled: Merging of small files is enabled. Small files can be merged into large ones, improving performance and shortening the processing time. In addition, fewer files need to be read from remote storage, and more local files can be used.

If you do not want to use these functions, you can set the values of the preceding parameters to false.

DWS SQL properties

Data Connection

Select a data connection.

Database

Select a database.

Dirty Data Table

Name of the dirty data table defined in the SQL script.

The dirty data attributes cannot be edited. They are automatically recommended by the SQL script content.

Matching Rule

Enter a Java regular expression used to match the DWS SQL result. For example, if the expression is (?<=\()(-*\d+?)(?=,) and the SQL result is (1,"error message"), then the matched result is "1".

Failure Matching Value

If the matched content equals the set value, the node fails to be executed.

RDS SQL properties

Data Connection

Select a data connection.

Database

Select a database.

Spark SQL properties

MRS Job Name

MRS job name. The system automatically sets this parameter based on the job name.

If the MRS job name is not set and the direct connection mode is selected, the node name can contain only letters, digits, hyphens (-), and underscores (_). A maximum of 64 characters are allowed, and Chinese characters are not allowed.

NOTE:If you select an MRS API data connection, you cannot set the job name.

Data Connection

Select a data connection.

MRS Resource Queue

Select a created MRS resource queue.

NOTE:Select a queue you configured in the queue permissions of DataArts Security. If you set multiple resource queues for this node, the resource queue you select here has the highest priority.

Database

Select a database. If you select an MRS API connection, you cannot select a database.

Program Parameter

Set program parameters.

The following is an example:

Set Parameter to --queue and Value to default_cr, indicating that a specified queue of the MRS cluster is configured. You can also go to the MRS console, click the name of the MRS cluster and then the Jobs tab, locate the job, click More in the Operation column, and select View Details to view the job details.

NOTE:Configure optimization parameters such as threads, memory, and vCPUs for the job to optimize resource usage and improve job execution performance. This configuration is unavailable if a Spark proxy connection is used.

Spark SQL jobs with a single operator and using a connection of the MRS API type support program parameters.

Hive SQL properties

MRS Job Name

MRS job name. The system automatically sets this parameter based on the job name.

If the MRS job name is not set and the direct connection mode is selected, the node name can contain only letters, digits, hyphens (-), and underscores (_). A maximum of 64 characters are allowed, and Chinese characters are not allowed.

Data Connection

Select a data connection.

Database

Select a database.

MRS Resource Queue

Select a created MRS resource queue.

Program Parameter

Set program parameters.

The following is an example:

Set Parameter to --hiveconf and Value to mapreduce.job.queuename=default_cr, indicating that a specified queue of the MRS cluster is configured. You can also go to the MRS console, click the name of the MRS cluster and then the Jobs tab, locate the job, click More in the Operation column, and select View Details to view the job details.

NOTE:Configure optimization parameters such as threads, memory, and vCPUs for the job to optimize resource usage and improve job execution performance. This configuration is unavailable if a Hive proxy connection is used.

Hive SQL jobs with a single operator and using a connection of the MRS API type support program parameters.

Doris SQL properties

Data Connection

Select a data connection.

Database

Select a database.

Table 3 Advanced parameters Parameter

Mandatory

Description

Node Status Polling Interval (s)

Yes

How often the system checks whether the node execution is complete. The value ranges from 1 to 60 seconds.

During the node execution, the system checks whether the node execution is complete at the configured interval.

Max. Node Execution Duration

Yes

Execution timeout interval for the node. If retry is configured and the execution is not complete within the timeout interval, the node will be executed again.

Retry upon Failure

Yes

Whether to re-execute a node if it fails to be executed.

- Yes: The node will be re-executed, and the following parameters must be configured:

- Retry upon Timeout

- Maximum Retries

- Retry Interval (seconds)

- No: The node will not be re-executed. This is the default value.

NOTE:

If retry is configured for a job node and the timeout duration is configured, the system allows you to retry a node when the node execution times out.

If a node is not re-executed when it fails upon timeout, you can go to the Default Configuration page to modify this policy.

Retry upon Timeout is displayed only when Retry upon Failure is set to Yes.

Policy for Handling Subsequent Nodes If the Current node Fails

Yes

Policy for handling subsequent nodes if the current node fails

- End the current job execution plan: Execution of the current job will stop, and the job instance status will become Failed. If the job is scheduled periodically, subsequent periodic scheduling will run properly.

- Ignore failed: The current node fails to be ignored, and the job instance status is Ignore failed. If the job is scheduled periodically, subsequent periodic scheduling will run properly.

- Enter one or more SQL statements in the SQL editor.

- SQL statements are separated by semicolons (;). If semicolons are used in other places but not used to separate SQL statements, escape them with backslashes (\). The following is an example:

select 1; select * from a where b="dsfa\;"; --example 1\;example 2.

- RDS SQL does not support the begin ... commit transaction syntax. If necessary, use the start transaction ... commit transaction syntax.

- The SQL script cannot be larger than 1 MB.

- The system date obtained by using an SQL statement is different from that obtained by using the database tool. The query result is stored in the database in the YYYY-MM-DD format, but the query result displayed on the page is in the converted format.

- When a user submits a Spark SQL script to MRS, the script is submitted to the tenant queue bound to the user by default. The bound queue is the queue corresponding to tenant role of the user. If there are multiple queues, the system preferentially selects a queue based on the queue priorities. To set a fixed queue for the user to submit scripts, log in to FusionInsight Manager, choose Tenant Resources > Dynamic Resource Plan, and click the Global User Policy tab. For details, see Managing Global User Policies.

- You can click Check Syntax to check the syntax of a Spark SQL or Hive SQL script. Click Check Syntax. After the SQL statement is checked, you can view the syntax check details.

To facilitate script development, DataArts Factory provides the following capabilities:- The script editor supports the following shortcut keys, which improve the script development efficiency:

- F8: Run a script.

- F9: Stop running a script.

- Ctrl + /: Comment out or uncomment the line or code block at the cursor.

- Ctrl +Z: Cancel

- Ctrl + F: Search

- Ctrl + Shift + R: Replace

- Ctrl + X: Cut

- Ctrl + S: Save a script.

- Alt + mouse dragging: Select columns to edit a block.

- Ctrl + mouse click: Select multiple lines to edit or indent them together.

- Ctrl + → (or ←): Move the cursor rightwards (or leftwards) by word.

- Ctrl + Home or Ctrl + End: Navigate to the beginning or end of the current file.

- Home or End: Navigate to the beginning or end of the current line.

- Ctrl + Shift + L: Double-click all the same character strings and add cursors to them to implement batch modification.

- Ctrl + D: Delete a line.

- Shift + Ctrl + U: Unlock a script.

- Ctrl + Alt + K: Select the word where the cursor resides.

- Ctrl + B: Format

- Ctrl + Shift + Z: Redo

- Ctrl + Enter: Execute the selected line or content.

- Ctrl + Alt + F: Flag

- Ctrl + Shift + K: Search for the previous one.

- Ctrl + K: Search for the next one.

- Ctrl + Backspace: Delete the word to the left of the cursor.

- Ctrl + Delete: Delete the word to the right of the cursor.

- Alt + Backspace: Delete all content from the beginning of the line to the cursor.

- Alt + Delete: Delete all content from the cursor to the end of the line.

- Alt + Shift-Left: Select all content from the beginning of the line to the cursor.

- Alt + Shift-Right: Select all content from the cursor to the end of the line.

- System functions

To view the functions supported by this type of data connection, click System Functions on the right of the editor. You can double-click a function to the editor to use it.

- Script parameters

Enter script parameters in the SQL statement and click Parameter Setup in the right pane of the editor and then click Update from Script. You can also directly configure parameters and constants for the job script.

In the following script example, str1 indicates the parameter name. It can contain only letters, digits, hyphens (-), underscores (_), greater-than signs (>), and less-than signs (<), and can contain a maximum of 16 characters. The parameter name must be unique.

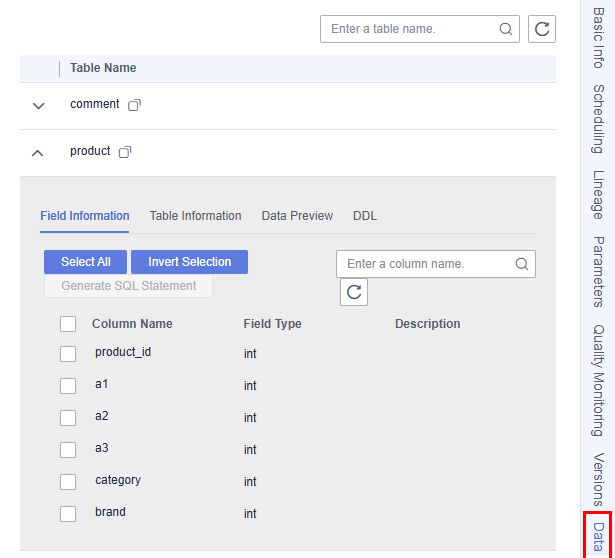

select ${str1} from data; - Visualized reading of data tables to generate SQL statements

Click Data Tables on the right of the editor to display all the tables in the current database or schema. You can select tables and columns and click Generate SQL Statement in the lower right corner to generate an SQL statement, which you need to manually format.

- SQL statements are separated by semicolons (;). If semicolons are used in other places but not used to separate SQL statements, escape them with backslashes (\). The following is an example:

- (Optional) In the upper part of the editor, click Format to format SQL statements.

- In the upper part of the editor, click Execute. If you need to execute some SQL statements separately, select the SQL statements first. After executing the SQL statements, view the execution history and result of the script in the lower part of the editor.

- You can click View Log to view logs of the job.

- You can control display of the script execution history by setting Script Execution History in Default Configuration to Myself or All users.

- You can clear the filter criteria for the SQL script execution results displayed in a list with one click.

- Above the editor, click Save to save the job.

Configuring Job Parameters

Click Parameter Setup on the right of the editor and set the parameters described in Table 4.

|

Module |

Description |

|---|---|

|

Variables |

|

|

Add |

Click Add and enter the variable parameter name and parameter value in the text boxes.

After the parameter is configured, it is referenced in the format of ${Parameter name} in the job. |

|

Edit Parameter Expression |

Click |

|

Modifying a Job |

Change the parameter name or value in the corresponding text boxes. |

|

Mask |

If the parameter value is a key, click |

|

Delete |

Click |

|

Constant Parameter |

|

|

Add |

Click Add and enter the constant parameter name and parameter value in the text boxes.

After the parameter is configured, it is referenced in the format of ${Parameter name} in the job. |

|

Edit Parameter Expression |

Click |

|

Modifying a Job |

Modify the parameter name and parameter value in text boxes and save the modifications. |

|

Delete |

Click |

|

Workspace Environment Variables |

|

|

View the variables and constants that have been configured in the workspace. |

|

Click the Parameter Preview tab and configure the parameters listed in Table 5.

|

Module |

Description |

|---|---|

|

Current Time |

This parameter is displayed only when Scheduling Type is set to Run once. The default value is the current time. |

|

Event Triggering Time |

This parameter is displayed only when Scheduling Type is set to Event-based. The default value is the time when an event is triggered. |

|

Scheduling Period |

This parameter is displayed only when Scheduling Type is set to Run periodically. The default value is the scheduling period. |

|

Start Time |

This parameter is displayed only when Scheduling Type is set to Run periodically. The value is the configured job execution time. |

|

Start Time |

This parameter is displayed only when Scheduling Type is set to Run periodically. The value is the time when the periodic job scheduling starts. |

|

Subsequent Instances |

Number of job instances scheduled.

|

In Parameter Preview, if a job parameter has a syntax error, the system displays a message.

If a parameter depends on the data generated during job execution, such data cannot be simulated and displayed in Parameter Preview.

Monitoring Quality

Data migration and single-task real-time jobs that have been orchestrated cannot be associated with quality jobs.

Two execution modes are available: parallel and serial. Click the Quality Monitoring tab on the right of the canvas to expand the slide-out panel and configure the parameters listed in Table 6.

|

Parameter |

Description |

|---|---|

|

Execution Mode |

Execution mode of quality monitoring. The options are as follows:

|

|

Quality job |

Quality jobs to be associated with the single-task job

|

Data Table

You can view tables of Hive SQL, Spark SQL, DLI SQL, Doris SQL, RDS SQL, and DWS SQLsingle-task batch processing jobs. On the Data Tables slide-out panel, you can select a table name to view the column names, field types, and descriptions in the table.

Testing and Saving the Job

After configuring the job, perform the following operations:

- Click

to execute the job.

to execute the job.

You can view the run logs of the job by clicking View Log.

- After the job is executed, click

to save the job configuration.

to save the job configuration.

After the job is saved, a version is automatically generated and displayed in Versions. The version can be rolled back. If you save a job multiple times within a minute, only one version is recorded. If the intermediate data is important, you can click Save new version to save and add a version.

Downloading or Dumping Script Execution Results

- After executing a script, you can click Download on the Result tab page to download a CSV result file to a local path. You can view the download record on the Download Center page.

- After executing a script, you can click Dump on the Result tab page to dump a CSV and a JSON result file to OBS. For details, see Table 7.

- The dump function is supported only if the OBS service is available.

- Only the execution results of SQL script query statements can be dumped.

- If the execution result of a download or dump SQL statement contains commas (,), newline characters, or other special characters, data may be disordered, the number of rows may increase, or other issues may occur.

Table 7 Dump parameters Parameter

Mandatory

Description

Data Format

Yes

Format of the data to be exported, which can be CSV or JSON

Resource Queue

No

DLI queue where the export operation is to be performed. Set this parameter when a DLI or SQL script is created.

Compression Format

No

Format of compression. Set this parameter when a DLI or SQL script is created.

- none

- bzip2

- deflate

- gzip

Storage Path

Yes

OBS path where the result file is stored. After selecting an OBS path, customize a folder. Then, the system will create it automatically for storing the result file.

You can also go to the Download Center page to set the default OBS path, which will be automatically set for Storage Path in the Dump Result dialog box.

Cover Type

No

If a folder that has the same name as your custom folder exists in the storage path, select a cover type. Set this parameter when a DLI or SQL script is created.

- Overwrite: The existing folder will be overwritten by the customized folder.

- Report: The system reports an error and suspends the export operation.

Export Column Name

No

Yes: Column names will be exported.

No: Column names will not be exported.

Character Set

No

- UTF-8: default character set

- GB2312: recommended when the data to be exported contains Chinese character sets

- GBK: expanded based on and compatible with GB2312

Quotation Character

No

This parameter is available and can be set only when Data Format is csv.

Quotation characters are used to identify the beginning and end of text fields when exporting job results, and are used to separate fields.

Only one character can be set. The default value is double quotation marks (").

This is mainly used to handle data that contains spaces, special characters, or characters that are the same as the delimiter.

For details about the examples of using quotation characters and escape characters, see Example of Using Quotation Characters and Escape Characters.

Escape Character

No

This parameter is available and can be set only when Data Format is csv.

If special characters, such as quotation marks, need to be included in the exported results, they can be represented using escape characters (backslash \).

Only one character can be set. The default value is a backslash (\).

Common scenarios for using escape characters are:

- If there is a third quotation mark between two quotation marks, add an escape character before the third quotation mark to prevent the field content from being split.

- If there is already an escape character in the data content, add another escape character before the existing one to avoid the original character being used as an escape character.

For details about the examples of using quotation characters and escape characters, see Example of Using Quotation Characters and Escape Characters.

|

SQL Type |

Maximum Number of Results That You Can View Online |

Maximum Number/Size of Results That Can Be Downloaded |

Maximum Number/Size of Results That Can Be Dumped |

|---|---|---|---|

|

DLI |

1,000 |

1,000 records, less than 3MB |

Unlimited |

|

Hive |

1,000 |

1,000 records, less than 3MB |

10,000 records or 3 MB |

|

DWS |

1,000 |

1,000 records, less than 3MB |

10,000 records or 3 MB |

|

Spark |

1,000 |

1,000 records, less than 3MB |

10,000 records or 3 MB |

|

RDS |

1,000 |

1,000 records, less than 3MB |

Not supported |

|

Doris |

1,000 |

1,000 records, less than 3MB |

1,000 records or 3 MB |

Example of Using Quotation Characters and Escape Characters

- Usage of quotation characters and escape characters:

- Quotation character: used to identify and separate fields. The default value is double quotation marks (").

- Escape character: If special characters, such as quotation marks, need to be included in the exported results, they can be represented using escape characters (backslash \). The default value is a backslash (\).

- To prevent the content of a field from being split when there is a third quotation character between two quotation characters, add an escape character before the third quotation character.

- If there is already an escape character in the data content, add another escape character before the existing one to avoid the original character being used as an escape character.

- Example:

You can leave Quotation Character and Escape Character empty.

If you leave them empty, the downloaded .csv file contains two rows in Excel.

If you specify both of them, for example, enter double quotation marks ("), the downloaded file is as follows.

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.See the reply and handling status in My Cloud VOC.

For any further questions, feel free to contact us through the chatbot.

Chatbot