CREATE TABLE PARTITION

Function

CREATE TABLE PARTITION creates a partitioned table. A partitioned table is a logical table that is divided into segments, called partitions whose data is stored on different physical blocks.

Common partitioning strategies define data ranges based on one or more columns. Each partition stores data within a range. These columns are called partition keys.

Currently, DWS row-store and column-store tables support range partitioning and list partitioning.

- Range partitioning

A table is partitioned into ranges defined by a key column or set of columns, with no overlap between the ranges of values assigned to different partitions. Each range has a dedicated partition for data storage.

- Core feature: Data is distributed by consecutive intervals. It is used in scenarios where data is ordered and retrieved by range.

- Scenario: Partition keys increase linearly or have continuous intervals. Data query is usually performed around a certain range, and new data naturally falls into a new range. For example, data about orders is partitioned by time range, and data about user levels is partitioned by value range.

- Range partitioning maps data to partitions based on ranges of values of the partitioning key that you establish for each partition. This is the most commonly used partitioning policy. Currently, range partitioning only allows the use of the range partitioning policy.

- List partitioning

List partitioning allocates records to partitions based on the key values in each partition. The key values do not overlap in different partitions. Each set of key values gets its own partition for storing related data. List partitioning is supported only by clusters of version 8.1.3 or later.

- Core feature: Data is divided based on discrete enumerated values, which are used as fixed classification category or high-frequency filter criteria.

- Scenario: Partition key values are fixed and discrete. Data query is usually based on a certain category, and key values do not increase irregularly. For example, the user information table is partitioned by province, and each province corresponds to a partition.

- In list partitioning, data is mapped to a created partition based on the partition key value. If the data can be mapped to, it is inserted into the specific partition. If it cannot be mapped to, error messages are returned.

Precautions

- A single table can have up to 32,767 partitions with a total of 32,767 boundary values. For better performance, keep this value at or below 1,000.

- A partitioned table supports unique and primary key constraints. The constraint keys of these constraints contain all partition keys.

Syntax

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

CREATE TABLE [ IF NOT EXISTS ] partition_table_name ( [ { column_name data_type [ COLLATE collation ] [ column_constraint [ ... ] ] | table_constraint | LIKE source_table [ like_option [...] ] }[, ... ] ] ) [ WITH ( {storage_parameter = value} [, ... ] ) ] [ COMPRESS | NOCOMPRESS ] [ DISTRIBUTE BY { REPLICATION | ROUNDROBIN | { [ HASH ] ( column_name ) } } ] [ TO { GROUP groupname | NODE ( nodename [, ... ] ) } ] PARTITION BY { {VALUES (partition_key)} | {RANGE (partition_key) ( partition_less_than_item [, ... ] )} | {RANGE (partition_key) ( partition_start_end_item [, ... ] )} | {LIST (partition_key) (list_partition_item [, ...])} } [ { ENABLE | DISABLE } ROW MOVEMENT ]; |

CREATE TABLE PARTITION Main Clause Parameters

|

Parameter |

Description |

Value Range or Example |

||||||

|---|---|---|---|---|---|---|---|---|

|

IF NOT EXISTS |

Does not throw an error if a table with the same name exists. A notice is issued in this case. |

- |

||||||

|

partition_table_name |

Name of the partitioned table |

A string, which must comply with the naming convention. For details, see Identifier Naming Conventions. |

||||||

|

column_name |

Specifies the name of a column to be created in the new table. |

A string, which must comply with the naming convention. For details, see Identifier Naming Conventions. |

||||||

|

data_type |

Specifies the data type of the column. |

- |

||||||

|

COLLATE collation |

The COLLATE clause defines the collation rule of a column. The supported data types include char, varchar, text, nchar, and nvarchar. The collation rule significantly impacts the sequence of query results, particularly when using SELECT ... ORDER BY. If no collation rule is specified, the default collation rule C (case-sensitive) is used. Common collation rules:

You can query collation rules supported by DWS from the PG_COLLATION system catalog.

To check the default collation rule of the current database, run the following statement:

|

A case-insensitive collation rule for the values of a column

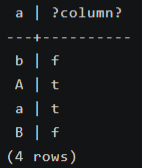

The following figure shows that A and a are equivalent.

|

||||||

|

CONSTRAINT constraint_name |

Specifies a name for a column or table constraint. The optional constraint clauses specify constraints that new or updated rows must satisfy for an insert or update operation to succeed. There are two ways to define constraints:

|

For details about the supported constraints, see Table 3. |

||||||

|

LIKE source_table [ like_option ... ] |

Specifies a table from which the new table automatically copies all column names, their data types, and their not-null constraints. |

For details about the supported constraints, see LIKE Parameters. |

||||||

|

WITH |

Specifies an optional storage parameter for a table or an index. |

For details about the supported WITH parameters, see Table 4. |

||||||

|

COMPRESS / NOCOMPRESS |

Specifies the keyword COMPRESS during the creation of a table, so that the compression feature is triggered in the case of a bulk INSERT operation. If this feature is enabled, a scan is performed for all tuple data within the page to generate a dictionary and then the tuple data is compressed and stored. If NOCOMPRESS is specified, the table is not compressed. |

Default value: NOCOMPRESS, tuple data is not compressed before storage. |

||||||

|

DISTRIBUTE BY |

Specifies how the table is distributed or replicated between DNs. |

For details about other restrictions, see DISTRIBUTE BY Parameter Description.

CAUTION:

|

||||||

|

TO { GROUP groupname | NODE ( nodename [, ... ] ) } |

TO GROUP specifies the Node Group in which the table is created. Currently, it cannot be used for HDFS tables. TO NODE is used for internal scale-out tools. |

- |

||||||

|

{ ENABLE | DISABLE } ROW MOVEMENT |

Specifies whether to relocate a row within a table. If the new value of a partition key in a row belongs to another partition, an error message is displayed or the row is moved to the corresponding partition.

The default value is DISABLE. Suggestions:

CAUTION:

To enable cross-partition update, you need to enable row movement. However, if SELECT FOR UPDATE is executed concurrently to query the partitioned table, the query results may be inconsistent. Therefore, exercise caution when performing this operation. |

The date column (partition key) of a partitioned table is partitioned by quarter into p_2023q1 and p_2023q2. The value 2023-02-15 of a row belongs to the partition p_2023q1 in the first quarter. After the value is updated to 2023-05-15, you can configure ROW MOVEMENT to determine whether to move the data in this row.

For details about examples, see Examples: Enabling and Disabling ROW MOVEMENT. |

CONSTRAINT constraint_name Parameter

1 2 3 4 5 6 7 |

{ NOT NULL | NULL | CHECK ( expression ) | DEFAULT default_expr | UNIQUE [ NULLS [NOT] DISTINCT | NULLS IGNORE ] index_parameters | PRIMARY KEY index_parameters } [ DEFERRABLE | NOT DEFERRABLE | INITIALLY DEFERRED | INITIALLY IMMEDIATE ] |

|

Parameter |

Description |

Value Range or Example |

||||||||

|---|---|---|---|---|---|---|---|---|---|---|

|

NOT NULL |

Specifies that the column is not allowed to contain NULL values. |

- |

||||||||

|

NULL |

Specifies that the column can store NULL values. This is the default. This clause is only provided for compatibility with non-standard SQL databases. You are advised not to use this clause. |

- |

||||||||

|

CHECK (condition) [ NO INHERIT ] |

Specifies an expression producing a Boolean result which new or updated rows must satisfy for an insert or update operation to succeed. Expressions evaluating to TRUE or UNKNOWN succeed, or an error is reported and the execution fails. A check constraint specified as a column constraint should reference only the column's values, while an expression appearing in a table constraint can reference multiple columns. A constraint marked with NO INHERIT will not propagate to child tables. |

Single-column constraints: The price must be a positive number.

Insert a data record where the price is a negative number.

The constraint is violated, so an error is reported and the insertion fails.

Table constraints (on multiple columns): Create an inventory table with two columns available and reserved. One column indicates available quantity and the other column indicates reserved quantity. The available quantity must be greater than or equal to the reserved quantity.

|

||||||||

|

DEFAULT default_expr |

Assigns a default data value for a column. The value can be any variable-free expressions (Subqueries and cross-references to other columns in the current table are not allowed). The data type of the default expression must match the data type of the column. The default expression will be used in any insert operation that does not specify a value for the column. If there is no default value for a column, then the default value is NULL. |

- |

||||||||

|

UNIQUE [ NULLS [NOT] DISTINCT | NULLS IGNORE ] index_parameters UNIQUE [ NULLS [NOT] DISTINCT | NULLS IGNORE ] ( column_name [, ... ] ) index_parameters |

Specifies that a group of one or more columns of a table can contain only unique values. The [ NULLS [ NOT ] DISTINCT | NULLS IGNORE ] field is used to specify how to process null values in the index column of the Unique index. |

Default value: NULL can be inserted repeatedly. When the inserted data is compared with the original data in the table, the NULL value can be processed in any of the following ways:

Table 6 lists the behaviors of the three processing modes. For details, see Application Differences Among NULLS DISTINCT, NULLS NOT DISTINCT, and NULLS IGNORE.

CAUTION:

If DISTRIBUTE BY REPLICATION is not specified, the column table that contains only unique values must contain distribution columns. |

||||||||

|

PRIMARY KEY index_parameters PRIMARY KEY ( column_name [, ... ] ) index_parameters |

Specifies the primary key constraint specifies that a column or columns of a table can contain only unique (non-duplicate) and non-null values. Only one primary key can be specified for a table.

CAUTION:

If DISTRIBUTE BY REPLICATION is not specified, the column set with a primary key constraint must contain distributed columns. |

- |

||||||||

|

DEFERRABLE | NOT DEFERRABLE |

Sets whether the constraint can be deferrable. A constraint that is not deferrable will be checked immediately after every command. Checking of constraints that are deferrable can be postponed until the end of the transaction using the SET CONSTRAINTS command. NOT DEFERRABLE is the default value. Currently, only UNIQUE and PRIMARY KEY constraints of row-store tables accept this clause. All the other constraints are not deferrable. |

- |

||||||||

|

INITIALLY IMMEDIATE | INITIALLY DEFERRED |

If a constraint is deferrable, this clause specifies the default time to check the constraint.

The constraint check time can be altered using the SET CONSTRAINTS command. |

- |

table_constraint Parameters

1 2 3 4 |

{ CHECK ( expression ) | UNIQUE [ NULLS [NOT] DISTINCT | NULLS IGNORE ] ( column_name [, ... ] ) index_parameters | PRIMARY KEY ( column_name [, ... ] ) index_parameters} [ DEFERRABLE | NOT DEFERRABLE | INITIALLY DEFERRED | INITIALLY IMMEDIATE ] |

LIKE Parameters

1

|

{ INCLUDING | EXCLUDING } { DEFAULTS | CONSTRAINTS | INDEXES | STORAGE | COMMENTS | RELOPTIONS | DISTRIBUTION | ALL } |

The new table and original table are decoupled after creation is complete. Changes to the original table will not be applied to the new table, and it is not possible to include data of the new table in scans of the original table.

Default expressions for the copied column definitions will only be copied if INCLUDING DEFAULTS is specified. The default behavior is to exclude default expressions, resulting in the copied columns in the new table having default values NULL.

NOT NULL constraints are always copied to the new table. CHECK constraints will only be copied if INCLUDING CONSTRAINTS is specified; other types of constraints will never be copied. These rules also apply to column constraints and table constraints.

Columns and constraints copied by LIKE are not merged with the same name. If the same name is specified explicitly or in another LIKE clause, an error is reported.

- Any indexes on the source table will not be created on the new table, unless the INCLUDING INDEXES clause is specified.

- STORAGE settings for the copied column definitions will only be copied if INCLUDING STORAGE is specified. The default behavior is to exclude STORAGE settings.

- Comments for the copied columns, constraints, and indexes will only be copied if INCLUDING COMMENTS is specified. The default behavior is to exclude comments.

- If INCLUDING RELOPTIONS is specified, the new table will copy the storage parameter (WITH clause of the source table) of the source table. The default behavior is to exclude partition definition of the storage parameter of the source table.

- If INCLUDING DISTRIBUTION is specified, the new table will copy the distribution information of the source table, including distribution type and column, and the new table cannot use DISTRIBUTE BY clause. The default behavior is to exclude distribution information of the source table.

- INCLUDING ALL is an abbreviated form of INCLUDING DEFAULTS INCLUDING CONSTRAINTS INCLUDING INDEXES INCLUDING STORAGE INCLUDING COMMENTS INCLUDING RELOPTIONS INCLUDING DISTRIBUTION.

WITH Clause Parameters

|

Parameter |

Description |

Value Range |

|---|---|---|

|

FILLFACTOR |

The fillfactor of a table is a percentage between 10 and 100. 100 (complete packing) is the default value. When a smaller fillfactor is specified, INSERT operations pack table pages only to the indicated percentage. The remaining space on each page is reserved for updating rows on that page. This gives UPDATE a chance to place the updated copy of a row on the same page, which is more efficient than placing it on a different page. For a table whose records are never updated, setting the fillfactor to 100 (complete packing) is the appropriate choice, but in heavily updated tables smaller fillfactors are appropriate. The parameter has no meaning for column-store tables. |

10~100 |

|

ORIENTATION |

Determines the storage mode of the data in the table.

CAUTION:

orientation cannot be modified. |

|

|

COMPRESSION |

This parameter is available only for column-store tables. Value: LOW (default value), MIDDLE, or HIGH.

NOTE:

Currently, row-store table compression is not supported. |

- |

|

MAX_BATCHROW |

Specifies the maximum of a storage unit during data loading process. The parameter is only valid for column-store tables. |

10000 to 60000 Default value: 60000 |

|

PARTIAL_CLUSTER_ROWS |

Specifies the number of records to be partial cluster stored during data loading process. The parameter is only valid for column-store tables. |

The value is no less than 100,000. The value is the multiple of MAX_BATCHROW. |

|

enable_delta |

Specifies whether to enable delta tables in column-store tables. The parameter is only valid for column-store tables. Using column-store tables with delta tables is not recommended. This may cause disk bloat and performance deterioration due to delayed merge. |

Default value: off |

|

DELTAROW_THRESHOLD |

A reserved parameter. The parameter is only valid for column-store tables. |

0 to 60000 Default value: 6000 |

|

COLD_TABLESPACE |

Specifies the OBS tablespace for the cold partitions in a hot or cold table. This parameter is available only to column-store partitioned tables and cannot be modified. It must be used together with STORAGE_POLICY. The parameter STORAGE_POLICY can be left unconfigured. In this case, the default value default_obs_tbs is used. |

Valid OBS tablespace name. |

|

STORAGE_POLICY |

Specifies the rule for switching between hot and cold partitions. This parameter is used only for multi-temperature tables. This parameter must be used together with COLD_TABLESPACE. |

Value range: Cold and hot switchover policy name:Cold and hot switchover threshold. Currently, only LMT and HPN policies are supported. LMT indicates that the switchover is performed based on the last update time of partitions. HPN indicates the switchover is performed based on a fixed number of reserved hot partitions.

CAUTION:

|

|

ENABLE_MULTI_TEMP_DISKCACHE |

This parameter improves the performance of tables that separate cold and hot data. It is only applicable to tables that support this feature and is supported in versions 9.1.0 and later. |

on or off Default value: on/true |

|

PERIOD |

Specifies the period of automatically creating partitions and enables the automatic partition creation function. Only row-store and column-store range partitioned tables, time series tables, and cold and hot tables are supported. The partition key must be unique and its type can only be TIMESTAMP[(p)] [WITHOUT TIME ZONE], TIMESTAMP[(p)] [WITH TIME ZONE] or DATE. MAXVALUE partitions are not supported. The value of (nowTime - boundaryTime)/PERIOD must be less than the upper limit of the number of partitions, where nowTime indicates the current time and boundaryTime indicates the earliest partition boundary time. It cannot be used on midrange computers and acceleration clusters. |

1 hour ~ 100 years

CAUTION:

|

|

TTL |

Specifies the partition expiration time in partition management and enables the automatic partition deletion function. This parameter cannot be set separately. You must set PERIOD in advance or at the same time. The value of this parameter must be greater than or equal to that of PERIOD. Time To Live (TTL) specifies the data storage period of the table. The data that exceeds the TTL period will be cleared. To do this, the function in proc_drop_partition (relname regclass, older_than interval) is automatically invoked based on the period. The PERIOD and TTL values are of the Interval type, for example, 1 hour, 1 day, 1 week, 1 month, 1 year, and 1 month 2 day 3 hour. PERIOD indicates that data is partitioned by time period. The partition size may affect the query performance. The proc_add_partition (relname regclass, boundaries_interval interval) function is automatically invoked to create a partition after each period. |

1 hour ~ 100 years |

|

COLVERSION |

Specifies the version of the column-store format. Switching between different storage formats is supported. However, the storage format of a partitioned table cannot be switched. |

Default value: 2.0 The value of COLVERSION can only be set to 2.0 for OBS multi-temperature tables.

CAUTION:

When creating a column-store table, set COLVERSION to 2.0. Compared with the 1.0 storage format, the performance is significantly improved:

|

|

SKIP_FPI_HINT |

Indicates whether to skip the hint bits operation when the full-page writes (FPW) log needs to be written during sequential scanning. |

Default value: false

CAUTION:

If SKIP_FPI_HINT is set to true and the checkpoint operation is performed on a table, no Xlog will be generated when the table is sequentially scanned. This applies to intermediate tables that are queried less frequently, reducing the size of Xlogs and improving query performance. |

PARTITION BY Parameters

- partition_less_than_item is as follows:

1PARTITION partition_name VALUES LESS THAN ( { partition_value | MAXVALUE } )

- partition_start_end_item is as follows:

1 2 3 4 5 6

PARTITION partition_name { {START(partition_value) END (partition_value) EVERY (interval_value)} | {START(partition_value) END ({partition_value | MAXVALUE})} | {START(partition_value)} | {END({partition_value | MAXVALUE})} }

|

Parameter |

Description |

Value Range |

||

|---|---|---|---|---|

|

PARTITION BY RANGE(partition_key) |

The syntax specifying range partitioning. partition_key indicates the name of a partition key. |

|

||

|

PARTITION BY LIST (partition_key,[...]) |

The syntax specifying list partitioning. partition_key indicates the name of a partition key. |

In list partitioning, partition keys support the following data types: TINYINT, SMALLINT, INTEGER, BIGINT, NUMERIC/DECIMAL, TEXT, NVARCHAR2, VARCHAR(n), CHAR, BPCHAR, TIME, TIME WITH TIMEZONE, TIMESTAMP, TIMESTAMP WITH TIME ZONE, DATE, INTERVAL and SMALLDATETIME.

CAUTION:

In list partitioning, a partition key has a maximum of four columns. |

||

|

partition_less_than_item |

Partition definition syntax in range partitioning. partition_name is the name of a range partition. partition_value is the upper limit of range partition, and the value depends on the type of partition_key. MAXVALUE can specify the upper boundary of a range partition, and it is commonly used to specify the upper boundary of the last range partition.

CAUTION:

|

- |

||

|

partition_start_end_item |

The syntax of using the start value and interval value to define a range partition.

|

The following table describes the parameters.

CAUTION:

|

||

|

list_partition_item |

Partition definition syntax in list partitioning. partition_name indicates the partition name. partition_value is an enumerated value of the list partition boundary. The value depends on the type of partition_key. DEFAULT indicates the default partition boundary.

|

The following conventions and constraints apply to list partitioned tables:

|

|

Constraint |

All Index Columns Are NULL |

Some Index Columns Are NULL |

|---|---|---|

|

NULLS DISTINCT |

Can be inserted repeatedly. |

Can be inserted repeatedly. |

|

NULLS NOT DISTINCT |

Cannot be inserted repeatedly. |

Cannot be inserted if the non-null values are equal. Can be inserted if the non-null values are not equal. |

|

NULLS IGNORE |

Can be inserted repeatedly. |

Cannot be inserted if the non-null values are equal. Can be inserted if the non-null values are not equal. |

DISTRIBUTE BY Parameter Description

- When default_distribution_mode is set to roundrobin, the default value of DISTRIBUTE BY is selected according to the following rules:

- If the primary key or unique constraint is included during table creation, hash distribution is selected. The distribution column is the column corresponding to the primary key or unique constraint.

- If the primary key or unique constraint is not included during table creation, round-robin distribution is selected.

- When default_distribution_mode is set to hash, the default value of DISTRIBUTE BY is selected according to the following rules:

- If the primary key or unique constraint is included during table creation, hash distribution is selected. The distribution column is the column corresponding to the primary key or unique constraint.

- If the primary key or unique constraint is not included during table creation but there are columns whose data types can be used as distribution columns, hash distribution is selected. The distribution column is the first column whose data type can be used as a distribution column.

- If the primary key or unique constraint is not included during table creation and no column whose data type can be used as a distribution column exists, round-robin distribution is selected.

- Integer types: TINYINT, SMALLINT, INT, BIGINT, and NUMERIC/DECIMAL

- Character types: CHAR, BPCHAR, VARCHAR, VARCHAR2, NVARCHAR2, and TEXT

- Date/time types: DATE, TIME, TIMETZ, TIMESTAMP, TIMESTAMPTZ, INTERVAL, and SMALLDATETIME

Example: Using the LESS THAN Syntax to Create a Range Partitioned Table

The range partitioned table customer_address has four partitions and their partition keys are of the integer type. The ranges of the partitions are as follows: ca_address_sk < 2450815, 2450815 <= ca_address_sk < 2451179, 2451179 <= ca_address_sk < 2451544, 2451544 <= ca_address_sk.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

CREATE TABLE customer_address ( ca_address_sk INTEGER NOT NULL , ca_address_id CHARACTER(16) NOT NULL , ca_street_number CHARACTER(10) , ca_street_name CHARACTER varying(60) , ca_street_type CHARACTER(15) , ca_suite_number CHARACTER(10) ) DISTRIBUTE BY HASH (ca_address_sk) PARTITION BY RANGE(ca_address_sk) ( PARTITION P1 VALUES LESS THAN(2450815), PARTITION P2 VALUES LESS THAN(2451179), PARTITION P3 VALUES LESS THAN(2451544), PARTITION P4 VALUES LESS THAN(MAXVALUE) ); |

View the information of the partitioned table.

SELECT relname, boundaries FROM pg_partition p where p.parentid='customer_address'::regclass ORDER BY 1;

relname | boundaries

------------------+------------

customer_address |

p1 | {2450815}

p2 | {2451179}

p3 | {2451544}

p4 | {NULL}

(5 rows)

Query the number of rows in the P1 partition.

1 2 |

SELECT count(*) FROM customer_address PARTITION (P1); SELECT count(*) FROM customer_address PARTITION FOR (2450815); |

Examples: Enabling and Disabling ROW MOVEMENT

If ROW MOVEMENT is enabled, the data of a row is moved to a new partition when the partition key of the row is updated to a new value and the new value belongs to another partition.

If a partition key is frequently updated, enabling ROW MOVEMENT may cause extra I/O overhead. To enable it, evaluate service requirements first.

Create a table partitioned by date and enable ROW MOVEMENT.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

DROP TABLE IF EXISTS sale_data; CREATE TABLE sales_data ( sale_id INT, product_name VARCHAR(100), sale_date DATE, amount DECIMAL(10, 2) ) PARTITION BY RANGE (sale_date) ( PARTITION p_2023q1 VALUES LESS THAN ('2023-04-01'), PARTITION p_2023q2 VALUES LESS THAN ('2023-07-01'), PARTITION p_2023q3 VALUES LESS THAN ('2023-10-01'), PARTITION p_2023q4 VALUES LESS THAN ('2024-01-01') ) ENABLE ROW MOVEMENT; |

Insert test data.

1 2 3 4 |

INSERT INTO sales_data (sale_id, product_name, sale_date, amount) VALUES (1, 'Product A', '2023-02-15', 1000.00), -- It belongs to p_2023q1. (2, 'Product B', '2023-05-20', 1500.00); -- It belongs to p_2023q2. |

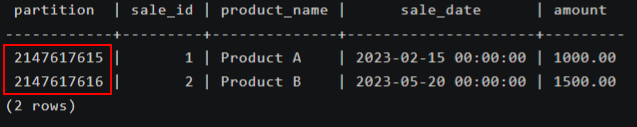

Query the data distribution of each partition.

1

|

SELECT tableoid::regclass AS partition, * FROM sales_data ORDER BY sale_id; |

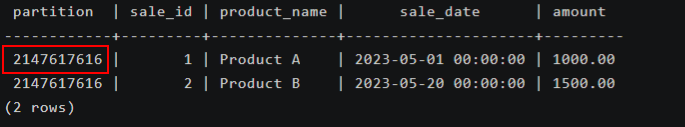

Update the partition key (which is moved from p_2023q1 to p_2023q2).

1

|

UPDATE sales_data SET sale_date = '2023-05-01' WHERE sale_id = 1; |

Query the data distribution of each partition again to confirm that the row has been moved.

1

|

SELECT tableoid::regclass AS partition, * FROM sales_data ORDER BY sale_id; |

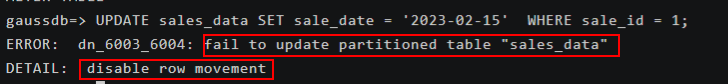

Disable ROW MOVEMENT.

1

|

ALTER TABLE sales_data DISABLE ROW MOVEMENT; |

Modify the partition key again (which is move from p_2023q2 back to p_2023q2).

UPDATE sales_data SET sale_date = '2023-02-15' WHERE sale_id = 1;

View the command output. It indicates that ROW MOVEMENT is disabled and the partition key cannot be updated.

Example: Using the START END Syntax to Create a Column-store Range Partitioned Table

CREATE TABLE customer_address_SE

(

ca_address_sk INTEGER NOT NULL ,

ca_address_id CHARACTER(16) NOT NULL ,

ca_street_number CHARACTER(10) ,

ca_street_name CHARACTER varying(60) ,

ca_street_type CHARACTER(15) ,

ca_suite_number CHARACTER(10)

)

WITH (ORIENTATION = COLUMN)

DISTRIBUTE BY HASH (ca_address_sk)

PARTITION BY RANGE(ca_address_sk)

(

PARTITION p1 START(1) END(1000) EVERY(200),

PARTITION p2 END(2000),

PARTITION p3 START(2000) END(5000)

);

View the information of the partitioned table.

SELECT relname, boundaries FROM pg_partition p where p.parentid='customer_address_SE'::regclass ORDER BY 1;

relname | boundaries

---------------------+------------

customer_address_se |

p1_0 | {1}

p1_1 | {201}

p1_2 | {401}

p1_3 | {601}

p1_4 | {801}

p1_5 | {1000}

p2 | {2000}

p3 | {5000}

(9 rows)

Example: Using the START END Syntax to Create and Modify a Range Partitioned Table

Create a temporary schema.

1 2 |

CREATE SCHEMA tpcds; SET CURRENT_SCHEMA TO tpcds; |

Create a partitioned table with the partition key of the integer type.

1 2 3 4 5 6 7 8 9 10 |

CREATE TABLE tpcds.startend_pt (c1 INT, c2 INT) DISTRIBUTE BY HASH (c1) PARTITION BY RANGE (c2) ( PARTITION p1 START(1) END(1000) EVERY(200), PARTITION p2 END(2000), PARTITION p3 START(2000) END(2500), PARTITION p4 START(2500), PARTITION p5 START(3000) END(5000) EVERY(1000) ) ENABLE ROW MOVEMENT; |

View the information of the partitioned table.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

SELECT relname, boundaries FROM pg_partition p where p.parentid='tpcds.startend_pt'::regclass ORDER BY 1; relname | boundaries -------------+------------ p1_0 | {1} p1_1 | {201} p1_2 | {401} p1_3 | {601} p1_4 | {801} p1_5 | {1000} p2 | {2000} p3 | {2500} p4 | {3000} p5_1 | {4000} p5_2 | {5000} tpcds.startend_pt | (12 rows) |

Import data and check the data volume in the partition.

1 2 3 4 5 6 7 8 9 10 11 12 |

INSERT INTO tpcds.startend_pt VALUES (GENERATE_SERIES(0, 4999), GENERATE_SERIES(0, 4999)); SELECT COUNT(*) FROM tpcds.startend_pt PARTITION FOR (0); count ------- 1 (1 row) SELECT COUNT(*) FROM tpcds.startend_pt PARTITION (p3); count ------- 500 (1 row) |

View the information of the partitioned table.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 |

SELECT relname, boundaries FROM pg_partition p where p.parentid='tpcds.startend_pt'::regclass ORDER BY 1; relname | boundaries -------------+------------ p1_0 | {1} p1_1 | {201} p1_2 | {401} p1_3 | {601} p1_4 | {801} p1_5 | {1000} p2 | {2000} p3 | {2500} p4 | {3000} p5_1 | {4000} p6_1 | {5300} p6_2 | {5600} p6_3 | {5900} p71 | {6000} q1_1 | {4250} q1_2 | {4500} q1_3 | {4750} q1_4 | {5000} tpcds.startend_pt | (19 rows) |

Example: Creating a List Partitioned Table with Partition Keys

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

CREATE TABLE data_list ( id int, time int, salary decimal(12,2) ) PARTITION BY LIST (time) ( PARTITION P1 VALUES (202209), PARTITION P2 VALUES (202210,202208), PARTITION P3 VALUES (202211), PARTITION P4 VALUES (202212), PARTITION P5 VALUES (202301) ); |

Example: Creating a List Partitioned Table with Multiple Partition Keys

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

CREATE TABLE sales_info ( sale_time timestamptz, period int, city text, price numeric(10,2), remark varchar2(100) ) DISTRIBUTE BY HASH(sale_time) PARTITION BY LIST (period, city) ( PARTITION north_2022 VALUES (('202201', 'north1'), ('202202', 'north2')), PARTITION south_2022 VALUES (('202201', 'south1'), ('202202', 'south2'), ('202203', 'south2')), PARTITION rest VALUES (DEFAULT) ); |

Example: Creating a Partitioned Table with Automatic Partition Management and No Specified Partitions

Set PERIOD to 1 day and partition key to time.

1 2 3 4 5 6 |

CREATE TABLE time_part ( id integer, time timestamp ) with (PERIOD='1 day') partition by range(time); |

Two default partitions are created during table creation. The boundary time of the first default partition is the start of the day later than the current time, that is, 2022-12-13 00:00:00. The boundary time of the second default partition is the boundary time of the first partition plus PERIOD, that is, 2022-12-13 00:00:00+1 day=2022-12-14 00:00:00.

1 2 3 4 5 6 7 8 9 10 11 12 13 |

SELECT now(); now ------------------------------- 2022-12-12 20:41:21.603172+08 (1 row) SELECT relname, boundaries FROM pg_partition p where p.parentid='time_part'::regclass ORDER BY 1; relname | boundaries ----------------+------------------------- default_part_1 | {"2022-12-13 00:00:00"} default_part_2 | {"2022-12-14 00:00:00"} time_part | (3 rows) |

Example: Creating a Partitioned Table with Automatic Partition Management and Specified Partitions

1 2 3 4 5 6 7 8 9 10 11 12 13 |

CREATE TABLE CPU( id integer, idle numeric, IO numeric, scope text, IP text, time timestamp ) with (TTL='7 days',PERIOD='1 day') partition by range(time) ( PARTITION P1 VALUES LESS THAN('2022-01-05 16:32:45'), PARTITION P2 VALUES LESS THAN('2022-01-06 16:56:12') ); |

Example: Creating a Partitioned Table by Month

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 |

CREATE TABLE customer_address ( ca_address_sk integer NOT NULL, ca_address_date date NOT NULL ) DISTRIBUTE BY HASH (ca_address_sk) PARTITION BY RANGE (ca_address_date) ( PARTITION p202001 VALUES LESS THAN('20200101'), PARTITION p202002 VALUES LESS THAN('20200201'), PARTITION p202003 VALUES LESS THAN('20200301'), PARTITION p202004 VALUES LESS THAN('20200401'), PARTITION p202005 VALUES LESS THAN('20200501'), PARTITION p202006 VALUES LESS THAN('20200601'), PARTITION p202007 VALUES LESS THAN('20200701'), PARTITION p202008 VALUES LESS THAN('20200801'), PARTITION p202009 VALUES LESS THAN('20200901'), PARTITION p202010 VALUES LESS THAN('20201001'), PARTITION p202011 VALUES LESS THAN('20201101'), PARTITION p202012 VALUES LESS THAN('20201201'), PARTITION p202013 VALUES LESS THAN(MAXVALUE) ); |

Insert data.

1 2 3 4 |

INSERT INTO customer_address values('1','20200215'); INSERT INTO customer_address values('7','20200805'); INSERT INTO customer_address values('9','20201111'); INSERT INTO customer_address values('4','20201231'); |

Query a partition.

1 2 3 4 5 |

SELECT * FROM customer_address PARTITION(p202009); ca_address_sk | ca_address_date ---------------+--------------------- 7 | 2020-08-05 00:00:00 (1 row) |

Example: Using the START END Syntax to Create a Partitioned Table with Multiple Partitions at a Time

- Create a partitioned table day_part. Each day is a partition, and the partition key is a date.

1 2 3 4 5

CREATE table day_part(id int,d_time date) DISTRIBUTE BY HASH (id) PARTITION BY RANGE (d_time) (PARTITION p1 START('2022-01-01') END('2022-01-31') EVERY(interval '1 day')); ALTER TABLE day_part ADD PARTITION pmax VALUES LESS THAN (maxvalue);

- Create a partitioned table week_part, seven days as a partition, and the partition key is a date.

1 2 3 4 5

CREATE table week_part(id int,w_time date) DISTRIBUTE BY HASH (id) PARTITION BY RANGE (w_time) (PARTITION p1 START('2021-01-01') END('2022-01-01') EVERY(interval '7 day')); ALTER TABLE week_part ADD PARTITION pmax VALUES LESS THAN (maxvalue);

- Create the partitioned table month_part, each month as a partition, and the partition key is a date.

1 2 3 4 5

CREATE table month_part(id int,m_time date) DISTRIBUTE BY HASH (id) PARTITION BY RANGE (m_time) (PARTITION p1 START('2021-01-01') END('2022-01-01') EVERY(interval '1 month')); ALTER TABLE month_part ADD PARTITION pmax VALUES LESS THAN (maxvalue);

Example: Creating a Hot or Cold Table

Only a column-store partitioned table is supported. Use the default OBS tablespace. Set LMT to 30 for cold and hot switchover rules.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 |

CREATE TABLE cold_hot_table ( W_WAREHOUSE_ID CHAR(16) NOT NULL, W_WAREHOUSE_NAME VARCHAR(20) , W_STREET_NUMBER CHAR(10) , W_STREET_NAME VARCHAR(60) , W_STREET_ID CHAR(15) , W_SUITE_NUMBER CHAR(10) ) WITH (ORIENTATION = COLUMN, storage_policy = 'LMT:30') DISTRIBUTE BY HASH (W_WAREHOUSE_ID) PARTITION BY RANGE(W_STREET_ID) ( PARTITION P1 VALUES LESS THAN(100000), PARTITION P2 VALUES LESS THAN(200000), PARTITION P3 VALUES LESS THAN(300000), PARTITION P4 VALUES LESS THAN(MAXVALUE) )ENABLE ROW MOVEMENT; |

Helpful Links

FAQs

- Practice: Creating and Managing DWS Partitioned Tables

- Practice: Case: Reconstructing Partitioned Tables

- "inserted partition key does not map to any table partition" Is Reported When Data Is Inserted into a Partitioned Table

- Error upper boundary of adding partition MUST overtop last existing partition Is Reported When a New Partition Is Added to a Range Partitioned Table

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.See the reply and handling status in My Cloud VOC.

For any further questions, feel free to contact us through the chatbot.

Chatbot