Preparing Environment

Before connecting open-source Spark to LakeFormation, complete the following operations:

- Prepare a working open-source Spark environment and Hive environment, and install Git.

Currently, only Spark 3.1.1 and Spark 3.3.1 are supported. The Hive kernel version is 2.3.

- Prepare a LakeFormation instance. For details, see Creating an Instance.

- Create a LakeFormation access client in the same VPC and subnet as Spark. For details, see Managing Clients.

- Prepare the development environment. For details, see "Preparing the Environment" in Preparing the Development Environment. Installing and configuring IntelliJ IDEA is optional.

- Download the LakeFormation client.

- Method 1: downloading the release version

Download link: https://gitee.com/HuaweiCloudDeveloper/huaweicloud-lake-formation-lakecat-sdk-java/releases

Download the corresponding client based on the Spark and Hive versions. For example, if the versions of Spark and Hive are 3.1.1 and 2.3.7, download lakeformation-lakecat-client-hive2.3-v1.0.0.rar.

Verify the compressed package. After downloading it, run the certutil -hashfile <compressed package> sha256 command in Windows and check that the output matches the content of the corresponding sha256 file.
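On Linux or WSL, the same check can be scripted. The following is a minimal sketch, not part of the LakeFormation tooling: it assumes the sha256sum tool from GNU coreutils is installed and that the first whitespace-separated field of the published sha256 file is the hex digest. The function name verify_sha256 is hypothetical.

```shell
# Compare a file's SHA-256 digest with the digest stored in a checksum file.
# Assumption: the first field on the first line of the checksum file is the
# hex digest (upper or lower case, possibly with Windows line endings).
verify_sha256() {
    package="$1"        # path to the downloaded compressed package
    checksum_file="$2"  # path to the corresponding sha256 file
    # Normalise both digests to lowercase before comparing.
    actual=$(sha256sum "$package" | awk '{print tolower($1)}')
    expected=$(tr -d '\r' < "$checksum_file" | awk 'NR==1 {print tolower($1)}')
    if [ -n "$expected" ] && [ "$actual" = "$expected" ]; then
        echo "OK"
    else
        echo "MISMATCH"
        return 1
    fi
}
```

Call it as verify_sha256 <compressed package> <sha256 file>. A non-zero exit status means the package should be downloaded again.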

- Method 2: compiling the client locally

- Obtain the client code.

Download link: https://gitee.com/HuaweiCloudDeveloper/huaweicloud-lake-formation-lakecat-sdk-java

- Run the following command in Git to switch the branch to master_dev:

git checkout master_dev

- Configure the Maven source. For details, see Obtaining the SDK and Configuring Maven.

- Obtain the following JAR package and the corresponding POM file, and save both files to the local Maven repository.

For example, if the local repository directory is D:\maven\repository, save the files in the D:\maven\repository\com\huaweicloud\hadoop-huaweicloud\3.1.1-hw-53.8 path.

- JAR package: https://github.com/huaweicloud/obsa-hdfs/blob/master/release/hadoop-huaweicloud-3.1.1-hw-53.8.jar

- POM file: https://github.com/huaweicloud/obsa-hdfs/blob/master/hadoop-huaweicloud/pom.xml (rename the downloaded file to hadoop-huaweicloud-3.1.1-hw-53.8.pom)
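The copy-and-rename step can be sketched as a small shell function for Linux or WSL. This is an illustration under stated assumptions: the function name install_to_local_repo is hypothetical, and the repository root is passed in by the caller (Maven's default is ~/.m2/repository unless configured otherwise). The directory layout follows the example path given above.

```shell
# Copy a downloaded JAR and POM into a local Maven repository, using the
# layout <repo>/com/huaweicloud/<artifactId>/<version>/ from the example path.
install_to_local_repo() {
    repo="$1"   # local repository root (caller-supplied)
    jar="$2"    # downloaded hadoop-huaweicloud-3.1.1-hw-53.8.jar
    pom="$3"    # downloaded pom.xml
    artifact="hadoop-huaweicloud"
    version="3.1.1-hw-53.8"
    target="$repo/com/huaweicloud/$artifact/$version"
    mkdir -p "$target"
    cp "$jar" "$target/$artifact-$version.jar"
    # The POM is stored under the name <artifactId>-<version>.pom.
    cp "$pom" "$target/$artifact-$version.pom"
    # Print the directory the files were written to.
    echo "$target"
}
```

The function prints the target directory so that the result can be checked before running the Maven build.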

- Package and upload the client code.

- Go to the client project directory and run the following command to package the client code:

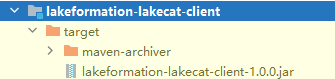

- After the packaging is complete, obtain the lakeformation-lakecat-client-1.0.0.jar package from the target directory of lakeformation-lakecat-client.

- Prepare and replace JAR packages required by the Hive kernel.

If only SparkCatalogPlugin is used for interconnection (MetastoreClient is not used), skip this step.

- Method 1: downloading the prebuilt Hive JAR packages

Download link: https://gitee.com/HuaweiCloudDeveloper/huaweicloud-lake-formation-lakecat-sdk-java/releases

Download the corresponding JAR packages based on the Spark and Hive versions. For example, if the versions of Spark and Hive are 3.1.1 and 2.3.7, download hive-exec-2.3.7-core.jar and hive-common-2.3.7.jar.

- Method 2: modifying Hive-related JAR packages locally

If the interconnected environment is Spark 3.1.1, use Hive 2.3.7. If the interconnected environment is Spark 3.3.1, use Hive 2.3.9.
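The version pairing can be captured in a small helper so that build scripts fail early on an unsupported combination. This is a hedged sketch: both function names are hypothetical, and the rel/release-<version> reference pattern is an assumption inferred from the Hive 2.3.9 download link on this page.

```shell
# Map a supported Spark version to the Hive kernel version it pairs with.
hive_version_for_spark() {
    case "$1" in
        3.1.1) echo "2.3.7" ;;
        3.3.1) echo "2.3.9" ;;
        *) echo "unsupported Spark version: $1" >&2; return 1 ;;
    esac
}

# Derive the Hive source reference for a Spark version.
# Assumption: references follow the rel/release-<version> pattern.
hive_source_ref() {
    v=$(hive_version_for_spark "$1") || return 1
    echo "rel/release-$v"
}
```

Any Spark version other than 3.1.1 or 3.3.1 produces an error message on standard error and a non-zero exit status.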

On Windows, perform Maven operations in the WSL development environment.

- Download the Hive source code based on the Hive version.

For example, if the Hive kernel version is 2.3.9, the download link is https://github.com/apache/hive/tree/rel/release-2.3.9.

- Apply the patch in the LakeFormation client code to the Hive source code.

- Switch the Hive source code branch as required. For example, if the Hive kernel version is 2.3.9, run the following command:

- Run the following command to apply the patch file to the Hive source code project after the branch is switched:

mvn patch:apply -DpatchFile=${your patch file location}

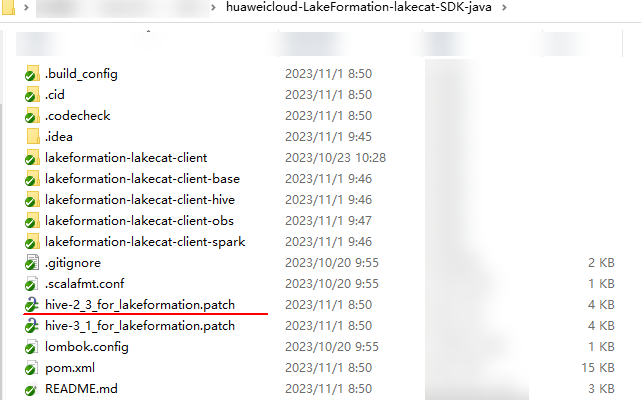

In the command, your patch file location indicates the storage path of the hive-2_3_for_lakeformation.patch file. The patch file can be obtained from the client project, as shown in the following figure.

- Run the following command to recompile the Hive kernel source code:

- Add the JAR packages required by the Spark environment.

Obtain the JAR packages listed in the following table and supplement or replace them in the jars directory in Spark.
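Supplementing or replacing JAR packages in the Spark jars directory can be sketched as follows. This is an illustration only: the function name replace_spark_jars and the jars.bak backup directory are hypothetical, and the function only replaces files that have the same name as the new JAR. The JAR packages to pass in are those listed for this step.

```shell
# Copy JAR files into <spark_home>/jars, backing up any same-named file
# to <spark_home>/jars.bak before it is overwritten.
replace_spark_jars() {
    spark_home="$1"; shift
    jars_dir="$spark_home/jars"
    backup_dir="$spark_home/jars.bak"
    if [ ! -d "$jars_dir" ]; then
        echo "jars directory not found: $jars_dir" >&2
        return 1
    fi
    mkdir -p "$backup_dir"
    for jar in "$@"; do
        name=$(basename "$jar")
        # Keep a copy of the original so the change can be rolled back.
        if [ -f "$jars_dir/$name" ]; then
            cp "$jars_dir/$name" "$backup_dir/$name"
        fi
        cp "$jar" "$jars_dir/$name"
    done
}
```

Pass the Spark installation directory as the first argument, followed by the paths of the JAR packages to add. If an existing JAR has a different file name (for example, a different version suffix), remove it manually so that two versions do not end up on the classpath.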
