Creating a Notebook Instance (Default Page)

Before developing a model, create a notebook instance and access it for coding.

Constraints

- When a notebook instance is created, auto stop is enabled by default. The notebook instance will automatically stop at the specified time.

- Only running notebook instances can be accessed or stopped.

- A maximum of 10 notebook instances can be created under one account.

- For single-PU instances in Snt9B23 or D310P-300 resource pools, notebook instances cannot be created with EVS disks (that is, with Storage set to EVS).

Billing

- A running notebook instance will be billed based on used resources. The fees vary depending on your selected resources. For details, see Pricing Details. When a notebook instance is not used, stop it.

- If you select EVS for storage when creating a notebook instance, the EVS disk will be continuously billed if the instance is not deleted. Stop and delete the notebook instance if it is not required. For details, see Development Environment.
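The two billing rules above can be combined into a rough estimate. The following is a minimal sketch, not the actual ModelArts billing formula: the function name `estimate_cost` and both rate parameters are hypothetical, and real prices must be taken from Pricing Details. It assumes that compute resources are charged only while the instance is running, and that the EVS disk is charged by GB for as long as the instance exists.

```python
def estimate_cost(running_hours, existing_hours, evs_size_gb,
                  compute_rate_per_hour, evs_rate_per_gb_hour):
    """Rough cost estimate for one notebook instance (illustrative only).

    running_hours:  hours the instance spent in the Running state
    existing_hours: hours between creation and deletion
    Both rates are hypothetical placeholders, not real prices.
    """
    if existing_hours < running_hours:
        raise ValueError("an instance cannot run longer than it exists")
    compute_cost = running_hours * compute_rate_per_hour
    # The EVS disk keeps being billed while the instance is stopped.
    evs_cost = existing_hours * evs_size_gb * evs_rate_per_gb_hour
    return compute_cost + evs_cost


# Made-up rates: 10 running hours over 100 hours of existence, 4 GB disk.
print(estimate_cost(10, 100, evs_size_gb=4,
                    compute_rate_per_hour=2.0, evs_rate_per_gb_hour=0.25))
```

The sketch makes the point of the second rule visible: stopping an instance removes only the compute term, while the EVS term keeps growing until the instance is deleted.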

Procedure

- Log in to the ModelArts console. In the navigation pane on the left, choose Permission Management and check whether the access authorization has been configured. If not, configure access authorization. For details, see Configuring Agency Authorization for ModelArts with One Click.

Figure 1 Viewing agency configurations

- Log in to the ModelArts console. In the navigation pane on the left, choose Development Workspace > Notebook.

- Click Create Notebook in the upper right corner. On the displayed page, configure the parameters.

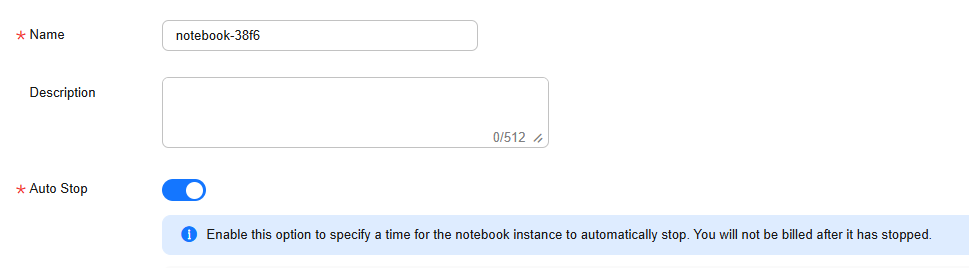

- Configure the basic information of the notebook instance, including its name, description, and auto stop status. For details, see Table 1.

Figure 2 Basic information of a notebook instance

Table 1 Basic parameters

| Parameter | Description |
|---|---|
| Name | Name of the notebook instance, which is automatically generated by the system. You can rename it based on service requirements. A name consists of a maximum of 128 characters and cannot be empty. It can contain only digits, letters, underscores (_), and hyphens (-). |
| Description | Brief description of the notebook instance. |
| Auto Stop | Automatically stops the notebook instance at a specified time. This function is enabled by default. The default value is 1 hour, indicating that the notebook instance automatically stops after running for 1 hour, at which point its resource billing also stops. The options are 1 hour, 2 hours, 4 hours, 6 hours, and Custom. Select Custom to specify any integer from 1 to 72 hours. Stop as scheduled: If this option is enabled, the notebook instance automatically stops when the running duration exceeds the specified duration. NOTE: To protect in-progress jobs, a notebook instance does not stop immediately at the auto stop time. Instead, there is a delay of 2 to 5 minutes during which you can renew the auto stop time. |
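The rules in Table 1 can be checked before you fill in the form. The following is a minimal sketch under stated assumptions: the helper names are hypothetical, "letters" is taken to mean ASCII letters, and the stop window simply adds the 2 to 5 minute delay from the note to the scheduled stop time. The console remains the authority on what is accepted.

```python
import re
from datetime import datetime, timedelta

# Digits, letters, underscores, and hyphens only; 1 to 128 characters.
# Assumption: "letters" means ASCII letters.
NAME_PATTERN = re.compile(r"[A-Za-z0-9_-]{1,128}")


def is_valid_name(name):
    """Return True if the name satisfies the rules stated in Table 1."""
    return NAME_PATTERN.fullmatch(name) is not None


def is_valid_auto_stop_hours(hours):
    """Custom auto stop accepts any integer from 1 to 72 hours."""
    return (isinstance(hours, int) and not isinstance(hours, bool)
            and 1 <= hours <= 72)


def expected_stop_window(started_at, hours):
    """Return (earliest, latest) stop time, including the 2 to 5 minute delay."""
    scheduled = started_at + timedelta(hours=hours)
    return scheduled + timedelta(minutes=2), scheduled + timedelta(minutes=5)


print(is_valid_name("notebook-01_dev"))
print(is_valid_auto_stop_hours(72))
print(expected_stop_window(datetime(2024, 1, 1, 9, 0), 1))
```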

- Configure notebook parameters, such as the image and instance flavor. For details, see Table 2.

Table 2 Notebook instance parameters (each parameter is followed by its description)

Image

Public and private images are supported.

- Public images are the AI engines built in ModelArts.

- You can use a custom image created by yourself. Use either of the following ways to create a custom image:

- Save the instance created using the public image and use it as a custom image. For details, see Saving a Notebook Instance.

- Create a custom image using a preset or third-party image. Custom images must meet the image specifications. After the image is created, register it on the image management page of ModelArts before using it in a notebook instance. For details, see Creating a Custom Image.

An image corresponds to an AI engine. When you select an image during instance creation, the AI engine is specified accordingly. Select an image as required. Enter a keyword of the image name in the search box on the right to quickly search for the image.

You can change an image on a stopped notebook instance.

JupyterLab

Two JupyterLab versions are supported. The default version is 3.2.3. User experience and stability differ between the two versions.

- 3.2.3: A stable version that has been widely verified and is suitable for long-term use. Select this version if you need a stable working environment.

- 4.3.1: The latest version that includes multiple new functions and improvements, but may be unstable. Select this version to experience the latest functions. For details, see JupyterLab 4.3.1.

NOTE: JupyterLab 3.2.3 will be gradually taken offline. Select a version as required.

Resource Type

Public and dedicated resource pools are available. For dedicated resource pools, you can select heterogeneous resource pools of CPUs, NPUs, and GPUs. For example, if the node flavor supports CPUs and GPUs, you can select CPUs or GPUs as the instance specifications.

Public resource pools are billed based on the running duration of your notebook instances.

Select a created dedicated resource pool based on site requirements. If no dedicated resources are available, purchase one.

NOTE: This applies if the dedicated resource pool you purchased is a single-node Tnt004 pool with the specification GPU: 1*tnt004 | CPU: 8 vCPUs and 32 GiB (modelarts.vm.gpu._tnt004u8). If, when you use this pool to create a notebook instance, the Tnt004 card is idle but is displayed as sold out, or the creation fails due to insufficient resources, contact technical support.

Type

Processor type, which can be CPU or GPU.

The chips vary depending on the selected image.

GPUs deliver better performance than CPUs but at a higher cost. Select a chip type as needed.

Instance Specifications

The available resource specifications vary among chip types. Select the specifications based on your needs.

- CPU

2vCPUs 8GB: General-purpose Intel CPU flavor, ideal for rapid data exploration and experiments

8vCPUs 32GB: General computing-plus Intel CPU flavor, ideal for compute-intensive applications

- GPU

GPU: 1*Vnt1(32GB)|CPU: 8vCPUs 64GB: Single GPU with 32 GB of memory, ideal for algorithm training and debugging in deep learning scenarios

GPU: 1*Tnt004(16GB)|CPU: 8vCPUs 32GB: Single GPU with 16 GB of memory, ideal for inference computing such as computer vision, video processing, and NLP tasks

GPU: 1*Pnt1(16GB)|CPU: 8vCPUs 64GB: Single GPU with 16 GB of memory, ideal for algorithm training and debugging in deep learning scenarios

Storage

The value can be EVS, SFS, OBS, or PFS. Configure this parameter based on your needs.

NOTE:OBS and PFS are whitelist functions. If you have trial requirements, submit a service ticket to apply for permissions.

- EVS

Set a disk size based on service requirements. The default value is 5 GB. The maximum disk size is displayed on the GUI.

The EVS disk space is charged by GB from the time the notebook instance is created to the time the notebook instance is deleted.

- SFS

Select this type only for a dedicated resource pool. SFS takes effect only after a dedicated resource pool can communicate with your VPC. For details, see Creating a Network.

NOTE:For details about how to set permissions to access SFS Turbo folders, see Permissions Management.

- SFS: Select the created SFS Turbo file system (created on the SFS console).

- Cloud Mount Path: Retain the default value /home/ma-user/work/.

- Mounted Subdirectory: Select the storage path on SFS Turbo.

- Mount Method: This parameter is displayed when the folder control permission is granted for the user. The read/write or read-only permission is displayed based on the storage path on SFS Turbo.

- The value can be OBS or PFS.

Storage Path: Set the OBS path for storing notebook data. If you want to use existing files or data, upload them to the specified OBS path. Storage Path must be set to a specific directory in an OBS bucket rather than the root directory of the OBS bucket.

Secret: Select an existing secret or click Create on the right to create one. On the displayed DEW console, create a secret. Enter accessKeyId and secretAccessKey under Key, and enter the AKs/SKs obtained from My Credentials > Access Keys under Value.

Figure 3 Configuring the secret values

EVS and SFS are both mounted to the /home/ma-user/work directory.

You can add a data storage path during the runtime of a notebook instance by referring to Dynamically Mounting an OBS Parallel File System.

The data is retained in /home/ma-user/work, even if the notebook instance is stopped or restarted.

When a notebook instance is deleted, the EVS storage is released and the stored data is not retained. SFS can be mounted to a new notebook instance and data can be retained.

Extended Storage

NOTE:This parameter is a whitelist function. If you have trial requirements, submit a service ticket to apply for permissions.

If you need multiple data storage paths, click Add Extended Storage to add more storage mount directories. You can add an OBS, PFS, or SFS directory.

Constraints:

- For each type, a maximum of five directories can be mounted.

- The directories must be unique and cannot be mounted to a blacklisted directory. Nested mounting is allowed. Blacklisted directories are those with the following prefixes:

/data/, /cache/, /dev/, /etc/, /bin/, /lib/, /sbin/, /modelarts/, /train-worker1-log/, /var/, /resource_info/, /usr/, /sys/, /run/, /tmp/, /infer/, and /opt/

After this parameter is configured, the notebook instance details page is displayed. Choose Storage > Extended Storage to view or edit the extended storage information. If the number of storage devices has not reached the maximum, you can click Add Extended Storage.

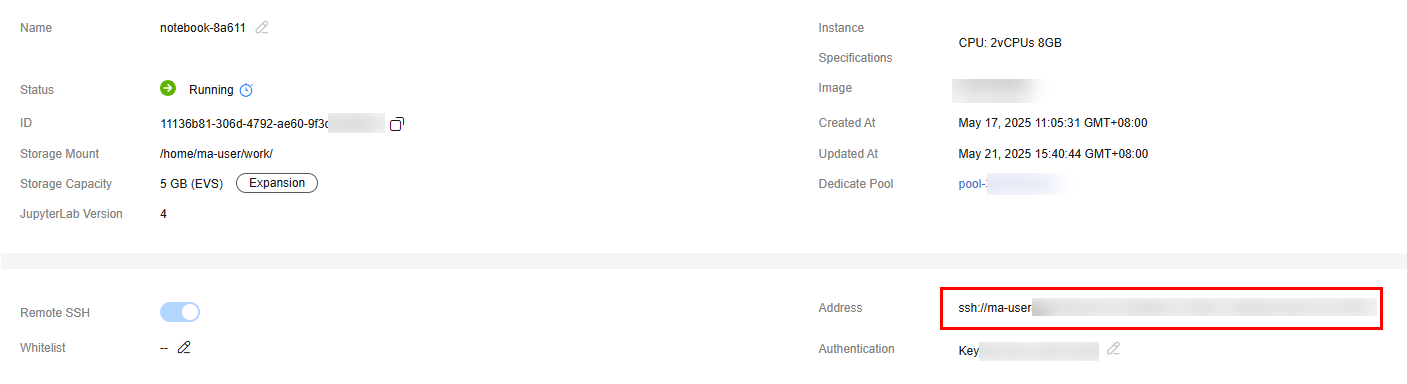
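The extended storage constraints listed above (at most five directories per type, unique directories, no blacklisted prefixes, nested mounting allowed) can be sketched as a pre-check. This is an illustration only: the function name `check_extended_storage` and the input shape are hypothetical, and treating a path such as /data (without a trailing slash) as blacklisted is an assumption, not documented behavior.

```python
import posixpath

# Prefixes copied from the constraint list above.
BLACKLISTED_PREFIXES = (
    "/data/", "/cache/", "/dev/", "/etc/", "/bin/", "/lib/", "/sbin/",
    "/modelarts/", "/train-worker1-log/", "/var/", "/resource_info/",
    "/usr/", "/sys/", "/run/", "/tmp/", "/infer/", "/opt/",
)
MAX_DIRS_PER_TYPE = 5


def check_extended_storage(mounts):
    """Validate a list of (storage_type, mount_dir) pairs.

    Returns a list of problems; an empty list means the mounts satisfy
    the constraints listed above.
    """
    problems = []
    seen = set()
    per_type = {}
    for storage_type, mount_dir in mounts:
        # Normalize and append a slash so that "/data" matches "/data/"
        # (assumption: the bare directory is blacklisted too).
        normalized = posixpath.normpath(mount_dir) + "/"
        if normalized.startswith(BLACKLISTED_PREFIXES):
            problems.append(f"{mount_dir}: blacklisted directory")
        if normalized in seen:
            problems.append(f"{mount_dir}: duplicate directory")
        seen.add(normalized)
        per_type[storage_type] = per_type.get(storage_type, 0) + 1
    for storage_type, count in per_type.items():
        if count > MAX_DIRS_PER_TYPE:
            problems.append(
                f"{storage_type}: more than {MAX_DIRS_PER_TYPE} directories")
    return problems


# Nested mounting is allowed, so this prints an empty list.
print(check_extended_storage([
    ("OBS", "/home/ma-user/obs1"),
    ("SFS", "/home/ma-user/obs1/nested"),
]))
```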

Remote SSH

- After you enable this function, you can remotely access the development environment of the notebook instance from your local development environment.

- When a notebook instance is stopped, you can update the SSH configuration on the instance details page.

NOTE:The notebook instances with remote SSH enabled have VS Code plug-ins (such as Python and Jupyter) and the VS Code server package pre-installed, which occupy about 1 GB persistent storage space.

Key Pair

Set a key pair after remote SSH is enabled.

Select an existing key pair.

Alternatively, click Create on the right of the text box to create one on the DEW console. On the displayed Create Account Key Pair page, configure the parameters.

After a notebook instance is created, you can change the key pair on the instance details page.

CAUTION:Download the created key pair and properly keep it. When you use a local IDE to remotely access the notebook development environment, the key pair is required for authentication.

Whitelist

Set a whitelist after remote SSH is enabled. This parameter is optional.

Add the IP addresses for remotely accessing the notebook instance to the whitelist, for example, the IP address of your local PC or the public IP address of the source device. A maximum of five IP addresses can be added and separated by commas (,). If the parameter is left blank, all IP addresses will be allowed for remote SSH access.

If your source device and ModelArts are on networks isolated from each other, obtain the public IP address of your source device from an online lookup service, for example, by searching for "IP address lookup" in a mainstream search engine. Do not use the address returned by running ipconfig, ifconfig, or ip locally.

After a notebook instance is created, you can change the whitelist IP addresses on the instance details page.
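The whitelist format described above (up to five IP addresses separated by commas, blank meaning that all addresses are allowed) can be validated locally before you paste the value into the console. This is a minimal sketch using the standard `ipaddress` module; the function name `parse_whitelist` is hypothetical, and whether the console accepts IPv6 addresses or CIDR ranges is not stated in this document, so the sketch accepts single addresses only.

```python
import ipaddress

MAX_WHITELIST_ENTRIES = 5


def parse_whitelist(value):
    """Parse a comma-separated whitelist string into IP address objects.

    A blank string returns an empty list, which corresponds to allowing
    all IP addresses. Raises ValueError on invalid input.
    """
    if not value.strip():
        return []
    entries = [item.strip() for item in value.split(",")]
    if len(entries) > MAX_WHITELIST_ENTRIES:
        raise ValueError(
            f"at most {MAX_WHITELIST_ENTRIES} IP addresses are allowed")
    # ipaddress.ip_address raises ValueError for malformed addresses.
    return [ipaddress.ip_address(entry) for entry in entries]


# Addresses from the documentation-only ranges, used purely as examples.
print(parse_whitelist("203.0.113.7, 198.51.100.20"))
```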

- (Optional) Add tags to the notebook instance. Enter a tag key and value and click Add.

Table 3 Adding a tag

| Parameter | Description |
|---|---|
| Tags | ModelArts can work with Tag Management Service (TMS). When creating resource-consuming tasks in ModelArts, for example, training jobs, configure tags for these tasks so that ModelArts can use tags to manage resources by group. For details about how to use tags, see How Does ModelArts Use Tags to Manage Resources by Group? After adding a tag, you can view, modify, or delete the tag on the notebook instance details page. You can select a predefined TMS tag from the tag drop-down list or customize a tag. Predefined tags are available to all service resources that support tags. Customized tags are available only to the service resources of the user who has created the tags. |

- Click Next.

- After confirming the parameter settings, click Submit.

Switch to the notebook instance list. The notebook instance is being created. It takes several minutes for its status to change to Running, which indicates that the instance has been created.

- In the notebook instance list, click the instance name. On the instance details page that is displayed, view the instance configuration.

If Remote SSH is enabled, you can click the modification icon on the right of the whitelist to modify it. You can click the modification icon on the right of Authentication to update the key pair of a stopped notebook instance.

In the Storage tab, click Mount Storage to mount an OBS parallel file system to the instance for reading data. For details, see Dynamically Mounting an OBS Parallel File System.

If an EVS disk is used, click Expansion on the right of Storage Capacity to dynamically expand the EVS disk capacity. For details, see Dynamically Expanding EVS Disk Capacity.

Accessing a Notebook Instance

Access a notebook instance in the Running state for coding.

- Online access: Use JupyterLab. For details, see Using a Notebook Instance for AI Development Through JupyterLab.

- Remote access from a local IDE through PyCharm: For details, see Using Notebook Instances Remotely Through PyCharm.

- Remote access from a local IDE through VS Code: For details, see Using Notebook Instances Remotely Through VS Code.

- Remote access from a local IDE through SSH: For details, see Using a Notebook Instance Remotely with SSH.

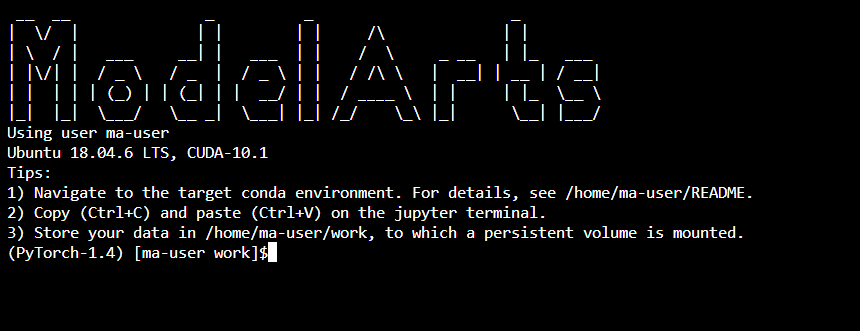

ModelArts notebook instances are started by ma-user by default. After you access the instance, the default working directory is /home/ma-user/work.

Most notebook instances in a dedicated resource pool are started as user root. The details are as follows:

- When you log in to the terminal as user root, the system automatically runs the source /home/ma-user/.bashrc command to synchronize the environment variables of user ma-user. To disable this behavior, set the environment variable DISABLE_MA_USER_BASHRC to true in the custom image (for example, export DISABLE_MA_USER_BASHRC=true) so that the /home/ma-user/.bashrc file is not loaded.

- If the instance is started by user root, only user root can be used for SSH remote connection.

Figure 5 Using user root for SSH remote connection
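To confirm from inside an instance whether the opt-out variable described above is set, a short check such as the following can be run in a notebook cell or terminal. This is a sketch only: the helper name `bashrc_sync_disabled` is hypothetical, and comparing the value case-insensitively is an assumption about how the platform interprets it.

```python
import os


def bashrc_sync_disabled(environ=os.environ):
    """Return True if DISABLE_MA_USER_BASHRC is set to "true".

    Assumption: the value is compared case-insensitively.
    """
    value = environ.get("DISABLE_MA_USER_BASHRC", "")
    return value.strip().lower() == "true"


print("ma-user .bashrc loading disabled:", bashrc_sync_disabled())
```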

JupyterLab 4.3.1

This version has significantly improved user experience, functions, and performance. The following table lists the main updates. For further information, see JupyterLab Documentation.

| Function Module | Description |
|---|---|
| Workspaces | You can create multiple workspaces to organize and manage different projects and files, improving project management efficiency. |
| Launcher creation toolbar | Added a toolbar for functional access and convenient operations. |
| Theme framework optimization | Optimized the theme framework to enhance the theme flexibility and compatibility. You can customize the GUI as required. |
| Configuration page optimization | Optimized user experience on the configuration page for easier use. |
| Performance optimization and debugging enhancement | Fully optimized the system performance and enhanced the debugging function to improve the development experience. |
| Code editor enhancement | Improved the code editor by adding more functions and optimizing performance to improve the code writing and editing experience. |
| Search function enhancement | Enhanced the search function for quicker and more accurate search. |
| Performance enhancement | Improved the overall performance, system response speed, and system stability. |
| Custom CSS style sheets | Custom CSS style sheets can be used to adjust the GUI appearance to meet personalized requirements. |
| Markdown charts | Charts can be used in Markdown to facilitate document writing. |
| Virtual scroll bar | Introduced the virtual scroll bar to improve the scrolling experience of large files or a large amount of content. |
| Workspace UI | Improved the workspace UI to provide better visual effect and operation experience and enhance UI friendliness. |
| File access records | You can view recently opened and closed files to quickly access common files and improve work efficiency. |
| Keyboard shortcuts improvement | Keyboard shortcuts are improved for higher operation efficiency and convenience. |

Mounting Directories of Notebook Containers

If you select EVS storage when creating a notebook instance, the /home/ma-user/work directory is used as the workspace for persistent storage.

Only data stored in the work directory is retained after the instance is stopped or restarted. When you use a development environment, store data that must persist in /home/ma-user/work.

For details about directory mounting of a notebook instance, see Table 5. The following mount points are not saved when images are saved.

| Mount Point | Read Only | Remarks |
|---|---|---|
| /home/ma-user/work/ | No | Persistent directory of your data |
| /data | No | Mount directory of your PFS |
| /cache | No | Used to mount the hard disk of the host NVMe (supported by bare metal specifications) |
| /train-worker1-log | No | Compatible with training job debugging |
| /dev/shm | No | Used for PyTorch engine acceleration |
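Because only data under /home/ma-user/work survives a stop or restart, it can help to check an output path before writing long-running results to it. The following is a minimal sketch: the helper name `is_persistent` is hypothetical, relative paths are resolved against the default working directory, and symbolic links are not followed.

```python
import posixpath

PERSISTENT_ROOT = "/home/ma-user/work"


def is_persistent(path, cwd=PERSISTENT_ROOT):
    """Return True if path resolves to a location under /home/ma-user/work."""
    # Resolve relative paths against cwd and collapse "." and ".." segments.
    absolute = posixpath.normpath(posixpath.join(cwd, path))
    return (absolute == PERSISTENT_ROOT
            or absolute.startswith(PERSISTENT_ROOT + "/"))


for candidate in ("checkpoints/model.ckpt",
                  "/home/ma-user/work/data/train.csv",
                  "/cache/tmp.bin",
                  "/home/ma-user/work/../other/file.txt"):
    print(candidate, is_persistent(candidate))
```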

Selecting Storage for a Notebook Instance

Storage options differ in performance, usability, and cost, and no single storage medium covers all scenarios. The following table describes the application scenarios of in-cloud storage to help you choose.

| Storage | Application Scenario | Advantage | Disadvantage |
|---|---|---|---|
| EVS | Data and algorithm exploration only in the development environment. | Block storage SSDs feature better overall I/O performance than NFS. The storage capacity can be dynamically expanded to up to 4,096 GB. As persistent storage, EVS disks are mounted to /home/ma-user/work. The data in this directory is retained after the instance is stopped. The storage capacity can be expanded online based on demand. | This type of storage can only be used in a single development environment. |
| Parallel File System (PFS) | NOTE: PFS buckets mounted as persistent storage for AI development and exploration. | PFS is an optimized high-performance object storage file system with low storage costs and large throughput. It can quickly process high-performance computing (HPC) workloads. PFS mounting is recommended if OBS is used. NOTE: Package or split the data to be uploaded by 128 MB or 64 MB. Download and decompress the data in local storage for better I/O and throughput performance. | Due to average performance in frequent read and write of small files, PFS storage is not suitable for large model training or file decompression. NOTE: Before mounting PFS storage to a notebook instance, grant ModelArts with full read and write permissions on the PFS bucket. The policy will be retained even after the notebook instance is deleted. |
| OBS | NOTE: When uploading or downloading a large amount of data in the development environment, you can use OBS buckets to transfer data. | Low storage cost and high throughput, but average performance in reading and writing small files. It is a good practice to package or split the file by 128 MB or 64 MB. In this way, you can download the packages, decompress them, and use them locally. | The object storage semantics is different from the Posix semantics and needs to be further understood. |
| Scalable File Service (SFS) | Available only in dedicated resource pools. Use SFS storage in informal production scenarios such as exploration and experiments. One SFS device can be mounted to both a development environment and a training environment. In this way, you do not need to download data each time your training job starts. This type of storage is not suitable for heavy I/O training on more than 32 cards. | SFS is implemented as NFS and can be shared between multiple development environments and between development and training environments. This type of storage is preferred for non-heavy-duty distributed training jobs, especially for the ones not requiring to download data additionally when the training jobs start. | The performance of the SFS storage is not as good as that of the EVS storage. |
| OceanStor Pacific storage (SFS capacity-oriented 2.0) | Currently, it can be used only in Tiangong resource pools. It is suitable for the training jobs that use the file systems provided by SFS capacity-oriented 2.0 for AI model training and exploration. In addition, OBS APIs are provided to import training data from outside the cloud. | It provides a high-performance file client to meet the high storage bandwidth requirements of heavy-load training jobs. It also supports access to OBS. After the training data is imported to the storage via OBS APIs, it can be used to train the model directly without any conversion. | It provides the object storage semantics, which is different from the Posix semantics and needs to be further understood. |
| Local storage | First choice for heavy-duty training jobs. | High-performance SSDs for the used VM or BMS, featuring high file I/O throughput. For heavy-duty training jobs, store data in the target directory and then start training. By default, the storage is mounted to the /cache directory. For details about the available space of the /cache directory, see What Are Sizes of the /cache Directories for Different Notebook Specifications in DevEnviron? | The storage lifecycle is associated with the container lifecycle. Data needs to be downloaded each time the training job starts. |
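The table above recommends packaging or splitting data into 64 MB or 128 MB pieces before uploading it to OBS or PFS. The following is a minimal sketch of the splitting step using only the Python standard library. The function names and the `.partNNNN` naming scheme are hypothetical, and uploading the parts is out of scope here.

```python
import os

CHUNK_SIZE = 64 * 1024 * 1024  # 64 MB, one of the two sizes suggested above


def split_file(path, chunk_size=CHUNK_SIZE, out_dir=None):
    """Split a file into numbered parts of at most chunk_size bytes.

    Returns the list of part paths in order.
    """
    out_dir = out_dir or os.path.dirname(os.path.abspath(path))
    base = os.path.basename(path)
    parts = []
    with open(path, "rb") as src:
        index = 0
        while True:
            chunk = src.read(chunk_size)
            if not chunk:
                break
            part_path = os.path.join(out_dir, f"{base}.part{index:04d}")
            with open(part_path, "wb") as dst:
                dst.write(chunk)
            parts.append(part_path)
            index += 1
    return parts


def join_parts(parts, dest):
    """Concatenate parts (in order) back into a single file."""
    with open(dest, "wb") as dst:
        for part_path in parts:
            with open(part_path, "rb") as src:
                dst.write(src.read())
```

After downloading the parts to local storage (for example, /cache), `join_parts` restores the original file so that training reads from local disk rather than from object storage.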

FAQ

- How do I use EVS in a development environment?

When creating a notebook instance, select a small-capacity EVS disk. You can scale out the disk as needed. For details, see Dynamically Expanding EVS Disk Capacity.

- How do I use an OBS parallel file system in a development environment?

When training a model in a notebook instance, you can use datasets mounted to the notebook container and use an OBS parallel file system. For details, see Dynamically Mounting an OBS Parallel File System.

- How do I switch back to JupyterLab 3.2.3 if an error occurs during the startup of JupyterLab 4.3.1?

Locate the target instance in the list and click Start in the Operation column. In the displayed dialog box, select JupyterLab 3.2.3 and click OK.

- Can I use both JupyterLab 3.2.3 and 4.3.1 in a project?

Not recommended. Each JupyterLab instance runs independently. Therefore, you need to create an instance for each version. To try different versions, you can start them in different containers or environments. Pay attention to the following:

- The configuration file and data path may vary according to the version. Ensure the independence of data and configuration.

- Running multiple versions at the same time may cause port conflicts or other resource competition problems.

- Can I use GDB in a notebook instance?

No. GDB needs Docker with privileged containers. For security purposes, the development environment does not allow privileged containers. Therefore, GDB cannot be used in notebook instances.
