Creating a Prompt Evaluation Task

Updated on 2025-11-04 GMT+08:00

Create an evaluation task to evaluate multiple candidate prompts in a single batch. The procedure is as follows:

- Log in to ModelArts Studio and access a workspace.

- In the navigation pane, choose Agent Dev. On the displayed page, choose Prompt Engineering > Prompt dev.

- In the prompt engineering project list, locate the desired project and click writing in the Operation column.

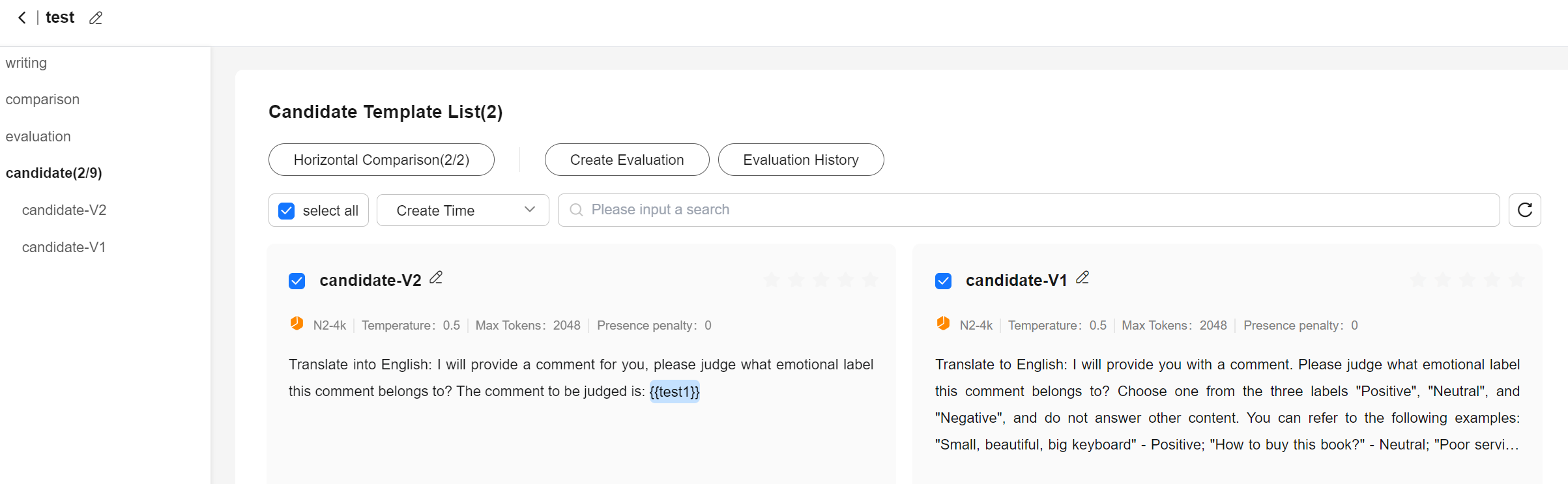

- On the writing page, choose candidate from the navigation pane. In the candidate list, select the prompts you want to compare side by side and click Create Evaluation.

Figure 1 Create Evaluation

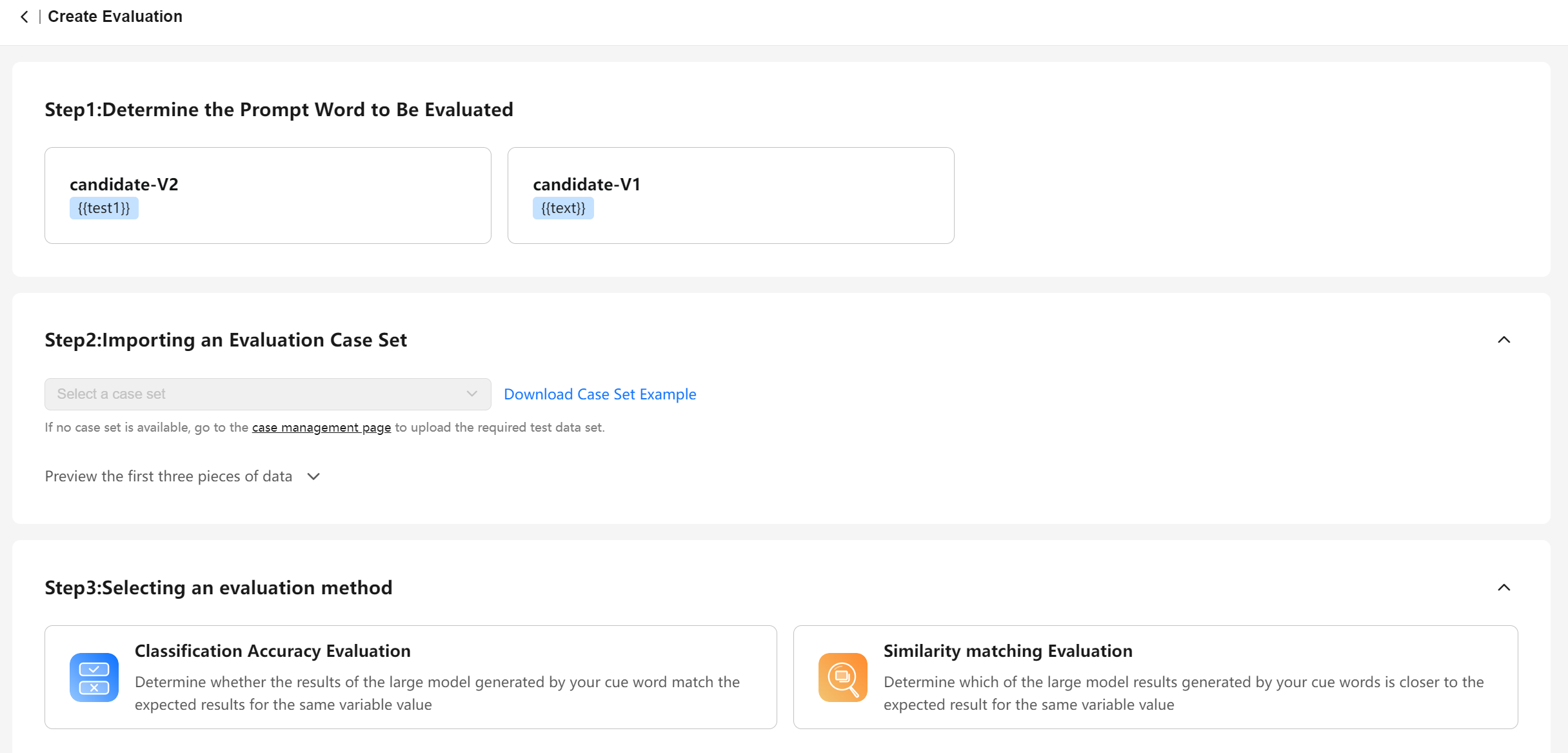

- Select the evaluation case set (variable set) and the evaluation method.

  - Evaluation case set: The platform combines the prompt under evaluation with the variable values in the selected dataset to form complete prompts. The model then generates an outcome for each complete prompt.

  - Evaluation method: The platform uses the selected method to compare each result generated by the model with the expected result, and then assigns the corresponding score.

Figure 2 Creating a prompt evaluation task

- Click Confirm. The evaluation task then runs automatically.
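The assembly step described under Evaluation case set can be pictured as template substitution. The following Python sketch is illustrative only and is not how ModelArts Studio is implemented: it assumes that variables appear in the prompt as `{{name}}` placeholders and models the case set as a list of dictionaries. `assemble_prompt` and `assemble_all` are hypothetical helpers, not a platform API.

```python
import re

# Assumption: variables are written in the prompt as {{name}} placeholders.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def assemble_prompt(template: str, variables: dict[str, str]) -> str:
    """Replace every {{name}} placeholder with the matching variable value."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            # Fail loudly instead of sending an incomplete prompt to the model.
            raise KeyError(f"no value for variable '{name}'")
        return str(variables[name])

    return PLACEHOLDER.sub(substitute, template)


def assemble_all(template: str, case_set: list[dict[str, str]]) -> list[str]:
    """Build one complete prompt per case in a (hypothetical) evaluation case set."""
    return [assemble_prompt(template, case) for case in case_set]


if __name__ == "__main__":
    template = "Translate the following {{language}} text into English: {{text}}"
    cases = [
        {"language": "French", "text": "Bonjour"},
        {"language": "German", "text": "Guten Tag"},
    ]
    for prompt in assemble_all(template, cases):
        print(prompt)
```

In this sketch a missing variable raises an error rather than leaving the placeholder in place, so every assembled prompt is complete before it would be sent to a model.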
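The scoring step described under Evaluation method can be sketched as comparing each generated result with its expected result and averaging the scores per candidate prompt. This Python example is hypothetical: `exact_match` and `token_f1` are generic stand-in metrics, not the evaluation methods offered by the platform, and the model outputs are supplied as precomputed lists instead of being generated by a model.

```python
from collections import Counter
from statistics import mean
from typing import Callable


# Hypothetical metrics; the platform's actual evaluation methods may differ.
def exact_match(generated: str, expected: str) -> float:
    """Return 1.0 if the texts match after trimming and lowercasing, else 0.0."""
    return float(generated.strip().lower() == expected.strip().lower())


def token_f1(generated: str, expected: str) -> float:
    """F1 score over whitespace-separated tokens, counting repeated tokens."""
    gen = generated.lower().split()
    exp = expected.lower().split()
    if not gen or not exp:
        # Two empty texts count as a match; one empty text scores 0.0.
        return float(gen == exp)
    overlap = sum((Counter(gen) & Counter(exp)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(gen)
    recall = overlap / len(exp)
    return 2 * precision * recall / (precision + recall)


def score_candidates(
    outputs: dict[str, list[str]],
    expected: list[str],
    method: Callable[[str, str], float],
) -> dict[str, float]:
    """Average the per-case score of each candidate prompt over all cases."""
    scores = {}
    for name, generated in outputs.items():
        if len(generated) != len(expected):
            raise ValueError(f"{name}: expected {len(expected)} outputs, got {len(generated)}")
        scores[name] = mean(method(g, e) for g, e in zip(generated, expected))
    return scores


if __name__ == "__main__":
    expected = ["Hello", "Good day"]
    outputs = {
        "candidate-A": ["Hello", "Good day"],
        "candidate-B": ["Hi", "Good afternoon"],
    }
    print(score_candidates(outputs, expected, exact_match))
    print(score_candidates(outputs, expected, token_f1))
```

Because every candidate in this sketch is scored against the same expected results, the averages can be compared directly, which mirrors the side-by-side comparison of the selected prompts.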
