Using the Pangu Pre-trained NLP Model for Text Dialog

Scenarios

This example demonstrates how to use the Pangu pre-trained NLP model for text dialog by using the experience center and by calling APIs.

You will learn how to adjust model parameters in the experience center and how to call the Pangu NLP model API to implement intelligent Q&A.

Prerequisites

You have deployed a preset NLP model. For details, see "Developing a Pangu NLP Model" > "Deploying an NLP Model" > "Creating an NLP Model Deployment Task" in the User Guide.

Using the Experience Center Function

You can use the platform's Experience Center function to call preset services that have already been deployed. The procedure is as follows:

- Log in to ModelArts Studio and access a workspace.

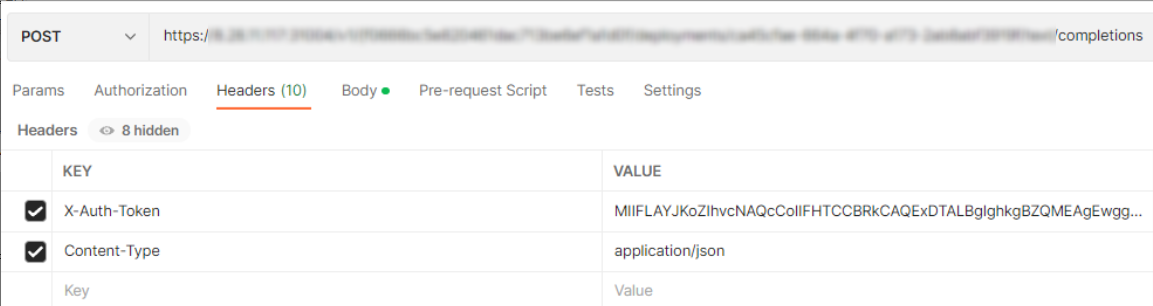

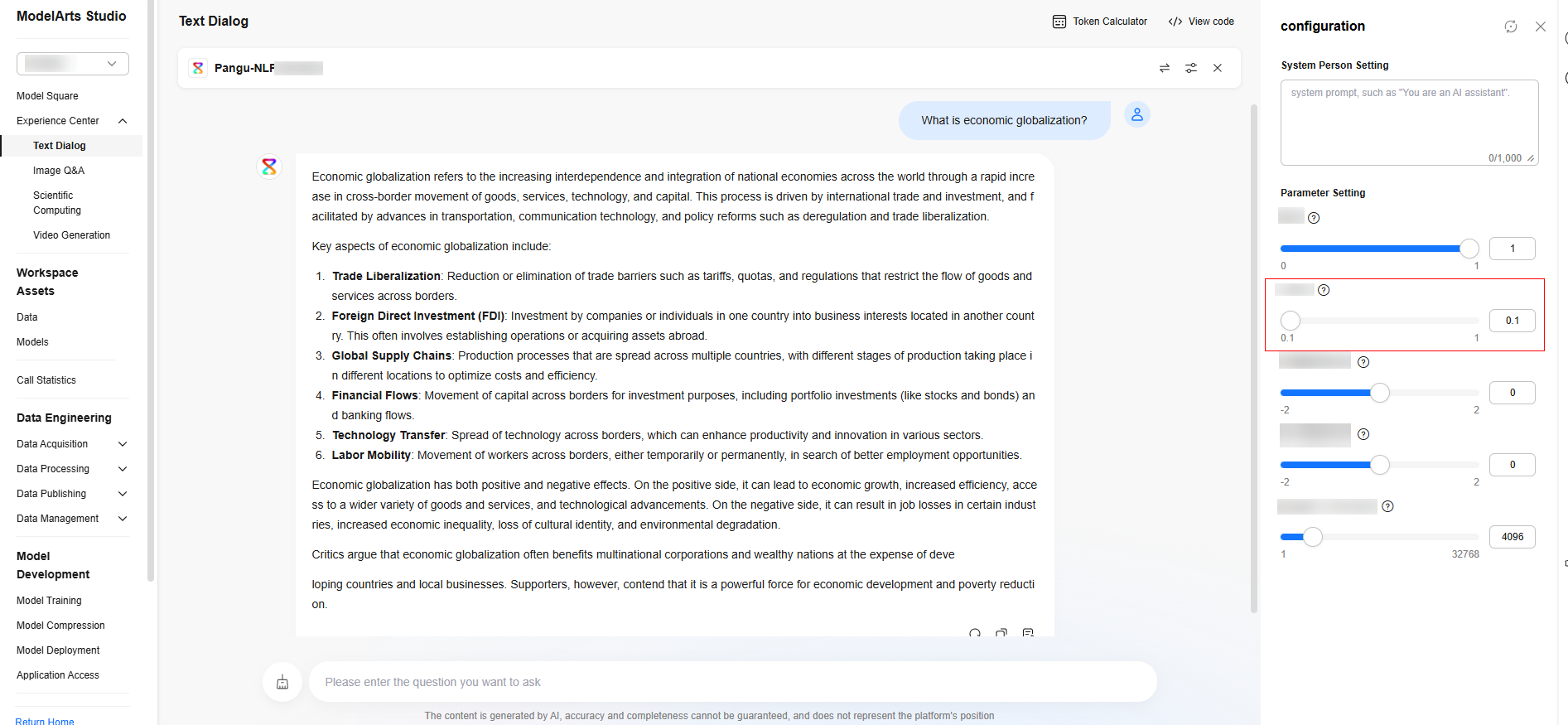

- In the navigation pane, choose Experience Center > Text Dialog. Select a service, retain the default parameter settings, and enter a question in the text box. The model will answer the question.

Figure 1 Using a preset service for text dialog

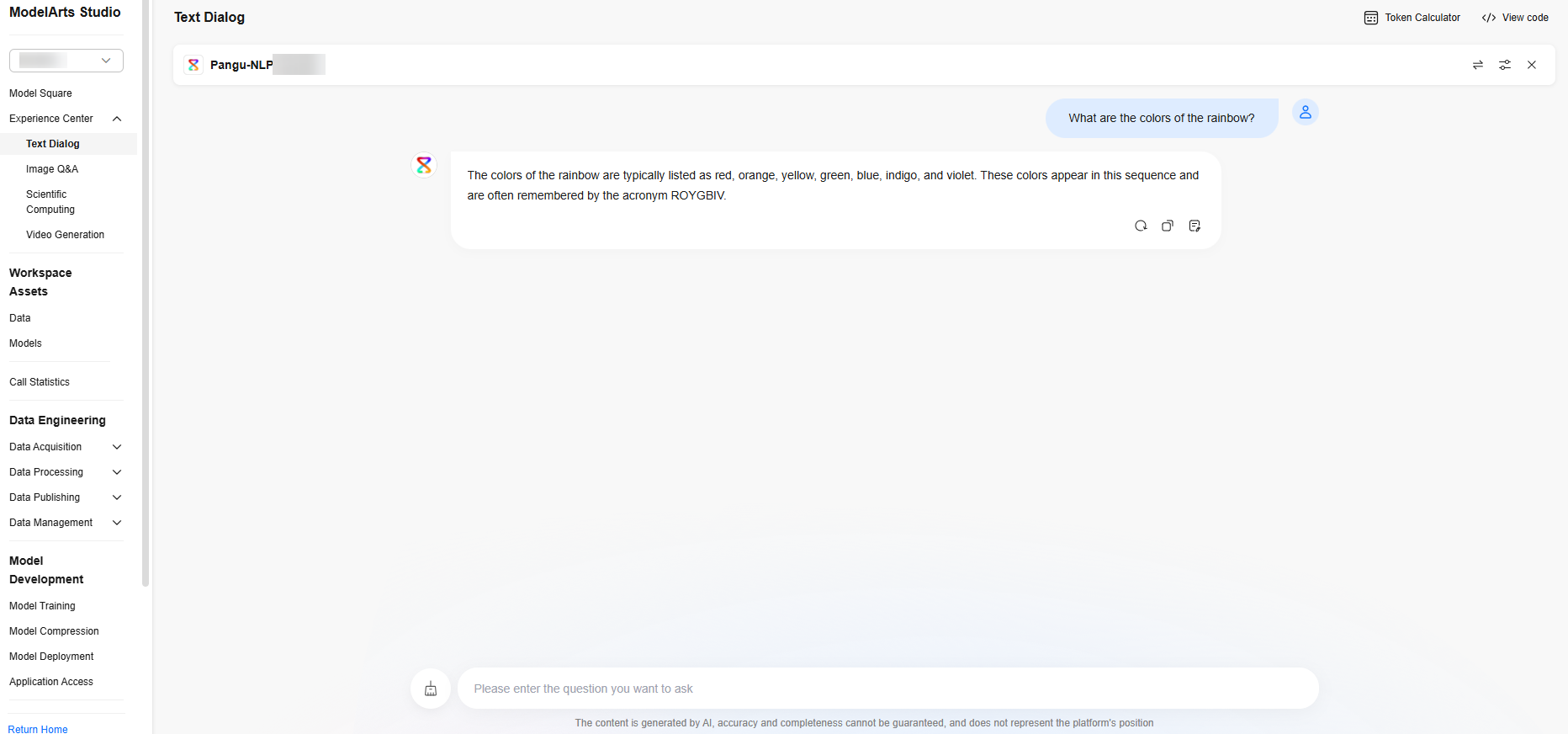

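- Modify parameters and check the quality of the model output. The following uses the Nuclear sampling parameter (nucleus sampling, commonly known as top-p) as an example. This parameter controls the diversity and quality of generated text: a lower value restricts sampling to fewer high-probability tokens, so responses vary less.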

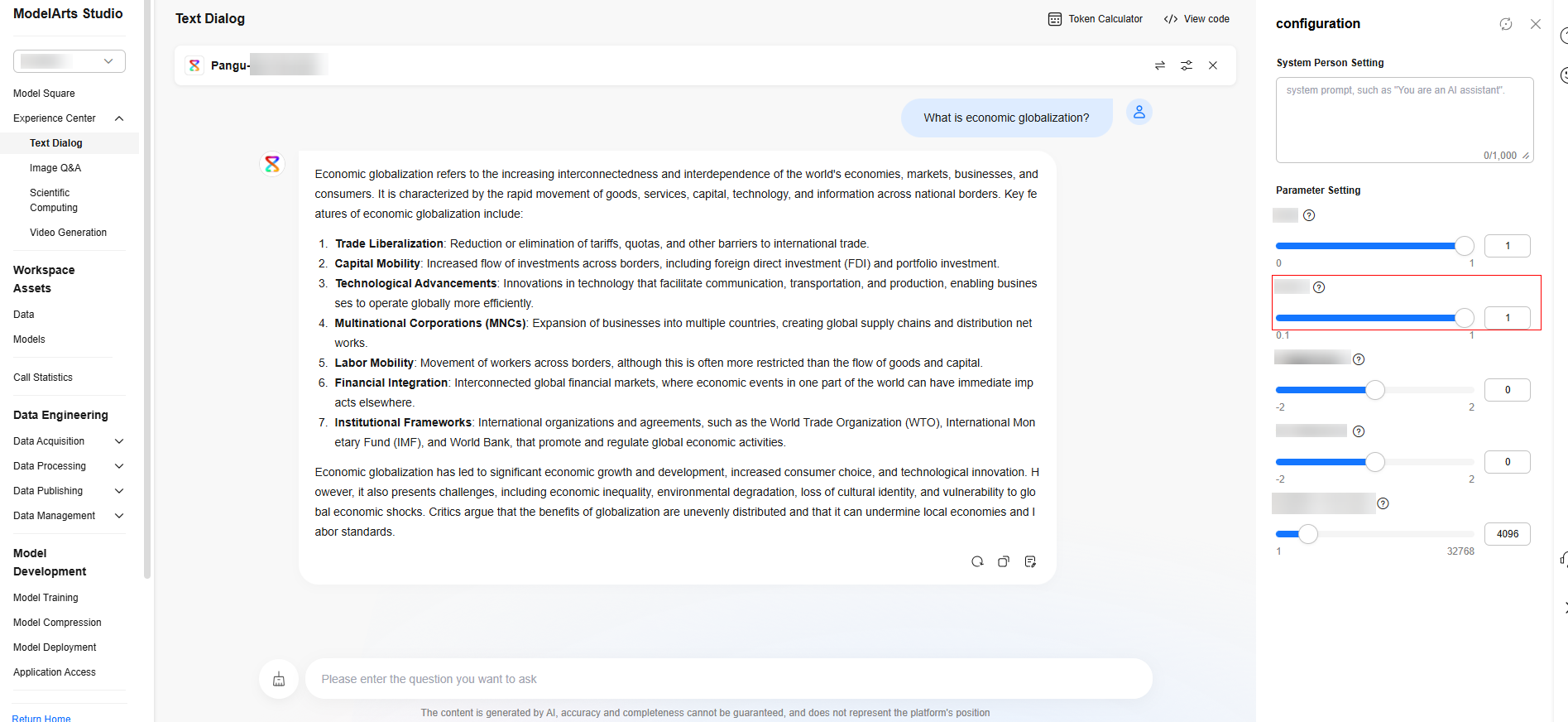

- When the Nuclear sampling parameter is set to 1 and the settings of other parameters remain unchanged, click Regenerate, and then click Regenerate again. Observe the diversity of the two responses of the model.

Figure 2 Response 1 (with the Nuclear sampling parameter set to 1)

Figure 3 Response 2 (with the Nuclear sampling parameter set to 1)

- When the Nuclear sampling parameter is set to 0.1 and the settings of other parameters remain unchanged, click Regenerate, and then click Regenerate again. Check that the diversity of the two responses of the model decreases.

Figure 4 Response 1 (with the Nuclear sampling parameter set to 0.1)

Figure 5 Response 2 (with the Nuclear sampling parameter set to 0.1)

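The effect observed in the steps above can be illustrated with a minimal sketch of nucleus (top-p) sampling in general. This is not the platform's implementation, which the source does not describe. The function names and the toy token probabilities are hypothetical and chosen only for illustration.

```python
import random


def nucleus_filter(probs, top_p):
    """Keep the smallest set of highest-probability tokens whose cumulative
    probability reaches top_p, then renormalize the kept probabilities."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, p in ranked:
        kept.append((token, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {token: p / total for token, p in kept}


def sample(probs, top_p, rng):
    """Draw one token from the nucleus-filtered distribution."""
    filtered = nucleus_filter(probs, top_p)
    tokens = list(filtered)
    weights = [filtered[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical next-token distribution (illustrative values only).
toy = {"sturgeon": 0.5, "carp": 0.3, "dolphin": 0.15, "eel": 0.05}

wide = nucleus_filter(toy, 1.0)    # every token stays a candidate
narrow = nucleus_filter(toy, 0.1)  # only the most likely token remains
```

With a value of 1, all four toy tokens remain candidates, so repeated generations can differ. With a value of 0.1, only the most probable token survives the filter, which is why the two regenerated responses become more alike.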

Calling APIs

After the preset NLP model is deployed, you can call the model by calling the text dialog API. The procedure is as follows:

- Log in to ModelArts Studio and access a workspace.

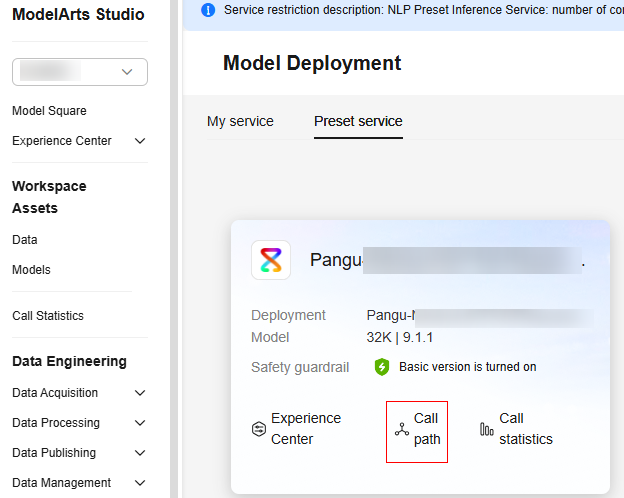

- Obtain the call path. Choose Model Training > Model Deployment. On the Preset service tab page, select the required NLP model, and click Call path. In the dialog box that is displayed, obtain the call path.

Figure 6 Obtaining the call path

Figure 7 Call path dialog box


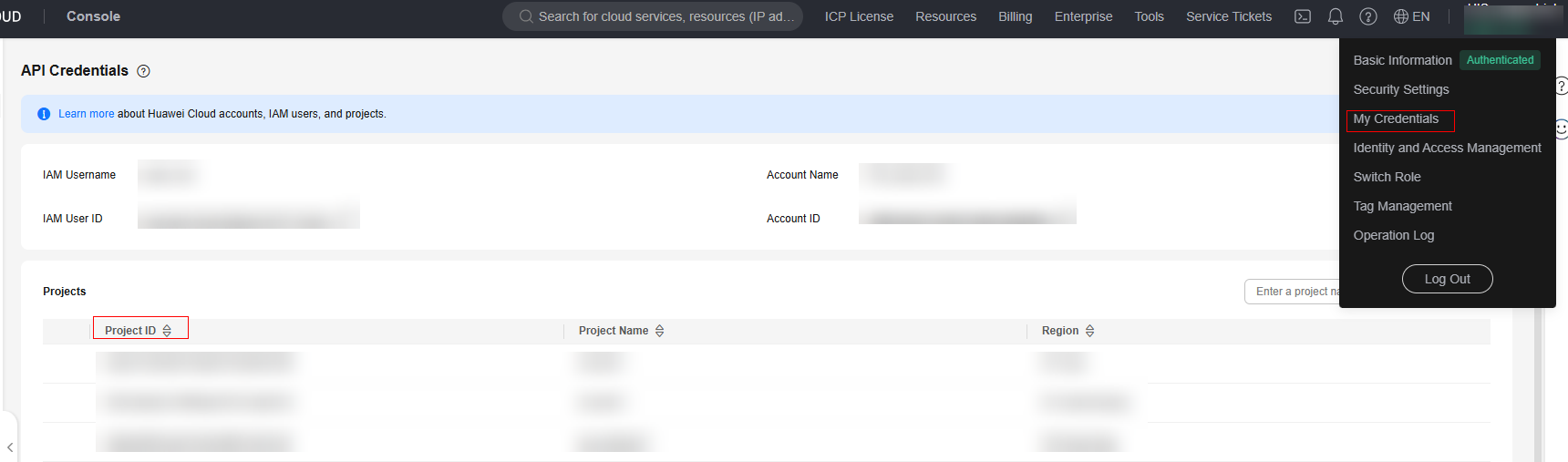

- Obtain the project ID. Click My Credentials in the upper right corner of the page. On the API Credentials page, obtain the project ID.

Figure 8 Obtaining the project ID

- Obtain the token by following the instructions in "Calling REST APIs" > "Authentication" in the API Reference.

- Create a POST request in Postman and enter the call path (API request address).

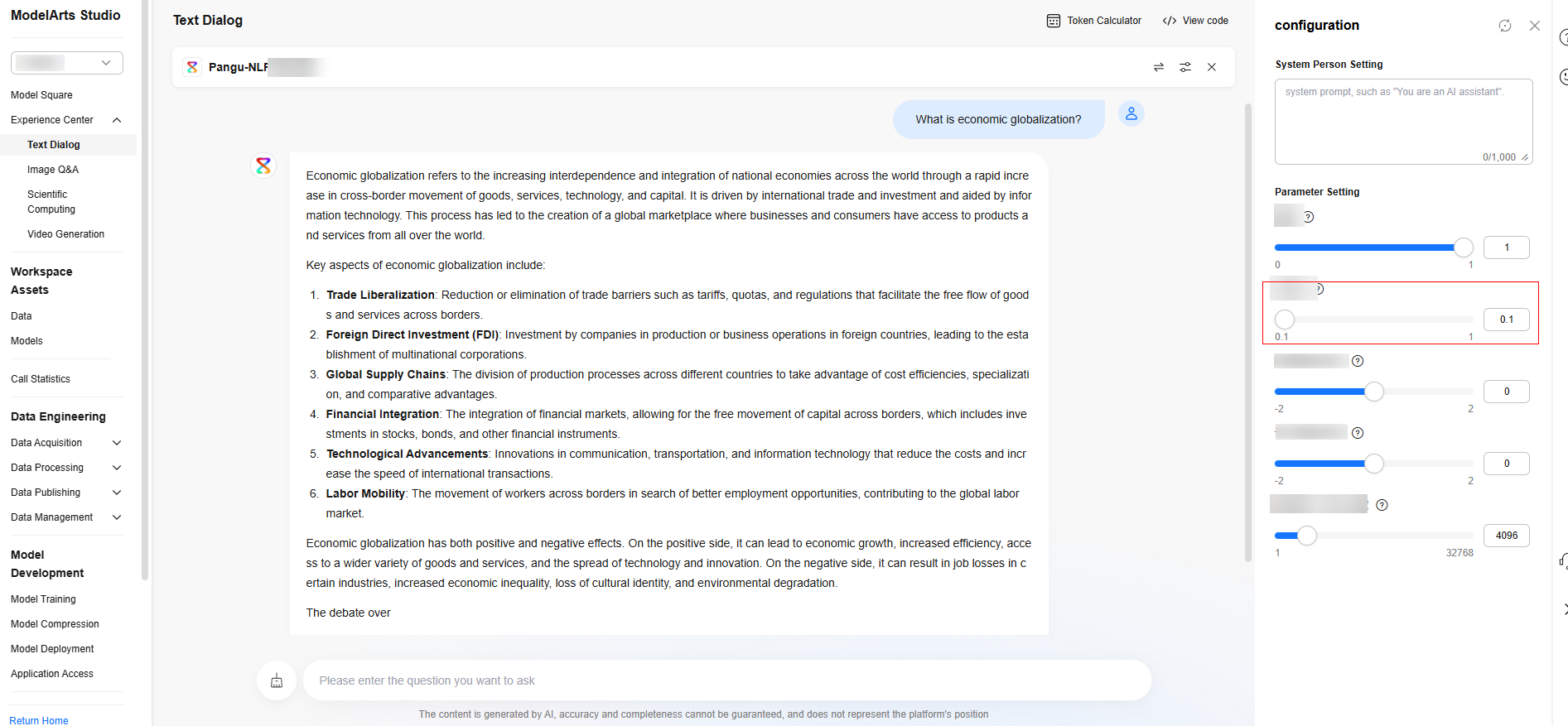

- Set two request header parameters by referring to Figure 9.

- KEY: Content-Type; VALUE: application/json

- KEY: X-Auth-Token; VALUE: the token value

- Click Body, select raw, and enter the request body based on the following example.

    {
      "messages": [
        {
          "content": "Introduce the Yangtze River and its typical fish species."
        }
      ],
      "temperature": 0.9,
      "max_tokens": 600
    }

- Click Send to send the request. If the returned status code is 200, the NLP model API is successfully called.
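The same request can also be sent from a script instead of Postman. The following is a minimal sketch using only the Python standard library. The headers and body fields are taken from the steps above. The function names, the placeholder call path, and the placeholder token are assumptions for illustration, so substitute the call path and token you obtained earlier.

```python
import json
import urllib.request


def build_chat_request(call_path, token, question, temperature=0.9, max_tokens=600):
    """Build the POST request described in the Postman steps.

    call_path and token are the values obtained in the earlier steps.
    """
    body = {
        "messages": [{"content": question}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=call_path,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "X-Auth-Token": token,
        },
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and return (status_code, response_text).

    urllib raises urllib.error.HTTPError for non-2xx status codes.
    """
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, response.read().decode("utf-8")


# Placeholder values (assumptions): replace with your own call path and token.
request = build_chat_request(
    "https://example.com/your-call-path",
    "YOUR_TOKEN",
    "Introduce the Yangtze River and its typical fish species.",
)
# status, text = send(request)  # uncomment once real values are filled in
```

A returned status of 200 corresponds to the successful call described in the last step. The response body format is not shown in this section, so the sketch returns it as raw text rather than parsing specific fields.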
