Self-Healing of Read Replicas upon Replication Latency

TaurusDB is a cloud-native database with decoupled compute and storage. The primary node and read replicas share the same underlying storage. To keep their data caches consistent, the primary node periodically communicates with the read replicas. Each time it does, the read replicas read the redo logs generated by the primary node from storage and update their data caches. Because of network fluctuations or heavily loaded nodes, the data cache of a read replica may lag behind that of the primary node. When this latency exceeds a specific threshold, the read replica automatically reboots so that users can access the latest data from it.

This section explains the technical principles behind read replica latency and the automatic reboot.

How Data Is Synchronized Between the Primary Node and Read Replicas

Although the primary node and read replicas share storage, they still need to communicate regularly so that the data caches of the read replicas stay consistent with the data cache of the primary node.

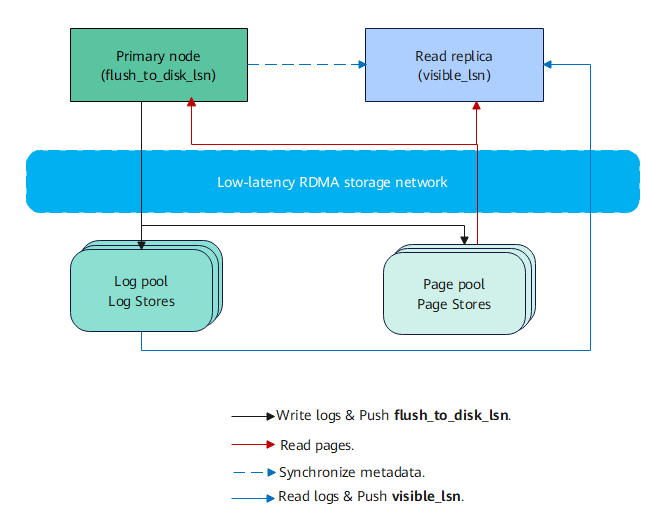

The preceding figure shows how the primary node communicates with read replicas and synchronizes data.

- After writing redo logs, the primary node advances flush_to_disk_lsn, which indicates the latest position up to which data on the primary node is visible. While writing redo logs to the storage layer (the log pool and page pool), the primary node periodically sends the read replicas metadata messages about the incremental redo logs. Each message includes the latest flush_to_disk_lsn value and the API for reading the logs.

- After receiving a message from the primary node, each read replica reads the incremental logs from the log pool and advances visible_lsn, which indicates the latest position up to which data on the read replica is visible. The cached data affected by these logs becomes invalid. If the visible_lsn value of a read replica equals the flush_to_disk_lsn value of the primary node, there is no latency between them. If visible_lsn is smaller than flush_to_disk_lsn, the read replica lags behind the primary node, that is, it has a data latency.

- Each read replica returns a message packet to the primary node. The message packet contains the following information:

  - Views: the transaction list of the read replica. The primary node can purge undo logs based on the views of all read replicas.

  - recycle_lsn: the minimum LSN of the data pages that a read replica may read. No data page read by the read replica has an LSN smaller than this value. The primary node collects the recycle_lsn value of each read replica to determine the position up to which the underlying redo logs can be purged.

  - Basic information about the read replica: its ID and the timestamp of its latest message. The primary node uses this information to manage read replicas.

- If a cached page on the primary node or a read replica is invalid or missing, the node reads the page from the page pool during queries.
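
The exchange above can be modeled as a small simulation. This is a minimal sketch, not the TaurusDB implementation: the classes `Primary`, `Replica`, and `Feedback`, the message dictionary, the `(lsn, page_id)` log-pool records, and the `open_reads` list used to derive recycle_lsn are all hypothetical. Only flush_to_disk_lsn, visible_lsn, and recycle_lsn come from the text. Views are omitted for brevity.

```python
from dataclasses import dataclass, field


@dataclass
class Feedback:
    # Hypothetical message packet a read replica returns to the primary node.
    replica_id: str
    recycle_lsn: int
    timestamp: float


@dataclass
class Primary:
    flush_to_disk_lsn: int = 0
    log_pool: list = field(default_factory=list)  # hypothetical (lsn, page_id) redo records
    feedback: dict = field(default_factory=dict)  # replica_id -> latest Feedback

    def write_redo(self, page_id: int) -> dict:
        # Append a redo record, advance flush_to_disk_lsn, and return the
        # metadata message that would be sent to the read replicas.
        self.flush_to_disk_lsn += 1
        self.log_pool.append((self.flush_to_disk_lsn, page_id))
        return {"flush_to_disk_lsn": self.flush_to_disk_lsn}

    def on_feedback(self, fb: Feedback) -> None:
        self.feedback[fb.replica_id] = fb

    def redo_purge_lsn(self) -> int:
        # No replica reads pages older than its recycle_lsn, so redo logs
        # below the smallest recycle_lsn are no longer needed.
        return min((fb.recycle_lsn for fb in self.feedback.values()),
                   default=self.flush_to_disk_lsn)


@dataclass
class Replica:
    replica_id: str
    visible_lsn: int = 0
    cache: dict = field(default_factory=dict)       # page_id -> cached page
    open_reads: list = field(default_factory=list)  # hypothetical: visible_lsn when each running query started

    def on_message(self, msg: dict, log_pool: list, now: float) -> Feedback:
        target = msg["flush_to_disk_lsn"]
        # Read the incremental redo records from the log pool and invalidate
        # the cached pages they modify, then advance visible_lsn.
        for lsn, page_id in log_pool:
            if self.visible_lsn < lsn <= target:
                self.cache.pop(page_id, None)
        self.visible_lsn = target
        recycle_lsn = min(self.open_reads, default=self.visible_lsn)
        return Feedback(self.replica_id, recycle_lsn, now)

    def read_page(self, page_id: int, page_pool: dict):
        # On a cache miss (including after invalidation), fetch the page
        # from the shared page pool and cache it.
        if page_id not in self.cache:
            self.cache[page_id] = page_pool[page_id]
        return self.cache[page_id]


primary = Primary()
r1, r2 = Replica("r1"), Replica("r2")
r1.cache = {7: "page 7 v1", 9: "page 9 v1"}
r2.open_reads = [0]  # a long-running query on r2 started at LSN 0
msg = primary.write_redo(page_id=7)
for replica in (r1, r2):
    primary.on_feedback(replica.on_message(msg, primary.log_pool, now=1.0))
print(r1.visible_lsn, sorted(r1.cache), primary.redo_purge_lsn())  # 1 [9] 0
print(r1.read_page(7, {7: "page 7 v2"}))  # page 7 v2
```

In this model, the long-running query on r2 holds the purge position at LSN 0 even though r1 has caught up, which is why the primary node takes the minimum recycle_lsn across all replicas.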

How Read Replica Latency Is Calculated

Read replica latency is the time between when data is updated on the primary node and when the updated data becomes visible on the read replicas. Read replicas read the redo logs to update cached data. visible_lsn records the LSN of the redo log that a read replica has applied and indicates the maximum LSN of the data pages that the read replica can read. flush_to_disk_lsn records the LSN of the latest redo log generated each time a data record is updated or inserted on the primary node and indicates the maximum LSN of the data pages that the primary node can access. Read replica latency is calculated from the values of visible_lsn and flush_to_disk_lsn. For example, at time t1, flush_to_disk_lsn is 100 and visible_lsn is 80. The read replica then replays the redo logs, and at time t2, flush_to_disk_lsn is 130 and visible_lsn is 100. Data that became visible on the primary node at LSN 100 at time t1 becomes visible on the read replica only at time t2, so the read replica latency is t2 - t1.
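
The calculation above can be sketched as follows, assuming the primary node's flush_to_disk_lsn is sampled together with a timestamp. `LagTracker`, `record_primary`, and `latency` are hypothetical names. The sketch looks up the earliest sample at which the primary node had reached the replica's visible_lsn, so its accuracy depends on how often samples are taken.

```python
import bisect


class LagTracker:
    """Hypothetical helper that estimates read replica latency from LSN samples."""

    def __init__(self) -> None:
        self._lsns = []   # sampled flush_to_disk_lsn values, non-decreasing
        self._times = []  # time at which each sample was taken

    def record_primary(self, t: float, flush_to_disk_lsn: int) -> None:
        # Record when the primary node reached a given flush_to_disk_lsn.
        self._lsns.append(flush_to_disk_lsn)
        self._times.append(t)

    def latency(self, t: float, visible_lsn: int) -> float:
        # visible_lsn has caught up with the latest flush_to_disk_lsn: no latency.
        if not self._lsns or visible_lsn >= self._lsns[-1]:
            return 0.0
        # Earliest sample at which the primary node had reached visible_lsn.
        i = bisect.bisect_left(self._lsns, visible_lsn)
        return t - self._times[i]


tracker = LagTracker()
tracker.record_primary(10.0, 100)  # t1: flush_to_disk_lsn = 100 (visible_lsn = 80)
tracker.record_primary(12.5, 130)  # t2: flush_to_disk_lsn = 130
print(tracker.latency(12.5, 100))  # visible_lsn reaches 100 at t2, so 2.5 (t2 - t1)
```

Here t1 = 10.0 s and t2 = 12.5 s are illustrative values; the result reproduces t2 - t1 from the example.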

The speed at which a read replica advances visible_lsn is the main factor that affects the latency. In most cases, the latency between the primary node and read replicas is minimal. However, in some scenarios, such as when the primary node executes a large number of DDL statements, the latency may be significant.

Self-Healing Policy

If the read replica latency is significant, users cannot access the latest data from read replicas, which may affect data consistency. To address this, if the latency exceeds a threshold (30 seconds by default), the database reboots the read replica process within seconds. After the reboot, the read replica reads the latest data from storage, which eliminates the latency.
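
The policy can be expressed as a simple threshold check. This is a hypothetical sketch: `SelfHealingMonitor` and its `reboot` hook are illustrative names, not a TaurusDB API. Only the 30-second default and the "exceeds the threshold" condition come from the text.

```python
from dataclasses import dataclass
from typing import Callable

DEFAULT_LATENCY_THRESHOLD_S = 30.0  # default threshold stated in this section


@dataclass
class SelfHealingMonitor:
    """Hypothetical watchdog that reboots a lagging read replica."""
    reboot: Callable[[str], None]  # assumed hook that restarts a replica process
    threshold_s: float = DEFAULT_LATENCY_THRESHOLD_S

    def check(self, replica_id: str, latency_s: float) -> bool:
        # Trigger a reboot only when the latency exceeds the threshold.
        # Per the text, the restarted replica then reads the latest data
        # from storage; that step is outside this sketch.
        if latency_s > self.threshold_s:
            self.reboot(replica_id)
            return True
        return False


rebooted = []
monitor = SelfHealingMonitor(reboot=rebooted.append)
monitor.check("replica-1", 4.2)
monitor.check("replica-2", 31.0)
print(rebooted)  # ['replica-2']
```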
