Configuring Dynamic Overwriting for Hive Table Partitions

Scenarios

In Hive, the data of a partitioned table is stored in separate directories based on the specified partition fields (such as date and region). Dynamic partition overwriting lets you overwrite specific partitions based on query results, without explicitly specifying all partition values in advance. When an INSERT OVERWRITE statement is executed, the system locates the target partition directories based on the partition field values in the query result, deletes the existing data in those directories, and then writes the new data.

In versions earlier than Spark 2.3, running INSERT OVERWRITE on a partitioned table first deletes every existing partition that matches the partition expressions explicitly specified in the statement, so matching partitions that receive no new data from the query are lost. Since Spark 2.3, partitions without explicitly specified expressions can be matched automatically from the query result, and in dynamic mode only the partitions that receive data are overwritten. This brings the behavior of INSERT OVERWRITE on partitioned tables in line with Hive's dynamic partition overwrite syntax.
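The difference between the two behaviors can be sketched with a small in-memory model. This is only an illustration of the semantics described above, not how Spark or Hive implement it: the table is a plain dictionary keyed by partition values, and the names `PARTITION_COLUMNS`, `matches`, and `insert_overwrite` are hypothetical.

```python
# Toy model of INSERT OVERWRITE semantics on a partitioned table.
# A table is a dict: (dt, region) partition tuple -> list of rows.
# All names here are hypothetical and exist only for illustration.

PARTITION_COLUMNS = ("dt", "region")


def matches(partition, static_spec):
    """Return True if the partition satisfies every explicitly specified value."""
    return all(
        partition[PARTITION_COLUMNS.index(column)] == value
        for column, value in static_spec.items()
    )


def insert_overwrite(table, query_result, static_spec, mode):
    """Return the table contents after an overwrite in STATIC or DYNAMIC mode."""
    result = {partition: list(rows) for partition, rows in table.items()}
    if mode == "STATIC":
        # Static mode: drop every existing partition that matches the
        # explicitly specified values, whether or not new data arrives for it.
        for partition in list(result):
            if matches(partition, static_spec):
                del result[partition]
    elif mode != "DYNAMIC":
        raise ValueError(f"unknown mode: {mode}")
    # Both modes: partitions present in the query result are replaced.
    for partition, rows in query_result.items():
        result[partition] = list(rows)
    return result


table = {
    ("2024-01-01", "east"): ["old-a"],
    ("2024-01-01", "west"): ["old-b"],
    ("2024-01-02", "east"): ["old-c"],
}
query_result = {("2024-01-01", "east"): ["new-a"]}
static_spec = {"dt": "2024-01-01"}  # region is left unspecified

static_table = insert_overwrite(table, query_result, static_spec, "STATIC")
dynamic_table = insert_overwrite(table, query_result, static_spec, "DYNAMIC")
```

In this model, the static run loses the `("2024-01-01", "west")` partition because it matches `dt` but receives no new rows, while the dynamic run keeps it and replaces only `("2024-01-01", "east")`.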

Parameters

- Log in to FusionInsight Manager.

For details, see Accessing FusionInsight Manager.

- Choose Cluster > Services > Spark2x or Spark, click Configurations and then All Configurations, search for the following parameter, and adjust its value.

| Parameter | Description | Example Value |
| --- | --- | --- |
| spark.sql.sources.partitionOverwriteMode | Mode used when the insert overwrite command inserts data into a partitioned table. The value can be STATIC or DYNAMIC.<br>- STATIC: Spark deletes all partitions that match the conditions specified in the statement before writing the new data.<br>- DYNAMIC: Spark matches partitions based on the specified conditions, dynamically matches partitions without specified conditions, and overwrites only the partitions that receive new data. | DYNAMIC |

- After the parameter settings are modified, click Save, perform operations as prompted, and wait until the settings are saved successfully.

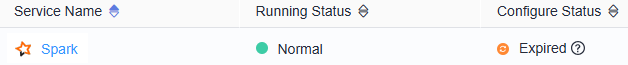

- After the Spark server configurations are updated, if Configure Status is Expired, restart the component for the configurations to take effect.

Figure 1 Modifying Spark configurations

On the Spark dashboard page, choose More > Restart Service or Service Rolling Restart, enter the administrator password, and wait until the service restarts.

If you use the Spark client to submit tasks, after the cluster parameter spark.sql.sources.partitionOverwriteMode is modified, you need to download the client again for the configuration to take effect. For details, see Using an MRS Client.

Components are unavailable during the restart, which affects upper-layer services in the cluster. To minimize the impact, perform this operation during off-peak hours or after confirming that it has no adverse impact.
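For a single job, the same parameter can usually also be set on the Spark session rather than cluster-wide. The following is a minimal sketch under that assumption; it has not been verified against a specific MRS version. The helper name `overwrite_partitions` and the table, view, and column names are hypothetical, and `spark` is expected to be an existing `pyspark.sql.SparkSession`.

```python
def overwrite_partitions(spark, target_table, source_view, partition_column):
    """Overwrite only the partitions of target_table that source_view has rows for.

    `spark` is assumed to be a pyspark.sql.SparkSession. The table, view, and
    column names passed in are placeholders chosen for illustration.
    """
    # Session-level setting: affects only statements run through this session.
    spark.conf.set("spark.sql.sources.partitionOverwriteMode", "DYNAMIC")

    # No value is given for the partition column, so the target partitions are
    # taken from the query result. With SELECT *, the partition column must be
    # the last column of the source view.
    statement = (
        f"INSERT OVERWRITE TABLE {target_table} "
        f"PARTITION ({partition_column}) "
        f"SELECT * FROM {source_view}"
    )
    spark.sql(statement)
    return statement
```

With a real session, a call such as `overwrite_partitions(spark, "sales", "staged_sales", "dt")` would replace only the `dt` partitions that appear in `staged_sales`. Setting the mode on the session keeps the change local to one job, so it does not require the service restart described above.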
