Configuring the DROP PARTITION Command to Support Batch Deletion

Scenarios

DROP PARTITION deletes all data in one or more specified partitions of a table. It is used to delete data in batches based on shared partition characteristics, such as removing historical data by specifying a time-based partition range or deleting an entire partition whose data is no longer needed. For example, if a table is partitioned by date and you execute a DROP PARTITION command targeting a specific month, all data in that month's partition is deleted.

In Spark, when working with Hive tables, the DROP PARTITION command uses the equal sign (=) to define the partition to be deleted. After you set spark.sql.dropPartitionsInBatch.enabled to true, you can use multiple filter criteria to delete data in batches. Supported operators include less than (<), less than or equal to (<=), greater than (>), greater than or equal to (>=), not greater than (!>), and not less than (!<).
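The following is a minimal sketch of what a filter-based DROP PARTITION statement might look like once batch deletion is enabled. The statement shape (ALTER TABLE ... DROP PARTITION with a comparison operator inside the parentheses) is an assumption modeled on Hive's comparator syntax and is not confirmed by this section. The helper build_drop_partition_sql, the table sales_logs, and the partition column dt are hypothetical names used only for illustration.

```python
# Operators listed in this section for batch deletion, plus the default "=".
SUPPORTED_OPERATORS = {"=", "<", "<=", ">", ">=", "!>", "!<"}


def build_drop_partition_sql(table, column, operator, value):
    """Build a DROP PARTITION statement string (hypothetical helper).

    Assumption: the filter is written as (column operator 'value'),
    following Hive's comparator syntax for dropping partitions.
    """
    if operator not in SUPPORTED_OPERATORS:
        raise ValueError(f"unsupported operator: {operator!r}")
    text = str(value)
    # Keep the sketch simple: refuse values that would need escaping.
    if "'" in text or "\\" in text:
        raise ValueError("value must not contain quotes or backslashes")
    return f"ALTER TABLE {table} DROP PARTITION ({column} {operator} '{text}')"


# Drop every partition of the hypothetical table dated before 2023-01-01.
stmt = build_drop_partition_sql("sales_logs", "dt", "<", "2023-01-01")
print(stmt)
```

The generated statement would then be submitted through a Spark SQL client. Validating the operator up front keeps unsupported comparisons from reaching the cluster.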

Notes and Constraints

This section applies only to MRS 3.2.0-LTS or later.

Configuration

- Log in to FusionInsight Manager.

For details, see Accessing FusionInsight Manager.

- Choose Cluster > Services > Spark2x or Spark, click Configurations and then All Configurations, search for the following parameters, and adjust their values.

| Parameter | Description | Example Value |
| --- | --- | --- |
| spark.sql.dropPartitionsInBatch.enabled | Whether the DROP PARTITION command in Spark supports batch deletion of partitions based on filter criteria. Batch deletion reduces the overhead of metadata operations and can significantly improve deletion performance, especially when a large number of partitions need to be removed from large-scale datasets. true: enables batch deletion of partitions using the DROP PARTITION command, which then supports filter conditions such as <, <=, >, >=, !>, and !<. false: disables batch deletion of partitions using the DROP PARTITION command. | true |
| spark.sql.dropPartitionsInBatch.limit | Maximum number of partitions that can be deleted in one batch in Spark. | 1000 |

- After the parameter settings are modified, click Save, perform operations as prompted, and wait until the settings are saved successfully.

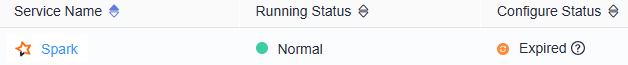

- After the Spark server configurations are updated, if Configure Status is Expired, restart the component for the configurations to take effect.

Figure 1 Modifying Spark configurations

On the Spark dashboard page, choose More > Restart Service or Service Rolling Restart, enter the administrator password, and wait until the service restarts.

If you use the Spark client to submit tasks, after the cluster parameters spark.sql.dropPartitionsInBatch.enabled and spark.sql.dropPartitionsInBatch.limit are modified, you need to download the client again for the configuration to take effect. For details, see Using an MRS Client.

Components are unavailable during the restart, which affects upper-layer services in the cluster. To minimize the impact, perform this operation during off-peak hours or after confirming that it has no adverse impact.
