Migrating Data from Hive to MRS with DistCp

Scenarios

Distributed Copy (DistCp) is a distributed data replication tool provided by Hadoop. It transfers large volumes of data efficiently between HDFS clusters, between HDFS and the local file system, or within the same cluster. DistCp runs each copy as a MapReduce job that splits the file list across parallel map tasks, which makes it well suited to migrating large-scale data.

You can run the distcp command to migrate data stored in HDFS of a self-built Hive cluster to an MRS cluster.

This section describes how to use the DistCp tool to migrate full or incremental Hive service data to an MRS cluster after completing Hive metadata migration.

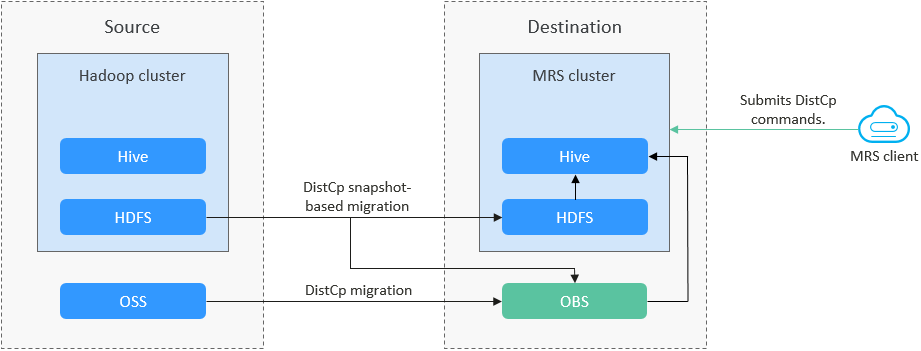

Solution Architecture

Figure 1 shows the process for migrating Hive data to an MRS cluster.

- Historical data migration

Create a snapshot for the directory to be migrated in the source Hadoop cluster, and then run the DistCp command on the MRS cluster client to migrate all data to the destination OBS or HDFS.

- Partition repair

Run the msck repair table db_name.table_name command to repair partitions of the partitioned table.

- Incremental service data migration

Create a snapshot for the directory that requires incremental migration in the source Hadoop cluster. On the MRS cluster client, run the DistCp command with the -update and -delete parameters to perform incremental migration. Set the destination path to OBS or HDFS.

Data migration supports different networking types, such as the public network, VPN, and Direct Connect. Select a networking type as required to ensure that the source and destination networks can communicate with each other.

| Migration Network Type | Advantage | Disadvantage |
|---|---|---|
| Direct Connect | | |
| VPN | | |
| Public IP address | | |

Notes and Constraints

- If the migration destination is an OBS bucket, DistCp does not support incremental migration because OBS does not support snapshots.

- Understand the customer's services and agree with the customer on a migration time window. Perform the migration during off-peak hours.

Prerequisites

- An MRS cluster with the Hive service has been created.

- You have prepared an ECS execution node and installed the MRS cluster client.

- The source cluster, destination cluster, and the node hosting the MRS cluster client can communicate with each other.

- Hive metadata has been migrated.

Migrating All Hive Data Using DistCp

- Obtain the IP address and port number of the active HDFS NameNode in the source cluster.

The method for querying this information varies by self-built cluster. Contact the cluster administrator or log in to the cluster management page to obtain it.

Figure 2 Checking the HDFS NameNode port
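If the source cluster runs HDFS HA and you have shell access to one of its client nodes, the active NameNode can also be identified from the command line. The sketch below assumes that `hdfs haadmin -getAllServiceState` is available (recent Hadoop releases) and prints one line per NameNode with the address in the first column and the HA state in the second. The helper name `active_namenode` is hypothetical, and the printed address format can vary by version, so cross-check the port against the cluster's NameNode RPC address setting.

```shell
#!/usr/bin/env bash
# Sketch: print the address of the active NameNode.
# Assumes input lines of the form "<address> <state>", as printed by
# `hdfs haadmin -getAllServiceState` on an HA-enabled cluster.
# active_namenode is a hypothetical helper name.
active_namenode() {
  # Print the first column of the first line whose second column is "active".
  awk '$2 == "active" { print $1; exit }'
}

# Usage on a source-cluster client node:
#   hdfs haadmin -getAllServiceState | active_namenode
```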

- Log in to MRS Manager of the destination cluster and check the IP address and port number of the active HDFS NameNode.

- Choose Cluster > Services > HDFS and click Instances. On the displayed page, view the IP address of the NameNode (hacluster,Active) instance in the instance list.

- Click Configurations, and search for and view the NameNode port parameter dfs.namenode.rpc.port.

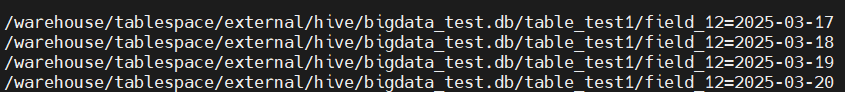

- Obtain the HDFS file directory containing the Hive data to be migrated from the source cluster.

For example, the data to be migrated is as follows.

- Configure user access to the source HDFS cluster on the ECS execution node.

vim /etc/profile

Configure environment variables.

export HADOOP_USER_NAME=hdfs

Load the environment variables.

source /etc/profile

- Create a snapshot for the specified HDFS directory in the source cluster. The snapshot is saved in /Snapshot directory/.snapshot.

- Enable the snapshot function for the specified directory in the source cluster.

hdfs dfsadmin -allowSnapshot Snapshot directory

Format of the snapshot directory in the source cluster: hdfs://IP address of the active NameNode:Port/Directory

For example, run the following command:

hdfs dfsadmin -allowSnapshot hdfs://192.168.1.100:8020/warehouse/tablespace/external/hive/bigdata_test.db/

- Create a snapshot.

hdfs dfs -createSnapshot Snapshot directory Snapshot file name

For example, run the following command:

hdfs dfs -createSnapshot hdfs://192.168.1.100:8020/warehouse/tablespace/external/hive/bigdata_test.db snapshot01
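HDFS exposes each snapshot read-only under `<snapshot directory>/.snapshot/<snapshot name>`, and that path is what the DistCp commands later in this section copy from. The examples above write the directory both with and without a trailing slash, so the hypothetical helper `snapshot_path` below normalizes it before appending the snapshot component. It is a minimal sketch that only builds the string.

```shell
#!/usr/bin/env bash
# Sketch: derive the read-only snapshot path that DistCp should copy from.
# HDFS exposes each snapshot under <snapshottable dir>/.snapshot/<snapshot name>.
# snapshot_path is a hypothetical helper name.
snapshot_path() {
  local dir="${1%/}"   # drop one trailing slash, e.g. ".../bigdata_test.db/"
  local name="$2"
  printf '%s/.snapshot/%s\n' "$dir" "$name"
}
```

For example, `hdfs dfs -ls "$(snapshot_path hdfs://192.168.1.100:8020/warehouse/tablespace/external/hive/bigdata_test.db/ snapshot01)"` lists the snapshot contents so that you can confirm the snapshot exists before copying.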


- Access the MRS cluster client directory.

cd /opt/client

source bigdata_env

User authentication is required for MRS clusters with Kerberos authentication enabled.

kinit HDFS service user

- Run the distcp command on the node where the MRS cluster client is installed to migrate data.

hadoop distcp -prbugpcxt Path for storing snapshots in the source cluster Data storage path in the destination cluster

For example, run the following command:

hadoop distcp -prbugpcxt hdfs://192.168.1.100:8020/warehouse/tablespace/external/hive/bigdata_test.db/.snapshot/snapshot01/* hdfs://192.168.1.200:8020/user/hive/warehouse/bigdata_test.db

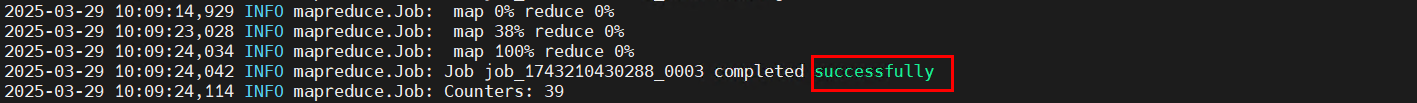

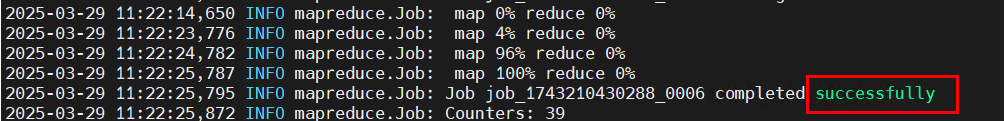

Wait until the MapReduce task is complete.

- Migrate the other data directories by repeating steps 5 to 7 (create a snapshot, access the MRS cluster client directory, and run the distcp command).
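When several database directories need to be migrated, the snapshot and DistCp steps can be repeated in a loop. The sketch below is hypothetical: `SRC_NN`, `DST_NN`, `SRC_ROOT`, `DST_ROOT`, and `SNAPSHOT` are placeholders copied from the examples above, and `run` and `migrate_db` are illustrative helpers. It assumes `bigdata_env` has been sourced and, for Kerberos clusters, `kinit` has been run. By default it only prints the commands; set `DRY_RUN=0` to execute them.

```shell
#!/usr/bin/env bash
# Sketch: repeat the snapshot and DistCp steps for several Hive database directories.
# All values below are placeholders taken from the examples in this section.
SRC_NN="hdfs://192.168.1.100:8020"
DST_NN="hdfs://192.168.1.200:8020"
SRC_ROOT="/warehouse/tablespace/external/hive"
DST_ROOT="/user/hive/warehouse"
SNAPSHOT="snapshot01"

# Print the command when DRY_RUN=1 (default); execute it otherwise.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

migrate_db() {
  local db="$1"
  local src="${SRC_NN}${SRC_ROOT}/${db}"
  run hdfs dfsadmin -allowSnapshot "$src" || return 1
  # createSnapshot fails if a snapshot with the same name already exists.
  run hdfs dfs -createSnapshot "$src" "$SNAPSHOT" || return 1
  # The glob is quoted so the local shell passes it to DistCp unexpanded.
  run hadoop distcp -prbugpcxt "${src}/.snapshot/${SNAPSHOT}/*" "${DST_NN}${DST_ROOT}/${db}"
}

# Usage (hypothetical database directory names):
#   for db in bigdata_test.db sales.db; do migrate_db "$db" || break; done
```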

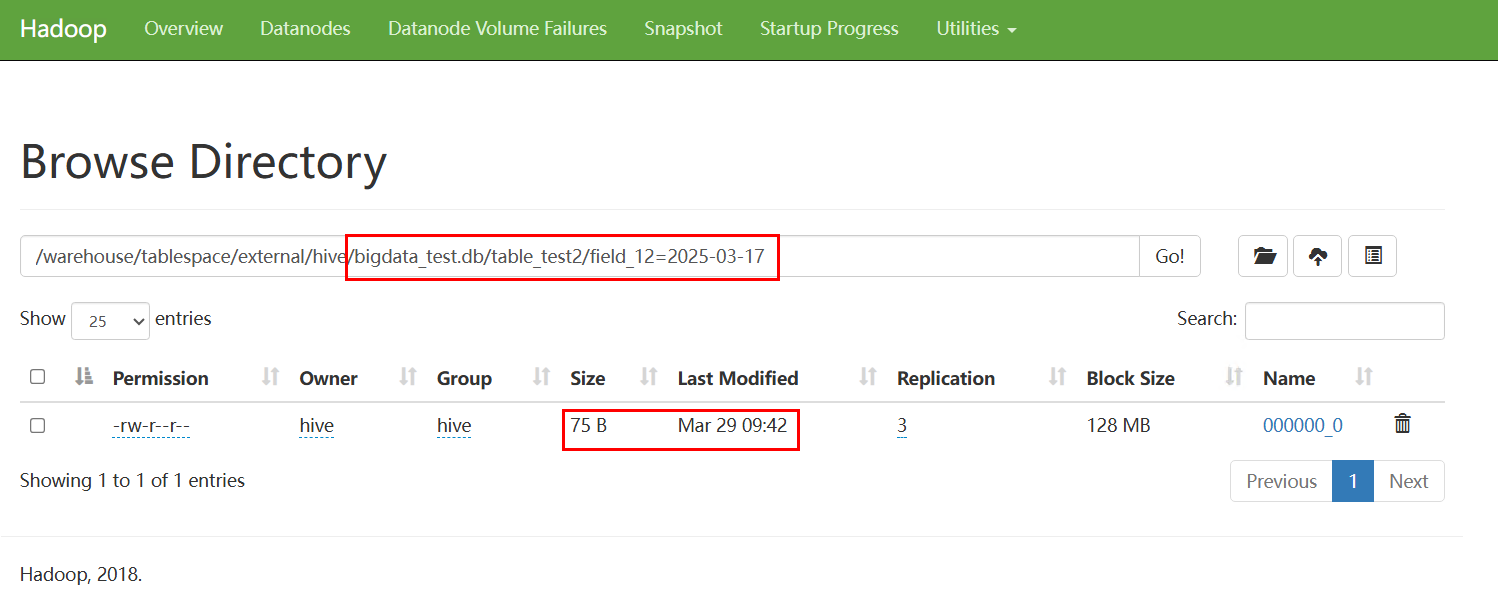

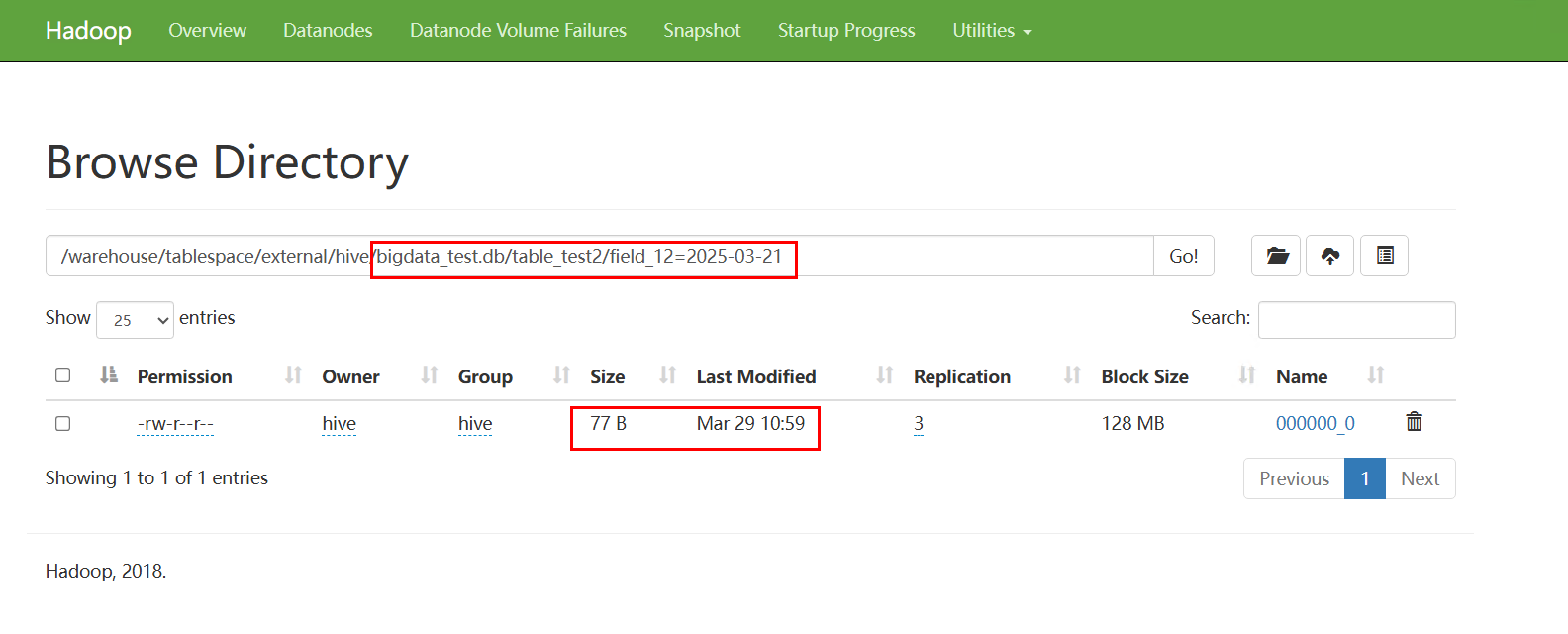

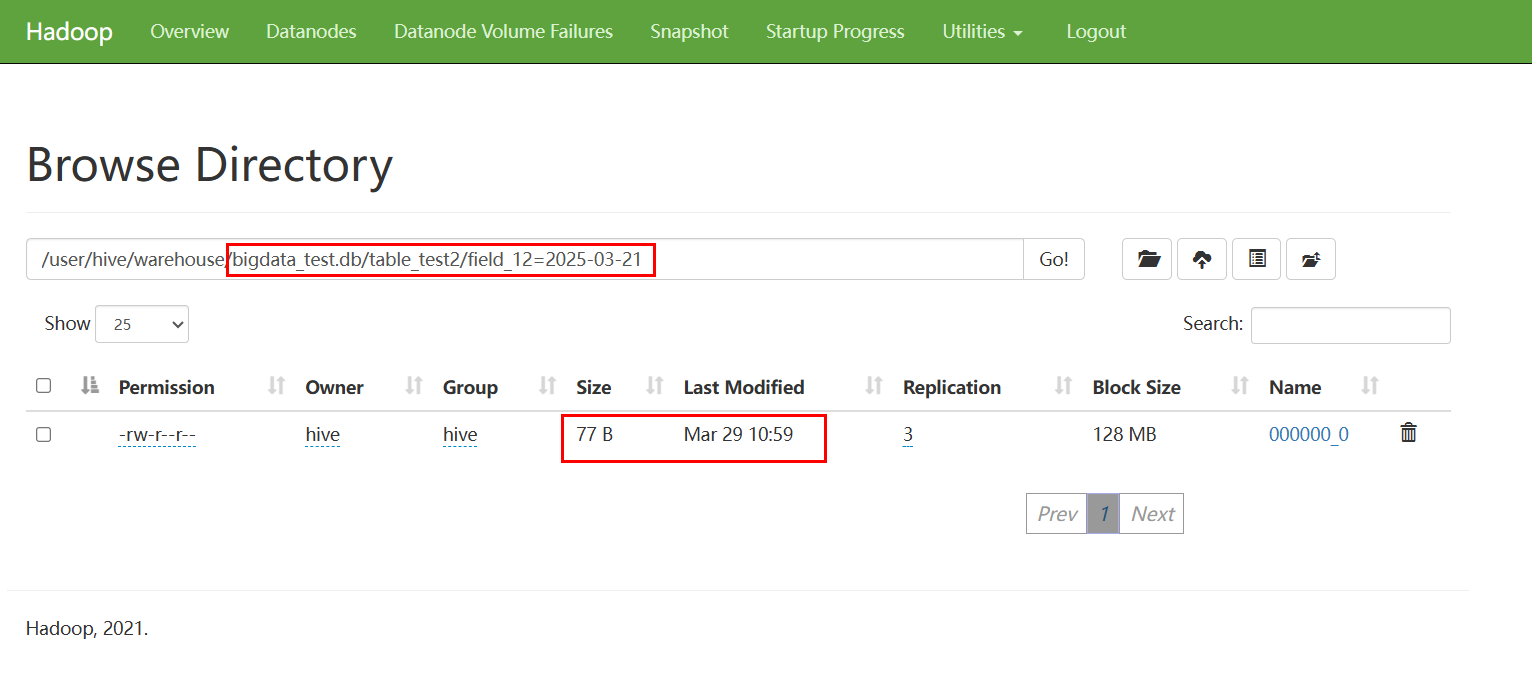

- After the full migration is complete, use the client or HDFS Web UI of the destination cluster to check whether the files were migrated successfully.

For example, the source file is as follows.

The destination file is as follows.

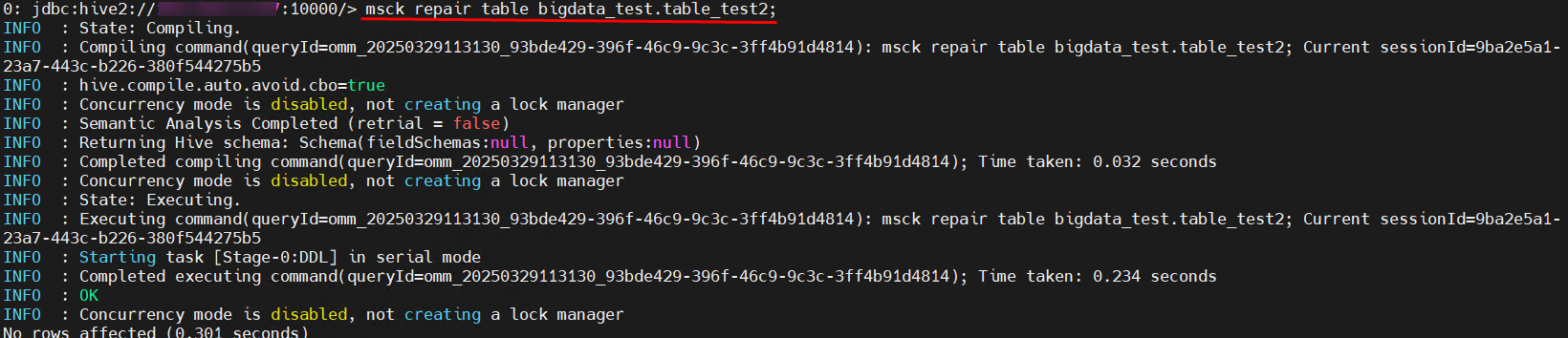

- After data migration is complete, use the Hive client in the destination cluster to repair partitions of the partitioned table and check whether the data exists.

Run the following command to access the Hive client:

beeline

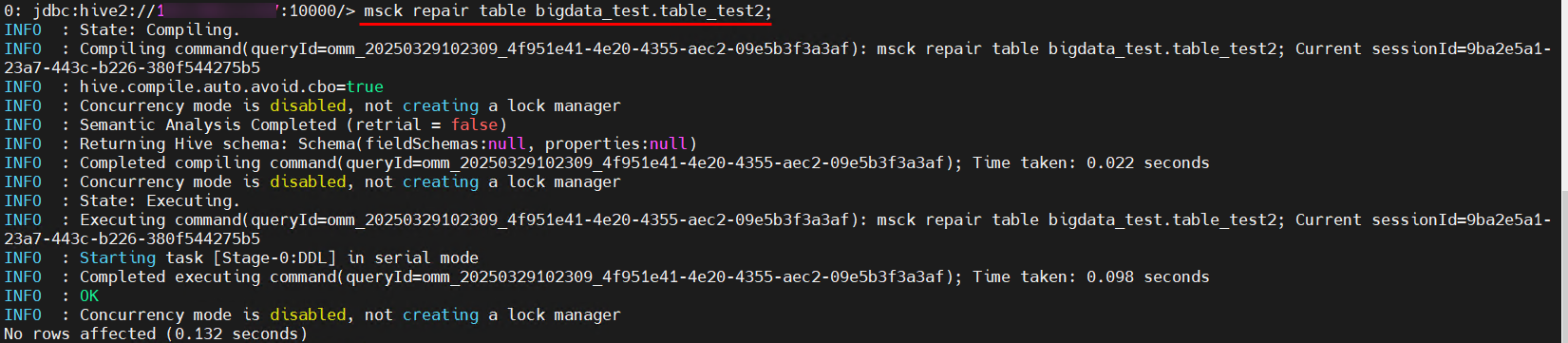

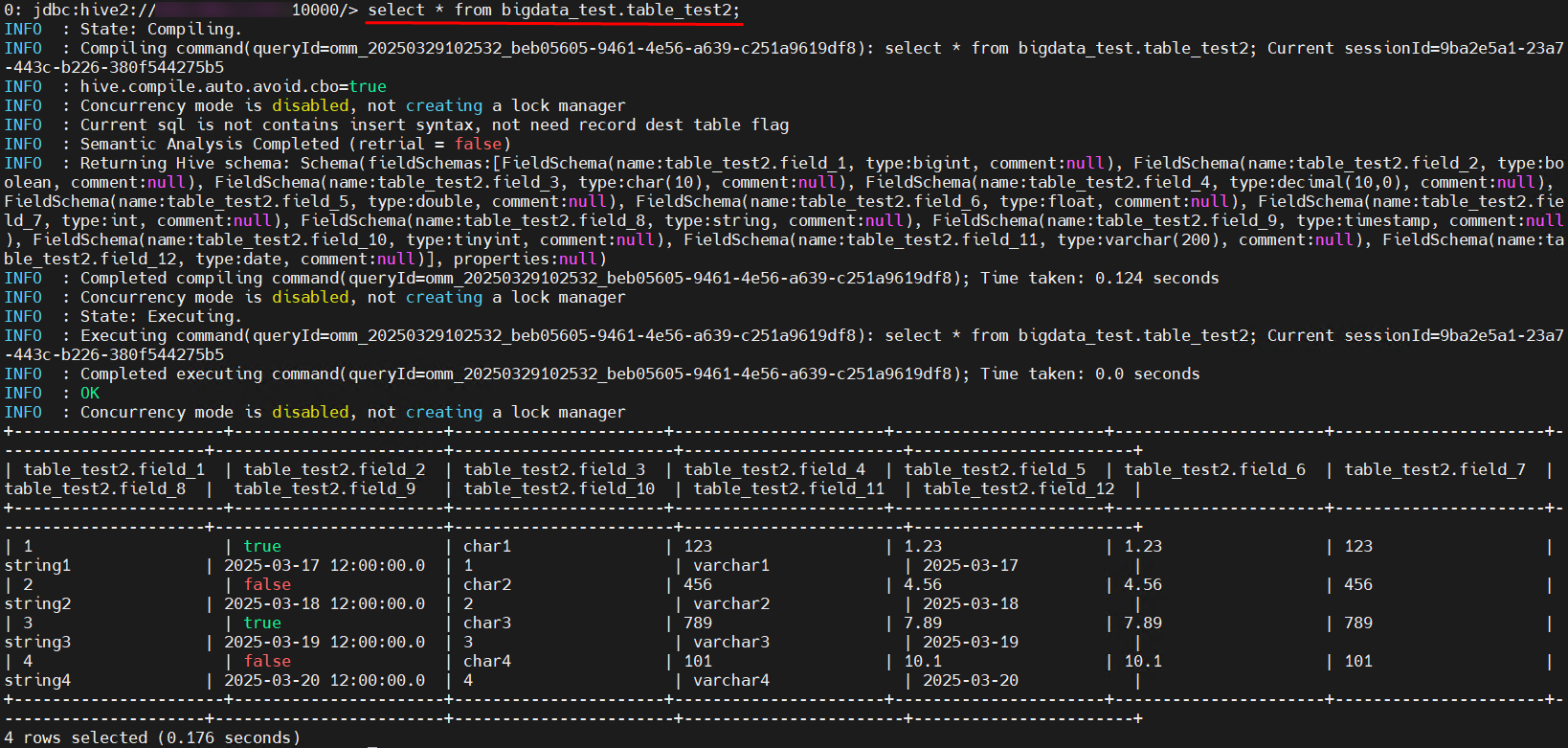

- Partitioned table

msck repair table Table name;

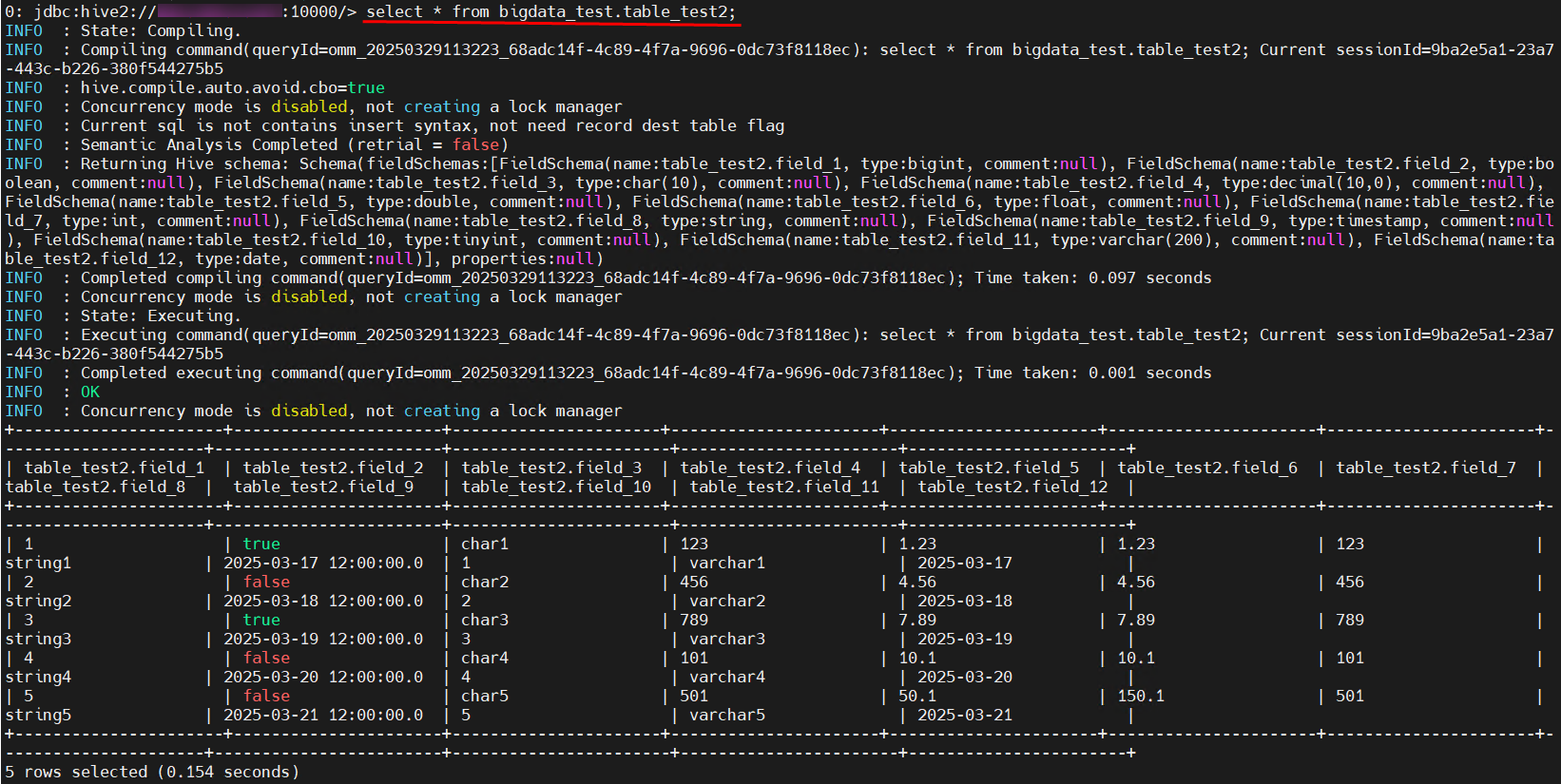

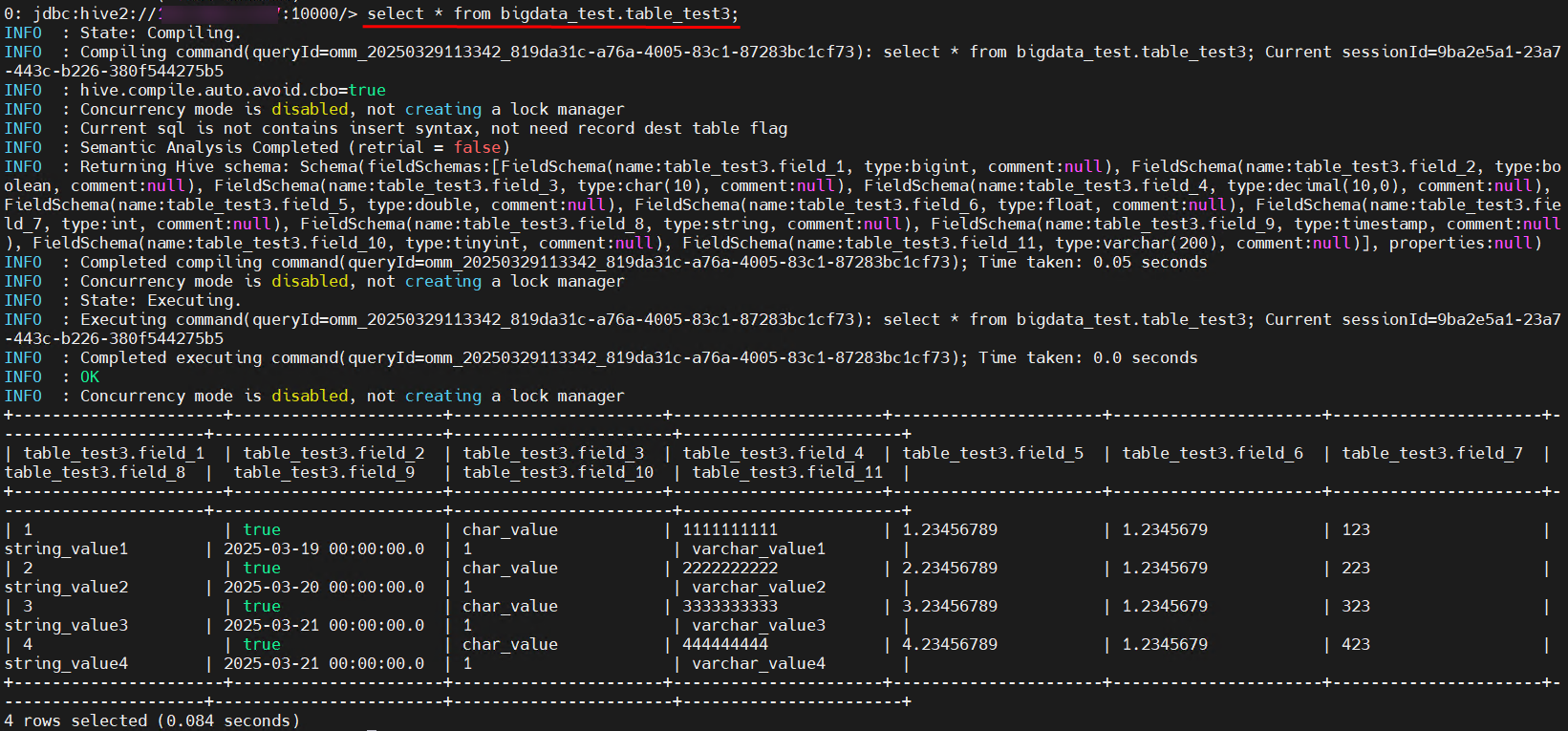

select * from Table name;

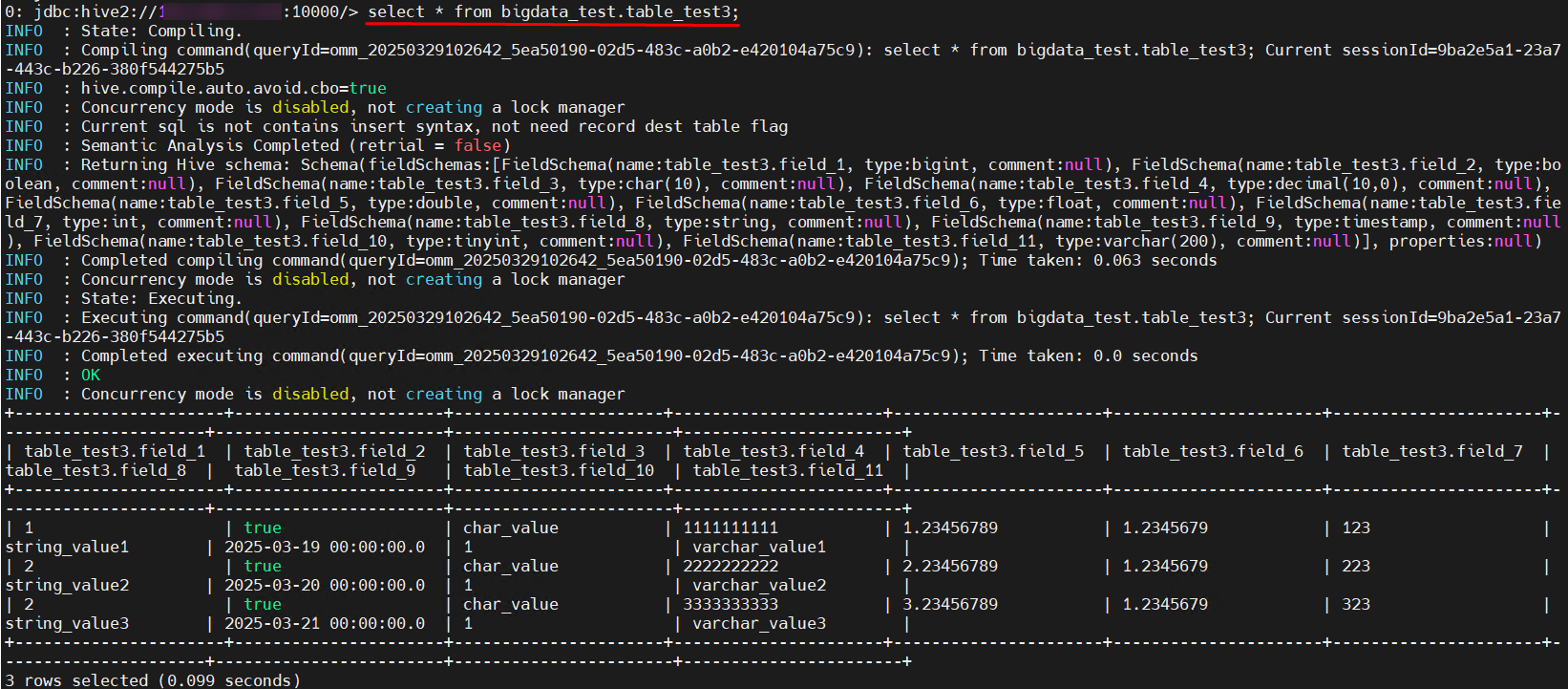

- Non-partitioned table

select * from Table name;
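When a database contains many partitioned tables, the repair statements can be generated and run as one beeline script. The sketch below assumes the Hive database name is the directory name without `.db` (for example, `bigdata_test`). `hive_repair_sql` is a hypothetical helper, and only partitioned tables should be passed to it. The `LIMIT` clause is an addition that keeps the spot check cheap on large tables.

```shell
#!/usr/bin/env bash
# Sketch: build HiveQL that repairs partitions and spot-checks each partitioned table.
# hive_repair_sql is a hypothetical helper; database and table names are placeholders.
hive_repair_sql() {
  local db="$1"; shift
  local t
  for t in "$@"; do
    printf 'MSCK REPAIR TABLE %s.%s;\n' "$db" "$t"
    # LIMIT keeps the spot check cheap on large tables.
    printf 'SELECT * FROM %s.%s LIMIT 10;\n' "$db" "$t"
  done
}

# Usage on the MRS client (after source bigdata_env and, if Kerberos is enabled, kinit):
#   hive_repair_sql bigdata_test part_tbl1 part_tbl2 > /tmp/repair.sql
#   beeline -f /tmp/repair.sql
```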


Migrating Incremental Hive Data Using DistCp

- Create a snapshot for the directory that requires incremental migration in the source cluster.

hdfs dfs -createSnapshot Snapshot directory Snapshot file name

For example, run the following command:

hdfs dfs -createSnapshot hdfs://192.168.1.100:8020/warehouse/tablespace/external/hive/bigdata_test.db snapshot02

- Run the distcp command on the node where the MRS cluster client is installed to migrate incremental data.

hadoop distcp -prbugpcxt -update -delete Path for storing snapshots in the source cluster Data storage path in the destination cluster

For example, run the following command:

hadoop distcp -prbugpcxt -update -delete hdfs://192.168.1.100:8020/warehouse/tablespace/external/hive/bigdata_test.db/.snapshot/snapshot02/ hdfs://192.168.1.200:8020/user/hive/warehouse/bigdata_test.db

Wait until the MapReduce task is complete.

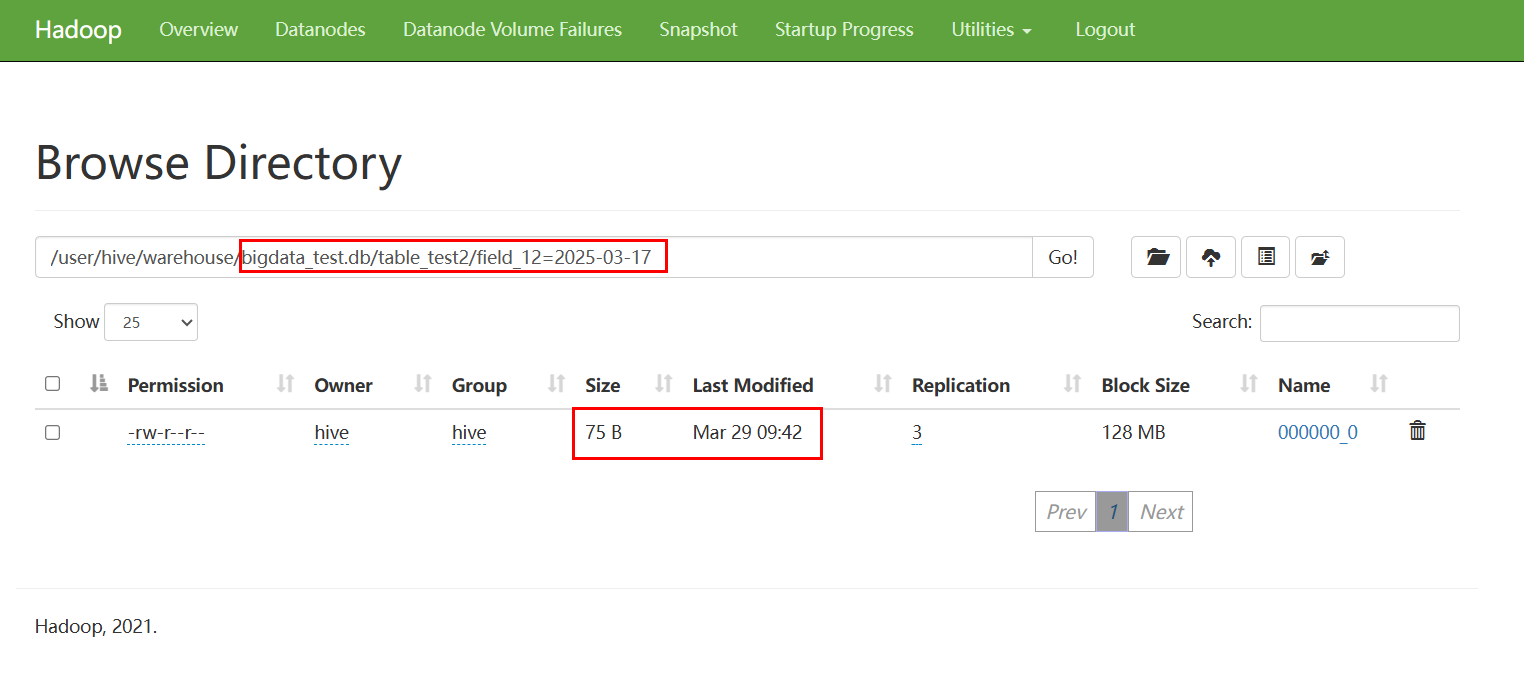
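In the incremental command, -update makes DistCp copy only files that differ from the destination in size, block size, or checksum. -delete removes destination files that no longer exist in the source snapshot, and DistCp accepts it only together with -update or -overwrite. Because -delete also removes destination files that were written directly on the MRS side, check the destination path before running it. The hypothetical helper `incremental_copy` below builds the command from the snapshot directory, the new snapshot name, and the destination. It prints the command unless `DRY_RUN=0`.

```shell
#!/usr/bin/env bash
# Sketch: print (or run) the incremental DistCp command for one snapshot.
# Arguments: source snapshottable dir, new snapshot name, destination dir.
# incremental_copy is a hypothetical helper; DRY_RUN=1 (default) only prints.
incremental_copy() {
  local src_dir="${1%/}" snap="$2" dst="$3"
  # -update copies only changed files; -delete removes destination files
  # that are gone from the snapshot (valid only with -update or -overwrite).
  local cmd=(hadoop distcp -prbugpcxt -update -delete
             "${src_dir}/.snapshot/${snap}/" "$dst")
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```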

- After the migration is complete, use the client or HDFS Web UI of the destination cluster to check whether the files were migrated successfully. Compare the number and total size of files in the destination with those in the source to ensure consistency.

For example, the source file is as follows.

The destination file is as follows.
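The file count and size comparison can be scripted with `hdfs dfs -count`, which prints the columns DIR_COUNT, FILE_COUNT, CONTENT_SIZE, and PATHNAME for a path. The sketch below compares only the file count and byte count of two such lines. `compare_counts` is a hypothetical helper, and the paths in the usage comment are the placeholders from the examples above.

```shell
#!/usr/bin/env bash
# Sketch: compare `hdfs dfs -count` output for the source snapshot and the destination.
# Each input line has the columns DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME.
# compare_counts is a hypothetical helper name.
compare_counts() {
  local src_line="$1" dst_line="$2"
  local s_dirs s_files s_bytes d_dirs d_files d_bytes rest
  read -r s_dirs s_files s_bytes rest <<< "$src_line"
  read -r d_dirs d_files d_bytes rest <<< "$dst_line"
  if [ "$s_files" = "$d_files" ] && [ "$s_bytes" = "$d_bytes" ]; then
    echo "OK: $s_files files, $s_bytes bytes"
  else
    echo "MISMATCH: source $s_files files/$s_bytes bytes, destination $d_files files/$d_bytes bytes"
    return 1
  fi
}

# Usage on the MRS client:
#   src=$(hdfs dfs -count hdfs://192.168.1.100:8020/warehouse/tablespace/external/hive/bigdata_test.db/.snapshot/snapshot02)
#   dst=$(hdfs dfs -count hdfs://192.168.1.200:8020/user/hive/warehouse/bigdata_test.db)
#   compare_counts "$src" "$dst"
```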

- After data migration is complete, use the Hive client in the destination cluster to repair partitions of the partitioned table and check whether the data exists.

- Partitioned table

msck repair table Table name;

select * from Table name;

- Non-partitioned table

select * from Table name;


FAQs About Data Migration Using DistCp

- Version differences

If the Hadoop version of the source cluster matches the MRS cluster in major version (minor versions may differ), you can use the following command to synchronize service data from the source table to the MRS cluster:

hadoop distcp -prbugpcaxt hdfs://Active NameNode IP address:Port/Snapshot path Save path

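If the Hadoop version of the source cluster differs significantly from that of the MRS cluster (for example, a different major version), use the WebHDFS or HFTP protocol instead of HDFS and run one of the following commands. HFTP was removed in Hadoop 3.x, so use WebHDFS if the cluster running DistCp is based on Hadoop 3.x.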

hadoop distcp -prbugpcaxt webhdfs://Active NameNode IP address:Port/Snapshot path Save path

Or

hadoop distcp -prbugpcaxt hftp://Active NameNode IP address:Port/Snapshot path Save path
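The version rule above can be expressed as a small decision: the same major version means hdfs://, and a different major version means webhdfs://. HFTP is left out because it is unavailable in Hadoop 3.x. The sketch below takes the two version strings, which `hadoop version` prints on its first line on each cluster. `source_scheme` is a hypothetical helper. Remember that webhdfs:// uses the NameNode HTTP port, not the RPC port.

```shell
#!/usr/bin/env bash
# Sketch: pick the source URI scheme from the two Hadoop versions, following the
# rule above: same major version -> hdfs, otherwise -> webhdfs.
# Versions are strings such as "3.1.1". source_scheme is a hypothetical helper.
source_scheme() {
  local src_major="${1%%.*}" dst_major="${2%%.*}"
  if [ "$src_major" = "$dst_major" ]; then
    echo "hdfs"
  else
    echo "webhdfs"
  fi
}

# Usage (angle-bracket values are placeholders):
#   scheme=$(source_scheme 2.7.3 3.1.1)   # webhdfs
#   hadoop distcp -prbugpcaxt "${scheme}://<active NameNode IP>:<port>/<snapshot path>" <save path>
```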

- Parameters of the DistCp commands

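- -p[rbugpcaxt]: preserves the specified file attributes during the copy: r (replication factor), b (block size), u (user), g (group), p (permission), c (checksum type), a (ACL), x (XAttr), and t (timestamp).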

- IP address: IP address of the active NameNode in the source cluster.

- Port: The default open-source NameNode RPC port used by hdfs:// is 8020. The default NameNode HTTP port used by WebHDFS and HFTP is 50070 in Hadoop 2.x (9870 in Hadoop 3.x).

- If the source cluster provides a unified domain name for access, you can change Active NameNode IP address:Port to the domain name URL.

- DistCp permission control

When DistCp is used for migration, file or directory copy operations may fail due to insufficient permissions.

Ensure that the user executing the DistCp command has sufficient permissions to read from the source path and write to the destination path. You can use the -p option to preserve the permission information.
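Before starting a long DistCp job, you can check both permissions up front with `hdfs dfs -test -r` (the path exists and is readable) and `hdfs dfs -test -w` (the path exists and is writable), which are available in recent Hadoop releases. The sketch below is hypothetical. `check_distcp_access` is an illustrative helper, and `HDFS_BIN` is an assumed override hook that defaults to the real `hdfs` command. Pass the destination directory, or its parent if the destination does not exist yet.

```shell
#!/usr/bin/env bash
# Sketch: pre-flight permission check before running DistCp.
# HDFS_BIN is a hypothetical override hook; it defaults to the real hdfs CLI.
HDFS_BIN="${HDFS_BIN:-hdfs}"

check_distcp_access() {
  local src="$1" dst_parent="$2" rc=0
  # -test -r succeeds if the path exists and the current user can read it.
  if ! "$HDFS_BIN" dfs -test -r "$src"; then
    echo "cannot read source: $src" >&2
    rc=1
  fi
  # -test -w succeeds if the path exists and the current user can write it.
  if ! "$HDFS_BIN" dfs -test -w "$dst_parent"; then
    echo "cannot write destination: $dst_parent" >&2
    rc=1
  fi
  return "$rc"
}
```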

- Changing the timeout interval in the DistCp command

If some of the files being copied are very large, increase the timeout of the MapReduce tasks that perform the copy.

To do so, set mapreduce.task.timeout (in milliseconds) with the -D option in the DistCp command.

For example, run the following command to change the timeout interval to 30 minutes:

hadoop distcp -Dmapreduce.task.timeout=1800000 hdfs://cluster1/source hdfs://cluster2/target
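Because mapreduce.task.timeout is expressed in milliseconds, 30 minutes corresponds to 30 × 60 × 1000 = 1800000. The hypothetical helper `timeout_opt` below performs that conversion and builds the -D option. Generic -D options are parsed before the DistCp arguments, so place the option first.

```shell
#!/usr/bin/env bash
# Sketch: convert a timeout in minutes to the millisecond value that
# mapreduce.task.timeout expects, and build the matching -D option.
# timeout_opt is a hypothetical helper name.
timeout_opt() {
  local minutes="$1"
  printf '%s\n' "-Dmapreduce.task.timeout=$(( minutes * 60 * 1000 ))"
}

# Usage: the -D option must come before the other DistCp options and paths.
#   hadoop distcp "$(timeout_opt 30)" -prbugpcxt <source path> <destination path>
```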
