Main Entry (HwICSUiSdk)

This section describes the APIs of the web SDK.

| API | Description | Supported by a Third-Party Large Model | Supported by a Non-Third-Party Large Model |
|---|---|---|---|
| activeInteractionMode | Obtains the interaction mode (speech/text Q&A) in use. | × | √ |
| addEventListeners | Callback registration. | √ | √ |
| changeLanguage | Sets the language of an interaction task. | × | √ |
| checkBrowserSupport | Checks the browser compatibility. | √ | √ |
| create | Creates an interaction task. | √ | √ |
| destroy | Destroys an interaction task. | √ | √ |
| getJobInfo | Obtains interaction task information. | √ | √ |
| getLanguageInfo | Obtains language information of an interaction task. | × | √ |
| initResourcePath | Initializes voice wakeup resources. If voice wakeup is not used, ignore this API. | × | √ |
| interactionModeSwitch | Switches the interaction mode (speech/text Q&A). | × | √ |
| interruptSpeaking | Interrupts the virtual avatar's speech. | × | √ |
| muteRemoteAudio | Mutes the virtual avatar. | √ | √ |
| sendDrivenText | Sends the text to be read by the interactive virtual avatar. | × | √ |
| sendTextQuestion | Sends the question text. | × | √ |
| setConfig | Updates a configuration item. | √ | √ |
| setLogLevel | Sets the log level. | √ | √ |
| startChat | Starts a dialog. | × | √ |
| startSpeak | Starts speaking by calling the new API startUserSpeak. | × | √ |
| startUserSpeak | The user starts to ask questions. | × | √ |
| stopChat | Ends a dialog. | × | √ |
| stopSpeak | Stops speaking by calling the new API stopUserSpeak. | × | √ |
| stopUserSpeak | The user stops asking questions. The automatic speech recognition (ASR) stops receiving the user's speech but the dialog is still active. | × | √ |
|  | Unmutes the virtual avatar. | √ | √ |

activeInteractionMode

(static) activeInteractionMode(): Promise<InteractionModeResult>

[Function Description]

Obtains the interaction mode (speech/text Q&A) in use.

[Request Parameters]

None.

[Response Parameters]

Table 2 InteractionModeResult

| Parameter | Type | Description |
|---|---|---|
| result | boolean | Execution result. |
| errorCode | string \| undefined | Error code. See Table 1. |
| errorMsg | string \| undefined | Error message. |
| interactionMode | 'AUDIO' \| 'TEXT' | Interaction mode. |

[Code Examples]

const { result, interactionMode } = await HwICSUiSdk.activeInteractionMode();
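Several APIs in this section resolve to an object that carries result, errorCode, and errorMsg. The following is a minimal sketch of how a caller might turn such an object into a log message. describeFailure is a hypothetical helper, not part of the SDK, and the sample objects (including the error code value) are invented for illustration.

```javascript
// Hypothetical helper, not an SDK API: summarizes a result object whose
// fields follow the response table above (result, errorCode, errorMsg).
function describeFailure(res) {
  if (res.result) {
    return null; // the call succeeded, nothing to report
  }
  // errorCode and errorMsg may be undefined according to the table.
  const code = res.errorCode === undefined ? 'unknown code' : res.errorCode;
  const msg = res.errorMsg === undefined ? 'no message' : res.errorMsg;
  return code + ': ' + msg;
}

// Sample objects invented for illustration; real error codes come from the SDK.
console.log(describeFailure({ result: true, interactionMode: 'AUDIO' }));
console.log(describeFailure({ result: false, errorCode: 'E_SAMPLE', errorMsg: 'sample failure' }));
```

In an application, the argument would be the object resolved by a call such as HwICSUiSdk.activeInteractionMode().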

addEventListeners

(static) addEventListeners(eventMap: EventMap): void

[Function Description]

Registers event callbacks.

[Request Parameters]

| Parameter | Mandatory | Default Value | Type | Description |
|---|---|---|---|---|
| eventMap | Yes | - | EventMap | Event registration map. See Table 4. |

Table 4 EventMap

| Parameter | Mandatory | Default Value | Type | Description |
|---|---|---|---|---|
| error | No | - | (icsError: IcsError) => any | Error event. |
| jobInfoChange | No | - | (jobInfo: JobInfo) => any | Interaction task information change. |
| speakingStart | No | - | () => any | A virtual avatar starts speaking. |
| speakingStop | No | - | () => any | A virtual avatar stops speaking. |
| speechRecognized | No | - | (question: SpeechRecognitionInfo) => any | Speech recognition result. |
| semanticRecognized | No | - | (answer: SemanticRecognitionInfo) => any | Semantic recognition result. |

[Response Parameters]

None

[Code Examples]

HwICSUiSdk.addEventListeners({

error: (icsError) => {

console.error('icsError', icsError);

},

jobInfoChange: (jobInfo) => {

console.info('jobInfoChange', jobInfo);

}

});

changeLanguage

(static) changeLanguage(param: ChangeParam): Promise<UISdkResult>

[Function Description]

Sets the language of an interaction task.

[Request Parameters]

| Parameter | Mandatory | Default Value | Type | Description |
|---|---|---|---|---|
| param | Yes | - | ChangeParam | Language options. For details, see Table 6. |

Table 6 ChangeParam

| Parameter | Mandatory | Default Value | Type | Description |
|---|---|---|---|---|
| langCode | Yes | - | 'zh_CN' \| 'en_US' | Language code, which is obtained from the API getLanguageInfo. |

[Response Parameters]

See Table 22.

[Code Examples]

const { result } = await HwICSUiSdk.changeLanguage({

langCode: 'zh_CN',

});

checkBrowserSupport

(static) checkBrowserSupport(): Promise<boolean>

[Function Description]

Checks whether the SDK is supported by the browser.

[Request Parameters]

None

[Response Parameters]

Promise<boolean>: whether the SDK is supported by the browser

[Code Examples]

const result = await HwICSUiSdk.checkBrowserSupport();

if (result) {

// Supported

} else {

// Not supported

}

create

(static) create(param: CreateParam): Promise<void>

[Function Description]

Creates an intelligent interaction task using the obtained task link and one-off authentication code.

[Request Parameters]

| Parameter | Mandatory | Default Value | Type | Description |
|---|---|---|---|---|
| param | Yes | - | CreateParam | Options for creating the interaction task. For details, see Table 8. |

Table 8 CreateParam

| Parameter | Mandatory | Default Value | Type | Description |
|---|---|---|---|---|
| onceCode | Yes | - | string | One-off authentication code. For details about how to obtain an authentication code, see Creating a One-Off Authentication Code. NOTE: The API CreateOnceCode must be called from the backend and cannot be called directly from the browser. Otherwise, cross-domain problems may occur. |
| serverAddress | Yes | - | string | IP address of the intelligent interaction server. The values of different regions are as follows: |
| robotId | No | - | string | Intelligent interaction activity ID, which is the value of robot_id in the URL specified by taskUrl. For example, if the URL is https://metastudio-api.cn-north-4.myhuaweicloud.com/icswebclient?robot_id=a1b2c3d4e5f6, the robot ID is a1b2c3d4e5f6. Note: Specify robotId, taskUrl, or both. |
| taskUrl | No | - | string | URL of the virtual avatar interaction task page created on the MetaStudio console. For details about how to obtain the URL, see Creating an Interactive Virtual Avatar. Note: Specify robotId, taskUrl, or both. |
| containerId | Yes | - | string | ID of the DOM node in which the SDK renders the interaction UI. |
| config | No | - | ConfigMap | Configuration information. See Table 18. |
| eventListeners | No | - | EventMap | Event registration map. See Table 4. This parameter is mandatory for intelligent interaction that uses a third-party application. |
| logLevel | No | info | string | Log level. For the available values, see setLogLevel. |

[Response Parameters]

None

[Code Examples]

HwICSUiSdk.create({

serverAddress: 'serverAddress',

onceCode: 'onceCode',

robotId: 'robotId',

containerId: 'ics-root',

logLevel: 'debug',

config: {

enableCaption: true,

enableChatBtn: false

},

eventListeners: {

error: (error) => {

console.error('sdk error', {

message: error.message,

code: error.code,

}, error);

}

}

});
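The robotId description above states that the ID is the value of robot_id in the task URL. The following is a minimal sketch of that extraction using the standard URL class. robotIdFromTaskUrl is a hypothetical helper name, not part of the SDK; the sample URL is the one given in the parameter table.

```javascript
// Hypothetical helper, not an SDK API: reads the robot_id query parameter
// from a task URL. new URL() throws a TypeError if taskUrl is not a valid URL.
function robotIdFromTaskUrl(taskUrl) {
  const value = new URL(taskUrl).searchParams.get('robot_id');
  // searchParams.get returns null when the parameter is absent.
  return value === null || value === '' ? undefined : value;
}

const sampleUrl =
  'https://metastudio-api.cn-north-4.myhuaweicloud.com/icswebclient?robot_id=a1b2c3d4e5f6';
console.log(robotIdFromTaskUrl(sampleUrl));
```

Because create accepts robotId, taskUrl, or both, this extraction is only needed when the application wants the ID for its own purposes, such as logging.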

destroy

(static) destroy(): Promise<void>

[Function Description]

Destroys an interaction task.

[Request Parameters]

None

[Response Parameters]

None

[Code Examples]

HwICSUiSdk.destroy();

getJobInfo

(static) getJobInfo(): Promise<JobInfo>

[Function Description]

Obtains interaction task information.

[Request Parameters]

None

[Response Parameters]

| Parameter | Type | Description |
|---|---|---|
| jobId | string | Task ID. |
| websocketAddr | string \| undefined | WebSocket address of the intelligent interaction server. This address is used to assemble a WebSocket connection address when the virtual avatar is equipped with a third-party large model. NOTICE: By default, the returned address does not contain the prefix wss://. You need to add the prefix. For example, if the returned content is metastudio-api.cn-north-4.myhuaweicloud.com:443, the address should be wss://metastudio-api.cn-north-4.myhuaweicloud.com:443. |

[Code Examples]

const jobInfo = await HwICSUiSdk.getJobInfo();
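The NOTICE above says that websocketAddr is returned without the wss:// prefix. The following is a minimal sketch of adding it. toWssAddress is a hypothetical helper name, not part of the SDK, and leaving an already prefixed value unchanged is an assumption made for safety.

```javascript
// Hypothetical helper, not an SDK API: prepends wss:// to the address
// returned in JobInfo.websocketAddr when the prefix is missing.
function toWssAddress(websocketAddr) {
  if (typeof websocketAddr !== 'string' || websocketAddr.length === 0) {
    return undefined; // websocketAddr may be undefined according to the table
  }
  // Assumption: a value that already starts with wss:// is left unchanged.
  return /^wss:\/\//i.test(websocketAddr) ? websocketAddr : 'wss://' + websocketAddr;
}

console.log(toWssAddress('metastudio-api.cn-north-4.myhuaweicloud.com:443'));
```

In an application, the argument would be jobInfo.websocketAddr from the getJobInfo call shown above.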

getLanguageInfo

(static) getLanguageInfo(): Promise<LanguageResult>

[Function Description]

Obtains language information of an interaction task. To use the multilingual capability, create an intelligent interaction page in the backend and set its languages. Chinese and English are currently supported.

[Request Parameters]

None

[Response Parameters]

| Parameter | Type | Description |
|---|---|---|
| languageList |  | Language list. |
| currentLanguage | 'zh_CN' \| 'en_US' | Language of the current interaction task. |
| result | boolean | Execution result. |
| errorCode | string \| undefined | Error code. See Table 1. |
| errorMsg | string \| undefined | Error message. |

| Parameter | Type | Description |
|---|---|---|
| language | 'zh_CN' \| 'en_US' | Language. |
| language_desc | string | Language description. |
| isDefault | boolean | Whether it is the default language. |

[Code Examples]

const languageResult = await HwICSUiSdk.getLanguageInfo();
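The language code passed to changeLanguage is obtained from getLanguageInfo. The following is a minimal sketch of choosing a code from the result. pickLangCode is a hypothetical helper, not part of the SDK, and it assumes that each languageList entry carries the language and isDefault fields listed in the second table above. The sample result object is invented for illustration.

```javascript
// Hypothetical helper, not an SDK API: returns the preferred code if the task
// offers it, otherwise the default language, otherwise the current language.
// Assumption: languageList entries have `language` and `isDefault` fields.
function pickLangCode(languageResult, preferred) {
  const list = Array.isArray(languageResult.languageList)
    ? languageResult.languageList
    : [];
  if (list.some((item) => item.language === preferred)) {
    return preferred;
  }
  const fallback = list.find((item) => item.isDefault);
  return fallback ? fallback.language : languageResult.currentLanguage;
}

// Sample result invented for illustration.
const sampleResult = {
  result: true,
  currentLanguage: 'zh_CN',
  languageList: [
    { language: 'zh_CN', language_desc: 'Chinese', isDefault: true },
    { language: 'en_US', language_desc: 'English', isDefault: false },
  ],
};
console.log(pickLangCode(sampleResult, 'en_US'));
```

The returned code could then be passed as langCode to changeLanguage.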

initResourcePath

(static) initResourcePath(param: ResourcePath): Promise<void>

[Function Description]

Initializes voice wakeup resources. If voice wakeup is not used, ignore this API.

The web SDK package later than 3.0.1 contains the following two resource files, which are needed only when voice wakeup is used:

- wasmData.js: voice wakeup algorithm resource file

- modelData.js: voice wakeup model resource file

The SDK has a preset wakeup model that allows customizing wakeup phrases. You can update the model to a local path. For details, see Web Voice Wakeup.

[Request Parameters]

| Parameter | Mandatory | Default Value | Type | Description |
|---|---|---|---|---|
| wasmPath | Yes | 'wasmData.js' | string | Relative or absolute path of the wakeup algorithm resource file. |
| dataPath | Yes | 'modelData.js' | string | Relative or absolute path of the wakeup model resource file. |
| initModel | No | true | boolean | Whether to initialize the wakeup model when this API is called. It takes 2 to 3 seconds to load the wakeup model before initialization. During this period, no operations can be performed. Therefore, you need to select a proper time point for model initialization: |

[Response Parameters]

None.

[Code Examples]

await HwICSUiSdk.initResourcePath({

wasmPath: 'wasmData.js',

dataPath: 'modelData.js',

initModel: true,

});

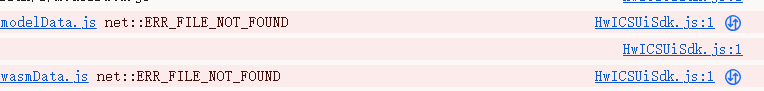

Note: If wasmPath and dataPath are not set or are incorrectly set, error messages as shown in the following figure will be reported.

In this case, voice wakeup is not available. You need to set the paths correctly.

interactionModeSwitch

(static) interactionModeSwitch(param: InteractionModeParam): Promise<InteractionModeResult>

[Function Description]

Switches the interaction mode (speech/text Q&A).

[Request Parameters]

| Parameter | Mandatory | Default Value | Type | Description |
|---|---|---|---|---|
| interactionMode | No | AUDIO | 'AUDIO' \| 'TEXT' | Interaction mode, which can be speech or text interaction. |

[Response Parameters]

See Table 2.

[Code Examples]

const { result } = await HwICSUiSdk.interactionModeSwitch({ interactionMode: 'AUDIO' });

interruptSpeaking

(static) interruptSpeaking(): Promise<UISdkResult>

[Function Description]

Interrupts the virtual avatar's speech.

[Request Parameters]

None

[Response Parameters]

See Table 22.

[Code Examples]

const { result } = await HwICSUiSdk.interruptSpeaking();

muteRemoteAudio

(static) muteRemoteAudio(): Promise<boolean>

[Function Description]

Mutes the virtual avatar.

[Request Parameters]

None

[Response Parameters]

Promise<boolean>: whether the virtual avatar has been muted

[Code Examples]

const result = await HwICSUiSdk.muteRemoteAudio();

sendDrivenText

(static) sendDrivenText(param: TextDrivenParam): Promise<ChatResult>

[Function Description]

Sends the text to be read by the interactive virtual avatar.

Pass isLast: true in the final call when active script reading ends. Otherwise, the speakingStop notification is not delivered when the virtual avatar finishes reading.

[Request Parameters]

| Parameter | Mandatory | Default Value | Type | Description |
|---|---|---|---|---|
| text | Yes | "" | string | Text to be read by the interactive virtual avatar. |
| isLast | No | false | boolean | Whether it is the last piece of text. |

[Response Parameters]

See Table 21.

[Code Examples]

const { result } = await HwICSUiSdk.sendDrivenText({ text: 'Hello', isLast: true });
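When a script is sent in several pieces, only the final call should carry isLast: true, as described above. The following is a minimal sketch that prepares the parameter objects for such a sequence. toDrivenTextParams is a hypothetical helper, not part of the SDK, and the sample sentences are invented.

```javascript
// Hypothetical helper, not an SDK API: maps text chunks to sendDrivenText
// parameters, marking only the final chunk with isLast: true.
function toDrivenTextParams(chunks) {
  return chunks.map((text, index) => ({
    text,
    isLast: index === chunks.length - 1,
  }));
}

const params = toDrivenTextParams(['Welcome.', 'Here is today\'s agenda.', 'Thank you.']);
console.log(params);

// Usage sketch (needs the SDK and an active interaction task):
// for (const param of params) {
//   await HwICSUiSdk.sendDrivenText(param);
// }
```

Building the parameters up front keeps the isLast rule in one place, so the sending loop does not need to track which piece is the final one.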

sendTextQuestion

(static) sendTextQuestion(param: TextQuestionParam): Promise<TextQuestionResult>

[Function Description]

Sends the question text.

[Request Parameters]

| Parameter | Mandatory | Default Value | Type | Description |
|---|---|---|---|---|
| text | Yes | - | string | Question text. |

[Response Parameters]

| Parameter | Type | Description |
|---|---|---|
| result | boolean | Execution result. |
| errorCode | string \| undefined | Error code. See Table 1. |
| errorMsg | string \| undefined | Error message. |
| chatId | string | Dialog ID. |

[Code Examples]

const { result } = await HwICSUiSdk.sendTextQuestion({ text: 'Who are you?' });

setConfig

(static) setConfig(config: ConfigMap): void

[Function Description]

Updates configuration items, for example, whether to display subtitles or the button for interaction.

[Request Parameters]

| Parameter | Mandatory | Default Value | Type | Description |
|---|---|---|---|---|
| config | Yes | - | ConfigMap | Configuration information. See Table 18. |

Table 18 ConfigMap

| Parameter | Mandatory | Default Value | Type | Description |
|---|---|---|---|---|
| enableCaption | No | false | boolean | Whether to display subtitles. |
| enableChatBtn | No | false | boolean | Whether to display the button for interaction. |
| enableHotIssues | No | false | boolean | Whether to display frequently asked questions. |
| enableWeakErrorInfo | No | true | boolean | Whether to display weak prompts, for example, when a WebSocket exception occurs in the SDK. |
| enableBusinessTrack | No | true | boolean | Whether to report SDK tracking data. |
| enableJobCache | No | true | boolean | Whether to enable the task cache. Disable the cache if task configuration changes must take effect immediately. |
| useDefaultBackground | No | true | boolean | Whether to use the default background image. |
| enableLocalWakeup | No | false | boolean | Whether to enable voice wakeup. To enable it, first call the API initResourcePath to initialize the wakeup resource path, and then call the API create to create a virtual avatar. |
| firstCreateLocalStream | No | false | boolean | Whether to create a local stream first. Use this option when the virtual avatar's voice volume is low on some Android devices in receiver mode. |
| enableMediaViewer | No | true | boolean | Whether to enable image preview. You can click an image in the default dialog list to zoom in on the image. If this function is not required, set the value to false (disabled). |

[Response Parameters]

None

[Code Examples]

HwICSUiSdk.setConfig({

enableCaption: true,

enableChatBtn: false,

enableHotIssues: false,

enableWeakErrorInfo: true,

});

setLogLevel

(static) setLogLevel(logLevel: 'debug' | 'info' | 'warn' | 'error' | 'none'): void

[Function Description]

Sets the output log level.

[Request Parameters]

| Parameter | Mandatory | Default Value | Type | Description |
|---|---|---|---|---|
| logLevel | Yes | info | 'debug' \| 'info' \| 'warn' \| 'error' \| 'none' | Log level. |

[Response Parameters]

None

[Code Examples]

HwICSUiSdk.setLogLevel('warn');

startChat

(static) startChat(param?: ChatParam): Promise<ChatResult>

[Function Description]

Starts a dialog.

[Request Parameters]

| Parameter | Mandatory | Default Value | Type | Description |
|---|---|---|---|---|
| interactionMode | No | AUDIO | 'AUDIO' \| 'TEXT' | Interaction mode, which can be speech or text interaction. |

[Response Parameters]

Table 21 ChatResult

| Parameter | Type | Description |
|---|---|---|
| result | boolean | Execution result. |
| errorCode | string \| undefined | Error code. See Table 1. |
| errorMsg | string \| undefined | Error message. |
| chatId | string | Dialog ID. |

[Code Examples]

const { result } = await HwICSUiSdk.startChat({ interactionMode: 'AUDIO' });

startSpeak

(static) startSpeak(): Promise<UISdkResult>

[Function Description]

Starts speaking by calling the new API startUserSpeak.

[Request Parameters]

None

[Response Parameters]

See Table 22.

[Code Examples]

const { result } = await HwICSUiSdk.startSpeak();

startUserSpeak

(static) startUserSpeak(): Promise<UISdkResult>

[Function Description]

The user starts to ask questions.

[Request Parameters]

None

[Response Parameters]

Table 22 UISdkResult

| Parameter | Type | Description |
|---|---|---|
| result | boolean | Execution result. |
| errorCode | string \| undefined | Error code. See Table 1. |
| errorMsg | string \| undefined | Error message. |

[Code Examples]

const { result } = await HwICSUiSdk.startUserSpeak();

stopChat

(static) stopChat(): Promise<ChatResult>

[Function Description]

Ends a dialog.

[Request Parameters]

None

[Response Parameters]

See Table 21.

[Code Examples]

const { result } = await HwICSUiSdk.stopChat();

stopSpeak

(static) stopSpeak(): Promise<UISdkResult>

[Function Description]

Stops speaking by calling the new API stopUserSpeak.

[Request Parameters]

None

[Response Parameters]

See Table 22.

[Code Examples]

const { result } = await HwICSUiSdk.stopSpeak();

stopUserSpeak

(static) stopUserSpeak(): Promise<UISdkResult>

[Function Description]

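The user stops asking questions. The automatic speech recognition (ASR) stops receiving the user's speech but the dialog is still active.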

[Request Parameters]

None

[Response Parameters]

See Table 22.

[Code Examples]

const { result } = await HwICSUiSdk.stopUserSpeak();
