MLOps Overview

What Is MLOps?

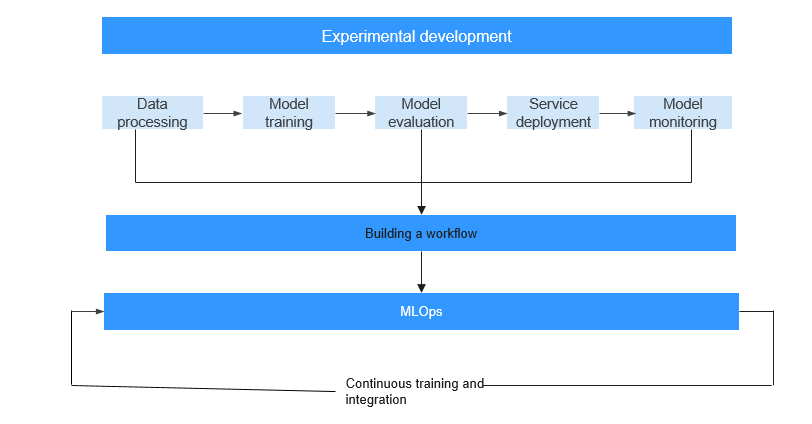

Machine Learning Operations (MLOps) is a set of practices that combines machine learning (ML) with DevOps. As ML matures, the goal is not only to achieve breakthroughs in academic research but also to apply these technologies systematically in real-world scenarios. However, there is a significant gap between academic research and practical implementation. In academic research, an AI algorithm is developed for a specific dataset (a public dataset or a scenario-specific one), and it is iterated and optimized against that dataset alone. Scenario-oriented AI development, by contrast, involves building both the models and the entire system around them. It is therefore natural to bring DevOps, a proven practice in software system development, into AI development. However, traditional DevOps cannot cover the entire development process of an AI system.

DevOps

Development and Operations (DevOps) is a set of processes, approaches, and systems that facilitate communication, collaboration, and integration among the software development, O&M, and quality assurance (QA) departments. It is a proven approach in large-scale software system development. DevOps not only speeds up interaction and iteration between the business and development sides, but also resolves the conflict between development and O&M: development pursues speed, while O&M requires stability. This is the root conflict between the two.

Similar conflicts arise when AI applications are put into production. Developing AI applications requires algorithm knowledge as well as fast, efficient algorithm iteration. In contrast, professional O&M personnel pursue stability, security, and reliability, and their expertise differs considerably from that of algorithm engineers. To assure service quality, O&M personnel would need to understand the designs and reasoning of the algorithm engineers, which is difficult for them. As a result, algorithm engineers end up taking end-to-end responsibility, which drives up labor costs. This approach is workable when only a few models are in use, but when AI applications are deployed at scale, manpower becomes a bottleneck.

MLOps Functions

The ML development process consists of project design, data engineering, model building, and model deployment. AI development is not a one-way pipeline job. Engineers run multiple rounds of experiments, each informed by the data and model results of the previous round. To improve model results, algorithm engineers apply various data processing and model optimization techniques based on the features and labels of existing datasets. Traditional AI development ends with a one-off delivery of the final model produced by this iterative experimentation. After an application is released, however, model drift sets in over time: as new data and features arrive, the existing model performs progressively worse. MLOps captures the iterative experimentation as a fixed pipeline that contains the data engineering steps, model algorithms, and training configurations. You can use this pipeline to retrain the model continuously on newly generated data, which keeps the AI application built from the pipeline in an optimal state.
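The idea of a fixed, re-runnable pipeline that retrains on drift can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the API of any MLOps product: the names `Pipeline`, `TrainingConfig`, `clean`, `train`, and `drift_score` are hypothetical, the "model" simply predicts the mean of its training data, and drift is measured as the shift in the mean of a single feature in units of the reference standard deviation (one simple drift check among many).

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import List, Optional


@dataclass
class TrainingConfig:
    # Assumed policy: retrain when the feature mean shifts by more than
    # this many reference standard deviations.
    drift_threshold: float = 0.5


def clean(batch: List[Optional[float]]) -> List[float]:
    """Data engineering step (hypothetical): drop missing values."""
    return [x for x in batch if x is not None]


def train(data: List[float]) -> float:
    """Toy training step: the 'model' just predicts the training mean."""
    return mean(data)


def drift_score(reference: List[float], current: List[float]) -> float:
    """Shift of the current mean, in units of the reference std deviation."""
    std = pstdev(reference) or 1.0  # avoid division by zero on constant data
    return abs(mean(current) - mean(reference)) / std


class Pipeline:
    """Fixed pipeline: the same cleaning, training, and config on every run."""

    def __init__(self, initial_data: List[Optional[float]], config: TrainingConfig):
        self.config = config
        self.reference = clean(initial_data)
        self.model = train(self.reference)
        self.version = 1

    def run(self, new_batch: List[Optional[float]]) -> bool:
        """Process a new batch; retrain and return True if drift is detected."""
        batch = clean(new_batch)
        if drift_score(self.reference, batch) > self.config.drift_threshold:
            self.reference = batch
            self.model = train(batch)
            self.version += 1
            return True
        return False


if __name__ == "__main__":
    pipeline = Pipeline([1.0, 2.0, 3.0, None], TrainingConfig())
    print(pipeline.run([2.1, 1.9, 2.0]))  # small shift: no retraining
    print(pipeline.run([5.0, 6.0, 7.0]))  # large shift: retrain
    print(pipeline.version, pipeline.model)
```

Because the data engineering step, training step, and configuration are fixed in code, every new batch goes through the same process, and retraining happens only when the drift check fires.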

Implementing the complete MLOps workflow, from algorithm development through service delivery and O&M, requires dedicated tooling. Traditionally, development and delivery were separate processes: algorithm engineers built models and handed them over to downstream system engineers, yet remained heavily involved throughout, which is unlike MLOps. In addition, each enterprise has its own general rules for delivery and collaboration. For project management, AI projects therefore also need workflow management, because the tool must not only build and manage pipelines but also act as a job management system.

An MLOps tool must support the following features:

- Process analysis: A library of accumulated industry sample pipelines helps you quickly design AI projects and processes.

- Process definition and redefinition: You can use pipelines to quickly define AI projects and design workflows for model training and for releasing models for inference.

- Resource allocation: You can use account management to allocate resource quotas and permissions to participants (including developers and O&M personnel) in the pipeline and view resource usage.

- Task orchestration: Sub-tasks can be organized by sub-pipeline, and notifications can be enabled to support efficient management and collaboration.

- Process quality and efficiency evaluation: Pipeline execution views are provided, with checkpoints at phases such as data evaluation, model evaluation, and performance evaluation, so that AI project managers can easily view the quality and efficiency of each pipeline run.

- Process optimization: In each pipeline iteration, you can define custom core metrics and identify the affected data and the underlying causes. This lets you quickly decide on the next iteration based on these metrics.
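Phase checkpoints and custom core metrics can be illustrated with a small Python sketch. The names `Checkpoint`, `ExecutionView`, and `evaluate_run`, as well as the metric names and thresholds in the example, are hypothetical and do not correspond to any particular product's API. The sketch also assumes every metric is "higher is better"; a latency metric, for example, would need an inverted comparison.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Checkpoint:
    phase: str      # e.g. "data evaluation", "model evaluation"
    metric: str     # hypothetical, user-defined core metric name
    minimum: float  # assumed pass threshold for the metric


@dataclass
class ExecutionView:
    """Per-run summary a project manager could inspect."""
    results: List[dict] = field(default_factory=list)

    def passed(self) -> bool:
        return all(r["passed"] for r in self.results)

    def failures(self) -> List[str]:
        return [r["phase"] for r in self.results if not r["passed"]]


def evaluate_run(metrics: Dict[str, float], checkpoints: List[Checkpoint]) -> ExecutionView:
    """Check one pipeline run's metrics against every phase checkpoint."""
    view = ExecutionView()
    for cp in checkpoints:
        value = metrics.get(cp.metric, float("nan"))
        # Comparisons with NaN are False, so a missing metric counts as a failure.
        ok = value >= cp.minimum
        view.results.append(
            {"phase": cp.phase, "metric": cp.metric, "value": value, "passed": ok}
        )
    return view


if __name__ == "__main__":
    checkpoints = [
        Checkpoint("data evaluation", "label_coverage", 0.95),
        Checkpoint("model evaluation", "accuracy", 0.90),
        Checkpoint("performance evaluation", "throughput_qps", 100.0),
    ]
    run = {"label_coverage": 0.98, "accuracy": 0.87, "throughput_qps": 150.0}
    view = evaluate_run(run, checkpoints)
    print(view.passed(), view.failures())
```

The list returned by `failures()` names the phase to revisit first, which is the kind of signal an execution view gives a project manager when deciding on the next iteration.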
