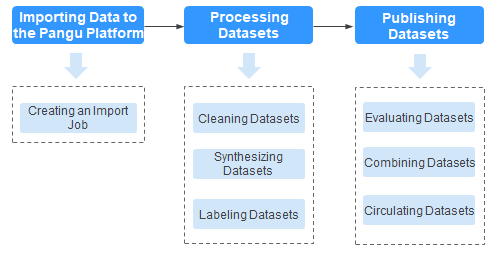

Process of Using Data Engineering

High-quality data is the foundation for the continuous iteration and optimization of large models. Data quality directly determines a model's performance, generalization capability, and adaptability to different application scenarios. Only through systematic preparation and processing can valuable information be extracted from data to better support model training. Data acquisition, cleaning, labeling, evaluation, and publishing are therefore indispensable steps in data development.

For details about the data engineering process, see Figure 1 and Table 1.

Before using the pre-labeling functions of LLM operators for data processing, data synthesis, and data labeling, ensure that an NLP model has been deployed.

| Procedure | Step | Description |
|---|---|---|
| Importing data to the Pangu platform | Creating an import task | Import data stored in OBS or local data into the platform for centralized management, facilitating subsequent processing or publishing. |
| Processing datasets | Processing datasets | Use dedicated processing operators to preprocess data, ensuring it meets the model training standards and service requirements. Different types of datasets utilize operators specially designed for removing noise and redundant information, to enhance data quality. |
| Processing datasets | Generating a synthetic dataset | Using either a preset or custom data instruction, process the original data, and generate new data based on a specified number of epochs. This process can extend the dataset to some extent and enhance the diversity and generalization capability of the trained model. |
| Processing datasets | Labeling datasets | Add accurate labels to unlabeled datasets to ensure high-quality data required for model training. The platform supports both manual annotation and AI pre-annotation. You can choose an appropriate annotation method based on your needs. The quality of data labeling directly impacts the training effectiveness and accuracy of the model. |
| Processing datasets | Combining datasets based on a specific ratio | Dataset combination involves combining multiple datasets based on a specific ratio and generating a processed dataset. A proper ratio ensures the diversity, balance, and representativeness of datasets and avoids issues resulting from uneven data distribution. |
| Publishing datasets | Evaluating datasets | The platform offers predefined evaluation standards for multiple types of data. You can choose from these predefined standards or customize evaluation standards as needed to precisely improve data quality, ensure that data meets high standards, and enhance model performance. |
| Publishing datasets | Publishing datasets | Data publishing refers to publishing a dataset in a specific format as a published dataset for subsequent model training operations. Datasets can be published in standard or Pangu format. |
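
To make the "Combining datasets based on a specific ratio" step more concrete, the following Python sketch shows one way such a mix could be computed offline. It is only an illustration under stated assumptions, not the Pangu platform's implementation: the function `combine_by_ratio`, its parameters (`datasets`, `ratios`, `total`, `seed`), and the sample dataset names `qa` and `code` are all hypothetical. It assumes each dataset is an in-memory list of records and samples without replacement.

```python
import random


def combine_by_ratio(datasets, ratios, total, seed=0):
    """Sample from each dataset so the mix approximates the given ratios.

    datasets: dict mapping a dataset name to a list of records.
    ratios:   dict mapping the same names to non-negative weights.
    total:    target number of records in the combined dataset.

    Quotas are rounded per dataset, so the result can differ from
    `total` by a few records when the ratios do not divide evenly.
    """
    if set(datasets) != set(ratios):
        raise ValueError("datasets and ratios must have the same keys")
    weight_sum = sum(ratios.values())
    if weight_sum <= 0:
        raise ValueError("ratios must sum to a positive value")

    rng = random.Random(seed)  # fixed seed keeps the mix reproducible
    combined = []
    for name, records in datasets.items():
        quota = round(total * ratios[name] / weight_sum)
        if quota > len(records):
            raise ValueError(f"{name!r} has too few records for its quota")
        # Sample without replacement so no record is duplicated.
        combined.extend(rng.sample(records, quota))
    rng.shuffle(combined)  # avoid grouping records by source dataset
    return combined


if __name__ == "__main__":
    # Hypothetical example data: two datasets mixed at a 3:1 ratio.
    datasets = {
        "qa": [f"qa-{i}" for i in range(100)],
        "code": [f"code-{i}" for i in range(100)],
    }
    mixed = combine_by_ratio(datasets, {"qa": 3, "code": 1}, total=40)
    print(len(mixed), sum(r.startswith("qa-") for r in mixed))  # 40 30
```

Raising an error when a dataset is too small for its quota, rather than silently oversampling, keeps an uneven distribution visible instead of hiding it in the combined output.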
