Ingesting ServiceStage Containerized Application Logs to LTS

LTS collects log data from ServiceStage containerized applications. By processing massive volumes of logs efficiently, securely, and in real time, LTS provides insights that help you optimize the availability and performance of cloud services and applications. It also supports real-time decision-making, device O&M, and service trend analysis.

Follow these steps to complete the ingestion configuration:

Step 1: Select a Log Stream: Store various log types in separate log streams for better categorization and management.

Step 2: Check Dependencies: The system automatically checks whether the dependencies meet the requirements.

Step 3: (Optional) Select a Host Group: Define the range of hosts for log ingestion. Host groups are virtual groups of hosts. They help you organize and categorize hosts, making it easier to configure log ingestion for multiple hosts simultaneously. You can add one or more hosts whose logs are to be collected to a single host group, and associate it with the same ingestion configuration.

Step 4: Configure the Collection: Configure the log collection details, including collection paths and policies.

Step 5: Configure Indexing: An index is a storage structure used to query log data. Configuring indexing makes log searches and analysis faster and easier.

Step 6: Complete the Ingestion Configuration: After a log ingestion configuration is created, manage it in the ingestion list.

Setting Multiple Ingestion Configurations in a Batch: Select this mode to collect logs from multiple scenarios.

Prerequisites

- A ServiceStage application has been created. For details, see Creating an Application.

- A ServiceStage environment has been created. For details, see Creating an Environment.

- A ServiceStage component has been created. For details, see Creating and Deploying a Component.

- A log group and a log stream have been created. For details, see Managing Log Groups and Managing Log Streams.

- ICAgent has been installed in the cluster hosted by ServiceStage. For details, see Managing ICAgent in Container Scenarios.

(You are advised to install ICAgent before configuring ServiceStage log ingestion. This avoids automatic checks and repairs during configuration, saving time.)

- Add the CCE cluster with ICAgent installed to a host group of the custom identifier type. For details, see Creating a Host Group (Custom Identifier).

- Before configuring log ingestion, ensure that ICAgent collection is enabled by referring to Setting LTS Log Collection Quota and Usage Alarms.

- This function is enabled by default. If you do not need to collect logs, disable this function to reduce resource usage.

- After this function is disabled, ICAgent will stop collecting logs, and the log collection function on the AOM console will also be disabled.

Constraints

- CCE cluster nodes whose container engine is Docker are supported.

- CCE cluster nodes whose container engine is containerd are supported. You must be using ICAgent 5.12.130 or later.

- To collect logs from container log directories that are mounted to host directories, you must configure the node file path.

- Constraints on the Docker storage driver: Currently, container file log collection supports only the overlay2 storage driver. devicemapper cannot be used as the storage driver. Run the following command to check the storage driver type:

docker info | grep "Storage Driver"
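The engine, version, and storage driver constraints above can be pre-checked in a script before you configure ingestion. The following is a minimal sketch, not an LTS or ICAgent tool: all function names are hypothetical, the ICAgent version is assumed to be a dotted numeric string such as 5.12.130, and the parser only looks for the Storage Driver line that docker info prints.

```python
import re
import subprocess
from typing import List, Optional, Tuple


def read_docker_info() -> str:
    """Run `docker info` on the node and return its text output."""
    result = subprocess.run(
        ["docker", "info"], capture_output=True, text=True, check=True
    )
    return result.stdout


def parse_storage_driver(docker_info_text: str) -> Optional[str]:
    """Return the value of the 'Storage Driver:' line, or None if absent."""
    match = re.search(
        r"^\s*Storage Driver:\s*(\S+)", docker_info_text, re.MULTILINE
    )
    return match.group(1) if match else None


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a dotted version string such as '5.12.130' into a comparable tuple."""
    return tuple(int(part) for part in version.strip().split("."))


def version_at_least(installed: str, required: str) -> bool:
    """Compare two dotted versions numerically, component by component."""
    return parse_version(installed) >= parse_version(required)


def check_node(
    engine: str, icagent_version: str, storage_driver: Optional[str] = None
) -> List[str]:
    """Return a list of constraint violations; an empty list means none found."""
    problems: List[str] = []
    if engine == "containerd":
        # containerd nodes need ICAgent 5.12.130 or later.
        if not version_at_least(icagent_version, "5.12.130"):
            problems.append("containerd requires ICAgent 5.12.130 or later")
    elif engine == "docker":
        # Container file log collection supports only the overlay2 driver.
        if storage_driver != "overlay2":
            problems.append(
                "container file log collection requires the overlay2 storage driver"
            )
    else:
        problems.append("container engine is not covered by the listed constraints")
    return problems
```

On a Docker node, you could pass `parse_storage_driver(read_docker_info())` as the `storage_driver` argument of `check_node` and review the returned messages.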

Step 1: Select a Log Stream

- Log in to the LTS console.

- In the navigation pane, choose Log Ingestion > Ingestion Center. On the displayed page, select Cloud services under Types. Hover the cursor over the ServiceStage - Containerized Application Logs card and click Ingest Log (LTS) to access the configuration page.

Alternatively, choose Log Ingestion > Ingestion Management in the navigation pane. Click Create. On the displayed page, select Cloud services under Types. Hover the cursor over the ServiceStage - Containerized Application Logs card and click Ingest Log (LTS) to access the configuration page.

- In the Select Log Stream step, set the following parameters:

- Select a ServiceStage application and ServiceStage environment.

- Select a log group from the Log Group drop-down list. If there are no desired log groups, click Create Log Group to create one.

- Select a log stream from the Log Stream drop-down list. If there are no desired log streams, click Create Log Stream to create one.

- Click Next: Check Dependencies.

Step 2: Check Dependencies

- The system automatically checks whether there is a host group with the custom identifier k8s-log-Application ID.

If not, click Auto Correct.

- Auto Correct: Configure dependencies with one click.

- Check Again: Recheck dependencies.

- Click Next: (Optional) Select Host Group.

Step 3: (Optional) Select a Host Group

- In the host group list, the host group to which the cluster belongs is selected by default. You can also select host groups as required.

- Click Next: Configurations.

Step 4: Configure the Collection

Collection configuration items include the log collection scope, collection mode, and format processing. Configure them as follows.

- Collection Configuration Name: Enter 1 to 64 characters. Only letters, digits, hyphens (-), underscores (_), and periods (.) are allowed. Do not start with a period or underscore, or end with a period.

- Data Source: Select a data source type and configure it. The following data source types are supported: container standard output, container file, node file, and Kubernetes event.

Table 1 Collection configuration parameters

Type

Description

Container standard output

Collects stderr and stdout logs of a specified container in the cluster. At least one of Container Standard Output (stdout) and Container Standard Error (stderr) must be enabled.

- If you enable Container Standard Error (stderr), select your collection destination path: Collect standard output and standard error to different files (stdout.log and stderr.log) or Collect standard output and standard error to the same file (stdout.log).

- The standard output of the matched container is collected to the specified log stream and is no longer sent to AOM.

- Allow Repeated File Collection (not available to Windows)

After you enable this function, one host log file can be collected to multiple log streams. This function is available only to certain ICAgent versions. For details, see Checking the ICAgent Version.

After you disable this function, each collection path must be unique. That is, the same log file in the same host cannot be collected to different log streams.

Container file

Collects file logs of a specified container in the cluster.

- Collection Paths: Specify the paths from which LTS will collect logs. For details, see 2.

If a container mount path has been configured for the CCE cluster workload, the paths added for this field are invalid. The collection paths take effect only after the mount path is deleted.

- You can verify collection paths to ensure that logs can be properly collected. Click use path verification, enter the collection paths and absolute paths of the log files, and click OK. You can add up to 30 collection paths. If the paths are correct, a success message will be displayed.

- Allow Repeated File Collection (not available to Windows)

After you enable this function, one host log file can be collected to multiple log streams. This function is available only to certain ICAgent versions. For details, see Checking the ICAgent Version.

After you disable this function, each collection path must be unique. That is, the same log file in the same host cannot be collected to different log streams.

- Set Collection Filters: Blacklisted directories or files will not be collected. If you specify a directory, all files in the directory are filtered out.

Node file

Collects files of a specified node in a cluster.

- Collection Paths: Specify the paths from which LTS will collect logs.

- Allow Repeated File Collection (not available to Windows)

After you enable this function, one host log file can be collected to multiple log streams. This function is available only to certain ICAgent versions. For details, see Checking the ICAgent Version.

After you disable this function, each collection path must be unique. That is, the same log file in the same host cannot be collected to different log streams.

- You can verify collection paths to ensure that logs can be properly collected. Click use path verification, enter the collection paths and absolute paths of the log files, and click OK. You can add up to 30 collection paths. If the paths are correct, a success message will be displayed.

- Set Collection Filters: Blacklisted directories or files will not be collected. If you specify a directory, all files in the directory are filtered out.

Kubernetes event

Collects event logs of the Kubernetes cluster. No parameters are required. This feature is supported on ICAgent 5.12.150 and later.

Kubernetes events of a Kubernetes cluster can be collected to only one log stream.
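With Allow Repeated File Collection disabled, the same log file on the same host cannot be collected to more than one log stream. The following minimal sketch shows how that uniqueness rule could be checked offline against a list of planned collections. The `PlannedCollection` record and its field names are hypothetical and do not correspond to any LTS API object, and paths are compared as literal strings, so wildcard paths are not expanded.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass(frozen=True)
class PlannedCollection:
    """One planned (host, file path) -> log stream mapping (hypothetical)."""
    host: str
    path: str
    log_stream: str


def find_repeated_collections(
    plans: List[PlannedCollection],
) -> Dict[Tuple[str, str], Set[str]]:
    """Return every (host, path) pair that targets more than one log stream."""
    streams_by_file: Dict[Tuple[str, str], Set[str]] = defaultdict(set)
    for plan in plans:
        streams_by_file[(plan.host, plan.path)].add(plan.log_stream)
    return {
        key: streams
        for key, streams in streams_by_file.items()
        if len(streams) > 1
    }


plans = [
    PlannedCollection("node-1", "/var/log/app/app.log", "stream-a"),
    PlannedCollection("node-1", "/var/log/app/app.log", "stream-b"),
    PlannedCollection("node-2", "/var/log/app/app.log", "stream-a"),
]
for (host, path), streams in find_repeated_collections(plans).items():
    print(f"{host}:{path} is planned for several log streams: {sorted(streams)}")
```

Any pair reported here would need either a single target log stream or Allow Repeated File Collection enabled.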

- If you select Container standard output or Container file as the data source type, set the ServiceStage matching rule by selecting the corresponding component from the drop-down list.

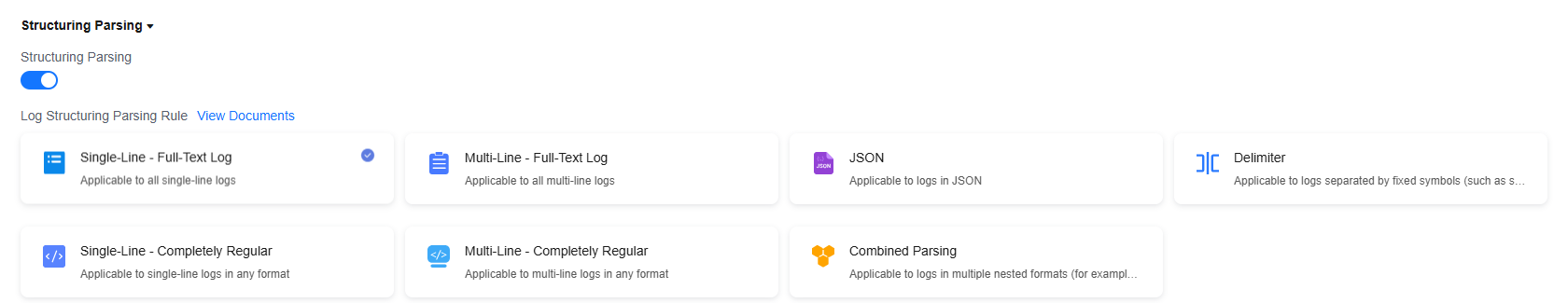

- Structuring Parsing:

LTS offers various log parsing rules, including Single-Line - Full-Text Log, Multi-Line - Full-Text Log, JSON, Delimiter, Single-Line - Completely Regular, Multi-Line - Completely Regular, and Combined Parsing. Select a parsing rule that matches your log content. Once collected, structured logs are sent to your specified log stream, enabling field searching.

- If you enable Structuring Parsing, configure it by referring to Configuring ICAgent Structuring Parsing.

Figure 1 ICAgent structuring parsing configuration

- If you disable Structuring Parsing, log data will not be structured. Raw logs will be sent to the specified log stream, allowing only keyword-based searches.

- Other: After setting the collection paths, you can also set log splitting, binary file collection, and custom metadata.

- Configure the log format and time by referring to Table 3. If Structuring Parsing is enabled, these parameters are unavailable.

Table 3 Log collection settings

Parameter

Description

Log Format

- Single-line: Each log line is displayed as a single log event.

- Multi-line: Multiple lines of exception logs can be displayed as a single log event and each line of regular logs is displayed as a log event. This is helpful when you check logs to locate problems.

Log Time

System time: The log collection time is used by default and is displayed at the beginning of each log event.

- Log collection time is the time when logs are collected and sent by ICAgent to LTS.

- Log printing time is the time when logs are printed. ICAgent collects and sends logs to LTS every second.

- Restriction on log collection time: Only logs whose time falls within 24 hours before or after the system time are collected.

Time wildcard: You can set a time wildcard so that ICAgent will look for the log printing time as the beginning of a log event.

- If the time format in a log event is 2019-01-01 23:59:59.011, the time wildcard should be set to YYYY-MM-DD hh:mm:ss.SSS.

- If the time format in a log event is 19-1-1 23:59:59.011, the time wildcard should be set to YY-M-D hh:mm:ss.SSS. If a log event does not contain year information, ICAgent regards it as printed in the current year.

Example:

YY - year (19)
YYYY - year (2019)
M - month (1)
MM - month (01)
D - day (1)
DD - day (01)
hh - hours (23)
mm - minutes (59)
ss - seconds (59)
SSS - millisecond (999)
hpm - hours (03PM)
h:mmpm - hours:minutes (03:04PM)
h:mm:sspm - hours:minutes:seconds (03:04:05PM)
hh:mm:ss ZZZZ (16:05:06 +0100)
hh:mm:ss ZZZ (16:05:06 CET)
hh:mm:ss ZZ (16:05:06 +01:00)

Log Segmentation

This parameter needs to be specified if the Log Format is set to Multi-line. By generation time indicates that a time wildcard is used to detect log boundaries, whereas By regular expression indicates that a regular expression is used.

By regular expression

You can set a regular expression to look for a specific pattern to indicate the beginning of a log event. This parameter needs to be specified when you select Multi-line for Log Format and By regular expression for Log Segmentation.

ICAgent supports only RE2 regular expressions. For details, see Syntax.
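Both Log Segmentation modes come down to recognizing the line that starts a new log event. The sketch below illustrates the idea outside ICAgent. The helper `wildcard_to_regex` is hypothetical and translates only a small subset of the time wildcard tokens (YYYY, YY, MM, M, DD, D, hh, mm, ss, SSS) into a regular expression, and `split_events` groups lines into events. It uses Python's re module rather than RE2, so treat it as an approximation of ICAgent behavior, not a reproduction of it.

```python
import re
from typing import Iterable, List

# Subset of the time wildcard tokens. Longer tokens come first so that
# "YYYY" is tried before "YY", "MM" before "M", and "DD" before "D".
_TOKEN_PATTERNS = [
    ("YYYY", r"\d{4}"),
    ("SSS", r"\d{3}"),
    ("YY", r"\d{2}"),
    ("MM", r"\d{2}"),
    ("DD", r"\d{2}"),
    ("hh", r"\d{2}"),
    ("mm", r"\d{2}"),
    ("ss", r"\d{2}"),
    ("M", r"\d{1,2}"),
    ("D", r"\d{1,2}"),
]


def wildcard_to_regex(wildcard: str) -> str:
    """Translate a time wildcard into a regex; other characters stay literal."""
    parts: List[str] = []
    i = 0
    while i < len(wildcard):
        for token, pattern in _TOKEN_PATTERNS:
            if wildcard.startswith(token, i):
                parts.append(pattern)
                i += len(token)
                break
        else:
            # Not a known token: match the character literally.
            parts.append(re.escape(wildcard[i]))
            i += 1
    return "".join(parts)


def split_events(lines: Iterable[str], start_pattern: str) -> List[str]:
    """Group lines into events; a line matching start_pattern opens a new event."""
    start = re.compile(start_pattern)
    events: List[str] = []
    current: List[str] = []
    for line in lines:
        if start.match(line) and current:
            events.append("\n".join(current))
            current = []
        # Lines that do not match are appended to the event in progress.
        current.append(line)
    if current:
        events.append("\n".join(current))
    return events


lines = [
    "2019-01-01 23:59:59.011 ERROR request failed",
    "Traceback (most recent call last):",
    '  File "app.py", line 1, in <module>',
    "2019-01-01 23:59:59.500 INFO recovered",
]
# "By generation time": the boundary pattern is derived from the time wildcard.
by_time = split_events(lines, wildcard_to_regex("YYYY-MM-DD hh:mm:ss.SSS"))
# "By regular expression": the boundary pattern is written by hand.
by_regex = split_events(lines, r"\d{4}-\d{2}-\d{2} ")
```

In this sample both modes group the three lines of the exception into one event and keep the final line as a second event. The hand-written pattern uses only digit classes and counted repetition, which RE2 also supports.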

- Click Next: Index Settings.

Step 5: Configure Indexing

An index is a storage structure used to query log data. Configuring indexing makes log searches and analysis faster and easier. Different index settings generate different query and analysis results. Configure index settings to fit your service requirements.

- If you do not want to query or analyze logs using specific fields, you can skip configuring indexing when configuring log ingestion. This will not affect log collection. You can also configure indexing after creating the log ingestion configuration. However, index settings will only apply to newly ingested logs. For details, see Configuring Log Indexing. If you choose to skip this step, retain the default settings on the Index Settings page and click Skip and Submit. The message "Logs ingested" will appear.

- To query or analyze logs using specific fields, configure indexing on the Index Settings page when creating an ingestion configuration. For details, see Configuring Log Indexing.

On this page, click Auto Configure to have LTS generate index fields based on the first log event in the last 15 minutes or common system reserved fields (such as hostIP, hostName, and pathFile), and manually add structured fields. After completing the settings, click Submit. The message "Logs ingested" will appear. You can also adjust the index settings after the ingestion configuration is created. However, the changes will only affect newly ingested logs.

Step 6: Complete the Ingestion Configuration

- After an ingestion configuration is created, it is displayed in the ingestion list. Click its name to view its details.

- Click Modify in the Operation column to modify the ingestion configuration.

You can quickly navigate and modify settings by clicking Select Log Stream, (Optional) Select Host Group, Configurations, or Index Settings in the navigation tree at the top of the page. After making your modifications, click Submit to save the changes. This operation is available for all log ingestion modes except CCE logs, ServiceStage containerized application logs, and self-built Kubernetes cluster logs.

- Click More > Configure Tag in the Operation column to add a tag.

- Click More > Copy in the Operation column to copy the ingestion configuration.

- Click Delete in the Operation column to delete the ingestion configuration.

Deleting an ingestion configuration may lead to log collection failures, potentially resulting in service exceptions related to user logs. In addition, the deleted ingestion configuration cannot be restored. Exercise caution when performing this operation.

- To stop log collection of an ingestion configuration, toggle off the switch in the Ingestion Configuration column to disable the configuration. To restart log collection, toggle on the switch in the Ingestion Configuration column.

Disabling an ingestion configuration may lead to log collection failures, potentially resulting in service exceptions related to user logs. Exercise caution when performing this operation.

- Click More > ICAgent Collect Diagnosis in the Operation column of the ingestion configuration to monitor the exceptions, overall status, and collection status of ICAgent. If this function is not displayed, enable ICAgent diagnosis by referring to Setting ICAgent Collection.

Setting Multiple Ingestion Configurations in a Batch

You can set multiple ingestion configurations for multiple scenarios in a batch, avoiding repetitive setups.

- On the Ingestion Management page, click Batch Create to go to the configuration details page.

- Ingestion Type: Select ServiceStage - Containerized Application Logs.

- Rule List:

- Enter the number of ingestion configurations in the text box and click Add.

- Enter a rule name under Configuration Items on the right. You can also double-click the name of the ingestion configuration on the left to replace it with a custom name after setting the configuration items. A rule name can contain 1 to 64 characters, including only letters, digits, hyphens (-), underscores (_), and periods (.). It cannot start with a period or underscore or end with a period.

- To copy an ingestion configuration, move the cursor to it and click the copy icon.

- To delete an ingestion configuration, move the cursor to it and click the delete icon. In the displayed dialog box, click Yes.

- Configuration Items:

- The ingestion configurations are displayed on the left. You can add up to 99 more configurations.

- The ingestion configuration items are displayed on the right. Set them by referring to Step 4: Configure the Collection.

- After an ingestion configuration is complete, you can click Apply to Other Configurations to copy its settings to other configurations.

- Click Check Parameters. After the check is successful, click Submit.

The added ingestion configurations will be displayed on the Ingestion Management page after the batch creation is successful.

- (Optional) Perform the following operations on ingestion configurations:

- Select multiple existing ingestion configurations and click Edit. On the displayed page, select an ingestion type to modify the corresponding ingestion configurations.

- Select multiple disabled ingestion configurations, click Enable/Disable Ingestion Configuration, and select Enable to enable them in a batch.

- Select multiple enabled ingestion configurations, click Enable/Disable Ingestion Configuration, and select Disable. Logs will not be collected for disabled ingestion configurations. Exercise caution when disabling these configurations.

- Select multiple existing ingestion configurations and click Delete.
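When you prepare many rule names for a batch, the naming rule stated above (which also applies to Collection Configuration Name in Step 4) can be checked before you type the names into the console. The following is a minimal sketch. The function name is hypothetical, and "letters" is assumed to mean ASCII letters, which the source does not state explicitly.

```python
import re

# 1 to 64 characters drawn from letters, digits, hyphens, underscores, periods.
# Assumption: "letters" means ASCII letters only.
_ALLOWED = re.compile(r"[A-Za-z0-9._-]{1,64}")


def is_valid_config_name(name: str) -> bool:
    """Check a name against the length, character, and start/end rules."""
    if not _ALLOWED.fullmatch(name):
        return False
    if name[0] in "._":
        # Must not start with a period or an underscore.
        return False
    # Must not end with a period.
    return not name.endswith(".")


candidates = ["servicestage-app.log_01", "_hidden", "trailing.", "has space"]
valid_names = [name for name in candidates if is_valid_config_name(name)]
```

Here `valid_names` keeps only the first candidate; the others break the start, end, or character rule.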

Helpful Links

- After logs are ingested to LTS, you can use the search and analysis functions of LTS to quickly gain insights into log data. For details, see Log Search and Analysis (SQL Analysis Offline Soon).

- You can use the log alarm function of LTS to set alarm rules to notify you of exceptions in the ingested logs. For details, see Configuring Log Alarm Rules.

- If you have any questions when installing ICAgent, see Host Management in the FAQ.

- If you have any questions when configuring log ingestion, see Log Ingestion in the FAQ.

- You can call APIs to create, query, and delete log ingestion configurations. For details, see Log Ingestion.

- For recommended approaches to data collection, see Log Ingestion in the Best Practices.

- To collect logs across clouds or regions, see Collecting Host Logs from Third-Party Clouds, Internet Data Centers, and Other Huawei Cloud Regions to LTS.

- To collect data from multiple channels, see Collecting Logs from Multiple Channels to LTS.
