Error netty.exception.RemoteTransportException Is Reported During Flink Job Runtime

Symptom

The error message "netty.exception.RemoteTransportException" is displayed during Flink job runtime.

Possible Causes

- Possible cause 1:

Full GC occurs at the heap memory level.

- Based on the error information in the logs, we can locate the node where the faulty TaskManager is.

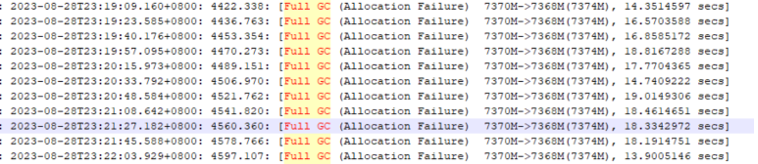

- Check whether a large number of full GCs occur or the GC duration is too long in the GC logs of the node. As shown in the following figure, each GC takes more than 10s.

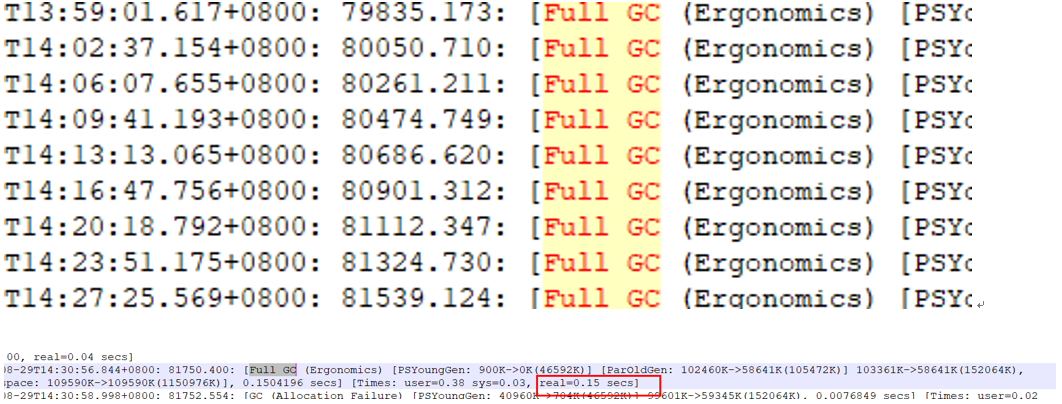

Note: It is normal that Full GC occurs in the first several TaskManager startups. It is also normal that the full GC interval is long and the full GC duration is short. As shown in the following figure, the interval is at the hour level, and the interval does not exceed 1s.

- Possible cause 2:

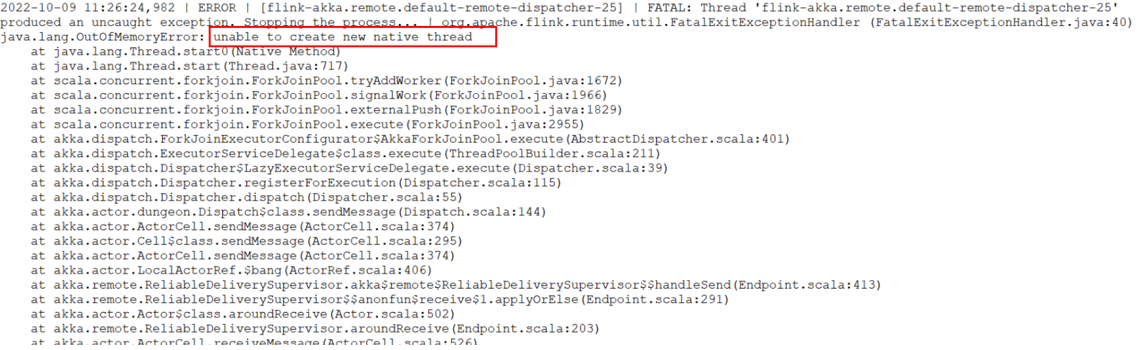

A thread-level memory leak (java.lang.OutOfMemoryError: unable to create new native thread) occurs on the service side.

- You can locate the node where the faulty TaskManager is deployed based on the logs of the abnormal job.

- The logs of the abnormal TaskManager node contain the error message unable to create new native thread type.

- This error indicates that the abnormal TaskManager node cannot create new threads. As a result, the TaskManager node on the current node is blocked.

- Possible Cause 3

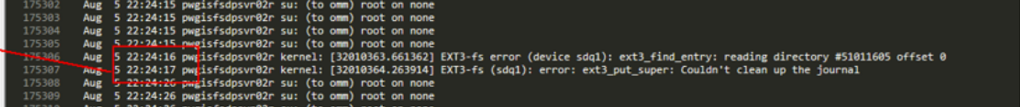

The hardware of NodeManager is faulty.

- You can locate the node where the faulty TaskManager is deployed based on the logs of the abnormal job.

- No exception information is found in the TaskManager log.

- A large number of disk exceptions are found in the OS logs of the NodeManager node where TaskManager is.

- Check whether the yarn-start-stop.log file of the NodeManager process is restarted.

In addition to the preceding error information, if the disk of the NodeManager node is full, the NodeManager process restarts and all containers fail. In this case, the Flink job also fails.

Solution

- Solution for Cause 1

- Increase the startup memory of the TaskManager on the service side.

- If backpressure exists on the service side, increase the overall service concurrency to eliminate backpressure.

If backpressure does not exist, ask the service side to check the code for memory leakage. It is recommended that automatic restart be enabled. For example, set restart-strategy: failure-rate in the flink-conf.xml file.

- Solution for Cause 2

- Check whether the OS configuration of the node is improper.

- Determine whether memory leakage occurred based on the method of determining whether thread leakage occurred in Flink jobs. (Use jstack.)

- Solution for Cause 3

- Rectify the disk fault.

- Enable automatic restart. For example, set restart-strategy to failure-rate in the flink-conf.xml file.

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.See the reply and handling status in My Cloud VOC.

For any further questions, feel free to contact us through the chatbot.

Chatbot