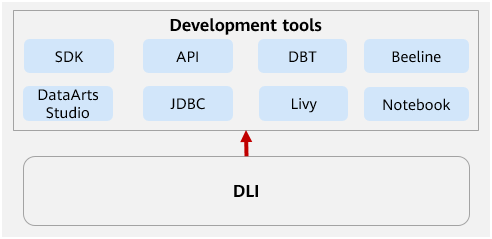

Development Tools Supported by DLI

DLI supports multiple client connection methods to meet different user needs and scenarios. This section describes each connection method that DLI supports and when to use it.

| Connection Method | Description | Supported Job Type | Application Scenario | Helpful Link |
|---|---|---|---|---|
| DLI management console | On the DLI management console, you can manage and use DLI visually, including creating elastic resource pools, adding or managing queues, submitting jobs, and monitoring job execution statuses. |  | Offers a simple, intuitive graphical user interface (GUI), ideal for users unfamiliar with command-line operations. | For details about how to use the DLI console, see DLI Job Development Process. |
| DLI API | DLI offers a wide range of APIs that allow you to programmatically manage and use DLI, including creating queues, submitting jobs, and querying job statuses. |  | Ideal for users with basic programming skills who want to automate management and operations through APIs. | For details, see Data Lake Insight API Reference. |
| DLI SDK | DLI offers SDKs in various languages (such as Python and Java), enabling you to integrate DLI into your own applications and implement more complex business logic. |  | Offers more functions and greater flexibility, ideal for users who need deep integration of DLI within their applications. | You are advised to use DLI SDK V2. For details about how to use DLI SDKs, see Data Lake Insight SDK Reference. |
| Using JDBC | DLI supports JDBC connections. You can use JDBC tools (such as DBeaver and SQuirreL SQL) to connect to DLI and execute SQL statements for data query and analysis. | SQL job | Standard JDBC APIs are supported, ensuring high compatibility. |  |
| Submitting a DLI job using DataArts Studio | DLI is deeply integrated with the Huawei Cloud DataArts Studio console. You can use DataArts Studio for one-stop data development and analytics, including data integration, development, and governance. |  | Provides a one-stop data development and analysis platform that streamlines data processing and analysis and improves development efficiency. |  |
| Submitting a Spark job using a notebook instance | Notebook instances offer a web-based interactive development environment where you can write code and develop jobs at the same time, enabling flexible data analysis and exploration. | Spark job | Ideal for scenarios that require immediate viewing of data processing and analysis results so that code can be adjusted and optimized quickly, such as during the model training phase. |  |
| Submitting a Spark job using Livy | Submit Spark jobs to DLI through the open-source Apache Livy. | Spark job | Livy offers reliable REST APIs, ideal for submitting and managing Spark jobs in production environments. |  |
| DBT | Data Build Tool (DBT) is an open-source data modeling and transformation tool that runs in Python environments. | SQL job | After connecting DBT to DLI, you can define and execute SQL transformations, supporting data lifecycle management from integration to analysis. Suitable for large-scale data analysis projects and complex data analysis scenarios. | Configuring DBT to Connect to DLI for Data Scheduling and Analysis |
| Beeline | Beeline is a JDBC-based command-line SQL client commonly used by data analysts and data engineers. | SQL job | Suitable for large-scale data processing scenarios. Beeline lets you run data query, analysis, and management tasks in SQL. | Configuring Beeline to Connect to DLI Using Kyuubi for Data Query and Analysis |
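
As one illustration of the Livy method listed in the table, the following minimal sketch builds the HTTP request that Apache Livy's batch endpoint (`POST /batches`) expects. The Livy server URL, the OBS file path, the class name, and the job name are hypothetical placeholders. How the Livy server is deployed and configured to forward jobs to DLI is not covered here. The request is only constructed, not sent.

```python
import json
import urllib.request


def build_livy_batch(file_uri, class_name=None, args=None, conf=None, name=None):
    """Build the JSON body for Livy's POST /batches endpoint.

    Only "file" is required by Livy; the other fields are optional.
    """
    payload = {"file": file_uri}
    if class_name:
        payload["className"] = class_name
    if args:
        payload["args"] = list(args)
    if conf:
        payload["conf"] = dict(conf)
    if name:
        payload["name"] = name
    return payload


def build_submit_request(livy_url, payload):
    """Create (but do not send) the HTTP request that submits the batch."""
    return urllib.request.Request(
        url=livy_url.rstrip("/") + "/batches",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical values, for illustration only.
payload = build_livy_batch(
    file_uri="obs://example-bucket/jars/spark-examples.jar",
    class_name="org.apache.spark.examples.SparkPi",
    args=["10"],
    name="spark-pi-demo",
)
request = build_submit_request("http://livy.example.internal:8998", payload)

# Sending the request requires a reachable Livy server:
# with urllib.request.urlopen(request) as response:
#     batch = json.load(response)  # includes the batch "id" and "state"
```

Keeping payload construction separate from sending makes the request easy to inspect or log before it reaches a production Livy server.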
