Parallel OBS Data Export

Overview

- The CN only plans data export tasks and delivers the tasks to DNs for execution. In this case, the CN is released to process external requests.

- Every DN is involved in data export, and the computing capabilities and bandwidths of all the DNs are fully leveraged to export data.

- You can concurrently export data using multiple OBS services, but the bucket and object paths specified for the export tasks must be different and cannot be null.

- The OBS server connects to DWS cluster nodes. The export rate is affected by the network bandwidth.

- The text, CSV, ORC, and Parquet formats are supported. The size of data in a single row must be less than 1 GB.

- To ensure the correctness of data import or export, you need to import or export data from OBS in the same compatibility mode.

For example, data imported or exported in MySQL compatibility mode can be exported or imported only in MySQL compatibility mode.

- Method 1: You do not need to create a server. Use the default server to create a foreign table. Data in TXT or CSV format is supported. For details, see Exporting CSV/TXT Data to OBS.

- Method 2: Create a server and use it to create a foreign table. ORC and Parquet data formats are supported. For details, see Exporting ORC and Parquet Data to OBS. Only 9.1.0 and later versions support data in Parquet format.

- Ensure that no Chinese characters are contained in paths used for importing data to or exporting data from OBS.

- Data cannot be imported to or exported from OBS across regions. Ensure that OBS and the DWS cluster are in the same region.

- To ensure the correctness of data import or export, you need to import or export data from OBS in the same compatibility mode.

If data is imported or exported in MySQL compatibility mode, it can only be exported or imported in the same mode.

Related Concepts

- Source data file: a text, CSV, ORC, or Parquet file that stores data.

- OBS: a cloud storage service used to store unstructured data, such as documents, images, and videos. Data objects concurrently exported from DWS are stored on the OBS server.

- Bucket: a container storing objects on OBS.

- Object storage is a flat storage mode. Layered file system structures are not needed because all objects in buckets are at the same logical layer.

- In OBS, each bucket name must be unique and cannot be changed. A default access control list (ACL) is created with a bucket. Each item in the ACL contains permissions granted to certain users, such as READ, WRITE, and FULL_CONTROL. Only authorized users can perform bucket operations, such as creating, deleting, viewing, and setting ACLs for buckets.

- A user can create a maximum of 100 buckets. The total data size and the number of objects and files in each bucket are not limited.

- Object: a basic data storage unit in OBS. Data uploaded by users is stored in OBS buckets as objects. Object attributes include Key, Metadata, and Data.

Generally, objects are managed as files. However, OBS has no file system–related concepts, such as files or folders. To let users easily manage data, OBS allows them to simulate folders. Users can add a slash (/) in the object name, for example, tpcds1000/stock.csv. In this name, tpcds1000 is regarded as the folder name and stock.csv the file name. The value of key (object name) is still tpcds1000/stock.csv, and the content of the object is the content of the stock.csv file.

- Key: name of an object. It is a UTF-8 character sequence containing 1 to 1024 characters. A key value must be unique in a bucket. Users can name the objects they stored or obtained as Bucket name+Object name.

- Metadata: object metadata, which contains information about the object. There are system metadata and user metadata. The metadata is uploaded to OBS as key-value pairs together with HTTP headers.

- System metadata is generated by OBS and used for processing object data. System metadata includes Date, Content-length, last-modify, and Content-MD5.

- User metadata contains object descriptions specified by users for uploading objects.

- Data: object content, which is regarded by OBS as stateless binary data.

- Foreign table: A foreign table is used to identify data in a source data file. It stores information, such as the location, format, destination location, encoding format, and data delimiter of a source data file.

Principles

The following describes the principles of exporting data from a cluster to OBS by using a distributed hash table or a replication table.

- Distributed hash table: the table for which DISTRIBUTE BY HASH (Column_Name) is specified in the table creation statement.

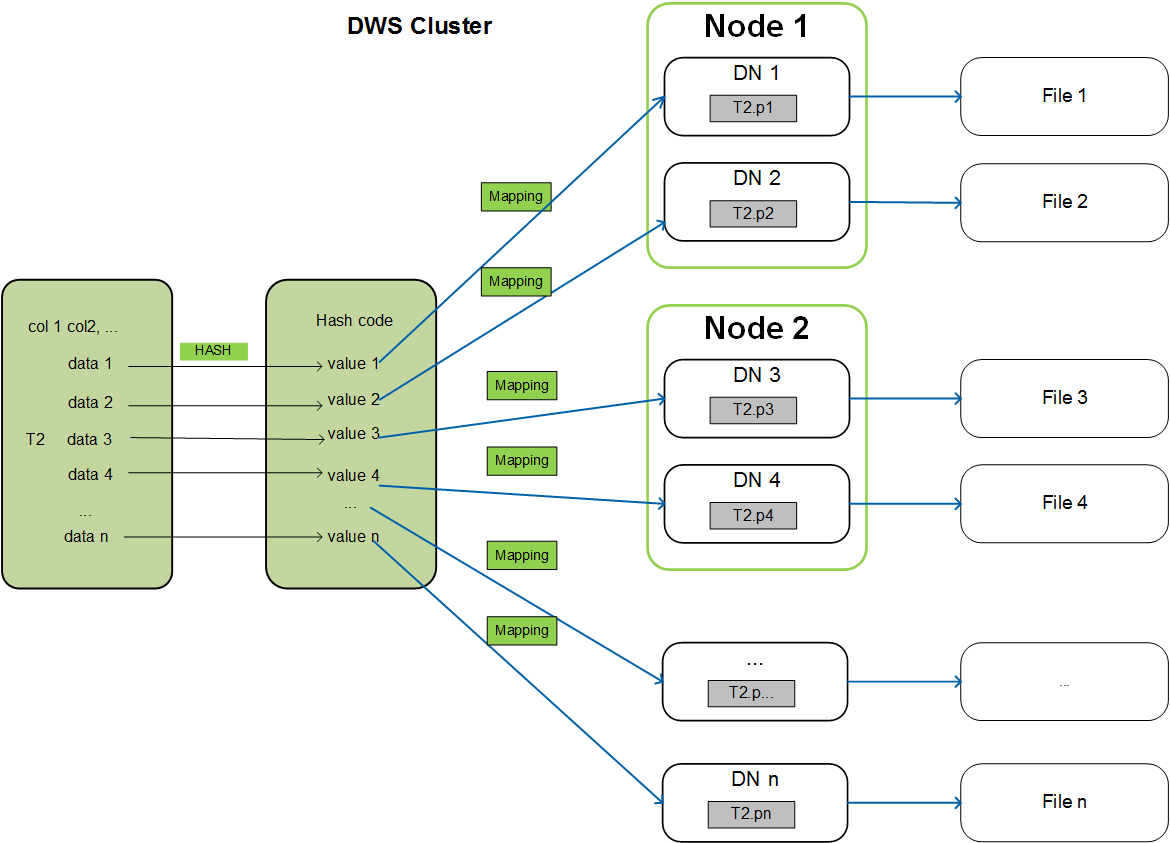

A distributed hash table stores data in hash mode. Figure 1 shows how to export data from table (T2) to OBS as an example.

During table data storage, the col2 hash column in table T2 is hashed, and a hash value is generated. The tuple is distributed to corresponding DNs for storage according to the mapping between the DNs and the hash value.

When data is exported to OBS, DNs that store the exported data of T2 directly export their data files to OBS. Original data on multiple nodes will be exported in parallel.

- Replication table: the table for which DISTRIBUTE BY REPLICATION is specified in the table creation statement.

A replication table stores a package of complete table data on each DWS node. When exporting data to OBS, DWS randomly selects a DN for export.

Naming Rules of Exported Files

Rules for naming the files exported from DWS to OBS are as follows:

- Data exported from DNs is stored on OBS in segment format. The file is named as Table name_Node name_segment.n. n is a natural number starting from 0, for example, 0, 1, 2, 3.

For example, the data of table t1 on datanode3 will be exported as t1_datanode3_segment.0, t1_datanode3_segment.1, and so on.

You are advised to export data from different clusters or databases to different OBS buckets or different paths of the same OBS bucket.

- Each segment can store a maximum of 1 GB data, with no tuples sliced. If data stored in a segment exceeds 1 GB, the excess data will be stored in the second segment.

A segment has already stored 100 pieces of tuples (1023 MB) when datanode3 exports data from t1 to OBS. If a 5 MB tuple is inserted to the segment, the data size becomes 1028 MB. In this case, file t1_datanode3_segment.0 (1023 MB) is generated and stored on OBS, and the new tuple is stored on OBS as file t1_datanode3_segment.1.

- When data is exported from a distributed hash table, the number of segments generated on each DN depends on the data volume stored on a DN, not on the number of DNs in the cluster. Data stored in hash mode may not be evenly distributed on each DN.

For example, a cluster has DataNode1, DataNode2, DataNode3, DataNode4, DataNode5, and DataNode6, which store 1.5 GB, 0.7 GB, 0.6 GB, 0.8 GB, 0.4 GB, and 0.5 GB data, respectively. Seven OBS segment files will be generated during data export because DataNode1 will generate two segment files, which store 1 GB and 0.5 GB data, respectively.

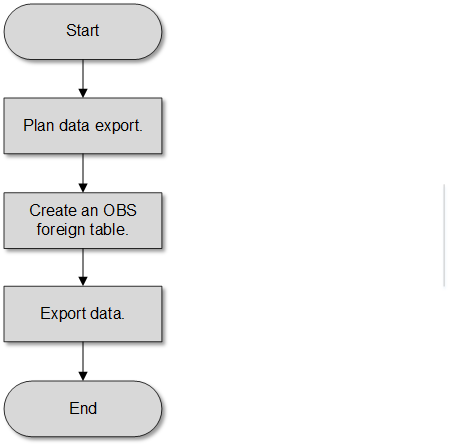

Data Export Process

|

Procedure |

Description |

Subtask |

|---|---|---|

|

Plan data export. |

Create an OBS bucket and a folder in the OBS bucket as the directory for storing exported data files. For details, see Planning Data Export. |

- |

|

Create an OBS foreign table. |

Create a foreign table to help OBS specify information about data files to be exported. The foreign table stores information, such as the destination location, format, encoding, and data delimiter of a source data file. For details, see Creating an OBS Foreign Table. |

- |

|

Export data. |

After the foreign table is created, run the INSERT statement to efficiently export data to data files. For details, see Exporting Data. |

- |

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.See the reply and handling status in My Cloud VOC.

For any further questions, feel free to contact us through the chatbot.

Chatbot