Configuring Spark to Load Third-Party JAR Packages for UDF Registration or Spark SQL Extension

Scenarios

Custom UDFs and third-party JAR packages are often used to extend Spark. To use these external libraries, you must specify their class loading paths before Spark starts.

Notes and Constraints

This section applies only to MRS 3.5.0-LTS or later.

Prerequisites

The custom JAR package has been uploaded to the client node. This section uses spark-test.jar, uploaded to the /tmp directory on the client node, as an example.

Configuring Parameters

- Install the Spark client.

For details, see Installing a Client.

- Log in to the node where the client is installed as the client installation user and load environment variables.

Navigate to the client installation directory.

cd {Client installation directory}

Configure environment variables.

source bigdata_env

If Kerberos authentication has been enabled for the cluster (in security mode), run the following command for user authentication. If Kerberos authentication is disabled for the cluster (in normal mode), user authentication is not required.

kinit {Component service user}

- Upload the JAR package to a path in HDFS, for example, hdfs://hacluster/tmp/spark/JAR.

hdfs dfs -put /tmp/spark-test.jar /tmp/spark/JAR/
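The upload step can be wrapped in a small helper that also creates the target directory and lists it afterwards. This is only a sketch: the function name `upload_jar` and the variables `JAR_LOCAL` and `JAR_HDFS_DIR` are hypothetical, and the paths are the examples used in this section.

```shell
#!/bin/sh
# Hypothetical helper: stage a local JAR in an HDFS directory.
# The defaults below are the example paths from this section.
JAR_LOCAL="${JAR_LOCAL:-/tmp/spark-test.jar}"
JAR_HDFS_DIR="${JAR_HDFS_DIR:-/tmp/spark/JAR}"

upload_jar() {
    # Create the target directory (no error if it already exists).
    hdfs dfs -mkdir -p "$JAR_HDFS_DIR" || return 1
    # Copy the JAR; -f overwrites an older copy with the same name.
    hdfs dfs -put -f "$JAR_LOCAL" "$JAR_HDFS_DIR/" || return 1
    # List the directory so the upload can be checked by eye.
    hdfs dfs -ls "$JAR_HDFS_DIR"
}
```

After loading the environment variables (and running kinit in security mode), calling `upload_jar` would run the three HDFS commands in order and stop at the first failure.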

- Modify the following parameters in the {Client installation directory}/Spark/spark/conf/spark-defaults.conf file on the Spark client.

| Parameter | Description |
| --- | --- |
| spark.jars | JAR package path, for example, hdfs://hacluster/tmp/spark/JAR/spark-test.jar |

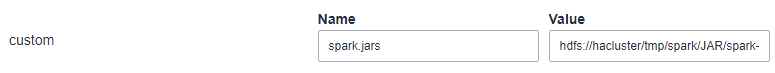
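If the client configuration file is edited from a script rather than by hand, the change might look like the sketch below. The helper `set_spark_conf` is hypothetical, it assumes GNU sed (for `sed -i`), and it assumes the value contains no `|` or `&` characters.

```shell
#!/bin/sh
# Hypothetical helper: set "key value" in a spark-defaults.conf style file.
set_spark_conf() {
    conf_file=$1; key=$2; value=$3
    if grep -q "^${key}[[:space:]=]" "$conf_file" 2>/dev/null; then
        # Key already present: rewrite that line in place (GNU sed assumed).
        sed -i "s|^${key}[[:space:]=].*|${key} ${value}|" "$conf_file"
    else
        # Make sure the file ends with a newline before appending.
        if [ -n "$(tail -c 1 "$conf_file" 2>/dev/null)" ]; then
            echo >> "$conf_file"
        fi
        printf '%s %s\n' "$key" "$value" >> "$conf_file"
    fi
}

# Example call with the values from this section:
#   set_spark_conf "{Client installation directory}/Spark/spark/conf/spark-defaults.conf" \
#       spark.jars hdfs://hacluster/tmp/spark/JAR/spark-test.jar
```

Running the helper twice with the same key leaves a single `spark.jars` line, so the edit can be repeated safely.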

- Log in to FusionInsight Manager, choose Cluster > Services > Spark, click Configurations and then All Configurations. On the displayed page, choose JDBCServer(Role) > Customization, add the following parameters in the custom area, and restart the JDBCServer service.

| Parameter | Description |
| --- | --- |
| spark.jars | JAR package path, for example, hdfs://hacluster/tmp/spark/JAR/spark-test.jar |

- Verify that the JAR package has been loaded: the execution result must not contain the error message "ClassNotFoundException".
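One way to make the verification repeatable is to save the output of a Spark command that uses a class from the JAR and search it for the error text. The helper `check_jar_loaded` and the log path are hypothetical, and the statement to run depends on what the JAR contains, so it is left as a placeholder.

```shell
#!/bin/sh
# Hypothetical helper: report whether a saved Spark log mentions
# ClassNotFoundException. Returns 1 if the error text is present.
check_jar_loaded() {
    if grep -q 'ClassNotFoundException' "$1"; then
        echo "ClassNotFoundException found in $1"
        return 1
    fi
    echo "no ClassNotFoundException in $1"
}

# Example use on the client (the statement is a placeholder):
#   spark-sql -e "<statement that uses a class from spark-test.jar>" > /tmp/udf-check.log 2>&1
#   check_jar_loaded /tmp/udf-check.log
```

The check only looks for the error string named in this section. A clean result does not prove that every class in the JAR is usable.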
