Clearing Residual Files When a Spark Job Fails

Scenarios

If a Spark job fails during execution, the temporary files it generated may not be cleaned up promptly or completely, leaving residual files on the system. Over time, these files accumulate and can consume significant disk space. Excessive disk usage can trigger disk space alarms and may disrupt the normal operation of the entire system.

To prevent this, clear residual files regularly. A scheduled task that removes these files keeps disk space usage under control and helps maintain stable, reliable system performance.

Notes and Constraints

- This section applies only to MRS 3.3.1-LTS or later.

- To use this feature, the Spark JDBCServer service must be running. JDBCServer runs as a resident process that periodically deletes the temporary files generated during job execution.

- You must also configure parameters on both the Spark client and the Spark JDBCServer.

- The following directories can be cleared:

  - /user/User/.sparkStaging/

  - /tmp/hive-scratch/user

- This feature is supported only when YARN is used as the resource scheduler.
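Before enabling the feature, it can help to check how much space residual files already occupy in the directories listed above. The following is a minimal Python sketch, not an MRS tool, assuming the `hdfs` CLI is on the PATH of a client node that already has valid credentials. The helper names `parse_du_output`, `residual_usage`, and `over_threshold` are hypothetical, and the paths you pass in must be the actual directories on your cluster.

```python
import re
import subprocess
from typing import Dict, Iterable

# Matches one line of `hdfs dfs -du -s <path>` output (run without -h):
# "<size> [<disk space consumed with replicas>] <path>".
# The second numeric column is optional because older Hadoop releases omit it.
_DU_LINE = re.compile(r"^(\d+)\s+(?:\d+\s+)?(\S.*)$")


def parse_du_output(text: str) -> Dict[str, int]:
    """Map each path in `hdfs dfs -du -s` output to its logical size in bytes."""
    usage: Dict[str, int] = {}
    for line in text.splitlines():
        match = _DU_LINE.match(line.strip())
        if match:
            usage[match.group(2)] = int(match.group(1))
    return usage


def residual_usage(paths: Iterable[str]) -> Dict[str, int]:
    """Run `hdfs dfs -du -s` on each path (hypothetical helper; needs the hdfs CLI)."""
    usage: Dict[str, int] = {}
    for path in paths:
        result = subprocess.run(
            ["hdfs", "dfs", "-du", "-s", path],
            capture_output=True, text=True, check=True,
        )
        usage.update(parse_du_output(result.stdout))
    return usage


def over_threshold(usage: Dict[str, int], limit_bytes: int) -> Dict[str, int]:
    """Return only the paths whose size exceeds limit_bytes."""
    return {path: size for path, size in usage.items() if size > limit_bytes}
```

For example, `over_threshold(residual_usage(paths), 10 * 1024**3)` reports the directories holding more than 10 GiB; the threshold is an arbitrary illustration, so choose one that matches your disk space alarm settings.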

Configuring Parameters

- Install the Spark client.

For details, see Installing a Client.

- Log in to the Spark client node as the client installation user.

Modify the following parameters in the {Client installation directory}/Spark/spark/conf/spark-defaults.conf file on the Spark client.

| Parameter | Description | Example Value |
| --- | --- | --- |
| spark.yarn.session.to.application.clean.enabled | Whether to periodically delete the residual files left behind when Spark jobs fail. true: Spark periodically deletes residual files. false: This function is disabled. This is the default value. | true |
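If the client is configured by script rather than by hand, the change above amounts to setting one key in spark-defaults.conf, where each line holds a property name followed by whitespace and its value. The following Python sketch is a hypothetical helper, `set_spark_default`, not an MRS tool: it replaces any existing uncommented entry for the key, or appends one if none exists. Back up the file before writing the result back to it.

```python
import re
from typing import List


def set_spark_default(conf_text: str, key: str, value: str) -> str:
    """Return conf_text with `key` set to `value` (hypothetical helper).

    spark-defaults.conf holds one property per line: the key, whitespace,
    then the value. Lines starting with '#' are comments and are left alone.
    Every uncommented entry for `key` is replaced in place; if there is none,
    the property is appended at the end.
    """
    # Key at line start, followed by whitespace, '=' or end of line,
    # so that e.g. "spark.a" does not match "spark.ab".
    pattern = re.compile(r"^\s*" + re.escape(key) + r"(\s|=|$)")
    new_line = f"{key} {value}"
    lines: List[str] = conf_text.splitlines()
    replaced = False
    for i, line in enumerate(lines):
        if pattern.match(line):
            lines[i] = new_line
            replaced = True
    if not replaced:
        lines.append(new_line)
    return "\n".join(lines) + "\n"
```

To use it, read {Client installation directory}/Spark/spark/conf/spark-defaults.conf into a string, call `set_spark_default(text, "spark.yarn.session.to.application.clean.enabled", "true")`, and write the returned text back to the same file.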

- Log in to FusionInsight Manager.

- Choose Cluster > Services > Spark. Click Configurations and then All Configurations, choose JDBCServer(Role) > Customization, and add the following parameters to the custom field.

| Parameter | Description | Example Value |
| --- | --- | --- |
| spark.yarn.session.to.application.clean.enabled | Whether to periodically delete the residual files left behind when Spark jobs fail. true: Spark periodically deletes residual files. false: This function is disabled. This is the default value. | true |
| spark.clean.residual.tmp.dir.init.delay | Initial delay, in minutes, before Spark first clears residual files. | 5 |
| spark.clean.residual.tmp.dir.period.delay | Interval, in minutes, between consecutive clearing operations. | 10 |
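To illustrate how the two delay parameters interact, the sketch below lists the minutes, counted from JDBCServer startup, at which clearing runs would start. It assumes, without confirmation from this document, that the parameters behave like the initial delay and period of a fixed-rate scheduler (comparable to Java's `ScheduledExecutorService.scheduleAtFixedRate`); the function name `cleanup_schedule` is hypothetical.

```python
from typing import List


def cleanup_schedule(init_delay_min: int, period_min: int, runs: int) -> List[int]:
    """Start times (minutes after JDBCServer starts) of the first `runs` clean-ups.

    Assumes fixed-rate semantics: the first run starts after init_delay_min,
    and each later run starts period_min after the previous one started.
    """
    if init_delay_min < 0 or period_min <= 0 or runs < 0:
        raise ValueError("delay must be >= 0, period > 0, runs >= 0")
    return [init_delay_min + i * period_min for i in range(runs)]


if __name__ == "__main__":
    # Example values from the table: init delay 5, period 10.
    print(cleanup_schedule(5, 10, 4))  # [5, 15, 25, 35]
```

Under this assumption, a shorter period clears residual files sooner at the cost of more frequent scans, while a longer period reduces overhead but lets residual files sit longer between runs.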

- After the parameter settings are modified, click Save, perform operations as prompted, and wait until the settings are saved successfully.

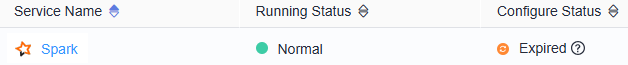

- After the Spark server configurations are updated, if Configure Status is Expired, restart the component for the configurations to take effect.

Figure 1 Modifying Spark configurations

On the Spark dashboard page, choose More > Restart Service or Service Rolling Restart, enter the administrator password, and wait until the service restarts.


Components are unavailable during the restart, which affects upper-layer services in the cluster. To minimize the impact, perform this operation during off-peak hours or after confirming that the restart will not adversely affect services.
