Configuring Indexes

An index in security analysis is a storage structure used to sort one or more columns in log data. Different index configurations generate different query and analysis results. Configure indexes based on your requirements.

Field indexes are mandatory if you want to use the analysis function. After configuring a field index, you can specify field keys and field values to narrow down the query scope. For example, the query statement level:error returns logs whose level field contains the value error.
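The key:value form can be illustrated with a minimal Python sketch. It is not SecMaster's query engine: the `matches` helper, the sample logs, and the use of plain substring matching for "contains" are all assumptions made for illustration.

```python
def matches(log: dict, query: str) -> bool:
    """Return True if the queried field of the log contains the queried value.

    Hypothetical helper: substring matching stands in for whatever
    matching the real index performs.
    """
    key, _, value = query.partition(":")
    return value in str(log.get(key, ""))


# Made-up sample logs, each represented as a dict of field keys and values.
logs = [
    {"level": "error", "msg": "disk full"},
    {"level": "info", "msg": "service started"},
]

# Keep only the logs whose level field contains "error".
hits = [log for log in logs if matches(log, "level:error")]
```

Here `hits` holds only the first log, because the second log's level field does not contain error.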

Limitations and Constraints

- Custom indexes can be configured only for new custom pipelines. For details, see Creating a Pipeline.

- Field indexes cannot be deleted.

Configuring Field Indexes

- Log in to the SecMaster console.

- Click the icon in the upper left corner of the management console and select a region or project.

- Click the icon in the upper left corner of the page and choose Security & Compliance > SecMaster.

- In the navigation pane on the left, choose Workspaces > Management. In the workspace list, click the name of the target workspace.

Figure 1 Workspace management page

- In the navigation pane on the left, choose .

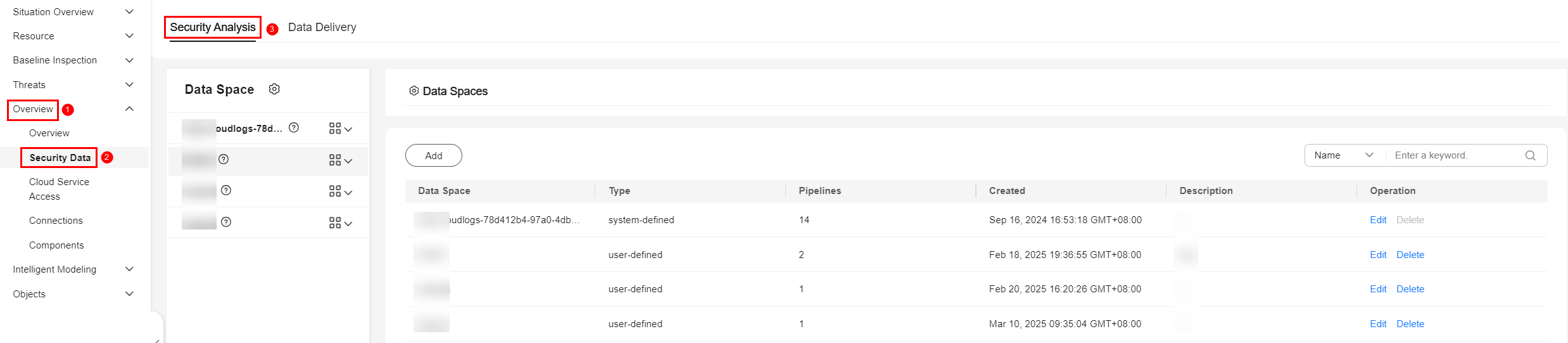

Figure 2 Accessing the Security Analysis tab

- In the data space navigation tree on the left, click a data space name to show the pipeline list. Click a pipeline name. On the displayed page, you can search the pipeline data.

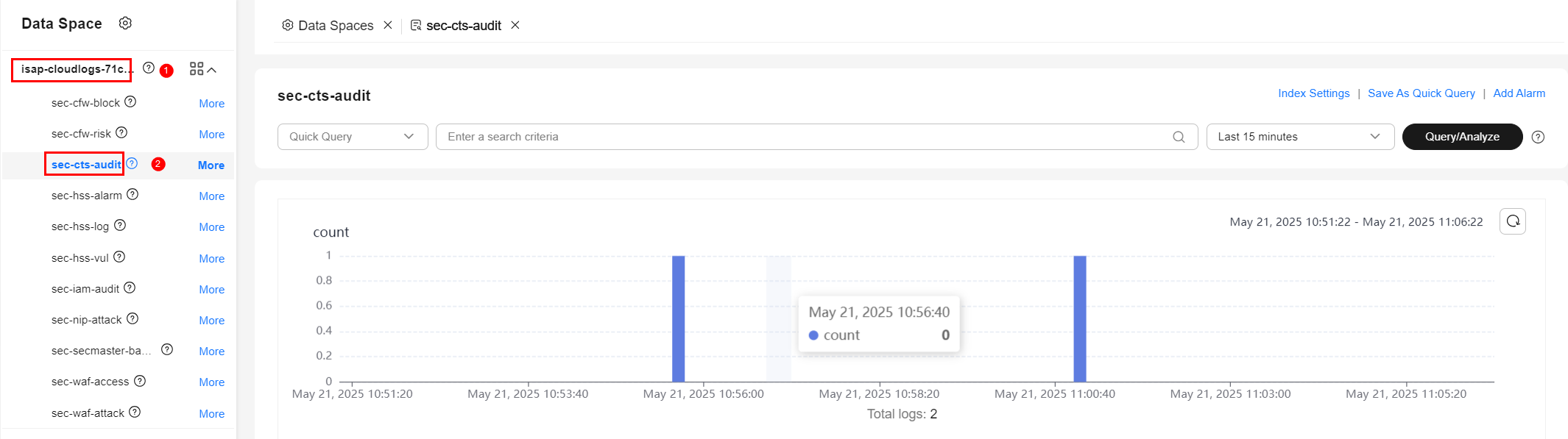

Figure 3 Pipeline data page

- On the pipeline page, click Index Settings in the upper right corner.

- On the Index Settings page, configure index parameters.

- Enable the index status.

The index status is enabled by default. When the index status is disabled, collected logs cannot be queried using indexes.

- Configure index parameters. For details about the parameters, see Table 1.

Table 1 Parameters for index settings

| Parameter | Description |
| --- | --- |
| Field | Log field (key). |
| Type | Data type of the log field value. The options are text, keyword, long, integer, double, float, date, and json. |
| Includes Chinese | Indicates whether to distinguish between Chinese and English during query. Specify this parameter when Type is set to text. |

Includes Chinese affects how log content is split into searchable terms:

- If Includes Chinese is enabled and the log contains Chinese characters, the Chinese content is split based on Chinese grammar and the English content is split based on delimiters.

- If Includes Chinese is disabled, all content is split based on delimiters.

Example: The log content is user:WAF log user Zhang San.

- If Includes Chinese is disabled, the log is split at the colon (:) into user and WAF log user Zhang San. You can search for the log by user or by WAF log user Zhang San.

- If Includes Chinese is enabled, the LTS background analyzer splits the log into user, WAF, log, user, and Zhang San. You can find the log by searching for log or Zhang San.


- Click OK.
