Complete NPU Allocation

Complete NPU allocation is a resource scheduling strategy in which NPUs are assigned exclusively to individual pods. Under this strategy, a pod occupies one or more entire NPU chips during its lifecycle. It does not share the NPU chip computing resources with other workloads. The advantages of using complete NPU allocation include:

- Stable performance: Using complete NPU allocation for a single pod eliminates performance variability caused by resource contention. This ensures consistency and reliability during model training and inference.

- Improved training efficiency: For compute-intensive tasks or large models, using complete NPU allocation minimizes context switching and reduces bandwidth interference. This enhances training efficiencies and overall throughput.

This section describes how to use complete NPU allocation for a workload.

Prerequisites

- An NPU node is available. For details, see Creating a Node.

- The CCE AI Suite (Ascend NPU) add-on has been installed. For details, see CCE AI Suite (Ascend NPU).

Creating a Workload with Complete NPU Allocation Enabled

You can create a workload with complete NPU allocation enabled using the console or kubectl.

- Log in to the CCE console and click the cluster name to access the cluster console. In the navigation pane, choose Workloads. In the upper right corner of the displayed page, click Create Workload.

- In the Container Settings area, click Basic Info, set NPU Quota to Complete NPU allocation, and select the chip type and quantity. CCE will allocate NPU resources to the container based on the settings.

- Configure other parameters by referring to Creating a Workload. After completing the settings, click Create Workload in the lower right corner. When the workload changes to the Running state, it is created.

- Use kubectl to access the cluster.

- Run the following command to create a YAML file for creating a workload with complete NPU allocation enabled:

vim npu-app.yamlThe file content is as follows:

kind: Deployment apiVersion: apps/v1 metadata: name: npu-test namespace: default spec: replicas: 1 selector: matchLabels: app: npu-test template: metadata: labels: app: npu-test spec: nodeSelector: # (Optional) After this parameter is specified, the workload pod can be scheduled to a node with the required NPU resources. accelerator/huawei-npu: ascend-310 containers: - name: container-0 image: nginx:perl env: - name: LD_LIBRARY_PATH # Configure environment variable. It is used to specify the search path of the dynamic link library (DLL) to ensure that CCE can correctly load the required DLL file when running NPU-related applications. value: "/usr/local/HiAI/driver/lib64:/usr/local/Ascend/driver/lib64/common:/usr/local/Ascend/driver/lib64/driver:/usr/local/Ascend/driver/lib64" resources: limits: cpu: 250m huawei.com/ascend-310: '1' memory: 512Mi requests: cpu: 250m huawei.com/ascend-310: '1' memory: 512Mi imagePullSecrets: - name: default-secret- nodeSelector: (Optional) specifies a node selector. After this parameter is specified, a workload pod can be scheduled to a node with the required NPU resources. If not specified, CCE automatically assigns the pod to an available NPU node.

Obtain nodes with a specified label:

kubectl get node -L accelerator/huawei-npu

Information similar to the following is displayed and the information in bold specifies the label value:

NAME STATUS ROLES AGE VERSION HUAWEI-NPU 10.100.2.59 Ready <none> 2m18s v1.19.10-r0-CCE21.11.1.B006-21.11.1.B006 ascend-310

- The types of NPU chips that are supported for containers are listed below. As shown in the YAML file, you can specify the NPU chip type and quantity using resources.limits. The number of NPU chips must be a positive integer.

- Ascend Snt3: specified by the huawei.com/ascend-310 field.

- Ascend Snt9: specified by the huawei.com/ascend-1980 field. To use this type of NPU chips, install the Volcano add-on in advance. For details, see Volcano Scheduler.

When specifying the number of NPU chips, ensure that the values of requests and limits are the same.

- nodeSelector: (Optional) specifies a node selector. After this parameter is specified, a workload pod can be scheduled to a node with the required NPU resources. If not specified, CCE automatically assigns the pod to an available NPU node.

- Create the workload.

kubectl apply -f npu-app.yamlIf information similar to the following is displayed, the workload has been created:

deployment.apps/npu-test created

- View the created pod.

kubectl get pod -n defaultInformation similar to the following is displayed:

NAME READY STATUS RESTARTS AGE npu-test-6bdb4d7cb-pmtc2 1/1 Running 0 21s

- Access the container.

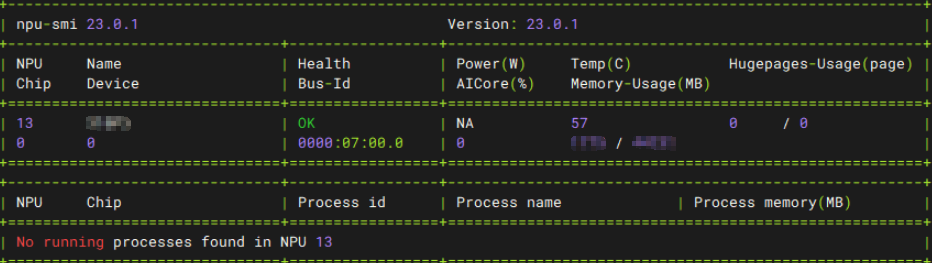

kubectl -n default exec -it npu-test-6bdb4d7cb-pmtc2 -c container-0 -- /bin/bash - Check whether the NPU has been allocated to the container.

npu-smi info

The command output shows that the NPU whose chip ID is 13 has been mounted to the container.

NPU Fault Isolation

The CCE AI Suite (Ascend NPU) add-on monitors the health status of NPUs and communicates with kubelet when a device is detected as unhealthy. kubelet removes the device from the available list, preventing the scheduling of the NPU resources. Once the device recovers, the add-on updates kubelet on its health status, making the device available for use in CCE once again.

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.