In a CCE cluster, device-plugin reports the status of hardware resources. In GPU scenarios, nvidia-gpu-device-plugin in the kube-system namespace reports the available GPU resources on each node. If the reported GPU resources are incorrect or GPU devices are not mounted correctly, check device-plugin for anomalies first.

Run the following command to check the device-plugin status:

```shell
kubectl get po -A -owide|grep nvidia
```
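If you prefer a scripted check to reading the table by eye, the following is a minimal sketch. It assumes the default `kubectl get po -A -owide` column layout, where STATUS is the fourth column (after NAMESPACE, NAME, and READY). The function name `check_device_plugin_pods` is hypothetical, and the READY comparison is an extra check that this guide does not itself describe.

```shell
#!/bin/sh
# Hypothetical helper: reads `kubectl get po -A -owide | grep nvidia` output
# on stdin and reports any pod that is not Running with all containers ready.
# Assumes the -A column layout: NAMESPACE NAME READY STATUS RESTARTS ...
check_device_plugin_pods() {
  awk '
    {
      # READY has the form "ready/total", for example "1/1"
      split($3, ready, "/")
      if ($4 != "Running" || ready[1] != ready[2]) {
        printf "NOT HEALTHY: %s/%s status=%s ready=%s\n", $1, $2, $4, $3
        bad = 1
      }
    }
    END {
      # No input lines means grep matched no device-plugin pods at all
      if (NR == 0) { print "no device-plugin pods in input"; exit 1 }
      exit (bad ? 1 : 0)
    }
  '
}
```

For example, `kubectl get po -A -owide | grep nvidia | check_device_plugin_pods` prints nothing and exits 0 when every matched pod is Running and fully ready. Only columns before RESTARTS are used, because newer kubectl versions print RESTARTS as, for example, `5 (1m ago)`, which shifts the later columns.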

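- If the device-plugin pod is in the Running state, run the following command to check its logs for errors. Replace nvidia-gpu-device-plugin-9xmhr with the pod name returned by the previous command.

```shell
kubectl logs -n kube-system nvidia-gpu-device-plugin-9xmhr
```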

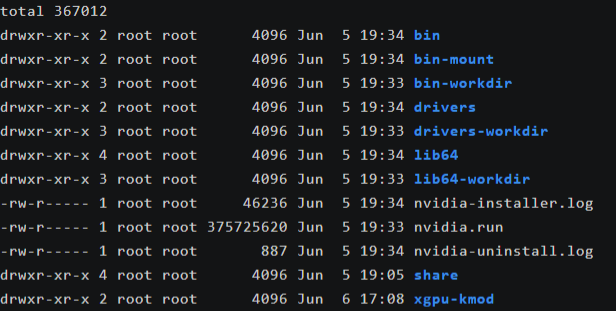

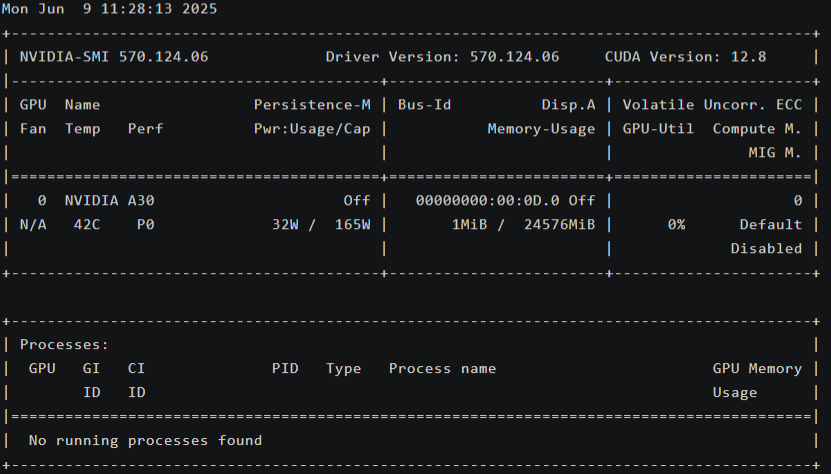

If the output contains "gpu driver wasn't ready. will re-check", device-plugin cannot find a ready GPU driver. Go to step 2 and check whether the /usr/local/nvidia/bin/nvidia-smi or /opt/cloud/cce/nvidia/bin/nvidia-smi file exists in the driver installation directory.

```
...
I0527 11:29:06.420714 3336959 nvidia_gpu.go:76] device-plugin started
I0527 11:29:06.521884 3336959 nodeinformer.go:124] "nodeInformer started"
I0527 11:29:06.521964 3336959 nvidia_gpu.go:262] "gpu driver wasn't ready. will re-check in %s" 5s="(MISSING)"
I0527 11:29:11.524882 3336959 nvidia_gpu.go:262] "gpu driver wasn't ready. will re-check in %s" 5s="(MISSING)"
...
```
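The two checks in this step, looking for the "gpu driver wasn't ready" message in the device-plugin logs and looking for nvidia-smi in the driver directories, can be scripted roughly as follows. This is a minimal sketch: the function names `driver_not_ready` and `find_nvidia_smi` and the optional root-prefix argument are hypothetical. Only the log message text and the two nvidia-smi paths come from this guide.

```shell
#!/bin/sh
# driver_not_ready (hypothetical): reads device-plugin log text on stdin and
# succeeds (exit 0) if the "gpu driver wasn't ready" message appears.
driver_not_ready() {
  grep -q "gpu driver wasn't ready"
}

# find_nvidia_smi (hypothetical): checks the two driver locations named in
# this guide. The optional first argument is an assumed root prefix (default:
# empty, i.e. the real filesystem) so the check can target another directory.
find_nvidia_smi() {
  root="${1:-}"
  for p in /usr/local/nvidia/bin/nvidia-smi /opt/cloud/cce/nvidia/bin/nvidia-smi; do
    if [ -f "${root}${p}" ]; then
      echo "found: ${root}${p}"
      return 0
    fi
  done
  echo "nvidia-smi not found in either driver directory" >&2
  return 1
}
```

For example, run `kubectl logs -n kube-system nvidia-gpu-device-plugin-9xmhr | driver_not_ready` where kubectl is available, and if it succeeds, run `find_nvidia_smi` on the GPU node itself. The paths refer to the node's filesystem, not to the machine running kubectl.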