Affinity and Anti-Affinity

A nodeSelector provides a simple way to assign pods to certain nodes, as mentioned in DaemonSets. Kubernetes also supports affinity and anti-affinity for more refined, flexible scheduling.

Affinity and anti-affinity rules can apply to both nodes and pods, and each rule can be either a hard requirement or a preference. For example, you can use these rules to place frontend and backend pods together, run similar applications on designated nodes, or spread an application across different nodes.

Node Affinity

Labels are the foundation of affinity rules in Kubernetes. In a CCE cluster, a node can have the following labels:

$ kubectl describe node 192.168.0.212
Name:               192.168.0.212
Roles:              <none>
Labels:             beta.kubernetes.io/arch=amd64
                    beta.kubernetes.io/os=linux
                    failure-domain.beta.kubernetes.io/is-baremetal=false
                    failure-domain.beta.kubernetes.io/region=cn-east-3
                    failure-domain.beta.kubernetes.io/zone=cn-east-3a
                    kubernetes.io/arch=amd64
                    kubernetes.io/availablezone=cn-east-3a
                    kubernetes.io/eniquota=12
                    kubernetes.io/hostname=192.168.0.212
                    kubernetes.io/os=linux
                    node.kubernetes.io/subnetid=fd43acad-33e7-48b2-a85a-24833f362e0e
                    os.architecture=amd64
                    os.name=EulerOS_2.0_SP5
                    os.version=3.10.0-862.14.1.5.h328.eulerosv2r7.x86_64

These labels are automatically added to a node during its creation. The following are a few that are frequently used during scheduling.

- failure-domain.beta.kubernetes.io/region: the region a node is in. In the preceding output, the label value is cn-east-3, which indicates that the node is in the CN East-Shanghai1 region.

- failure-domain.beta.kubernetes.io/zone: the AZ a node is in

- kubernetes.io/hostname: the host name of a node

Custom labels are described in Labels. A large Kubernetes cluster typically has many kinds of labels.

When you deploy pods, you can use a nodeSelector, as described in DaemonSets, to constrain pods to nodes with specific labels. The following example shows how to use a nodeSelector to deploy pods only on the nodes with the gpu=true label.

apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  nodeSelector:                 # Select nodes. A pod is deployed on a node that has the gpu=true label.
    gpu: "true"                 # Quoted because label values must be strings.
...

The same constraint can be expressed with a node affinity rule, as in the following Deployment:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: gpu
  labels:
    app: gpu
spec:
  selector:
    matchLabels:
      app: gpu
  replicas: 3
  template:
    metadata:
      labels:
        app: gpu
    spec:
      containers:
      - image: nginx:alpine
        name: gpu
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
          limits:
            cpu: 100m
            memory: 200Mi
      imagePullSecrets:
      - name: default-secret
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: gpu
                operator: In
                values:
                - "true"

Node affinity is more verbose than a nodeSelector, but it is also more expressive, as the rest of this section shows.

In the file, affinity represents affinity rules, while nodeAffinity specifically defines affinity constraints for nodes. requiredDuringSchedulingIgnoredDuringExecution can be broken down into two parts.

- requiredDuringScheduling specifies that pods can only be scheduled onto the node when all the defined rules are met (required).

- IgnoredDuringExecution specifies that pods already running on the node do not need to meet the defined rules. If a label is removed from the node, the pods that require the node to contain that label will not be re-scheduled.

In addition, the value of operator is set to In. This means that the label value must be in the values list. Other available operator values are as follows:

- NotIn: The label value is not in a list.

- Exists: A specific label exists.

- DoesNotExist: A specific label does not exist.

- Gt: The label value is greater than the specified value. Both values are parsed as integers.

- Lt: The label value is less than the specified value. Both values are parsed as integers.

There is no separate node anti-affinity rule because the NotIn and DoesNotExist operators provide the same function.
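
The operator semantics listed above can be modeled in a few lines of Python. This is only an illustrative sketch, not the scheduler's implementation: the function name matches and the cpu-cores label are hypothetical, and the sketch assumes that a node without the label satisfies NotIn and that Gt and Lt compare integers.

```python
def matches(expr, node_labels):
    """Return True if one matchExpressions entry is satisfied by a node's labels."""
    key, op = expr["key"], expr["operator"]
    values = expr.get("values", [])
    present = key in node_labels
    if op == "In":
        return present and node_labels[key] in values
    if op == "NotIn":
        # Assumption: a node that lacks the label also satisfies NotIn.
        return not present or node_labels[key] not in values
    if op == "Exists":
        return present
    if op == "DoesNotExist":
        return not present
    if op in ("Gt", "Lt"):
        # Both sides are parsed as integers; a missing or non-numeric label never matches.
        if not present:
            return False
        try:
            label, bound = int(node_labels[key]), int(values[0])
        except (ValueError, IndexError):
            return False
        return label > bound if op == "Gt" else label < bound
    raise ValueError(f"unknown operator: {op}")


# Labels taken from the example node, plus a hypothetical numeric label.
node = {"gpu": "true", "kubernetes.io/hostname": "192.168.0.212", "cpu-cores": "16"}

print(matches({"key": "gpu", "operator": "In", "values": ["true"]}, node))
print(matches({"key": "gpu", "operator": "NotIn", "values": ["true"]}, node))
print(matches({"key": "cpu-cores", "operator": "Gt", "values": ["4"]}, node))
```

The last call shows why integer parsing matters: compared as strings, "16" sorts before "4", but compared as integers 16 is greater than 4.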

Now, verify that the node affinity rule works. Add the gpu=true label to the 192.168.0.212 node.

$ kubectl label node 192.168.0.212 gpu=true
node/192.168.0.212 labeled
$ kubectl get node -L gpu
NAME            STATUS   ROLES    AGE   VERSION                            GPU
192.168.0.212   Ready    <none>   13m   v1.15.6-r1-20.3.0.2.B001-15.30.2   true
192.168.0.94    Ready    <none>   13m   v1.15.6-r1-20.3.0.2.B001-15.30.2
192.168.0.97    Ready    <none>   13m   v1.15.6-r1-20.3.0.2.B001-15.30.2

Create a Deployment. In this example, all the Deployment pods run on the 192.168.0.212 node.

$ kubectl create -f affinity.yaml
deployment.apps/gpu created
$ kubectl get pod -o wide
NAME                   READY   STATUS    RESTARTS   AGE   IP            NODE
gpu-6df65c44cf-42xw4   1/1     Running   0          15s   172.16.0.37   192.168.0.212
gpu-6df65c44cf-jzjvs   1/1     Running   0          15s   172.16.0.36   192.168.0.212
gpu-6df65c44cf-zv5cl   1/1     Running   0          15s   172.16.0.38   192.168.0.212

Node Preference Rule

requiredDuringSchedulingIgnoredDuringExecution is a hard selection rule. There is also a preferred selection rule preferredDuringSchedulingIgnoredDuringExecution, which is used to specify which nodes are preferred during scheduling.

To demonstrate the effect, add a node in a different AZ from other nodes to the cluster and check the AZ of the node. In the following output, the newly added node is in cn-east-3c.

$ kubectl get node -L failure-domain.beta.kubernetes.io/zone,gpu
NAME            STATUS   ROLES    AGE     VERSION                            ZONE         GPU
192.168.0.100   Ready    <none>   7h23m   v1.15.6-r1-20.3.0.2.B001-15.30.2   cn-east-3c
192.168.0.212   Ready    <none>   8h      v1.15.6-r1-20.3.0.2.B001-15.30.2   cn-east-3a   true
192.168.0.94    Ready    <none>   8h      v1.15.6-r1-20.3.0.2.B001-15.30.2   cn-east-3a
192.168.0.97    Ready    <none>   8h      v1.15.6-r1-20.3.0.2.B001-15.30.2   cn-east-3a

Define a Deployment. Use preferredDuringSchedulingIgnoredDuringExecution to set the weight of nodes in cn-east-3a to 80 and nodes with the gpu=true label to 20. In this way, pods are preferentially deployed onto the nodes in cn-east-3a.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: gpu
  labels:
    app: gpu
spec:
  selector:
    matchLabels:
      app: gpu
  replicas: 10
  template:
    metadata:
      labels:
        app: gpu
    spec:
      containers:
      - image: nginx:alpine
        name: gpu
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
          limits:
            cpu: 100m
            memory: 200Mi
      imagePullSecrets:
      - name: default-secret
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 80
            preference:
              matchExpressions:
              - key: failure-domain.beta.kubernetes.io/zone
                operator: In
                values:
                - cn-east-3a
          - weight: 20
            preference:
              matchExpressions:
              - key: gpu
                operator: In
                values:
                - "true"

After the Deployment is created, you can see that five pods are deployed on the 192.168.0.212 node, three pods on the 192.168.0.97 node, and two pods on the 192.168.0.100 node.

$ kubectl create -f affinity2.yaml
deployment.apps/gpu created
$ kubectl get po -o wide
NAME                   READY   STATUS    RESTARTS   AGE     IP            NODE
gpu-585455d466-5bmcz   1/1     Running   0          2m29s   172.16.0.44   192.168.0.212
gpu-585455d466-cg2l6   1/1     Running   0          2m29s   172.16.0.63   192.168.0.97
gpu-585455d466-f2bt2   1/1     Running   0          2m29s   172.16.0.79   192.168.0.100
gpu-585455d466-hdb5n   1/1     Running   0          2m29s   172.16.0.42   192.168.0.212
gpu-585455d466-hkgvz   1/1     Running   0          2m29s   172.16.0.43   192.168.0.212
gpu-585455d466-mngvn   1/1     Running   0          2m29s   172.16.0.48   192.168.0.97
gpu-585455d466-s26qs   1/1     Running   0          2m29s   172.16.0.62   192.168.0.97
gpu-585455d466-sxtzm   1/1     Running   0          2m29s   172.16.0.45   192.168.0.212
gpu-585455d466-t56cm   1/1     Running   0          2m29s   172.16.0.64   192.168.0.100
gpu-585455d466-t5w5x   1/1     Running   0          2m29s   172.16.0.41   192.168.0.212

In this example, the node that is in cn-east-3a and also has the gpu=true label has the highest priority (combined weight 100), followed by nodes that are only in cn-east-3a (weight 80), and then nodes that only have the gpu=true label (weight 20). Nodes with neither label have the lowest priority.

The preceding output also shows that no pods of the Deployment are scheduled to node 192.168.0.94, even though it is in cn-east-3a. This is because the node already runs many pods and its resource usage is high. Conversely, node 192.168.0.100 matches neither preference but still receives two pods. Both results show that preferredDuringSchedulingIgnoredDuringExecution defines a preference rather than a hard rule.
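
The priority ordering described above can be reproduced with a small Python sketch that sums the weights of the preferences each node satisfies. The function name preference_score and the simplified data layout are assumptions made for illustration. The real scheduler combines this affinity score with other factors, such as resource usage, which is why the actual pod distribution does not follow the weights exactly.

```python
ZONE = "failure-domain.beta.kubernetes.io/zone"


def preference_score(node_labels, preferences):
    """Sum the weights of every preference whose label conditions all match the node."""
    total = 0
    for weight, required in preferences:
        if all(node_labels.get(key) in allowed for key, allowed in required.items()):
            total += weight
    return total


# The two preferences from the Deployment above: (weight, {label key: allowed values}).
preferences = [
    (80, {ZONE: ["cn-east-3a"]}),
    (20, {"gpu": ["true"]}),
]

# Node labels as shown in the kubectl get node output.
nodes = {
    "192.168.0.100": {ZONE: "cn-east-3c"},
    "192.168.0.212": {ZONE: "cn-east-3a", "gpu": "true"},
    "192.168.0.94": {ZONE: "cn-east-3a"},
    "192.168.0.97": {ZONE: "cn-east-3a"},
}

scores = {name: preference_score(labels, preferences) for name, labels in nodes.items()}
for name, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(name, score)
```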

Workload Affinity

Node affinity affects only the affinity between pods and nodes. Kubernetes also supports inter-pod affinity, which allows you to, for example, deploy the frontend and backend of an application on the same node to reduce access latency. Like node affinity, inter-pod affinity supports two types of rules: requiredDuringSchedulingIgnoredDuringExecution and preferredDuringSchedulingIgnoredDuringExecution.

For workload affinity, the topologyKey field cannot be left blank when requiredDuringSchedulingIgnoredDuringExecution and preferredDuringSchedulingIgnoredDuringExecution are used.

Assume that the backend pod of an application has been created and has the app=backend label.

$ kubectl get po -o wide
NAME                       READY   STATUS    RESTARTS   AGE     IP            NODE
backend-658f6cb858-dlrz8   1/1     Running   0          2m36s   172.16.0.67   192.168.0.100

Configure the following pod affinity rule to deploy the frontend pods of the application to the same node as its backend pod:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
  labels:
    app: frontend
spec:
  selector:
    matchLabels:
      app: frontend
  replicas: 3
  template:
    metadata:
      labels:
        app: frontend
    spec:
      containers:
      - image: nginx:alpine
        name: frontend
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
          limits:
            cpu: 100m
            memory: 200Mi
      imagePullSecrets:
      - name: default-secret
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - topologyKey: kubernetes.io/hostname
            labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - backend

Create the frontend pods and check their nodes. You will find that the frontend pods have been deployed on the same node as the backend pod.

$ kubectl create -f affinity3.yaml
deployment.apps/frontend created
$ kubectl get po -o wide
NAME                        READY   STATUS    RESTARTS   AGE     IP            NODE
backend-658f6cb858-dlrz8    1/1     Running   0          5m38s   172.16.0.67   192.168.0.100
frontend-67ff9b7b97-dsqzn   1/1     Running   0          6s      172.16.0.70   192.168.0.100
frontend-67ff9b7b97-hxm5t   1/1     Running   0          6s      172.16.0.71   192.168.0.100
frontend-67ff9b7b97-z8pdb   1/1     Running   0          6s      172.16.0.72   192.168.0.100

The scheduler first evaluates the topologyKey setting, which defines topology domains and determines the range of nodes a pod can be placed on. Nodes that have the specified label key with identical values are considered to be in the same topology domain. The scheduler then applies the other defined rules to finalize pod placement. In the previous example, every node has a different value for the kubernetes.io/hostname label, so each node forms its own topology domain. The domain of the backend pod is therefore a single node, and the impact of topologyKey cannot be clearly observed.

To see how topologyKey works, assume that there are two backend pods of the application and they run on different nodes.

$ kubectl get po -o wide
NAME                       READY   STATUS    RESTARTS   AGE     IP            NODE
backend-658f6cb858-5bpd6   1/1     Running   0          23m     172.16.0.40   192.168.0.97
backend-658f6cb858-dlrz8   1/1     Running   0          2m36s   172.16.0.67   192.168.0.100

Add the prefer=true label to nodes 192.168.0.97 and 192.168.0.94.

$ kubectl label node 192.168.0.97 prefer=true
node/192.168.0.97 labeled
$ kubectl label node 192.168.0.94 prefer=true
node/192.168.0.94 labeled
$ kubectl get node -L prefer
NAME            STATUS   ROLES    AGE   VERSION                            PREFER
192.168.0.100   Ready    <none>   44m   v1.15.6-r1-20.3.0.2.B001-15.30.2
192.168.0.212   Ready    <none>   91m   v1.15.6-r1-20.3.0.2.B001-15.30.2
192.168.0.94    Ready    <none>   91m   v1.15.6-r1-20.3.0.2.B001-15.30.2   true
192.168.0.97    Ready    <none>   91m   v1.15.6-r1-20.3.0.2.B001-15.30.2   true

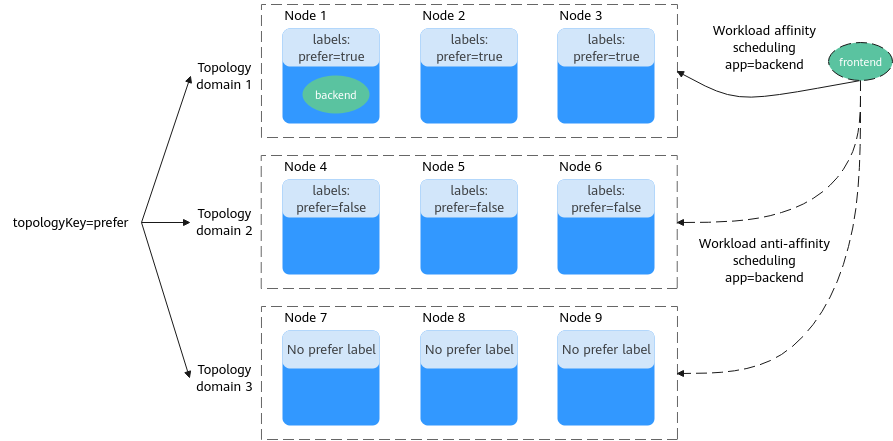

If topologyKey in podAffinity is set to prefer, the node topology domains are divided as shown in Figure 2.

      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - topologyKey: prefer
            labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - backend

During scheduling, node topology domains are determined based on the prefer label. In this example, the 192.168.0.97 and 192.168.0.94 nodes belong to the same topology domain. If a pod labeled app=backend runs within this topology domain, the frontend is scheduled to the same domain, even if not all nodes within it contain a pod with the app=backend label. In this example, only the 192.168.0.97 node hosts a pod labeled app=backend, but the scheduling rules ensure that the frontend pods are still deployed within the domain, meaning they can be placed on either the 192.168.0.97 or 192.168.0.94 node.

$ kubectl create -f affinity3.yaml
deployment.apps/frontend created
$ kubectl get po -o wide
NAME                        READY   STATUS    RESTARTS   AGE     IP            NODE
backend-658f6cb858-5bpd6    1/1     Running   0          26m     172.16.0.40   192.168.0.97
backend-658f6cb858-dlrz8    1/1     Running   0          5m38s   172.16.0.67   192.168.0.100
frontend-67ff9b7b97-dsqzn   1/1     Running   0          6s      172.16.0.70   192.168.0.97
frontend-67ff9b7b97-hxm5t   1/1     Running   0          6s      172.16.0.71   192.168.0.97
frontend-67ff9b7b97-z8pdb   1/1     Running   0          6s      172.16.0.72   192.168.0.94
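
The way topologyKey groups nodes into domains can be sketched in Python. This is a simplified model under stated assumptions, not the scheduler's code: the helper names topology_domains and affinity_candidates are hypothetical, pods are reduced to label strings, and nodes without the topology label are treated as belonging to no domain.

```python
from collections import defaultdict


def topology_domains(nodes, topology_key):
    """Group node names by their value for topology_key; unlabeled nodes join no domain."""
    domains = defaultdict(set)
    for name, labels in nodes.items():
        if topology_key in labels:
            domains[labels[topology_key]].add(name)
    return dict(domains)


def affinity_candidates(nodes, pods_on_node, topology_key, wanted_label):
    """Return every node in a domain that already hosts a pod carrying wanted_label."""
    candidates = set()
    for members in topology_domains(nodes, topology_key).values():
        if any(wanted_label in pods_on_node.get(node, []) for node in members):
            candidates |= members
    return candidates


# Node labels and backend pod placement from the example above.
nodes = {
    "192.168.0.100": {"kubernetes.io/hostname": "192.168.0.100"},
    "192.168.0.212": {"kubernetes.io/hostname": "192.168.0.212"},
    "192.168.0.94": {"kubernetes.io/hostname": "192.168.0.94", "prefer": "true"},
    "192.168.0.97": {"kubernetes.io/hostname": "192.168.0.97", "prefer": "true"},
}
pods_on_node = {
    "192.168.0.97": ["app=backend"],
    "192.168.0.100": ["app=backend"],
}

print(sorted(affinity_candidates(nodes, pods_on_node, "prefer", "app=backend")))
print(sorted(affinity_candidates(nodes, pods_on_node, "kubernetes.io/hostname", "app=backend")))
```

With prefer as the key, the candidates are 192.168.0.94 and 192.168.0.97, which matches where the frontend pods landed. With kubernetes.io/hostname as the key, each node is its own domain, so only the two nodes that already run a backend pod qualify.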

Workload Anti-Affinity

In some cases, instead of grouping pods on the same node with affinity rules, it is preferable to spread them across different nodes, for example to keep replicas from competing for the same node's resources.

For workload anti-affinity, when requiredDuringSchedulingIgnoredDuringExecution is used, the LimitPodHardAntiAffinityTopology admission controller restricts topologyKey to kubernetes.io/hostname wherever that controller is enabled. To use a custom topology key in such a cluster, the admission controller must be modified or disabled.

The following example defines an anti-affinity rule. The rule divides nodes into topology domains by the kubernetes.io/hostname label. If a pod with the app=frontend label already runs on a node in a topology domain, no other pod with that label can be scheduled to any node in that domain.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
  labels:
    app: frontend
spec:
  selector:
    matchLabels:
      app: frontend
  replicas: 5
  template:
    metadata:
      labels:
        app: frontend
    spec:
      containers:
      - image: nginx:alpine
        name: frontend
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
          limits:
            cpu: 100m
            memory: 200Mi
      imagePullSecrets:
      - name: default-secret
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - topologyKey: kubernetes.io/hostname   # Topology domain of a node
            labelSelector:                        # Pod label matching rule
              matchExpressions:
              - key: app
                operator: In
                values:
                - frontend

Create the Deployment and view the result. In this example, topology domains are divided by the kubernetes.io/hostname label. Each node has a different value for this label, so every topology domain contains exactly one node. Once a frontend pod runs on a node, no other pod with the same label can be scheduled to that node. The cluster has only four nodes, so four of the five replicas are scheduled, one per node, and the fifth remains in the Pending state.

$ kubectl create -f affinity4.yaml
deployment.apps/frontend created
$ kubectl get po -o wide
NAME                        READY   STATUS    RESTARTS   AGE   IP            NODE
frontend-6f686d8d87-8dlsc   1/1     Running   0          18s   172.16.0.76   192.168.0.100
frontend-6f686d8d87-d6l8p   0/1     Pending   0          18s   <none>        <none>
frontend-6f686d8d87-hgcq2   1/1     Running   0          18s   172.16.0.54   192.168.0.97
frontend-6f686d8d87-q7cfq   1/1     Running   0          18s   172.16.0.47   192.168.0.212
frontend-6f686d8d87-xl8hx   1/1     Running   0          18s   172.16.0.23   192.168.0.94
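
The Pending pod follows directly from counting topology domains, as the following Python sketch illustrates. It is a deliberately naive greedy model, and the function name place_with_anti_affinity is hypothetical. The real scheduler also scores nodes and may choose them in a different order, but the number of schedulable replicas is the same.

```python
def place_with_anti_affinity(replicas, nodes, topology_key):
    """Greedily place replicas so that no two of them share a topology domain."""
    used_domains = set()
    placements = []
    for _ in range(replicas):
        target = None
        for name, labels in nodes.items():
            domain = labels.get(topology_key)
            if domain is not None and domain not in used_domains:
                target = name
                used_domains.add(domain)
                break
        placements.append(target)  # None models a pod left in the Pending state
    return placements


# The four nodes of the example cluster, each with a unique hostname label.
nodes = {
    ip: {"kubernetes.io/hostname": ip}
    for ip in ("192.168.0.100", "192.168.0.212", "192.168.0.94", "192.168.0.97")
}

placements = place_with_anti_affinity(5, nodes, "kubernetes.io/hostname")
print(placements)
print("pending:", placements.count(None))
```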
