Developing a Hive SQL Job

This section introduces how to develop Hive SQL scripts on DataArts Factory.

Scenario Description

As a one-stop big data development platform, DataArts Factory supports development of multiple big data tools. Hive is a data warehouse tool running on Hadoop. It can map structured data files to a database table and provides a simple SQL search function that converts SQL statements into MapReduce tasks.

Preparations

- MRS has been enabled and an MRS cluster has been created for running Hive SQL jobs.

- Cloud Data Migration (CDM) has been enabled. A CDM cluster has been created for providing an agent for communication between DataArts Factory and MRS.

Ensure that the VPC, subnet, and security group of the CDM cluster are the same as those of the MRS cluster so that the two clusters can communicate with each other.

Creating a Hive Data Connection

Before developing a Hive SQL script, you must create a data connection to MRS Hive on the Manage Data Connections page of Management Center. The data connection name is hive1009. For how to create an MRS Hive connection, see MRS Hive Connection Parameters.

Description of key parameters:

- Cluster Name: Enter the name of the created MRS cluster.

- Agent: Select the created CDM cluster.

Developing a Hive SQL Script

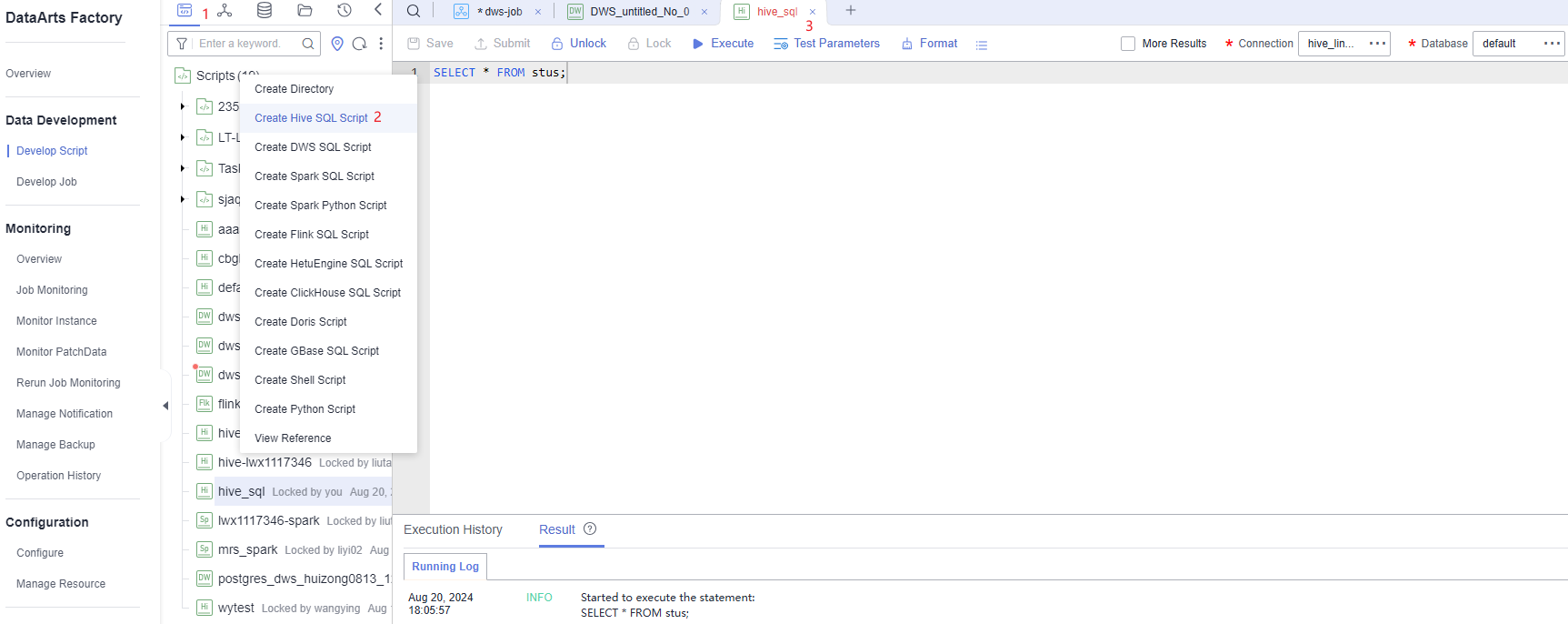

Choose Development > Develop Script and create a Hive SQL script named hive_sql. Then enter SQL statements in the editor to fulfill business requirements.

Notes:

- The script development area in Figure 1 is a temporary debugging area. After you close the tab page, the development area will be cleared. You can click Submit to save and submit a script version.

- Data Connection: Connection created in Creating a Hive Data Connection.

Developing a Hive SQL Job

After the Hive SQL script is developed, build a periodically deducted job for the Hive SQL script so that the script can be executed periodically.

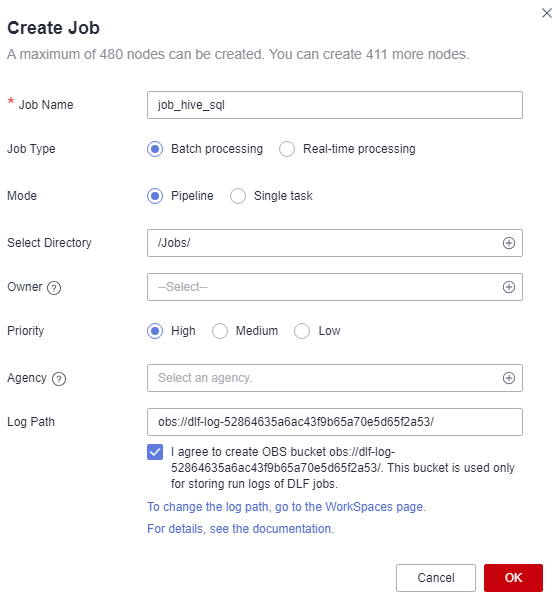

- Create an empty DataArts Factory job named job_hive_sql.

Figure 2 Creating a job named job_hive_sql

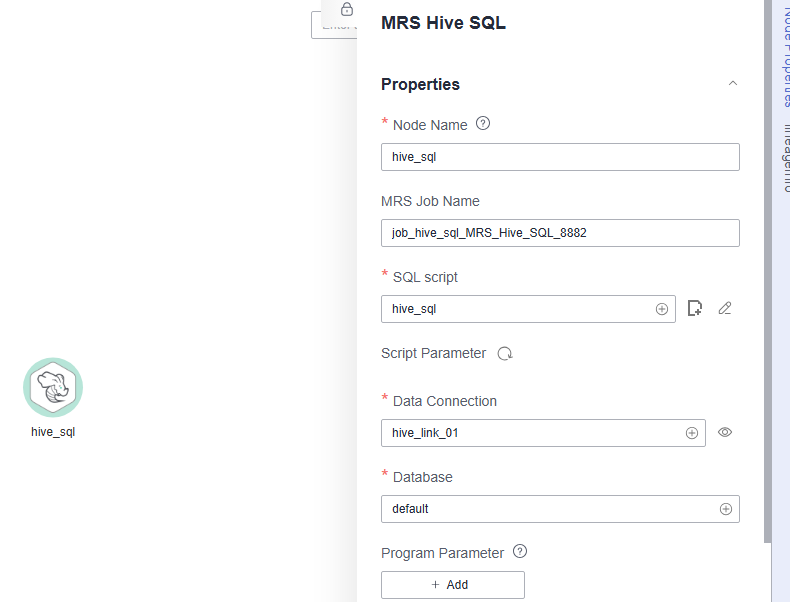

- Go to the job development page, drag the MRS Hive SQL node to the canvas, and click the node to configure node properties.

Figure 3 Configuring properties for an MRS Hive SQL node

Description of key properties:

- Node Name: Name of the SQL script hive_sql by default. The value can be changed.

- SQL Script: Hive SQL script hive_sql that is developed in Developing a Hive SQL Script.

- Data Connection: Data connection that is configured in the SQL script hive_sql is selected by default. The value can be changed.

- Database: Database that is configured in the SQL script hive_sql and is selected by default. The value can be changed.

- After configuring the job, click

to test it.

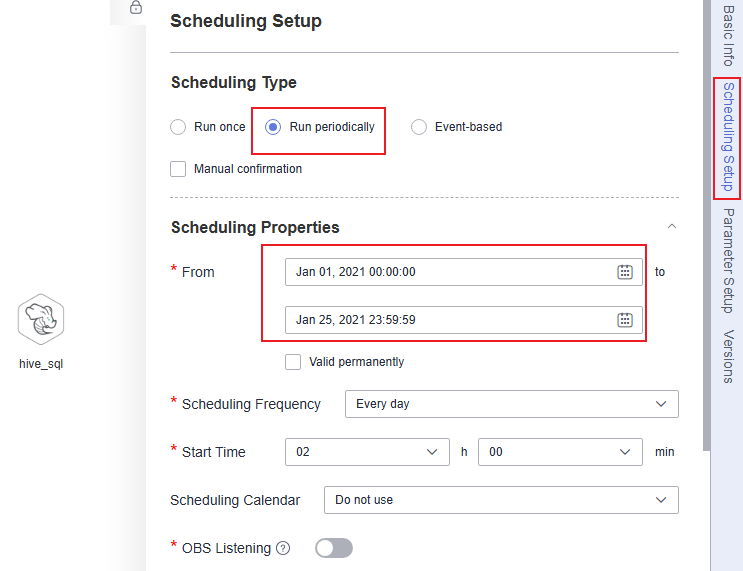

to test it. - If the job runs successfully, click the blank area on the canvas and configure the job scheduling policy on the scheduling configuration page on the right.

Figure 4 Configuring the scheduling mode

The job is executed at 02:00 every day from Jan 1, 2021 to Jan 25, 2021.

- Click Submit and Execute. The job will be automatically executed every day.

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.