Updated: 2024-07-19 GMT+08:00

The analyze table Statement Hangs Because of Insufficient Resources

Question

When the analyze table statement is run in spark-sql, the task hangs indefinitely and the following information is printed:

spark-sql> analyze table hivetable2 compute statistics;

Query ID = root_20160716174218_90f55869-000a-40b4-a908-533f63866fed

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

16/07/20 17:40:56 WARN JobResourceUploader: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

Starting Job = job_1468982600676_0002, Tracking URL = http://10-120-175-107:8088/proxy/application_1468982600676_0002/

Kill Command = /opt/client/HDFS/hadoop/bin/hadoop job -kill job_1468982600676_0002

Answer

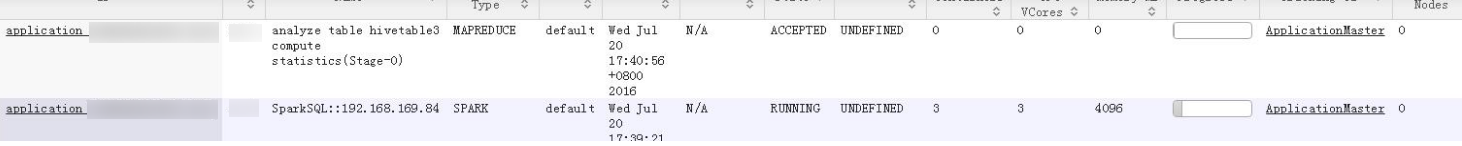

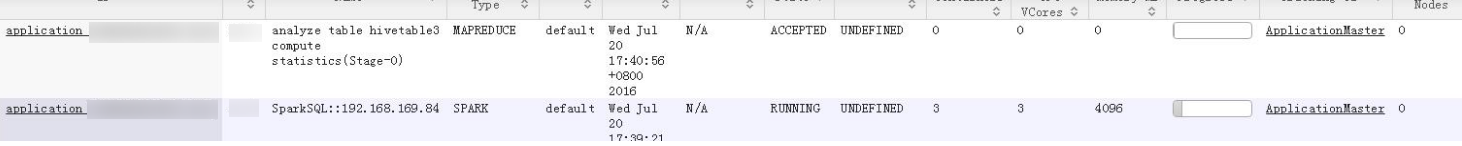

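The statement analyze table hivetable2 compute statistics launches a MapReduce job. The YARN ResourceManager Web UI shows that this job was never executed because of insufficient resources, which is why the task appears to hang.

Figure 1 ResourceManager Web UI page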
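The same diagnosis can be made without the Web UI by querying the ResourceManager REST API. The following is a minimal sketch, not part of the original guide. It assumes the ResourceManager is reachable over plain HTTP at the address shown in the Tracking URL above; a security-enabled cluster may require HTTPS and authentication, which this sketch does not handle. The helper names waiting_apps, fetch_json, and report are hypothetical.

```python
import json
from urllib.request import urlopen

# Assumption: ResourceManager address copied from the Tracking URL in the log above.
RM_URL = "http://10-120-175-107:8088"


def waiting_apps(payload):
    """Return (id, name, queue) for each application still in the ACCEPTED state.

    ACCEPTED means YARN has admitted the application but has not yet
    allocated a container for it, which is how a resource shortage shows up.
    """
    # The ResourceManager returns {"apps": null} when no application matches.
    apps = (payload.get("apps") or {}).get("app", [])
    return [
        (app["id"], app.get("name", ""), app.get("queue", ""))
        for app in apps
        if app.get("state") == "ACCEPTED"
    ]


def fetch_json(path, base_url=RM_URL):
    """GET a ResourceManager REST path and decode the JSON body."""
    with urlopen(base_url + path, timeout=10) as resp:
        return json.load(resp)


def report(base_url=RM_URL):
    """Print free cluster resources and the applications waiting for them."""
    metrics = fetch_json("/ws/v1/cluster/metrics", base_url)["clusterMetrics"]
    print("available memory (MB):", metrics["availableMB"])
    print("available vcores:", metrics["availableVirtualCores"])
    apps = fetch_json("/ws/v1/cluster/apps?states=ACCEPTED", base_url)
    for app_id, name, queue in waiting_apps(apps):
        print("waiting for resources:", app_id, name, queue)
```

Calling report() on a node that can reach the ResourceManager prints the free memory and vcores, followed by one line per application that is still waiting for resources. If the job started by analyze table appears in that list, the hang is caused by a resource shortage.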

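We recommend adding noscan to the analyze table statement. It is used in the same way as analyze table hivetable2 compute statistics, but it collects only the statistics that can be obtained without scanning the data, such as the table size. The command is as follows: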

spark-sql> analyze table hivetable2 compute statistics noscan;

This command does not launch a MapReduce job and does not occupy YARN resources, so it can run to completion.
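When the statement is issued from a script rather than from the interactive prompt, it can be passed to spark-sql with the -e option. The sketch below is illustrative only. It assumes the client environment has already been loaded so that spark-sql is on the PATH. The helper names and the 300-second timeout are hypothetical choices, not values from this guide.

```python
import re
import subprocess

# Accepts "table" or "database.table" made of letters, digits and underscores.
_TABLE_NAME = re.compile(r"[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)?")


def noscan_statement(table):
    """Build the noscan variant of the analyze table statement."""
    if not _TABLE_NAME.fullmatch(table):
        raise ValueError(f"unexpected table name: {table!r}")
    return f"analyze table {table} compute statistics noscan"


def run_noscan(table, timeout_s=300):
    """Run the statement through the spark-sql CLI.

    Assumption: spark-sql is on the PATH. The timeout is a hypothetical
    safeguard so that a hung statement raises instead of blocking forever.
    """
    cmd = ["spark-sql", "-e", noscan_statement(table)]
    return subprocess.run(cmd, check=True, timeout=timeout_s)
```

For example, run_noscan("hivetable2") executes the statement shown above and raises subprocess.TimeoutExpired if it has not finished within the timeout.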
